by Karl-Filip Faxén

Parallel programming is no longer optional. As improvements in processor clock frequencies have levelled out, the only way to increase performance is to place several processor cores on each chip. In contrast to increases in clock frequency, multiple cores do not automatically help existing programs, a problem addressed by a new multicore initiative from SICS.

While offering improved performance, multicore processors pose new demands on software; existing applications will in general not see any improvements at all. This is because each core on a multicore chip is no more powerful than a traditional processor; the performance improvements come from using more than one core at the same time. For this to happen, each core must have its own program. If one wishes to run a single application faster, then that application must be divided into sub-programs, or threads, that cooperate to deliver the desired functionality.

Traditionally, most programs consist of only one thread. This is no accident; it is easier to think about a program that does one thing at a time than at a program that does several things at a time. So not only do we need to rewrite a lot of code; we need to make it parallel, which is currently very difficult. Thus we need better ways both to write new parallel programs and to make our legacy code threaded.

The main obstacle in this partitioning process is dependencies. If one part B of the program has the output of another part A as input, then A must run before B. Other dependencies arise if two parts of the program use the same memory area for temporary storage: this means they cannot run in parallel. Other kinds of dependencies arise through I/O and when the execution result of one part of a program determines whether another part should be executed at all (think of conditionals).

SICS, as an industrial research institute, is starting a proactive program for cooperation with software-intensive Swedish industry in this area. Our work aims to help developers find the potential for parallelism in programs, in particular by providing efficient tool support. We are currently working on Embla, a dynamic dependence analyser capable of finding opportunities for manual parallelization of sequential applications.

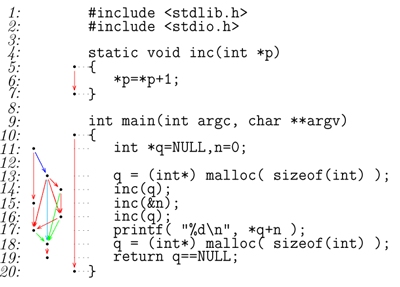

We are interested in the following methodology for constructing parallel programs. Start from a sequential program (old or new), identify independent parts of that program using Embla, and rewrite the program to obtain parallel execution of the independent parts. The figure shows the output of Embla for a trivial program with dependencies shown as arrows. Note that while a dependence exists between line 14 and line 16 since the two calls increment the same variable, both of these lines are independent of line 15.

Embla observes the actual data dependencies that occur during program execution, decides which program parts are affected by the dependence and presents the results. Since Embla observes particular runs with particular inputs, it might happen that when the program is run with other inputs, other dependencies occur. The user of Embla is responsible for selecting program inputs that generate representative program executions with good coverage.

If all dependencies are found, and the parallelizing transformations made do not violate these, the parallel program is guaranteed to behave like the sequential version. Conversely, if the parallel program behaves differently, the reason must be that a dependence was missed. The sequential program can then be run under Embla again with the offending input, revealing the missed dependence. Thus, we need never debug the parallel program itself; all debugging is made in the sequential context.

We also plan activities ranging from research on parallelization and execution environments for multicore processors to more applied projects in close cooperation with industry.

Please contact:

Karl-Filip Faxén

SICS, Sweden

Tel: +46 8 633 1610

E-mail: kff![]() sics.se

sics.se