by Moonisa Ahsan, Irene Viola and Pablo Cesar (CWI)

VOXReality is an ambitious project funded by the European Commission, focusing on voice-driven interactions by combining Artificial Intelligence (AI), Natural Language Processing (NLP) and Computer Vision (CV) technologies in the Extended Reality (XR) use cases of VR Conference, AR Theater, and AR Training. We will develop innovative AI models that use language as a core interaction medium, supported by visual understanding, to deliver next-generation XR applications that comprehend users’ goals, surrounding environment and context in these use cases.

VOXReality [L1] is a European research and development project focusing on developing voice-driven applications in XR. It aims to facilitate the convergence of NLP and CV technologies in the XR field. Our first use case is the VR Conference, which aims to support virtual assistance in navigation and real-time translation to facilitate networking and participant interactions. The second use case is the Augmented Theater, which will combine language translation, audiovisual user associations, and AR VFX triggered by predetermined speech, all driven by VOXReality’s language and vision pretrained models. The last use case is the Training Assistant, whose awareness of audiovisual spatio-temporal context allows better training simulations in AR environments.

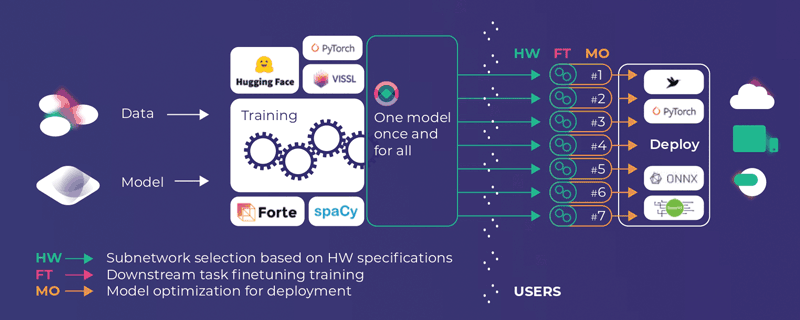

Our goal is to conduct research and develop new AI models [1] to drive future XR interactive experiences, and to deliver these models to the wider European market. These new models will address the three use cases mentioned above: human-to-human interaction in unidirectional (Augmented Theater) and bidirectional (Virtual Conference) settings, as well as human-to-machine interaction by building the next generation of personal assistants (Training Assistant). VOXReality will develop large-scale self-supervised models that will be fine-tuned to specific downstream tasks with minimal re-training.

Along with technical advancements, one of the project’s crucial contributions is understanding user needs and leveraging this knowledge to develop innovative solutions that enhance the user experience. This is where Centrum Wiskunde & Informatica (ERCIM Member CWI) makes leading contributions in human-centric design approaches and dialogue system development. Researchers from the Distributed & Interactive Systems (DIS) group at CWI share their expertise in gathering user requirements for the project and in shaping the user experience and design. They also contribute to developing large language models to support dialogue systems [2] within the project’s applications. We actively engage with users, collecting feedback and insights to ensure that our XR interactions align with their needs, preferences, and expectations, resulting in high-quality user requirements. This human-centric approach ensures that our XR interactions are not only technologically advanced but also user-friendly and intuitive.

Figure 1: Lifecycle of VOXReality AI Models. VOXReality will follow an economical approach by employing the 'one-train-many-deployments' methodology. 1) Design a model able to perform various tasks; 2) Train the model on a large, diverse dataset; 3) Extract sub-networks based on their deployment hardware target; 4) Fine-tune the extracted model; 5) Perform model optimization.

The project consortium comprises ten partners: Maggioli (Coordinator), Centrum Wiskunde & Informatica (CWI), University of Maastricht (UM), VRDays, Athens Festival (AEF), CERTH, Synelixis, HOLO, F6S and ADAPT. They come mainly from the Netherlands, Italy, Greece, Austria and Germany, and span research, academia, industry and SMEs. VOXReality started on 1st October 2022 and is expected to finish on 30th September 2025, with a total duration of three years.

VOXReality team members at the General Assembly of the project, hosted by CWI at Science Park, Amsterdam.

We are also focusing on making sustainable and resource-friendly contributions to the XR community. Therefore, VOXReality aims to further extend the use cases and the application domains by funding five partners’ projects through OpenCalls+ (OC) with a total budget of 1 million euros, where the maximum amount to be granted to each third party is €200,000. The objective is to ensure reproducibility and repeatability of our research findings, promote open data and interface standardisation, avoid narrow de-facto standards, and demonstrate clear and efficient integration paths for European industry uptake. Moreover, these OpenCalls+ (OC) will focus on building XR applications based on the VOXReality models, to demonstrate a clear integration path for the VOXReality pretrained models across the EU SME ecosystem.

In addition to our proof-of-concept R&D demonstrations and the OpenCalls, the expected results of the project include scientific publications, articles, blog posts, and community outreach efforts, all of which can be found on the official website [L1]. Through these avenues, we share our findings, insights, and technological breakthroughs with the global XR and AI communities, fostering collaboration and knowledge exchange.

Links:

[L1] https://www.voxreality.eu

References:

[1] A. Maniatis, Z. Apostolos, et al., “VOXReality: immersive XR experiences combining language and vision AI models,” Human Interaction and Emerging Technologies (IHIET-AI 2023): Artificial Intelligence and Future Applications, 70.70, 2023.

[2] W. Deng, et al., “Intent-calibrated Self-training for Answer Selection in Open-domain Dialogues,” Transactions of the Association for Computational Linguistics, vol. 11, 2023.

Please contact:

Moonisa Ahsan, CWI, The Netherlands

+393498511147