by Silvia Rossi, Irene Viola (CWI) and Pablo Cesar (CWI and TU Delft)

Thanks to recent advances in Extended Reality (XR) and Artificial Intelligence (AI) technologies, researchers in the European TRANSMIXR project are shaping the future of media experiences by advancing the state of the art in media production, delivery and consumption. In this context, we developed an open-source system for real-time volumetric interaction in social VR, collected user requirements and proposed new metrics to evaluate quality and user experience in XR environments.

Our experience with media content has changed over the past few years: once a merely passive activity, it is becoming more immersive and interactive. Traditional media content, which is typically two-dimensional and non-interactive, still plays a pivotal role in how we access information and entertainment, but it faces new challenges: our society seeks more realism, social connectivity and interactive engagement in its virtual experiences. In response, XR technologies have emerged as a new medium that lets users share immersive experiences with others, making the feeling of “being remotely together” a realistic goal. At the same time, mature AI tools have unlocked unique opportunities across several sectors. Without a doubt, the combined use of these emerging technologies will be crucial in shaping the future of media experiences. This is the vision of a new European project, TRANSMIXR, which aims to revolutionise the cultural and creative sector.

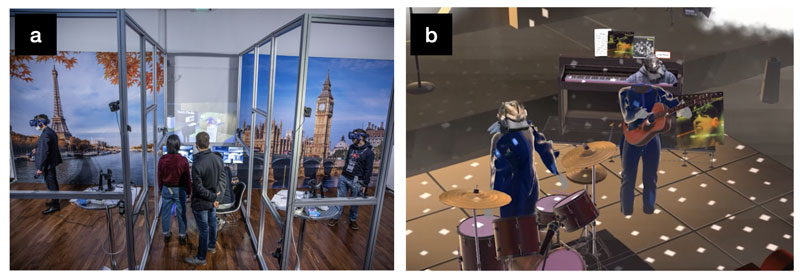

TRANSMIXR seeks to advance the entire production chain of future immersive media experiences, from creation to consumption. By developing a range of AI and XR solutions, the project aims to reshape digital co-creation, interaction and engagement experiences, focusing on three main domains of the cultural and creative sector: news media and broadcasting, performing arts, and cultural heritage. The vision is to introduce a novel XR Creation Environment that fosters cross-investigations and facilitates remote collaboration between media and content creators. In the performing arts and cultural heritage sectors, the idea is to design XR Media Experience Environments for the delivery and consumption of highly dynamic and socially immersive media experiences across multiple platforms, reaching audiences who may not have regular access to arts and culture. For example, Figure 1a shows the physical setup of two participants experiencing a museum in social VR, and Figure 1b shows their virtual experience as they interact with heritage artefacts [L2].

Figure 1: a) Physical setup of two participants (left and right) experiencing a museum in social VR with two operators (middle); b) Virtual experience of the two participants within the virtual museum.

Within the TRANSMIXR project, the Distributed and Interactive Systems group at CWI [L3] focuses on three main research directions: (i) gathering user requirements and understanding current production workflows; (ii) improving the technology that enables the creation of immersive and interactive experiences; and (iii) defining a range of metrics to evaluate quality and user experience.

Gathering User Requirements

While the production workflow for traditional media is well understood by both clients and producers, creating XR experiences poses an additional challenge: managing communication and expectations between technology enablers, designers and end consumers. We conducted a series of user-centred workshops to explore the possibilities and opportunities that social XR opens up at the various stages of the production workflow, with the aim of identifying a clear set of tools for producing next-generation immersive applications. We found pain points in each stage of XR production: pre-production, production, post-production and post-release. In particular, the workshops highlighted the need for social XR tools that improve communication at every step of the workflow, for example to show initial sketches and final prototypes to clients, making communication easier and more effective.

Improving XR Technology

To enable efficient communication tools in social XR and make immersive experiences more realistic, it is essential to guarantee immersion, presence and interactivity for all end users. Due to technological limitations, many solutions still represent users with synthetic avatars, which reduce the sense of presence compared with photorealistic representations. Our research efforts have therefore focused on developing VR2Gather, an open-source platform for real-time volumetric communication and interaction that can also enable social VR experiences [1]. The platform consists of modules that capture, encode, transmit and render volumetric content in real time, and it can be adapted to different 3D capture devices and camera configurations, network protocols and rendering environments. Our system thus offers an initial solution for enhanced communication and collaboration between professionals in the cultural and creative sector.
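To illustrate why a modular capture, encode, transmit and render chain makes a system adaptable to different devices and protocols, here is a minimal Python sketch. It rests on loud assumptions: the interfaces (`Capturer`, `Codec`, `Transport`), the synthetic capturer, the 1 mm quantising codec and the in-memory loopback transport are all hypothetical stand-ins, not VR2Gather's actual API, codecs or network stack, and the render stage is reduced to collecting decoded frames.

```python
import struct
from collections import deque
from dataclasses import dataclass
from typing import List, Optional, Protocol, Tuple

Point = Tuple[float, float, float]  # x, y, z in metres (hypothetical frame format)


@dataclass
class Frame:
    timestamp_ms: int
    points: List[Point]


class Capturer(Protocol):
    def capture(self, t_ms: int) -> Frame: ...


class Codec(Protocol):
    def encode(self, frame: Frame) -> bytes: ...
    def decode(self, payload: bytes) -> Frame: ...


class Transport(Protocol):
    def send(self, payload: bytes) -> None: ...
    def receive(self) -> Optional[bytes]: ...


class SyntheticCapturer:
    """Hypothetical stand-in for a depth camera: a fixed 4x4 grid of points at 1 m depth."""

    def capture(self, t_ms: int) -> Frame:
        points = [(x * 0.1, y * 0.1, 1.0) for x in range(4) for y in range(4)]
        return Frame(t_ms, points)


class QuantizingCodec:
    """Hypothetical codec: quantises coordinates to 1 mm, packs each point as three int16."""

    SCALE = 1000  # metres -> millimetres; int16 limits coordinates to about +/-32.7 m
    HEADER = "<QI"  # timestamp (uint64), point count (uint32), little-endian
    POINT = "<3h"  # three signed 16-bit integers

    def encode(self, frame: Frame) -> bytes:
        header = struct.pack(self.HEADER, frame.timestamp_ms, len(frame.points))
        body = b"".join(
            struct.pack(self.POINT, *(round(c * self.SCALE) for c in p)) for p in frame.points
        )
        return header + body

    def decode(self, payload: bytes) -> Frame:
        ts, n = struct.unpack_from(self.HEADER, payload, 0)
        offset = struct.calcsize(self.HEADER)
        size = struct.calcsize(self.POINT)
        points = []
        for i in range(n):
            q = struct.unpack_from(self.POINT, payload, offset + i * size)
            points.append(tuple(c / self.SCALE for c in q))
        return Frame(ts, points)


class LoopbackTransport:
    """In-memory FIFO standing in for a real network transport."""

    def __init__(self) -> None:
        self._queue: deque = deque()

    def send(self, payload: bytes) -> None:
        self._queue.append(payload)

    def receive(self) -> Optional[bytes]:
        return self._queue.popleft() if self._queue else None


def run_pipeline(capturer: Capturer, codec: Codec, transport: Transport, n_frames: int) -> List[Frame]:
    """Capture -> encode -> transmit -> decode; 'rendering' here just collects the frames."""
    rendered = []
    for i in range(n_frames):
        transport.send(codec.encode(capturer.capture(i * 33)))  # roughly 30 fps timestamps
        payload = transport.receive()
        if payload is not None:
            rendered.append(codec.decode(payload))
    return rendered
```

For example, `run_pipeline(SyntheticCapturer(), QuantizingCodec(), LoopbackTransport(), 3)` yields three frames of 16 points each. Because each stage only depends on its interface, swapping in a different capturer, codec or transport leaves the other stages untouched, which is the kind of adaptability the paragraph above describes.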

Developing Metrics for Quality and User Experience in XR

New XR experiences need metrics that adequately capture how users perceive this new medium and how they navigate and interact within it. For example, understanding how users behave in virtual spaces can help us improve their experience and tailor our technology to their needs. In this context, we focused on detecting correlations and similarities among users experiencing social VR, investigating new methodologies to better model user behaviour as well as novel clustering tools [2]. Similarly, understanding how users perceive emerging 3D content such as point clouds, and which features of the content are most salient in terms of visual attention, is fundamental to optimising compression and delivery without compromising the user experience. To address this need, we also proposed new objective quality assessment metrics and a novel, publicly available dataset of eye-gaze data acquired in a VR environment [3].
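As an illustration of pairwise behavioural similarity and clustering in 6-DoF, the Python sketch below compares time-aligned pose samples (position plus viewing direction) of two users and groups users whose similarity meets a threshold. It is a generic stand-in, not the metric or clustering method of [2]: the pose format, the thresholds (`max_dist`, `max_angle`, `threshold`) and the connected-components grouping are all assumptions made for illustration.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical pose sample: (x, y, z, yaw, pitch); position in metres, angles in radians
Pose = Tuple[float, float, float, float, float]


def view_direction(yaw: float, pitch: float) -> Tuple[float, float, float]:
    """Unit viewing vector for a yaw about the vertical (y) axis and a pitch."""
    return (math.cos(pitch) * math.sin(yaw), math.sin(pitch), math.cos(pitch) * math.cos(yaw))


def angle_between(u, v) -> float:
    """Angle in radians between two unit vectors (dot product clamped for safety)."""
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot)))


def similarity(traj_a: List[Pose], traj_b: List[Pose],
               max_dist: float = 0.5, max_angle: float = math.radians(30)) -> float:
    """Fraction of time-aligned samples where both position and viewing direction are close."""
    n = min(len(traj_a), len(traj_b))
    if n == 0:
        return 0.0
    matches = 0
    for pa, pb in zip(traj_a, traj_b):
        dist = math.dist(pa[:3], pb[:3])
        ang = angle_between(view_direction(*pa[3:]), view_direction(*pb[3:]))
        if dist <= max_dist and ang <= max_angle:
            matches += 1
    return matches / n


def cluster_users(trajs: Dict[str, List[Pose]], threshold: float = 0.7) -> List[List[str]]:
    """Group users linked by pairwise similarity >= threshold (connected components, union-find)."""
    parent = {u: u for u in trajs}

    def find(u: str) -> str:
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving
            u = parent[u]
        return u

    for a, b in combinations(trajs, 2):
        if similarity(trajs[a], trajs[b]) >= threshold:
            parent[find(a)] = find(b)

    groups: Dict[str, List[str]] = {}
    for u in trajs:
        groups.setdefault(find(u), []).append(u)
    return sorted(sorted(g) for g in groups.values())
```

Connected components are transitive, so two dissimilar users can end up in the same group through a third one; a stricter alternative would require every pair within a group to be similar (clique-based grouping), at a higher computational cost.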

The TRANSMIXR project is part of Horizon Europe, the European Union’s research and innovation programme for the 2021–2027 period [L1]. The TRANSMIXR consortium includes 19 organisations (seven universities and research centres, seven media practitioners and five industry partners) from 12 European countries, with advanced and complementary expertise and skill sets in European research, media and innovation programmes, as well as in-depth knowledge of AI and XR and their application to the media sector.

The work presented in this article was supported in part by the European Commission’s Horizon Europe programme under grant 101070109 (TRANSMIXR) [L1].

Links:

[L1] https://transmixr.eu

[L2] https://www.cwi.nl/en/news/cwi-and-the-netherlands-institute-for-sound-vision-gave-a-sneek-peek-into-the-future-of-cultural-heritage/

[L3] https://www.dis.cwi.nl/

References:

[1] I. Viola, et al., “VR2Gather: a collaborative, social virtual reality system for adaptive, multiparty real-time communication,” in IEEE MultiMedia, vol. 30, no. 2, pp. 48–59, April–June 2023. https://doi.org/10.1109/MMUL.2023.3263943

[2] S. Rossi, et al., “Extending 3-DoF metrics to model user behaviour similarity in 6-DoF immersive applications,” in Proc. of the 14th ACM Multimedia Systems Conf. (MMSys), ACM, 2023. https://doi.org/10.1145/3587819.3590976

[3] X. Zhou, et al., “QAVA-DPC: eye-tracking based quality assessment and visual attention dataset for dynamic point cloud in 6-DoF,” in Proc. of the IEEE Int. Symposium on Mixed and Augmented Reality (ISMAR), 2023. https://doi.org/10.1109/ISMAR59233.2023.00021

Please contact:

Silvia Rossi, Centrum Wiskunde & Informatica (CWI), The Netherlands