by Gerasimos Arvanitis (University of Patras, AviSense.ai), Aris S. Lalos (ISI, ATHENA R.C.), and Konstantinos Moustakas (University of Patras)

In recent years, Extended Reality (XR) technologies have transformed the interaction between humans and machines, especially in dynamic environments such as automotive and transportation. By providing drivers with relevant Augmented Reality (AR) information about their surroundings, we can enhance their capabilities and potentially improve their behaviour, safety, and decision-making in critical situations within rapidly changing environments. Connected Autonomous Vehicles (CAVs) offer the advantage of intercommunication: they form a network of connected vehicles that enables what is known as cooperative situational awareness. This connectivity presents numerous opportunities, including the sharing of AR information about observed objects-of-interest between vehicles within the system, either simultaneously or asynchronously. Such information transmission allows one vehicle to relay valuable observations to another, enhancing overall system awareness and efficiency.

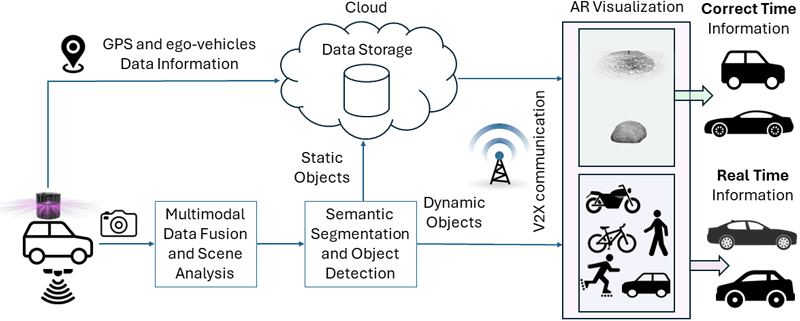

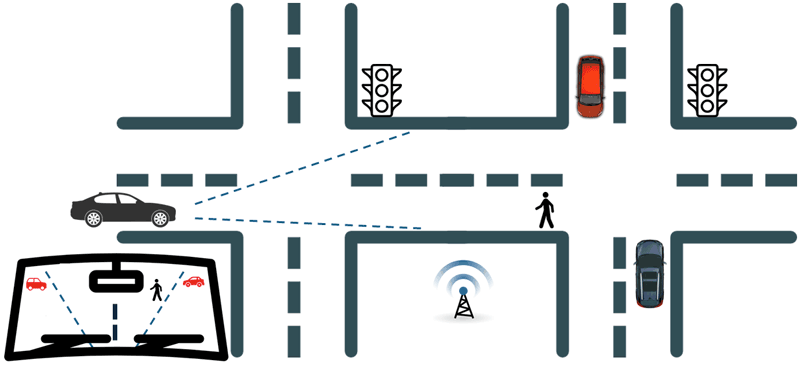

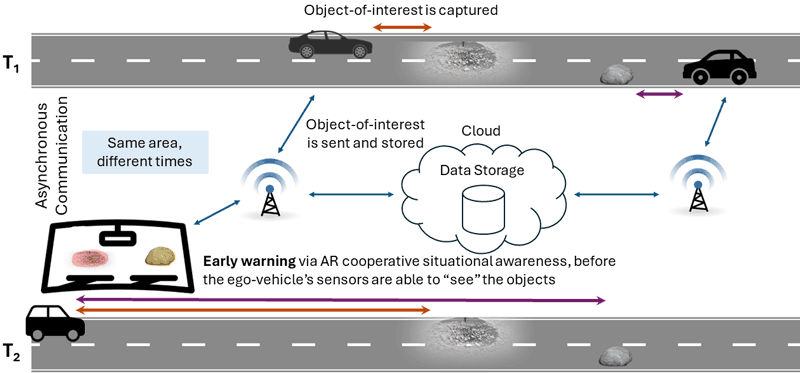

The proposed solution aims to provide intuitive and subtle AR information to improve the driver’s situational awareness and promote a sense of safety, trust, and acceptance. Cooperative information from connected vehicles, facilitated through Vehicle-to-Everything (V2X) infrastructures, will be leveraged to enhance the accuracy of the results. The key components of the methodology are illustrated in the schematic diagram (Figure 1). In this study, we consider two distinct use cases: i) the presence of CAVs with spatiotemporal 4D connectivity, where multiple vehicles share the same map of a town and move simultaneously (Figure 2); and ii) vehicles with only spatial 3D connectivity, where multiple vehicles are in spatial proximity (on the same map) but not necessarily temporal proximity (at different times) (Figure 3).

Figure 1: Overview of the proposed methodology.

Figure 2: Cooperative situational awareness in the simultaneous communication use case.

Figure 3: Cooperative situational awareness in the asynchronous communication use case.

To ensure the driver is efficiently informed about potentially dangerous situations while driving, we have developed an AR rendering notification system, facilitated through a centralised server, that alerts drivers to potentially hazardous objects-of-interest along the road, such as potholes and bumps (static objects) or moving vehicles and Vulnerable Road Users (VRUs) (dynamic objects). The transmitted information includes the Global Positioning System (GPS) location, geometrical characteristics, timestamp, and semantic label of the detected objects. The system then sends the relevant AR information to nearby vehicles, providing an early warning from a significant distance. This helps overcome the limitation of on-board sensors in identifying potentially dangerous objects at long range. For drivers, the AR interface of the vehicle displays the location and nature of the upcoming, potentially dangerous object in a non-distracting manner. Through this communication system, drivers can receive early visual warnings about potential hazards that are not yet within their field of view or are obstructed by other objects, thereby enhancing their performance and decision-making abilities.
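The exchange described above can be sketched in a few lines of Python. This is only an illustration, not the project's implementation. The `HazardReport` layout, its field names, the 300 m default radius, and the optional `max_age_s` filter are all assumptions introduced here. The sketch shows how a server might select the reports relevant to one vehicle by GPS distance, with an optional age limit that loosely separates the simultaneous use case from the asynchronous one.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in metres


@dataclass(frozen=True)
class HazardReport:
    """Hypothetical message layout; field names are illustrative only."""
    lat_deg: float                      # GPS latitude of the object
    lon_deg: float                      # GPS longitude of the object
    size_m: Tuple[float, float, float]  # geometrical characteristics (l, w, h)
    timestamp_s: float                  # detection time, in seconds
    label: str                          # semantic label, e.g. "pothole"


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two GPS positions."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def relevant_reports(reports: List[HazardReport],
                     lat: float, lon: float, now_s: float,
                     radius_m: float = 300.0,
                     max_age_s: Optional[float] = None) -> List[HazardReport]:
    """Return the reports within radius_m of the vehicle.

    max_age_s=None keeps reports of any age (spatial proximity only);
    a finite value also requires temporal proximity.
    """
    selected = []
    for report in reports:
        distance = haversine_m(lat, lon, report.lat_deg, report.lon_deg)
        if distance > radius_m:
            continue  # too far away to be worth a warning
        if max_age_s is not None and now_s - report.timestamp_s > max_age_s:
            continue  # too old for the simultaneous use case
        selected.append(report)
    return selected
```

Under these assumptions, a static pothole reported long ago is still returned when `max_age_s` is left unset, whereas a pedestrian detection would normally be queried with a short age limit.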

The detected objects-of-interest are displayed on an AR interface within the driver’s field of view, such as a head-up display (HUD) or a windshield display. AR object visualisation is achieved through projection. Assuming the positions of both the AR interface and the system of cameras/LiDARs relative to the world are known, a transformation matrix is constructed to map the points of the LiDAR’s point cloud from its coordinate system to the AR interface’s coordinate system. This transformation between the two coordinate systems typically involves a series of operations: scaling, rotation, and translation. However, since both coordinate systems are orthonormal, scaling can be omitted. Furthermore, given that the LiDAR and AR interface are both fixed on the vehicle and aligned with each other, the rotation matrix can also be omitted, as there is no loss of generality in assuming that the two coordinate systems are already aligned. Under these assumptions, the transformation of LiDAR coordinates into the AR interface’s coordinates reduces to a straightforward translation.

To project the points of the point cloud onto the AR interface, we adopt a simple pinhole camera model [1]. For instance, if the AR interface is an AR windshield, then the windshield serves as the image plane and the driver’s head serves as the centre of projection, with the principal point (x0, y0) being the point on the windshield directly in front of it. The focal distance f = (fx, fy) therefore represents the distance from the driver to the image plane. With the dimensions of the image plane (windshield), including the aspect ratio, known, the frustum is fully defined, enabling the projection from a point in 3D windshield coordinates (x, y, z) to pixels (u, v) on the image plane.
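The translation and projection steps can be illustrated with a minimal sketch. It is not the implementation used in [1]. The function names, the axis convention (x to the right, y downwards, z pointing forward from the driver), and the use of pixel units for fx, fy, x0 and y0 are assumptions made here for illustration. The projection itself is the standard pinhole relation u = fx·x/z + x0, v = fy·y/z + y0.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def lidar_to_ar(point_lidar: Vec3, ar_origin_in_lidar: Vec3) -> Vec3:
    """Map a LiDAR point into AR-interface coordinates.

    With no scaling and no rotation, only a translation remains:
    subtract the AR origin's position expressed in the LiDAR frame.
    """
    return (point_lidar[0] - ar_origin_in_lidar[0],
            point_lidar[1] - ar_origin_in_lidar[1],
            point_lidar[2] - ar_origin_in_lidar[2])


def project_to_pixels(point_ar: Vec3,
                      fx: float, fy: float,
                      x0: float, y0: float,
                      width: int, height: int) -> Optional[Tuple[float, float]]:
    """Pinhole projection of (x, y, z) to pixel coordinates (u, v).

    Returns None when the point lies behind the viewer or falls
    outside the image plane, i.e. outside the viewing frustum.
    """
    x, y, z = point_ar
    if z <= 0.0:
        return None  # behind the centre of projection
    u = fx * x / z + x0
    v = fy * y / z + y0
    if 0.0 <= u < width and 0.0 <= v < height:
        return (u, v)
    return None  # projects outside the windshield area


# Usage with made-up numbers: a point 10 m ahead and 1 m to the right.
point = lidar_to_ar((1.0, 0.5, 10.0), ar_origin_in_lidar=(0.0, 0.5, 0.0))
pixel = project_to_pixels(point, fx=1000.0, fy=1000.0,
                          x0=640.0, y0=360.0, width=1280, height=720)
```

Returning `None` for points outside the frustum lets the caller decide how to handle them, for example by showing an edge-of-display indicator instead of an overlay.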

To enhance road safety, the automotive industry initially aimed to create fully autonomous vehicles. However, the technology is not yet mature enough to completely replace the driver. Additionally, unresolved issues concerning legal and societal matters, as well as the trust and acceptance of end-users, hinder the achievement of full-scale autonomy in the near future [2]. In response to this challenge, we assess methods that facilitate a safe, seamless, progressive, and dependable cooperative situational awareness, fostering feelings of trust and acceptance. In future work, explainable AI technologies will be employed to enhance the effectiveness and timeliness of informing drivers about potential hazards or other pertinent AR information. This optimisation of visual content will focus on factors such as explainability, trustworthiness, and ease of understanding. The collected information will be categorised and prioritised to ensure that drivers are not overwhelmed or distracted by irrelevant data. Additionally, efforts will be made to present information in a non-intrusive manner, striking a balance between enhancing situational awareness and avoiding information overload that could distract the driver.

This work is carried out within the framework of the prestigious basic research flagship project PANOPTIS (H.F.R.I. Project Number: 16469), which aims to develop AI-based AR tools that strengthen users’ abilities and improve cooperative situational awareness. The goal is to avoid critical cases of situational disability, such as reduced perceptual capacity due to the physical limitations of human senses, and mental or physical fatigue.

References:

[1] G. Arvanitis et al., “Cooperative saliency-based pothole detection and AR rendering for increased situational awareness,” in IEEE Transactions on Intelligent Transportation Systems. https://doi.org/10.1109/TITS.2023.3327494

[2] G. Arvanitis and K. Moustakas, “Digital twins and the city of the future: sensing, reconstruction and rendering for advanced mobility,” in ITS2023: Intelligent Systems and Consciousness Society, Patra, 2023.

Please contact:

Gerasimos Arvanitis, University of Patras, AviSense.ai, Greece