by Filip Škola, Václav Milata and Fotis Liarokapis (CYENS)

This article introduces an intelligent Extended Reality (XR) teaching assistant that communicates with users in natural language. The application uses artificial intelligence (ChatGPT) to understand students and respond to them through realistic-looking avatars with body language. Teachers can customise the application by adding their own slides to be taught or by choosing a teaching topic to be discussed by two intelligent agents.

Thanks to their positive effect on learning and engagement, XR technologies have become influential in the field of education. XR, which includes virtual reality (VR), augmented reality (AR), and mixed reality (MR), can greatly enhance learning when combined with traditional teaching methods, because it conveys practical experiences to the students. Knowledge acquisition with XR can more easily go beyond theory alone, which allows some skills to be taught more effectively [1, 2]. However, creating XR applications for educational purposes is currently a complex task that demands XR-specific skills and significant time, effort, and financial resources. The XR4ED EU project [L1] aims to address areas needing improvement to position Europe at the forefront of advanced educational technologies. The project’s goals include bringing together the EdTech sector (the field of education technology in Europe) and XR communities and resources to foster innovation and create a central hub (open marketplace) for XR learning and training applications. The XR4ED project uses cascade funding and will fund 20 projects, up to €230,000. It will support start-ups, small- and medium-sized enterprises (SMEs), and industries active in the education sector, as well as schools, high schools, universities, and vocational education and training (VET) institutions, via two Open Calls, each funding ten projects with a 12-month duration. Additionally, fostering an ecosystem of XR technologies and tools for teachers and learners is among the priorities of the project.

One of the major outputs of the XR4ED project is the XR Intelligent Assistant, an AI-powered virtual teacher that interacts with students in an AR environment. It was created by the Extended Experiences [L2] group at CYENS – Centre of Excellence (Nicosia, Cyprus). The front end of the application consists primarily of a virtual agent (or two agents) represented by avatars that speak and understand natural languages and feature advanced body language. Realistic avatars (from Character Creator 4 [L3]) have been used, but teachers can choose their own. The Ready Player Me animation library [L4] was used to implement the body language. In the idle state, the avatars show subtle movements such as shifting weight, blinking, and occasional head turns, mimicking the natural resting state of a human. During speech, the body language becomes more pronounced: the avatars gesture with their hands and change their facial expressions and posture. This dynamic range of body language, which enhances the lip-syncing during speech, supports the avatars’ lifelike presence and interactivity in the AR space. Communication with the agents relies on speech-to-text (STT) and text-to-speech (TTS) capabilities, both implemented using Azure Cognitive Services [L5]. This allows interaction in several languages, so the far-too-common limitation to English only does not apply. TTS also produces visemes, which ensure correct lip-syncing across the different languages.
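The role of visemes in lip-syncing can be illustrated with a minimal sketch. It assumes that each viseme event from the TTS service carries an audio offset in 100-nanosecond ticks and an integer viseme ID; the blend-shape names, the mapping table, and the function `visemes_to_keyframes` are hypothetical and are not taken from the application itself.

```python
from dataclasses import dataclass

TICKS_PER_SECOND = 10_000_000  # assumption: one tick = 100 nanoseconds

# Hypothetical mapping from viseme IDs to avatar blend-shape names.
# Only a few IDs are listed; a real avatar rig would define its own table.
VISEME_TO_BLENDSHAPE = {
    0: "mouth_closed",   # assumed to represent silence
    1: "mouth_open",
    21: "lips_pressed",
}


@dataclass(frozen=True)
class Keyframe:
    time_s: float      # time from the start of the audio, in seconds
    blendshape: str    # name of the mouth shape to show at that time


def visemes_to_keyframes(events):
    """Convert (audio_offset_ticks, viseme_id) pairs into time-sorted keyframes."""
    keyframes = []
    for offset_ticks, viseme_id in sorted(events):
        # Unknown viseme IDs fall back to a neutral closed mouth.
        shape = VISEME_TO_BLENDSHAPE.get(viseme_id, "mouth_closed")
        keyframes.append(Keyframe(offset_ticks / TICKS_PER_SECOND, shape))
    return keyframes


if __name__ == "__main__":
    for frame in visemes_to_keyframes([(5_000_000, 1), (0, 0)]):
        print(frame)
```

Because the keyframes are derived from the synthesised audio rather than from the text, the same conversion step would apply regardless of the spoken language.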

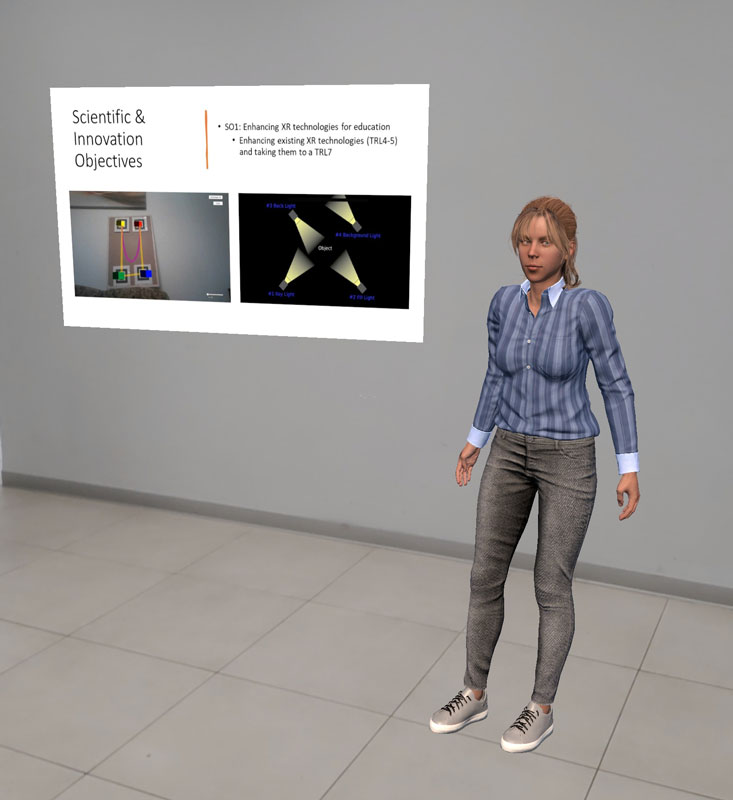

Figure 1: XR Intelligent Assistant presenting prepared PowerPoint slides.

Most importantly, the user’s spoken input, once converted to text, is fed into ChatGPT [L6], an online large language model (LLM) service, which enables advanced forms of natural language communication. This is especially powerful in combination with PowerPoint and video integration. Teachers can use the application to present their PowerPoint slides (Figure 1). The AI capabilities allow the slides to be presented in natural language and individual topics to be explored in arbitrary depth. Students using the application can interrupt the narration at any point and ask the XR assistant questions. Using ChatGPT enhanced with the data from the PowerPoint slides, the assistant can answer the questions and then continue teaching. The assistant can also navigate within the PowerPoint document and understand references to specific slide numbers. When no video or PowerPoint slides are included, learners can use the application in a mode where two AI agents hold an intelligent conversation about a teaching topic (Figure 2). Here too, the learner can interrupt the AI agents and ask questions.
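The article does not describe how the slide data is passed to the language model or how slide references are resolved. One plausible approach is sketched below, assuming the slide text has already been extracted into a list of strings and that the model accepts chat messages as role/content pairs. The helper names `find_slide_reference` and `build_messages`, the prompt wording, and the "slide N" pattern are all hypothetical.

```python
import re


def find_slide_reference(text, slide_count):
    """Return the zero-based index of a slide mentioned as 'slide N', or None."""
    match = re.search(r"\bslide\s+(\d+)\b", text, flags=re.IGNORECASE)
    if match is None:
        return None
    number = int(match.group(1))
    # Ignore references to slides that do not exist in the deck.
    return number - 1 if 1 <= number <= slide_count else None


def build_messages(slides, current_index, question):
    """Assemble chat messages that ground the answer in the slide text."""
    referenced = find_slide_reference(question, len(slides))
    # Stay on the current slide unless the student names another one.
    focus = current_index if referenced is None else referenced
    deck = "\n".join(f"Slide {i + 1}: {text}" for i, text in enumerate(slides))
    system = (
        "You are a teaching assistant presenting the slides below. "
        "Answer questions using the slide content, then resume the lecture.\n"
        f"{deck}\n"
        f"The student is asking about slide {focus + 1}."
    )
    return focus, [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    deck = ["Intro to XR", "AR vs VR", "Summary"]
    focus, messages = build_messages(deck, 0, "Can you explain slide 2 again?")
    print(focus)
    print(messages[0]["content"])
```

In this sketch the returned `focus` index could also drive slide navigation, so that the displayed slide follows the one the student asked about.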

Figure 2: Two intelligent agents discussing a topic in the panoramic image mode.

Although the application is primarily presented in an AR environment, it offers a panoramic image mode that can optionally be used to surround the user in the XR space (Figure 2). This shift towards VR along the MR spectrum brings more immersion and is especially suitable for certain learning scenarios, e.g. learning about historical sites, natural ecosystems, or other content that is bound to a place. The XR Intelligent Assistant has been implemented for the Magic Leap 2 [L7] AR head-mounted display (HMD) and for Android phones. The former offers a very immersive AR experience, but the equipment costs much more than an Android phone, making the latter a practical choice for a classroom. Nevertheless, an implementation for HMDs such as Meta Quest 3 is planned to extend the target user group. Especially in the “VR mode” of the application (with a surrounding panorama), the quality with which the target device reproduces the real world no longer matters.

To conclude, the presented XR Intelligent Assistant is an interactive, multilingual, and highly customisable novel teaching tool (teachers can add their own teaching materials, personalised avatars, and panoramic images) that utilises cutting-edge technology from both the XR and AI fields. It showcases the possibilities of engaging and dynamic knowledge transfer using XR technology, where teachers still need to prepare their lecture (e.g. create the slides), but the presentation itself is delivered entirely by the technology. However, before the XR Intelligent Assistant can be employed in classrooms, several challenges must be resolved, including issues of privacy, ethics, and regulation. Once these practical issues are resolved, the XR Intelligent Assistant has the potential to significantly transform the process of teaching and learning with technology.

This work has received funding from the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No 739578 and the Government of the Republic of Cyprus through the Deputy Ministry of Research, Innovation and Digital Policy. It has also received funding from the European Union under grant agreement No 101093159. Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union. Neither the European Union nor the granting authority can be held responsible for them.

Links:

[L1] https://xr4ed.eu/

[L2] https://ex.cyens.org.cy/

[L3] https://www.reallusion.com/character-creator/

[L4] https://docs.readyplayer.me/ready-player-me/integration-guides/unity/animations/ready-player-me-animation-library

[L5] https://azure.microsoft.com/en-us/products/ai-services

[L6] https://chat.openai.com/

[L7] https://www.magicleap.com/magic-leap-2

References:

[1] G. Papanastasiou et al., “Virtual and augmented reality effects on K-12, higher and tertiary education students’ twenty-first century skills,” Virtual Reality, vol. 23.

[2] P. Pantelidis et al., “Virtual and augmented reality in medical education,” in Med. Surg. Educ. - Past, Present Future, 2018.

[3] F. Škola et al., “Perceptions and Challenges of Implementing XR Technologies in Education: A Survey-Based Study,” in Interactive Mobile Communication, Technologies and Learning, 2023.

Please contact:

Fotis Liarokapis, CYENS – Centre of Excellence, Cyprus