by Asterios Leonidis, Maria Korozi and Constantine Stephanidis (FORTH-ICS)

Recent advancements in Extended Reality (XR) technology have expanded the scope of potential applications, enabling highly immersive solutions that blend digital and physical elements seamlessly. These developments have led to applications such as ARcane Tabletop and CRETE AR, which offer immersive experiences in gaming, historical simulations, and virtual exploration. Additionally, systems like GreenCitiesXR, Research Assistant, and the Digitised Material Preservation Application leverage XR technology to enrich collaboration and creativity.

Over recent years, XR applications have increased in popularity among researchers, enterprises, and consumers alike. With a continuous flow of new XR devices and accessories entering the market, such as HoloLens, Magic Leap, Meta Quest 3, Apple Vision Pro and many other forthcoming products, the XR field is expected to revolutionise the world of computing.

As a result of these recent innovations, the scope of potential applications has increased substantially, and nowadays it is possible to develop highly immersive solutions that harmoniously integrate digital and physical modalities [1]. XR technologies enhance interaction with real-world digital content, provide contextual information, improve spatial awareness, and deliver personalised experiences. In conjunction with tangible interaction, which enables physical engagement, reduces cognitive load, leverages physical affordances and enhances accessibility, users experience intuitive, memorable interactions that stimulate their senses, promote ease of use and improve user acceptance [2].

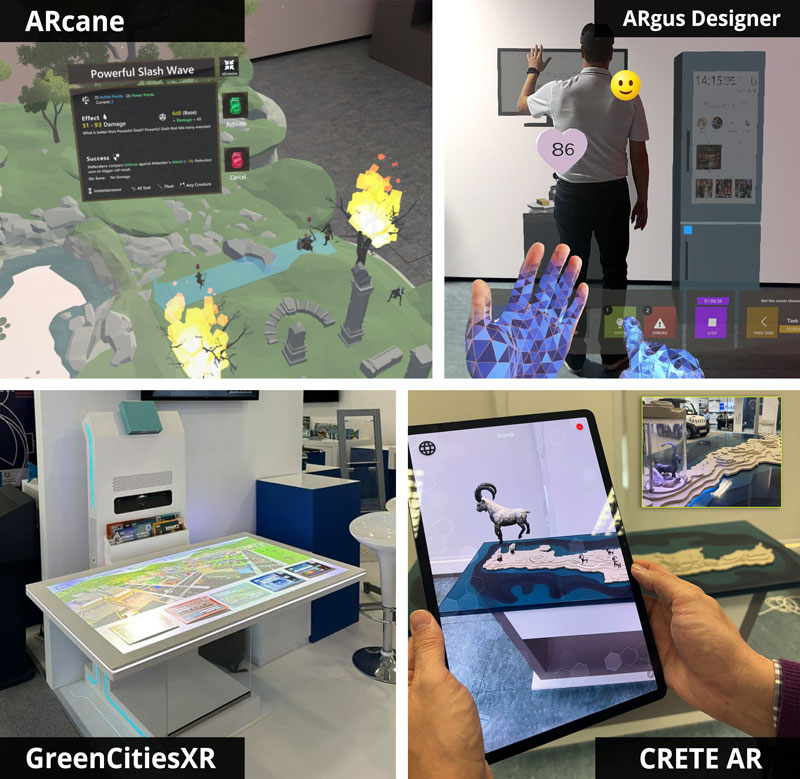

Recognising these possibilities, the HCI Laboratory at FORTH-ICS, in the context of the Ambient Intelligence (AmI) Programme [L1], has been developing a series of applications (Figure 1) and artefacts (e.g. AugmenTable [L2]) targeting various domains (e.g. education, culture, entertainment), which aim to leverage these technologies to enrich User Experience (UX) and promote natural and user-friendly interaction.

Figure 1: Immersive XR applications developed in the context of the AmI programme.

ARcane Tabletop offers a unique blend of physical and digital elements, providing an immersive multiplayer experience in turn-based board games through XR. Players gathered around a table wear Augmented Reality (AR) headsets and interact with holographic 3D terrains and characters. Each character possesses abilities for dynamic interactions, adapting to various scenarios and rulesets. This hybrid approach retains the social and tactile aspects of traditional board games while incorporating immersive virtual enhancements like sound effects and holographic visualisations. Beyond gaming, ARcane enables interactive simulations of historical events (e.g. Battle of Salamis, Battle of Waterloo), allowing players to engage first-hand in strategic battles and better explore historical contexts.

ARgus Designer [3] supports the evaluation of XR applications with a toolkit for real-time testing, data collection, and analysis. It streamlines the process and fosters collaboration among experts, enabling assessment of Intelligent Environments (IEs) using devices like the HoloLens 2. It also supports IE prototyping, which is crucial for environments still under development that may lack essential elements such as smart devices or furniture, so evaluation can take place at different development phases. In detail, ARgus Designer allows the research team to craft virtual experiment scenes that integrate digital objects, and to select the metrics and features that suit the XR environment in collaboration with other stakeholders. During runtime, the team can observe real-time metrics in XR and use the ARgus Workstation for experiment control and data collection. Virtual questionnaires further support expert supervision and minimise disruptions during evaluations. Post-study, the system facilitates data review and experiment replay directly within the XR environment, enabling comprehensive analysis and iterative improvements.

GreenCitiesXR is a serious game that combines physical and digital elements to promote sustainability and environmental awareness. By allowing players to collaborate and make decisions about city infrastructure, the game not only educates them about the importance of sustainable practices but also gives them a tangible way to see the consequences of their choices. Physical cards representing different infrastructure options (e.g. public transport, LED lighting) add a tactile element to the gameplay, making it more immersive and interactive. When players select a card, the city’s “green-o-meter” changes, and immediate visual feedback is provided through the interactive surface and the accompanying MR glasses. This direct visualisation reinforces learning and encourages players to think critically about more environmentally friendly decisions. For example, in a gaming context, when players opt for renewable energy the sky is clear; if fossil-based energy is used instead, the sky fills with smoke. In a more formal context, municipal authorities can use a 3D projected digital twin of the city to visualise policy outcomes, aiding informed decisions for citizen well-being.
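The card-to-feedback loop described above can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the article does not describe how GreenCitiesXR is implemented, so the class names, the 0 to 100 meter range, the card values and the threshold for a clear sky are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InfrastructureCard:
    """A physical card placed on the table (hypothetical model)."""
    name: str
    green_delta: int  # assumed score: positive is greener, negative is more polluting


class City:
    """Tracks an assumed 0-100 'green-o-meter' and derives the visual feedback."""

    def __init__(self, green_meter: int = 50) -> None:
        self.green_meter = green_meter

    def play(self, card: InfrastructureCard) -> None:
        # Apply the card's effect and keep the meter within the assumed range.
        self.green_meter = max(0, min(100, self.green_meter + card.green_delta))

    def sky_state(self) -> str:
        # Assumed threshold: a meter of 50 or more gives a clear sky.
        return "clear" if self.green_meter >= 50 else "smoky"


# Hypothetical cards; the values are illustrative only.
coal_plant = InfrastructureCard("fossil-based energy", -20)
solar_farm = InfrastructureCard("renewable energy", +30)

city = City()
city.play(coal_plant)
sky_after_coal = city.sky_state()
city.play(solar_farm)
sky_after_solar = city.sky_state()
```

In this sketch the sky state is derived from the meter rather than stored, so whatever renders the scene (interactive surface or MR glasses) would always reflect the latest card played.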

Research Assistant constitutes a flexible system designed to support various research processes (e.g. school/university projects, market analyses, literature studies, text classification, etc.). The system augments printed documents with digital information, and allows the addition of virtual bookmarks, labels and notes atop stacks of physical documents. This augmentation is not limited to projection onto paper surfaces, but extends to presenting relevant visual data via wearable headsets to facilitate user exploration and minimise visual clutter on the working area. Additionally, the system features an intelligent digital character projected within the headset, capable of understanding and responding to user commands via text or voice input. Acting as a virtual assistant, it provides on-demand support akin to a physical aid, supporting users throughout the analysis process.

The Digitised Material Preservation Application is an educational tool that integrates printed materials, physical implements (e.g. scissors, glue, chisels, spatula), and digital content to teach the conservation and restoration process of cultural heritage documents, such as maps, in a playful and interactive way. Utilising Mixed Reality, users can visualise the application of 3D printed tools to complete tasks, such as applying a thin layer of glue or replacing a torn part of a vintage map with a piece of washi paper, enhancing the learning experience through immersive visualisation.

Finally, in the field of tourism and culture, CRETE AR [L3] is a system that promotes the virtual exploration of the island of Crete by exploiting cutting-edge XR and tangible technologies. It allows the user to navigate to various points of interest (e.g. cities, monuments, sights) through a combination of digital and haptic interaction. When the user selects a point on the 3D representation of Crete, appropriate digital content (e.g. 3D animated models) is projected onto the Mixed Reality glasses, offering a unique interactive experience. For instance, selecting the Cretan wild goat triggers 3D animals to roam areas where encounters are likely, while a larger model of a goat appears beside the user, enabling close interaction and detailed exploration.

Links:

[L1] https://ami.ics.forth.gr/en/home/

[L2] https://ami.ics.forth.gr/en/project/augmentable/

[L3] https://ami.ics.forth.gr/en/installation/innodays-2023/

References:

[1] F. Flemisch, et al., “Let’s get in touch again: Tangible AI and tangible XR for a more tangible, balanced human systems integration,” in Intelligent Human Systems Integration 2020: Proc. of IHSI, pp. 1007–1013, Springer, 2020.

[2] L. Angelini, et al., “Internet of Tangible Things (IoTT): Challenges and opportunities for tangible interaction with IoT,” in Informatics, vol. 5, no. 1, p. 7, MDPI, 2018.

[3] H. Stefanidi, et al., “The ARgus designer: supporting experts while conducting user studies of AR/MR applications,” in 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), pp. 885–890, IEEE, 2022.

Please contact:

Asterios Leonidis, FORTH-ICS, Greece