by Marco Callieri, Daniela Giorgi (CNR-ISTI), Andrea Maggiordomo (University of Milan) and Gianpaolo Palma (CNR-ISTI)

Successful Extended Reality (XR) applications require 3D content that provides rich sensory feedback to users. In the European project SUN, the Visual Computing Lab at CNR-ISTI is investigating novel techniques for creating 3D assets for XR solutions, with 3D objects featuring both accurate appearance and estimated mechanical properties. Our research leverages Artificial Intelligence (AI), computer graphics, modern sensing, and computational fabrication techniques. The application fields include XR-mediated training environments for industry, remote social interaction for psychosocial rehabilitation, and personalised physical rehabilitation.

One of the essential components of an XR application is the 3D environment, which is populated by 3D assets, i.e. 3D digital models specifically prepared for use in the application. 3D assets are fundamental to the interaction between the user and the virtual/augmented world. Therefore, the quality of assets is pivotal to the quality of the user experience.

The European project SUN – Social and hUman ceNtered XR [L1] is investigating techniques for high-quality 3D asset creation, by improving either the quality of existing assets or the very process of 3D asset creation. The goal is to populate XR applications with 3D content featuring faithfully reconstructed geometry and appearance, and augmented with estimates of physical and mechanical properties, such as inertia properties. The SUN project started in December 2022, led by the National Research Council of Italy, and involves 18 European academic and industrial partners.

Photogrammetry, thanks to its versatility and cost-effectiveness, is the most widely used technique for rapidly creating 3D models from real-world objects. It was therefore a natural choice to address the issues arising in this digitisation workflow. The quality and completeness of the 3D reconstruction depend heavily on the quality of the input photos. Problems such as specular highlights hide the local information needed by the photogrammetric process, resulting in noisy geometry or missing areas in the 3D reconstruction. We thus developed an AI technique that corrects, in the input photos, the local visual problems that affect the photogrammetric pipeline. By training a convolutional neural network (CNN) on rendered images of objects with and without specular behaviour, we obtained a network able either to correct the regions of the input images affected by specular highlights or simply to detect them, so that they can be masked out in the photogrammetry software. Because it pre-processes the input dataset, this approach works independently of the software used for the 3D reconstruction, preserves the original working pipeline, and can easily be extended to correct other common problems in the input photographs.
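To make the detect-and-mask option concrete, the sketch below flags likely highlight pixels with a simple hand-written rule: a pixel is treated as specular when it is very bright and nearly colourless. This is not the CNN described above; it is a much cruder stand-in that only illustrates what a per-photo highlight mask looks like. The function names (`specular_mask`, `keep_mask`) and the two thresholds are assumptions chosen for illustration.

```python
import numpy as np


def specular_mask(rgb, min_value=0.9, max_saturation=0.2):
    """Return a boolean H x W mask, True where a pixel looks like a highlight.

    Heuristic stand-in (not a learned detector): highlights are assumed to be
    bright (high HSV value) and washed out (low HSV saturation).
    """
    arr = np.asarray(rgb)
    if np.issubdtype(arr.dtype, np.integer):
        # Assume 8-bit input and rescale to [0, 1].
        arr = arr / 255.0
    arr = arr.astype(np.float64)

    value = arr.max(axis=-1)      # HSV value: brightest channel
    minimum = arr.min(axis=-1)
    # HSV saturation, defined as 0 for pure black pixels.
    saturation = np.where(
        value > 0, (value - minimum) / np.maximum(value, 1e-12), 0.0
    )
    return (value >= min_value) & (saturation <= max_saturation)


def keep_mask(rgb, **kwargs):
    """Return a uint8 mask: 255 where the pixel should be used, 0 where it is
    flagged as a highlight. Which polarity a given photogrammetry tool expects
    is an assumption here and should be checked against that tool."""
    return np.where(specular_mask(rgb, **kwargs), 0, 255).astype(np.uint8)
```

In practice, one such mask would be saved next to each input photo and loaded by the reconstruction software, so the rest of the pipeline stays unchanged. A fixed threshold like this will also flag genuinely white surfaces, which is one reason a learned detector is attractive.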

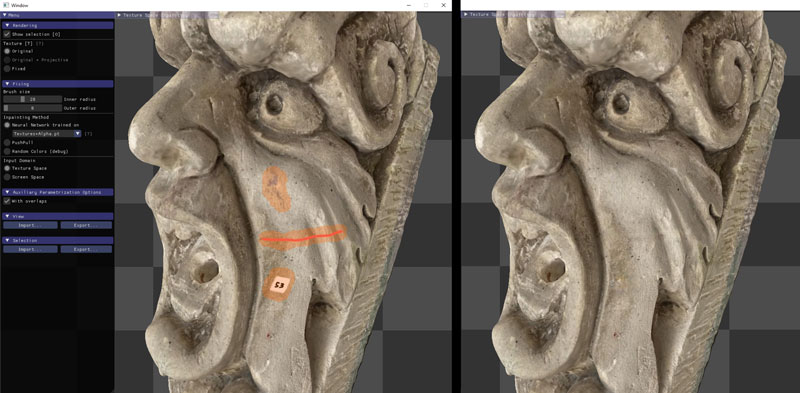

In 3D assets created through photogrammetry, the colour information is generally stored as a texture map generated by projecting the input photos onto the geometry. This process, however, often causes visual artefacts due to small projection errors, local geometric inconsistencies, and differences in illumination across the input images. The only remedy is to correct the final texture, a tedious process that requires specific skills. We developed an AI-based inpainting tool [1] that works interactively, providing a paint interface that lets the asset creator correct local errors in the current texture quickly and easily (Figure 1). We are now working on a more automatic process, in which visual errors are detected and inpainted without user intervention.

Figure 1: The AI-based inpainting tool can be used to locally correct errors in texture maps using a paint interface.

Finally, we are working on a technique for acquiring the physical properties of a 3D object, such as its centre of mass and inertia tensor. In 3D data acquisition, the focus is typically on capturing the shape and appearance of objects. However, for truly immersive interaction within an XR environment, the physical properties of 3D objects, such as mass distribution, play a fundamental role, as they support the simulation and rendering of plausible physical behaviour and realistic feedback to humans. Many earlier efforts to acquire mechanical properties either assumed a uniform and known object density or demanded costly laboratory setups. Our challenge is to obtain precise and dependable estimates of an object’s physical properties from multimodal data acquired with inexpensive hardware. Our technique leverages a novel acquisition device featuring an image acquisition box, a sensorised gripper for controlled object manipulation, and a 3D-printed object with controllable mass distribution for generating training data. AI techniques and data fusion algorithms are under study to learn the expected inertia tensor and other relevant physical characteristics that best match the anticipated sensor readings.
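For readers unfamiliar with these quantities, the following sketch computes the centre of mass and the inertia tensor of an object approximated by point masses (for example, voxel centres with a known mass each). It covers only the forward computation when the mass distribution is already known, which is exactly the assumption the acquisition technique above aims to avoid; the function names are illustrative, not part of the project's software.

```python
import numpy as np


def centre_of_mass(points, masses):
    """Mass-weighted mean position of N point masses (points: N x 3)."""
    points = np.asarray(points, dtype=float)
    masses = np.asarray(masses, dtype=float)
    return (masses[:, None] * points).sum(axis=0) / masses.sum()


def inertia_tensor(points, masses, about=None):
    """3 x 3 inertia tensor of N point masses.

    Computed about `about`, which defaults to the centre of mass:
        I = sum_i m_i * (|r_i|^2 * Id - r_i r_i^T),  r_i = p_i - about
    """
    points = np.asarray(points, dtype=float)
    masses = np.asarray(masses, dtype=float)
    if about is None:
        about = centre_of_mass(points, masses)
    r = points - np.asarray(about, dtype=float)
    r_squared = (r * r).sum(axis=1)
    outer_sum = np.einsum("i,ij,ik->jk", masses, r, r)  # sum_i m_i r_i r_i^T
    return (masses * r_squared).sum() * np.eye(3) - outer_sum


# Same geometry, different mass distribution: two points on the x axis.
points = [[-1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
uniform = inertia_tensor(points, [1.0, 1.0])
lopsided = inertia_tensor(points, [3.0, 1.0])
```

The two calls at the end use identical geometry but different masses and yield different tensors, which illustrates why shape alone, without an estimate of the mass distribution, is not enough to simulate plausible physical behaviour.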

The techniques described above will find application in different scenarios. A first scenario is the development of effective XR applications for training the industrial workforce, answering the pressing demand for continuous upskilling and reskilling. The fast virtualisation of the environments where industrial procedures take place, together with the rendering of physical interaction in XR, would enable the creation of a safe yet realistic environment for training in safety and security procedures, and for practising real-time decision-making in potentially dangerous situations.

As a second scenario, curated 3D assets for XR environments can support rehabilitation, both for psychosocial and physical issues. Novel techniques for high-quality 3D asset creation would support the variability and adaptation of exercises to the patients’ needs, by giving therapists a means to rapidly enrich the virtual scenario with objects from the physical world. Also, the recorded data about patients can be augmented with information about the physical interaction with manipulated 3D objects, to assess progress during treatment.

A realistic XR environment cannot do without assets generated from real-world objects, but this digitisation process is currently a bottleneck. Besides the objective of obtaining better 3D models, our work in the SUN project aims to streamline this process in a way that does not disrupt the standard tools and procedures already established in the field, making the creation of XR environments cost-effective and scalable.

Link:

[L1] https://www.sun-xr-project.eu/

Reference:

[1] A. Maggiordomo, P. Cignoni, and P. M. Tarini, “Texture inpainting for photogrammetric models,” Computer Graphics Forum, 42: e14735, 2023. https://doi.org/10.1111/cgf.14735

Please contact:

Daniela Giorgi, CNR-ISTI, Italy