Leveraging the wisdom of the crowd to improve software quality through a recommendation system based on StackOverflow, a popular Q&A website for programmers.

Debugging continues to be a costly activity in software development. However, there are techniques that decrease the overall cost of bugs (i.e., bugs become cheaper to fix) and increase reliability (i.e., on the whole, more bugs are fixed).

Some bugs are deeply rooted in the domain logic, but others are independent of the specifics of the application being debugged. Bugs in this latter category are called “crowd bugs”: unexpected and incorrect behaviours that result from a common and intuitive usage of an application programming interface (API). In this project, our research group at Inria Lille (France) set out to minimize the difficulties associated with fixing crowd bugs. We propose a novel debugging approach for crowd bugs [1], based on matching the piece of code being debugged against related pieces of code reported by the crowd on a question and answer (Q&A) website.

To better define what a “crowd bug” is, let us begin with an example. In JavaScript, the function parseInt parses the string given as input and returns the corresponding integer value. Despite this apparently simple description and self-explanatory signature, this function causes problems for many developers, as witnessed by the dozens of Q&As on this topic (http://goo.gl/m9bSJS). Many of them relate to the same question: “Why does parseInt("08") produce 0 and not 8?” The answer is that, in JavaScript engines predating ECMAScript 5, when no radix is given and the argument begins with 0, it may be parsed as an octal number; since 8 is not an octal digit, parsing stops after the leading 0. So why is the question asked again and again? We hypothesize that the semantics of parseInt are counter-intuitive for many people and, consequently, the same issue occurs over and over again in development situations, independently of the domain. The popular Q&A website for programmers, StackOverflow (http://stackoverflow.com), contains thousands of crowd-bug Q&As. In this project, our idea was to harness, at debugging time, the information contained in the coding scenarios posed and answered on this website.
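The parseInt pitfall can be reproduced in a few lines of JavaScript. This is a minimal sketch meant for a modern (ECMAScript 5 or later) engine, where a leading 0 is no longer read as octal by default, so the legacy behaviour is imitated here by passing radix 8 explicitly. Always passing the radix is the usual, engine-independent fix.

```javascript
// Legacy (pre-ES5) engines could treat a leading "0" as an octal prefix when
// no radix was given. Passing radix 8 explicitly reproduces that reading:
// "8" is not an octal digit, so parsing stops after the leading "0".
const legacyReading = parseInt("08", 8);

// Modern engines (ES5 and later) parse "08" as decimal when no radix is given...
const modernDefault = parseInt("08");

// ...but the robust fix, whatever the engine, is to pass the radix explicitly.
const explicitDecimal = parseInt("08", 10);

console.log(legacyReading, modernDefault, explicitDecimal); // 0 8 8
```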

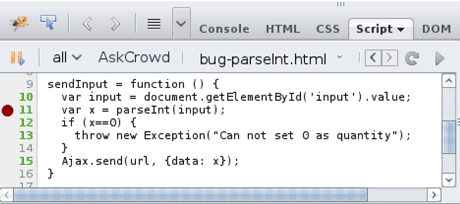

The new debugger we propose works as follows. When faced with a bug they do not understand, developers set a breakpoint in the code presumed to cause the bug and then ask the crowd by clicking the ‘ask the crowd’ button. The debugger then extracts a ‘snippet’, defined as the n lines of code around the breakpoint, and cleans it according to various criteria. This snippet acts as a query, which is submitted to a server that, in turn, retrieves a list of Q&As matching the query. The idea is that the answers to those Q&As contain potential solutions to the bug, which the developer can then reuse. The user interface of our prototype is implemented as a crowd-based extension of Firebug, a JavaScript debugger for Firefox (Figure 1).
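The snippet-extraction step can be sketched as follows. This is a minimal sketch under stated assumptions: the helper names extractSnippet and cleanSnippet are hypothetical, ‘n lines around the breakpoint’ is read as `radius` lines on each side of it, and the cleaning criteria (trimming whitespace, dropping blank and comment-only lines) are illustrative, since the article does not list the actual ones.

```javascript
// Hypothetical helper: return the lines within `radius` lines of the
// breakpoint (1-based line number), clipped to the file boundaries.
function extractSnippet(sourceLines, breakpointLine, radius) {
  const start = Math.max(0, breakpointLine - 1 - radius);
  const end = Math.min(sourceLines.length, breakpointLine + radius);
  return sourceLines.slice(start, end);
}

// Hypothetical cleaning criteria (assumed, not taken from the article):
// trim whitespace, then drop blank lines and lines holding only a // comment.
function cleanSnippet(lines) {
  return lines
    .map((line) => line.trim())
    .filter((line) => line.length > 0 && !line.startsWith("//"));
}

// Example: a breakpoint on line 3 of a small file, with a radius of 2.
const source = [
  "var day = '08';",
  "// convert to number",
  "var d = parseInt(day);",
  "",
  "console.log(d);",
];
const query = cleanSnippet(extractSnippet(source, 3, 2)).join("\n");
console.log(query);
```

The resulting string would then be sent to the recommendation server as the query.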

Figure 1: A screenshot of our prototype crowd-enhanced JavaScript debugger. The ‘AskCrowd’ button selects the snippet surrounding the breakpoint (Line 11: red circle). A list of answers that closely match the problem is then automatically retrieved from StackOverflow. In this case, the first result is the solution: the prefix of the input string has a meaning for parseInt that must be taken into account.

To determine the viability of this approach, our first task was to check whether the search engines of Q&A websites such as StackOverflow handle snippet inputs well. Our approach queries the Q&As using code only, as opposed to text written by developers. To investigate this question, we took a dataset of 70,060 StackOverflow Q&As that were determined to possibly relate to JavaScript crowd bugs (dataset available on request). From this dataset, 1,000 Q&As and their respective snippets were randomly extracted. We then performed 1,000 queries to the StackOverflow search engine, using only the snippets as input. This analysis yielded the following results: 377 snippets were considered invalid queries, 374 snippets yielded no results (i.e., the expected Q&A was not found), and 146 snippets yielded a perfect match (i.e., the expected Q&A was ranked #1).
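The outcome categories used in this evaluation can be expressed as a small classification function. This sketch assumes a hypothetical record per query (expectedId, valid, rankedIds) and reads ‘no results’ as ‘the expected Q&A is not in the returned list’; queries that find the expected Q&A below rank #1 go into a fourth bucket, lowerRank. The records in the example are toy data, not the article's dataset.

```javascript
// Hypothetical record for one evaluation query:
//   expectedId: id of the Q&A the snippet was extracted from
//   valid:      whether the search engine accepted the snippet as a query
//   rankedIds:  ids of the returned Q&As, best-ranked first
function classify({ expectedId, valid, rankedIds }) {
  if (!valid) return "invalid";
  const rank = rankedIds.indexOf(expectedId);
  if (rank === -1) return "notFound";    // expected Q&A absent from results
  if (rank === 0) return "perfectMatch"; // expected Q&A ranked #1
  return "lowerRank";                    // found, but below rank #1
}

// Count how many queries fall into each category.
function tally(records) {
  const counts = { invalid: 0, notFound: 0, perfectMatch: 0, lowerRank: 0 };
  for (const record of records) {
    counts[classify(record)] += 1;
  }
  return counts;
}

// Toy records for illustration only.
const toyRecords = [
  { expectedId: 1, valid: false, rankedIds: [] },
  { expectedId: 2, valid: true, rankedIds: [] },
  { expectedId: 3, valid: true, rankedIds: [3, 7] },
  { expectedId: 4, valid: true, rankedIds: [9, 4] },
];
console.log(tally(toyRecords));
```

Each toy record lands in a different bucket, so every count here is 1; applied to the real 1,000 queries, the perfectMatch count divided by the total gives the rank #1 rate discussed in the text.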

These results indicate that StackOverflow does not handle code snippets used as queries particularly well: only 146 of the 1,000 snippets (14.6%) retrieved the expected Q&A at rank #1.

In response to this issue, we introduced pre-processing functions aimed at improving the matching quality between the snippet being debugged and those in the Q&A repository. We experimented with different pre-processing functions and determined that the best one is based on a careful filtering of the snippet's abstract syntax tree. Using this pre-processing function, we repeated the evaluation described above; this time, 511 snippets yielded the expected Q&A at rank #1, compared with 146 without pre-processing (full results can be found in our online technical report [1]). Consequently, we integrated this pre-processing function into our prototype crowd-bug debugger to maximize the chances of finding a viable solution.
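To illustrate the kind of filtering involved, the following sketch keeps only what tends to be shared across projects: the names of called functions or methods and string literals, discarding local variable names, keywords and punctuation. It is explicitly a token-level stand-in built on a regular expression, not the AST-based function evaluated in the article (which would typically rely on a JavaScript parser); preprocessSnippet and the keyword list are hypothetical.

```javascript
// Keywords that can be followed by "(" but are not API calls (assumed list).
const KEYWORDS = new Set(["if", "for", "while", "switch", "catch", "function", "return", "typeof"]);

// Hypothetical token-level approximation of AST filtering: keep string
// literals and the names of called functions/methods, drop everything else.
function preprocessSnippet(snippet) {
  const kept = [];
  // Alternative 1: a single- or double-quoted string literal (escapes allowed).
  // Alternative 2: an identifier immediately followed by "(" (a call).
  const tokenPattern = /(["'])(?:\\.|(?!\1)[^\\])*\1|([A-Za-z_$][\w$]*)\s*\(/g;
  let match;
  while ((match = tokenPattern.exec(snippet)) !== null) {
    if (match[2] === undefined) {
      kept.push(match[0]); // string literal, quotes included
    } else if (!KEYWORDS.has(match[2])) {
      kept.push(match[2]); // called function or method name
    }
  }
  return kept.join(" ");
}

const snippet = "var day = '08';\nvar d = parseInt(day);\nif (d > 7) { console.log(d); }";
console.log(preprocessSnippet(snippet)); // '08' parseInt log
```

On this parseInt snippet, the query shrinks to the literal that triggers the bug and the APIs being called, which are the parts most likely to also appear in a matching StackOverflow question.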

Beyond this case, we are conducting further research which aims to leverage crowd wisdom to improve the automation of software repair and contribute to the overarching objective of achieving more resilient and self-healing software systems.

Links:

https://team.inria.fr/spirals

http://stackoverflow.com

Reference:

[1] M. Monperrus, A. Maia: “Debugging with the Crowd: a Debug Recommendation System based on Stackoverflow”, Technical report #hal-00987395, INRIA, 2014.

Please contact:

Martin Monperrus

University Lille 1, France

Inria/Lille1 Spirals research team

Tel: +33 3 59 35 87 61

E-mail: