by Stefano Nativi, Keith G. Jeffery and Rebecca Koskela

RDA is about interoperation for dataset re-use. Datasets are distributed over many nodes. Those described by metadata can be discovered; those cited by publications or by other datasets carry navigational information. Consequently, two major forms of access request exist: (1) download of complete datasets, based on a citation or on (a query over) metadata, and (2) retrieval of the relevant parts of dataset instances through a query across datasets.

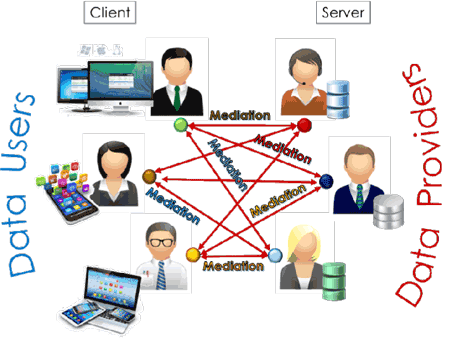

Conventional end-to-end Mediator

Client and/or server developers need to write mediation software that, given two dataset models A and B, transforms instances of A into B or of B into A. Given n data models, this requires n*(n-1) (i.e. almost n²) mediation modules, as depicted in Figure 1. The programmer has to understand each data model, manually match/map attributes and specify the algorithm for instance conversion. Clearly, this does not scale.
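To make the scaling argument concrete, the short Python sketch below counts converters, assuming that every ordered pair of distinct models (A to B and B to A counted separately) needs its own module, and compares that with the one-mapping-per-model count of a canonical approach. The function names are illustrative only and do not come from any particular tool.

```python
from itertools import permutations


def end_to_end_modules(models: list[str]) -> list[tuple[str, str]]:
    """One one-directional converter per ordered pair (source, target), source != target."""
    return list(permutations(models, 2))


def canonical_mappings(models: list[str]) -> int:
    """One mapping per model to/from a canonical representation C."""
    return len(models)


if __name__ == "__main__":
    for n in (2, 5, 10, 50):
        models = [f"M{i}" for i in range(n)]
        print(n, len(end_to_end_modules(models)), canonical_mappings(models))
```

For 10 models this gives 90 end-to-end converters against 10 canonical mappings; for 50 models, 2450 against 50.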

Figure 1: Conventional end-to-end mediation approach.

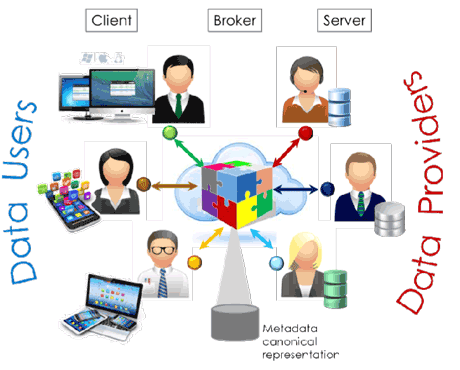

Figure 2: The C-B-S archetype.

Mediating with canonical representation

Increasingly, mediators are written using a superset canonical representation C internally, and instances of A and B are converted to C. The programmer has to understand each dataset (already understanding the canonical representation) and manually match/map attributes, specifying the algorithm for the conversion of instances. C grows as more datasets are added, causing backward-compatibility issues. This technique is applicable within a restricted research domain where datasets have similar data attributes. Although the number of conversions is reduced to n, the evolution of C and software maintenance incur significant costs, and the restriction to one research domain precludes multidisciplinary interoperability.
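A minimal sketch of mediation through a canonical representation is shown below, assuming a toy C made of two attributes ("site" and "time") and two hypothetical models A and B whose attribute names are invented for illustration. Each model registers only a conversion to and from C, so converting A to B always passes through C.

```python
from typing import Callable

Record = dict[str, object]
Converter = Callable[[Record], Record]


class CanonicalMediator:
    """Hub-and-spoke mediation: every model converts to and from C only."""

    def __init__(self) -> None:
        self._to_c: dict[str, Converter] = {}
        self._from_c: dict[str, Converter] = {}

    def register(self, model: str, to_c: Converter, from_c: Converter) -> None:
        self._to_c[model] = to_c
        self._from_c[model] = from_c

    def convert(self, record: Record, source: str, target: str) -> Record:
        # A -> C -> B: no direct A-to-B converter is ever written.
        return self._from_c[target](self._to_c[source](record))


def rename(mapping: dict[str, str]) -> Converter:
    """Converter that renames attributes; attributes missing from `mapping` are dropped,
    which is why C tends to grow as new datasets must be represented."""
    return lambda rec: {mapping[k]: v for k, v in rec.items() if k in mapping}


def invert(mapping: dict[str, str]) -> dict[str, str]:
    return {v: k for k, v in mapping.items()}


# Hypothetical models A and B, each mapped once to the canonical attributes.
A_TO_C = {"stationName": "site", "obsTime": "time"}
B_TO_C = {"site_id": "site", "timestamp": "time"}

mediator = CanonicalMediator()
mediator.register("A", rename(A_TO_C), rename(invert(A_TO_C)))
mediator.register("B", rename(B_TO_C), rename(invert(B_TO_C)))

print(mediator.convert({"stationName": "S1", "obsTime": "2013-05-01T12:00Z"}, "A", "B"))
# {'site_id': 'S1', 'timestamp': '2013-05-01T12:00Z'}
```

Adding a third model means registering one more pair of converters rather than writing converters to every existing model; an attribute that C cannot hold is silently lost, which illustrates the pressure to keep extending C.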

Extending client-server archetype: brokering services

To address these issues, a new approach [1] can be applied, based on a 3-tier Client-Broker-Server (C-B-S) architecture that introduces an independent broker, as shown in Figure 2. Mediation moves from the client and server to the broker. This provides the following benefits:

- Data providers and users do not have to be ICT experts, saving time for research;

- The broker developers are data scientists; they implement a complex canonical representation and maintain it for sustainability;

- Broker developers are domain agnostic and can implement optimised mediation across domains;

- A 3-tier mediation approach is well supported by cloud-based services.
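The division of labour in the C-B-S archetype can be sketched as follows, under clearly hypothetical assumptions: each server is wrapped by an adapter registered with the broker, clients send one canonical query to the broker only, and the broker fans the query out across servers and returns canonical records. The server contents, adapter functions and attribute names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable

Record = dict[str, object]
# An adapter wraps one server: it answers a canonical query with canonical records.
Adapter = Callable[[dict[str, object]], Iterable[Record]]


@dataclass
class Broker:
    """Hypothetical middle tier of a Client-Broker-Server (C-B-S) setup."""
    adapters: dict[str, Adapter] = field(default_factory=dict)

    def register_server(self, name: str, adapter: Adapter) -> None:
        # Mediation code lives in the broker, not in clients or servers.
        self.adapters[name] = adapter

    def query(self, criteria: dict[str, object]) -> list[Record]:
        # Fan the query out across all servers and tag each record with its source.
        results = []
        for name, adapter in self.adapters.items():
            for rec in adapter(criteria):
                results.append({**rec, "source": name})
        return results


# Two hypothetical servers holding data in their own native formats.
ocean_server = [{"param": "temperature", "val": 12.1}, {"param": "salinity", "val": 35.0}]
met_server = [("temperature", 18.4), ("wind_speed", 5.2)]


def ocean_adapter(criteria: dict[str, object]) -> Iterable[Record]:
    return ({"variable": r["param"], "value": r["val"]}
            for r in ocean_server if r["param"] == criteria.get("variable"))


def met_adapter(criteria: dict[str, object]) -> Iterable[Record]:
    return ({"variable": v, "value": x}
            for v, x in met_server if v == criteria.get("variable"))


broker = Broker()
broker.register_server("ocean", ocean_adapter)
broker.register_server("met", met_adapter)
print(broker.query({"variable": "temperature"}))
# [{'variable': 'temperature', 'value': 12.1, 'source': 'ocean'}, {'variable': 'temperature', 'value': 18.4, 'source': 'met'}]
```

Neither the client nor the servers change when a new server is added; only a new adapter is registered with the broker.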

However, this approach introduces new challenges:

- Broker governance: interoperability and sharing require trust;

- Community view of interoperability: data scientists working across different domains are required while the community evolves from Client-Server interoperability to the revolutionary Bring-Your-Data (BYD) approach.

Currently, a broker implements a mediation service based on C. The following sections describe the evolution of metadata-assisted brokering.

Metadata Assisted Simple Brokering: This places dataset descriptions (metadata) outside the conversion software. Tools assist the match/map between the metadata and the generation of software to perform the instance conversions. There is no automated production software for this task [2], although some prototype systems providing partial automation exist [3]. They usually provide graph representations of the dataset metadata for A and B, propose matching entities/attributes of A and B, and allow the data scientist to correct the proposed matches. If equivalent attributes are of different types (unusual) or in different units/precision, transformations are suggested or entered manually. Based on this specification, the instance conversion software is then written by hand, partially generated or fully generated, depending on the sophistication of the tools. Matching is difficult if the datasets differ in character representations/languages, requiring sophisticated knowledge engineering with domain multilingual ontologies. Because each pair of datasets still needs its own mapping, this technique resurrects the n*(n-1) problem with its associated scalability issues.
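The propose-then-correct workflow can be sketched as below. This is not the method of the prototype systems cited above: it swaps in a simple name-similarity heuristic (Python's `difflib.SequenceMatcher`), assumes each schema is a hypothetical mapping from attribute name to unit, flags unit mismatches as needing a transformation, and lets the data scientist add corrections for attributes the heuristic missed.

```python
from difflib import SequenceMatcher


def normalise(name: str) -> str:
    # Ignore case and common separators when comparing attribute names.
    return name.lower().replace("_", "").replace("-", "")


def propose_matches(schema_a: dict[str, str], schema_b: dict[str, str],
                    threshold: float = 0.6) -> list[tuple[str, str, float, bool]]:
    """Propose A->B matches as (attr_a, attr_b, score, needs_unit_transform).

    Each schema maps attribute name -> unit. Only the best-scoring B attribute
    per A attribute is proposed, and only if it reaches `threshold`.
    """
    proposals = []
    for a, unit_a in schema_a.items():
        best, best_score = None, 0.0
        for b in schema_b:
            score = SequenceMatcher(None, normalise(a), normalise(b)).ratio()
            if score > best_score:
                best, best_score = b, score
        if best is not None and best_score >= threshold:
            proposals.append((a, best, round(best_score, 2), unit_a != schema_b[best]))
    return proposals


# Hypothetical metadata for datasets A and B.
schema_a = {"sea_temp": "degF", "Latitude": "deg", "obs_time": "ISO8601"}
schema_b = {"seaTemperature": "degC", "lat": "deg", "observationTime": "ISO8601"}

proposals = propose_matches(schema_a, schema_b)
print(proposals)
# [('sea_temp', 'seaTemperature', 0.67, True), ('obs_time', 'observationTime', 0.64, False)]

# The data scientist reviews the proposals and supplies the match the heuristic missed.
mapping = {a: b for a, b, _, _ in proposals}
mapping.update({"Latitude": "lat"})
```

The resulting `mapping`, together with the flagged unit transformation, is the kind of specification from which conversion software would then be written or generated; note that it covers only the single pair A and B.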

Metadata Assisted Canonical Brokering: The next step places canonical metadata outside the conversion software, and the metadata of any dataset of interest is matched/mapped to it. Again, the kinds of tools mentioned above are used. This reduces the n*(n-1) problem to n. However, a canonical metadata scheme covering all datasets would be a huge superset. Restricting the canonical metadata (based on commonality among entities/attributes) to what is required for contextualisation (of which discovery is a subset) preserves the reduction from n*(n-1) to n for much of the processing. Only when connecting data to software with detailed, domain-specific schemas is it necessary to write or generate specific software.
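The idea of a restricted canonical metadata scheme can be sketched as follows. The canonical record here (identifier, title, bounding box, year range) is a hypothetical stand-in for contextual metadata, and schemes X and Y are invented native formats. Each scheme needs exactly one mapping, after which discovery runs over canonical records only; domain-specific detail such as calibration data is deliberately left out of the canonical record.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextualRecord:
    """Hypothetical restricted canonical metadata: only what discovery needs."""
    identifier: str
    title: str
    bbox: tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat)
    start_year: int
    end_year: int


def from_scheme_x(md: dict) -> ContextualRecord:
    # Hypothetical scheme X: flat bounding box and ISO date strings.
    return ContextualRecord(md["id"], md["name"],
                            (md["west"], md["south"], md["east"], md["north"]),
                            int(md["from"][:4]), int(md["to"][:4]))


def from_scheme_y(md: dict) -> ContextualRecord:
    # Hypothetical scheme Y: nested coverage; extra domain detail is not carried over.
    cov = md["coverage"]
    return ContextualRecord(md["ref"], md["title"], tuple(cov["bbox"]),
                            cov["years"][0], cov["years"][1])


def overlaps(a: tuple, b: tuple) -> bool:
    # Axis-aligned bounding boxes intersect (edges touching counts as overlap).
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def discover(records: list[ContextualRecord], bbox: tuple, year: int) -> list[str]:
    return [r.identifier for r in records
            if overlaps(r.bbox, bbox) and r.start_year <= year <= r.end_year]


records = [
    from_scheme_x({"id": "x-1", "name": "Adriatic SST", "west": 12.0, "south": 40.0,
                   "east": 20.0, "north": 46.0, "from": "2001-01-01", "to": "2010-12-31"}),
    from_scheme_y({"ref": "y-2", "title": "Baltic salinity",
                   "coverage": {"bbox": [10.0, 53.0, 30.0, 66.0], "years": [2005, 2015]},
                   "sensor_calibration": {"offset": 0.01}}),
]
print(discover(records, (13.0, 41.0, 15.0, 43.0), 2008))  # ['x-1']
```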

Metadata Assisted Canonical Brokering at Domain Level: The above technique for contextual metadata may also be applied at a detailed level, restricted to a single domain. This is not applicable to multidisciplinary interoperation, but within any particular domain there is considerable commonality among entities/attributes. For example, in the environmental sciences many datasets have geospatial and temporal coordinates, and time-series data have similar attributes (e.g. temperature and salinity from ocean sensors).
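A domain-level canonical record for ocean time series might look like the sketch below, assuming a hypothetical `Observation` type with position, UTC time, variable name, value and canonical unit. Two invented sensor formats (a buoy reporting epoch seconds and Fahrenheit, a glider reporting ISO 8601 local time and Celsius) are each mapped once, including the unit and time-zone transformations, after which their data can be merged directly.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Observation:
    """Hypothetical domain-level canonical record for ocean time series."""
    lat: float
    lon: float
    time: datetime  # always timezone-aware, UTC
    variable: str   # e.g. "sea_water_temperature", "sea_water_salinity"
    value: float
    unit: str       # canonical units: "degC" for temperature, "PSU" for salinity


def from_buoy_a(row: dict) -> list[Observation]:
    # Hypothetical format A: epoch seconds, temperature in Fahrenheit.
    t = datetime.fromtimestamp(row["epoch"], tz=timezone.utc)
    temp_c = round((row["temp_f"] - 32) * 5 / 9, 2)
    return [
        Observation(row["lat"], row["lon"], t, "sea_water_temperature", temp_c, "degC"),
        Observation(row["lat"], row["lon"], t, "sea_water_salinity", row["sal"], "PSU"),
    ]


def from_glider_b(row: dict) -> list[Observation]:
    # Hypothetical format B: ISO 8601 time with UTC offset, temperature in Celsius.
    t = datetime.fromisoformat(row["time"]).astimezone(timezone.utc)
    return [
        Observation(row["lat"], row["lon"], t, "sea_water_temperature", row["temperature"], "degC"),
        Observation(row["lat"], row["lon"], t, "sea_water_salinity", row["salinity"], "PSU"),
    ]


buoy = from_buoy_a({"lat": 43.1, "lon": 13.9, "epoch": 1367409600, "temp_f": 59.0, "sal": 38.2})
glider = from_glider_b({"lat": 43.2, "lon": 14.0, "time": "2013-05-01T14:00:00+02:00",
                        "temperature": 15.3, "salinity": 38.1})

# Both sources now share coordinates, time base and units, so they merge directly.
series = sorted(buoy + glider, key=lambda o: (o.variable, o.lat))
temps = [o.value for o in series if o.variable == "sea_water_temperature"]
print(temps)  # [15.0, 15.3]
```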

The advantage of Metadata

The use of metadata and associated matching/mapping in brokering either provides a specification for the programmer writing the mediating software or generates that software, fully or partially. Data interoperability, researched intensively since the 1970s, still lacks fully automated processes. However, partial automation based on contextual metadata has considerably reduced the cost and time required to provide interoperability.

Metadata Assisted Brokering in RDA

Metadata is omnipresent in RDA activities; four groups specialise in metadata, as described in an accompanying article. These groups are now working closely with the Brokering Governance WG (https://rd-alliance.org/groups/brokering-governance.html) to promote techniques for dataset interoperation.

Link:

https://rd-alliance.org/groups/brokering-governance.html

References:

[1] S. Nativi, M. Craglia, J. Pearlman: “Earth Science Infrastructures Interoperability: The Brokering Approach”, IEEE JSTARS, vol. 6, no. 3, pp. 1118-1129, 2013.

[2] M. J. Franklin, A. Y. Halevy, D. Maier: “A first tutorial on dataspaces”, PVLDB, vol. 1, no. 2, pp. 1516-1517, 2008. http://www.vldb.org/pvldb/1/1454217.pdf

[3] K. Skoupy, J. Kohoutkova, M. Benesovsky, K.G. Jeffery: “Hypermedata Approach: A Way to Systems Integration”, in Proc. of ADBIS’99, Maribor, Slovenia, 1999, ISBN 86-435-0285-5, pp. 9-15.

Please contact:

Stefano Nativi

CNR, Italy

E-mail: