by Liming Chen and Chris Nugent

OntoFarm aims to co-ordinate and streamline the core activities of activity recognition in Ambient Assisted Living (AAL), providing a systematic solution that is scalable and applicable to real-world use cases such as smart homes.

Ambient Assisted Living (AAL) is a recently emerged area of applied computing that aims to provide technology-driven solutions for independent living and ageing in place. It is a multi-faceted process that involves activity and environment monitoring, data collection and processing, activity modelling and recognition, and assistance provisioning. Among these processes, activity modelling and recognition play a pivotal role, since assistance can only be provided once the activity an inhabitant is performing has been detected. Activity recognition has received much attention in recent years, and techniques have progressed from image- and video-based processing systems to approaches that use an array of heterogeneous sensors seamlessly embedded within the environment. Although contemporary machine learning techniques have been applied successfully in this domain, the central challenge in producing a truly scalable solution is enabling an activity recognition system to cope with the diversity and uniqueness of all possible scenarios. To address this challenge, research has moved towards using domain knowledge, such as user activity profiles, domain-specific constraints and common-sense heuristics, as the basis for a new generation of approaches to activity modelling and recognition.

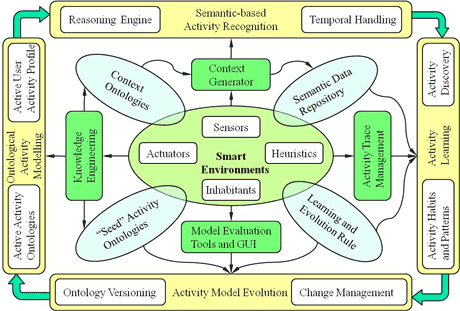

Figure 1: The ontology-based framework for activity modelling and recognition.

We have developed OntoFarm, an ontology-based framework for activity modelling, evolution and recognition, as shown in Figure 1. The central concept of OntoFarm is that extensive a priori and domain knowledge is available in AAL, and that formal knowledge modelling, representation and reasoning techniques can be used to develop a top-down approach to activity modelling and recognition. Ontologies serve as the unified conceptual backbone for modelling, representing and inferring Activities of Daily Living (ADL), user activity profiles and contexts. Ontology engineering, together with Semantic Web technologies such as ontology editors, languages, reasoners and semantic repositories, provides the enabling technologies for OntoFarm.

OntoFarm consists of four key tasks, namely Ontological Activity Modelling, Semantic-based Activity Recognition, Activity Learning and Activity Model Evolution, each undertaken by a number of supporting components. The goal of the Ontological Activity Modelling task is to create a number of formal knowledge models using the Ontology Engineering component. These models include the initial activity ontologies, user activity profiles, context ontologies, and learning and evolution rules. The initial activity models are stored in the “Seed” Activity Ontologies component and serve as the starting active activity models for activity recognition.
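To illustrate how seed ontologies could become the starting active models, here is a minimal sketch that uses plain Python data in place of OWL ontologies. It assumes, purely for illustration, that a seed concept is described by a parent and a list of objects it involves, and that a user activity profile records personal variants of generic activities which are added as subclasses. The concept names, `SEED_ONTOLOGY`, `USER_PROFILE` and `build_active_models` are hypothetical and not part of OntoFarm.

```python
from copy import deepcopy
from typing import Dict, List, Optional, TypedDict


class Concept(TypedDict):
    parent: Optional[str]  # parent concept in the activity hierarchy
    objects: List[str]     # objects this concept adds to its parent's


# Hypothetical seed activity ontology, as produced by ontology engineering.
SEED_ONTOLOGY: Dict[str, Concept] = {
    "ADL": {"parent": None, "objects": []},
    "MakeHotDrink": {"parent": "ADL", "objects": ["kettle", "cup"]},
    "MakeTea": {"parent": "MakeHotDrink", "objects": ["teabag"]},
}

# Hypothetical user activity profile: how one resident performs generic activities.
USER_PROFILE = {
    "user": "resident-1",
    "variants": {"MakeTea": ("MakeTeaWithMilk", ["milk"])},
}


def build_active_models(seed: Dict[str, Concept], profile: dict) -> Dict[str, Concept]:
    """Copy the seed ontology and add the user's personal variants as
    subclasses, so that the seed itself is never modified."""
    active = deepcopy(seed)
    for generic, (name, extra) in profile["variants"].items():
        if generic not in active:
            raise KeyError(f"profile refers to unknown activity {generic!r}")
        active[name] = {"parent": generic, "objects": list(extra)}
    return active


active = build_active_models(SEED_ONTOLOGY, USER_PROFILE)
print(sorted(active))  # ['ADL', 'MakeHotDrink', 'MakeTea', 'MakeTeaWithMilk']
```

Keeping the seed untouched means it can always be reused as the starting point for another user or for a later model version.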

The Semantic-based Activity Recognition task performs ontological classification using semantic subsumption reasoning to achieve continuous, progressive activity recognition in real time. It takes as input the active activity models and a context generated by the Context Generator and Context Ontologies components, and produces a sequence of activity traces that are held in the Activity Trace Management component. These traces may be labelled activities already modelled in the seed activity ontologies or unlabelled activities that do not exist in them.
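To make subsumption-based progressive recognition more concrete, the sketch below approximates it in plain Python rather than with an OWL reasoner. It assumes, purely for illustration, that each activity concept is defined by an optional required location and a set of objects that must be in use, that a child concept inherits all of its parent's constraints, and that the recognised activity is the most specific concept the current context satisfies. The concept names, the `ActivityConcept` class and the helper functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class ActivityConcept:
    """Hypothetical, simplified activity concept (not the OntoFarm ontology)."""
    name: str
    parent: Optional[str] = None
    location: Optional[str] = None          # required location, if any
    objects: FrozenSet[str] = frozenset()   # objects that must be in use


# A toy seed hierarchy: each child adds constraints to those of its parent.
SEED: Dict[str, ActivityConcept] = {
    c.name: c
    for c in [
        ActivityConcept("ADL"),
        ActivityConcept("KitchenADL", parent="ADL", location="kitchen"),
        ActivityConcept("MakeDrink", parent="KitchenADL", objects=frozenset({"cup"})),
        ActivityConcept("MakeHotDrink", parent="MakeDrink", objects=frozenset({"kettle"})),
        ActivityConcept("MakeTea", parent="MakeHotDrink", objects=frozenset({"teabag"})),
        ActivityConcept("MakeCoffee", parent="MakeHotDrink", objects=frozenset({"coffee"})),
    ]
}


def ancestors(name: str) -> List[str]:
    """Return the concept and all of its ancestors, most specific first."""
    chain: List[str] = []
    current: Optional[str] = name
    while current is not None:
        chain.append(current)
        current = SEED[current].parent
    return chain


def satisfied(name: str, location: str, objects: Set[str]) -> bool:
    """A context satisfies a concept if it meets its own and all inherited constraints."""
    for n in ancestors(name):
        c = SEED[n]
        if c.location is not None and c.location != location:
            return False
        if not c.objects <= objects:
            return False
    return True


def most_specific(location: str, objects: Set[str]) -> List[str]:
    """Satisfied concepts that have no satisfied descendant."""
    matches = {n for n in SEED if satisfied(n, location, objects)}
    return sorted(
        n for n in matches
        if not any(m != n and n in ancestors(m) for m in matches)
    )


# Progressive recognition: each sensor event narrows the result.
events = [("kitchen", "cup"), ("kitchen", "kettle"), ("kitchen", "teabag")]
seen: Set[str] = set()
for location, obj in events:
    seen.add(obj)
    print(obj, "->", most_specific(location, seen))
# cup -> ['MakeDrink']
# kettle -> ['MakeHotDrink']
# teabag -> ['MakeTea']
```

As more sensor events arrive, the result narrows from a coarse-grained activity to a fine-grained one. A fuller implementation would presumably rely on a description logic reasoner over OWL ontologies instead of the set-inclusion check used here.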

The purpose of the Activity Learning task is to identify activities a user performs that have not been modelled in the activity ontologies, and to learn the specific manner in which a user performs an activity. In this way, activity models can be learnt and adapted in an evolutionary way according to a user’s behaviour, addressing both the incompleteness and the inaccuracy of the models. The task is accomplished by reasoning over the activity traces and time series of sensor data using learning rules from the Learning and Evolution Rules component.
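As an illustration of what a learning rule over activity traces might look like, the following minimal sketch uses a simple frequency threshold, which is an assumption rather than one of OntoFarm's actual rules. It considers traces only and ignores the time series of sensor data. A trace is assumed to record the most specific concept reached, whether it matched a fully modelled activity, and the objects that concept does not explain. `Trace`, `learn_candidates` and `min_support` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Trace:
    """Hypothetical activity trace emitted by the recognition step."""
    recognised: str                # most specific concept the reasoner reached
    labelled: bool                 # True if it matched a fully modelled activity
    extra_objects: FrozenSet[str]  # objects used that the concept does not explain


Candidate = Tuple[str, FrozenSet[str], int]


def learn_candidates(traces: List[Trace], min_support: int = 3) -> List[Candidate]:
    """Hypothetical learning rule: an unlabelled pattern (recognised concept plus
    unexplained objects) seen at least `min_support` times becomes a candidate
    new activity, to be placed under the recognised concept."""
    counts = Counter((t.recognised, t.extra_objects) for t in traces if not t.labelled)
    found = [(parent, objs, n) for (parent, objs), n in counts.items() if n >= min_support]
    # Most frequent first; ties broken deterministically by name and objects.
    return sorted(found, key=lambda c: (-c[2], c[0], sorted(c[1])))


traces = [
    Trace("MakeTea", True, frozenset()),
    *[Trace("MakeHotDrink", False, frozenset({"cocoa"})) for _ in range(4)],
    Trace("MakeHotDrink", False, frozenset({"soup"})),
]
for parent, objs, n in learn_candidates(traces):
    print(f"candidate under {parent}: uses {sorted(objs)} ({n} traces)")
# candidate under MakeHotDrink: uses ['cocoa'] (4 traces)
```

The threshold keeps one-off anomalies, such as the single `soup` trace, from being proposed as new activities; the right value would depend on the deployment.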

The Activity Model Evolution task identifies the changes that need to be made to the previous version of the models based on the discovered and learnt activities. In addition, it recommends locations and labels for these new activities in the hierarchy of the ADL ontologies. Model evolution is supported by ontology change management and versioning techniques. Human intervention is required to review and validate changes to the activity models, and this is facilitated by the Model Evaluation Tools component. Once the activity models have been updated, the new version serves as the active activity models for future activity recognition.
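One way to picture the evolution step is the minimal sketch below, which assumes that a learnt activity is placed under the most specific existing concept whose inherited object constraints are all present, that its label is derived from the objects that concept does not explain, and that human review is reduced to a boolean `approved` flag. Approved changes produce a new model version while the previous one is left intact. `ModelVersion`, `recommend_change`, `apply_change` and the labelling heuristic are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Set


@dataclass
class ModelVersion:
    """Hypothetical versioned activity model: each concept's parent and its own objects."""
    version: int
    parents: Dict[str, Optional[str]]
    objects: Dict[str, FrozenSet[str]]


def inherited_objects(model: ModelVersion, concept: str) -> Set[str]:
    """All object constraints of a concept, including those inherited from ancestors."""
    objs: Set[str] = set()
    current: Optional[str] = concept
    while current is not None:
        objs |= model.objects[current]
        current = model.parents[current]
    return objs


def depth(model: ModelVersion, concept: str) -> int:
    """Number of ancestors above a concept (the root has depth 0)."""
    d, current = 0, model.parents[concept]
    while current is not None:
        d, current = d + 1, model.parents[current]
    return d


def recommend_change(model: ModelVersion, observed: Set[str]) -> dict:
    """Place a learnt activity under the most specific concept it satisfies
    and suggest a label from the objects that concept does not explain."""
    fits = [c for c in model.parents if inherited_objects(model, c) <= observed]
    parent = max(fits, key=lambda c: depth(model, c))  # ties: first in insertion order
    new_objects = frozenset(observed - inherited_objects(model, parent))
    label = parent + "With" + "".join(o.capitalize() for o in sorted(new_objects))
    return {"parent": parent, "label": label, "objects": new_objects}


def apply_change(model: ModelVersion, change: dict, approved: bool) -> ModelVersion:
    """Create a new model version only after a human reviewer has approved the change."""
    if not approved:
        return model
    parents = {**model.parents, change["label"]: change["parent"]}
    objects = {**model.objects, change["label"]: change["objects"]}
    return ModelVersion(model.version + 1, parents, objects)


v1 = ModelVersion(
    version=1,
    parents={"ADL": None, "MakeHotDrink": "ADL", "MakeTea": "MakeHotDrink"},
    objects={
        "ADL": frozenset(),
        "MakeHotDrink": frozenset({"kettle", "cup"}),
        "MakeTea": frozenset({"teabag"}),
    },
)
change = recommend_change(v1, {"kettle", "cup", "cocoa"})
print(change["parent"], change["label"])  # MakeHotDrink MakeHotDrinkWithCocoa
v2 = apply_change(v1, change, approved=True)
print(v2.version, v2.parents[change["label"]])  # 2 MakeHotDrink
```

Returning a fresh version instead of editing in place mirrors the idea of versioned ontologies: the previous models remain available for comparison or rollback until the reviewer's decision is applied.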

The four key tasks interact with each other and form an integral lifecycle that can iterate indefinitely. As such, OntoFarm enables and supports the following features: a top-down approach to activity modelling and recognition, incremental activity discovery and learning, adaptive activity model evolution, activity recognition at both coarse-grained and fine-grained levels, and increased accuracy of activity recognition.

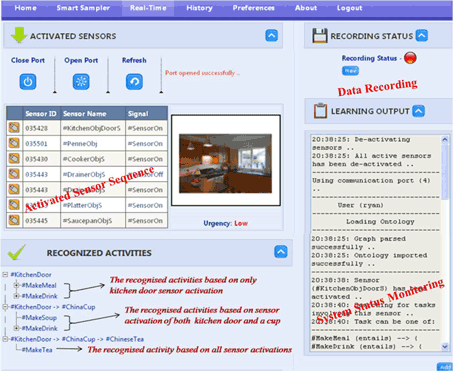

Figure 2: An OntoFarm-based assistive system.

To date, OntoFarm has been investigated in three separate projects, each addressing a specific aspect, i.e., semantic context management, knowledge-driven activity recognition, and activity learning and evolution. A prototype assistive system based on this framework has been developed and deployed in our dedicated smart environment lab, where experiments and evaluation have been performed (see Figure 2).

Future work will focus on technical extension and full-scale implementation. We shall examine more complex usage scenarios, such as the modelling and recognition of interleaved and concurrent activities. We shall also investigate temporal reasoning and uncertainty handling.

Link:

Smart Environments Research Group: http://serg.ulster.ac.uk

Please contact:

Liming Chen, Chris D. Nugent

University of Ulster, UK

Tel: +44 28 90368837/68330

E-mail: {l.chen, cd.nugent}@ulster.ac.uk