by Konstantinos Kapelonis, Sven Karsson, and Angelos Bilas

Tagged Procedure Calls (TPC) is a new approach to reducing the high programmer effort needed to achieve scalable execution. TPC targets architectures ranging from small embedded systems to large-scale multi-core processors and provides an efficient programming model that is easy to understand and exploit.

A huge opportunity and challenge we face today is the design of embedded systems that will support demanding application domains. Current technology trends in building such embedded systems advocate the use of parallel systems with (i) multi-core processors and (ii) tightly-coupled interconnects. However, exploiting parallelism has traditionally resulted in significant programmer effort. The programming model plays an important role in reducing this effort. The main challenges in the programming model are to expose mechanisms that need to be used directly by the programmer and to hide mechanisms that can be used transparently.

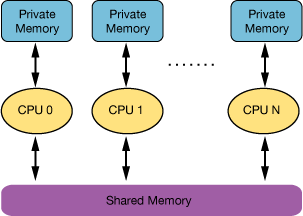

It is predicted that multi-core processors will use the increasing number of transistors more efficiently than traditional single-core processors and offer higher performance. However, multi-core processors require a large degree of parallelism that has traditionally demanded additional programming effort. There is a need for an intuitive programming model for multi-core processors.

Moreover, future interconnects will play an important role in such systems, since they connect all the cores. The programming model must therefore facilitate efficient use of the interconnect.

Tagged procedure calls (TPC) are a new programming model that redraws the balance between what the programmer needs to specify and what the architecture should provide. Furthermore, TPC aim at unifying intra- and inter-chip programming abstractions. Informally, the main points behind TPC are:

- TPC allow the programmer to augment procedure declarations and calls with tags that control the way these procedures will be executed.
- TPC require the programmer to specify parallelism through special, tagged, asynchronous procedure calls. TPC also provide primitives that block execution until certain procedure calls have completed (see Figure 1).
- TPC discourage the programmer from accessing global data within tagged procedures.
- TPC allow the programmer to use pointers to global data as arguments to procedures.

    void procedure(...){
        ...
    }

    void main()
    {
        /* Define a handle for function */
        TPC:DECL(hdl,procedure)
        ...
        /* call asynchronously procedure()
         * it changes argument s */
        TPC:CALL(hdl,procedure,s) c,i
        /* Continue execution */
        ...
        /* Use handle hdl to wait for
         * procedure() to complete */
        TPC:WAIT(hdl)
        /* s can now be accessed */
        ...
    }
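The listing above is pseudocode. One way to picture what a runtime could do behind `TPC:DECL`, `TPC:CALL` and `TPC:WAIT` is the minimal C sketch below, which maps each tagged call onto a POSIX thread. This mapping is an assumption made purely for illustration: the names `tpc_handle`, `tpc_call`, `tpc_wait`, `scale` and `run_example` are hypothetical, and the article does not state how the TPC runtime is actually implemented.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical handle type, standing in for the one declared by TPC:DECL. */
typedef struct {
    int started;        /* non-zero while an asynchronous call is in flight */
    pthread_t thread;   /* thread executing the call (assumed mapping) */
} tpc_handle;

/* Hypothetical stand-in for TPC:CALL: start fn(arg) asynchronously.
 * Returns 0 on success, or the pthread_create error code. */
int tpc_call(tpc_handle *hdl, void *(*fn)(void *), void *arg)
{
    int rc = pthread_create(&hdl->thread, NULL, fn, arg);
    hdl->started = (rc == 0);
    return rc;
}

/* Hypothetical stand-in for TPC:WAIT: block until the call has completed. */
int tpc_wait(tpc_handle *hdl)
{
    if (!hdl->started)
        return -1;
    hdl->started = 0;
    return pthread_join(hdl->thread, NULL);
}

/* Example procedure: it only touches data reached through its argument. */
void *scale(void *arg)
{
    int *s = arg;
    *s *= 2;
    return NULL;
}

/* Mirrors the structure of main() in the listing above. */
int run_example(void)
{
    tpc_handle hdl = {0};
    int s = 21;

    if (tpc_call(&hdl, scale, &s) != 0)
        return -1;
    /* The caller continues here; it must not touch s yet. */
    if (tpc_wait(&hdl) != 0)
        return -1;
    return s;   /* s can now be accessed */
}
```

Calling `run_example()` starts `scale` asynchronously, waits on the handle, and only then reads `s`, which follows the call, continue, wait pattern of the listing. A real TPC runtime would presumably choose where and how the call executes rather than always creating a thread.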

Tags placed on procedure calls by the programmer define an abstract representation of the required execution semantics (see Figure 1). The programmer (a) has to identify the available parallelism, (b) is urged to identify the data used during parallel computations, and (c) has to specify how parts of the code will execute, e.g. as atomic or serializable regions. However, the programmer need not worry about how this will be achieved. Finally, TPC aim at unifying intra- and inter-processor programming models and at dealing with the inherent heterogeneity of future embedded systems in a simple and intuitive manner.
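To make the idea of a tag selecting execution semantics more concrete, the following C sketch shows one conceivable way a runtime could honour an "atomic" tag, using a single global mutex. Everything here is an assumption for illustration: the tag names `TAG_NONE` and `TAG_ATOMIC`, the function `run_tagged`, and the choice of a global lock are hypothetical and are not taken from the TPC design.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical tags; the article does not list the actual tag set. */
typedef enum { TAG_NONE, TAG_ATOMIC } tpc_tag;

/* Assumed mechanism: one global lock serialises all TAG_ATOMIC calls. */
static pthread_mutex_t atomic_lock = PTHREAD_MUTEX_INITIALIZER;

/* Run fn(arg) under the semantics requested by the tag. */
void run_tagged(tpc_tag tag, void (*fn)(void *), void *arg)
{
    if (tag == TAG_ATOMIC) {
        pthread_mutex_lock(&atomic_lock);
        fn(arg);
        pthread_mutex_unlock(&atomic_lock);
    } else {
        fn(arg);
    }
}

/* Example procedure: increments the counter passed as its argument. */
void add_one(void *arg)
{
    long *counter = arg;
    *counter += 1;
}

enum { WORKERS = 4, CALLS_PER_WORKER = 10000 };

/* Each worker issues many atomic-tagged calls on the shared counter. */
void *worker(void *arg)
{
    for (int i = 0; i < CALLS_PER_WORKER; i++)
        run_tagged(TAG_ATOMIC, add_one, arg);
    return NULL;
}

/* Returns the final counter value, or -1 if a thread could not be created. */
long run_atomic_example(void)
{
    pthread_t t[WORKERS];
    long counter = 0;
    int started = 0;

    while (started < WORKERS &&
           pthread_create(&t[started], NULL, worker, &counter) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(t[i], NULL);
    return started == WORKERS ? counter : -1;
}
```

The caller only states the tag; whether atomicity is achieved with a lock, as assumed here, or by some other mechanism is left to the runtime, which is the division of labour the paragraph above describes.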

TPC encourage the programmer to specify parallelism and data used during parallel execution, but do not expose the underlying mechanisms for communication, synchronization, etc. We believe that this balance between what the programmer needs to specify and what can be done transparently will result in both efficient execution and reduced programmer effort on future embedded systems.

Our current work focuses on a prototype implementation of TPC on an embedded platform: an FPGA prototype with multiple cores (see Figure 2). Using this platform, we are currently experimenting with the semantics of TPC and porting a number of existing parallel applications. This effort will allow us to examine the cost introduced by the runtime system when it transparently deals with communication and synchronization issues.

This work has been partially supported by the European Commission in the context of the SARC project.

Please contact:

Angelos Bilas, FORTH-ICS and University of Crete, Greece

E-mail: bilas@ics.forth.gr

http://www.ics.forth.gr/~bilas/