by Attila Bekkvik Szentirmai (University of South-Eastern Norway)

A browser-based augmented reality research platform demonstrates how lightweight, on-device AI can function as scientific infrastructure. By lowering technical and ethical barriers, it enables fast, privacy-preserving experimentation with computational sensing in real-world settings and across diverse user groups.

Limitations of AR Tools for Universal Design Studies

Augmented reality (AR) offers new opportunities for studying how people perceive, comprehend, navigate, and interact in space [1][2]. For accessibility and universal design (UD) research in particular, the combination of computer vision, contextual awareness, and multimodal information delivery, including audio, visual, and tactile feedback, makes AR a promising platform for investigating how digital systems can adapt to users with diverse abilities, including blind and low-vision users [3].

Despite this potential, many existing AR tools are poorly suited for use as scientific instruments in accessibility or UD studies. Research-oriented AR development often depends on proprietary hardware, AR-specific development kits, and cloud-based services. These requirements increase costs and development time, limit reproducibility, and make it difficult to involve participants early. They also raise ethical concerns when video from personal environments must be transmitted to or stored by third parties. Together, these constraints slow scientific iteration and restrict studies in everyday settings.

SensAI as Scientific Infrastructure

The SensAI project [L1], developed at Universal Design Studio USN–Bø in 2025, addresses these challenges by demonstrating how lightweight, off-the-shelf AI models can serve as dependable components in scientific research workflows. Rather than introducing new AI methods, SensAI focuses on using existing models as infrastructure that supports rapid experimentation, field-based studies, and participatory research.

The prototype is designed for researchers who need fast and reliable tools for longitudinal studies, co-design sessions, and evaluations outside laboratory environments. SensAI enables computational sensing and multimodal information delivery to be tested directly in situ, without requiring software installation or transmission of camera data to external servers. This reduces setup overhead and simplifies ethical approval, because video from participants' environments is never sent to or stored by third parties.

What the System Does

The SensAI prototype runs entirely in modern web browsers using TensorFlow.js [L2] and the COCO-SSD [L3] object detection model. As the device camera captures the user’s environment, the system detects objects and announces them through short spoken cues, with an optional visual overlay that highlights the detected objects (see Fig. 1c).
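
The step from detections to a spoken cue can be sketched as follows. This is a minimal illustration, not SensAI's code: it assumes predictions in the `{ bbox, class, score }` shape documented for the COCO-SSD `detect()` method, while the score threshold, the cue length and the `buildCue` helper are hypothetical.

```typescript
// Shape of one prediction as returned by model.detect() in the
// @tensorflow-models/coco-ssd package: bbox is [x, y, width, height].
interface DetectedObject {
  bbox: [number, number, number, number];
  class: string;
  score: number;
}

// Hypothetical values chosen for illustration; not SensAI's actual settings.
const MIN_SCORE = 0.6; // ignore low-confidence detections
const MAX_CUES = 3; // keep each spoken cue short

// Builds a brief, screen-reader-style cue such as "person, chair" from one
// frame's detections: most confident first, each class named only once.
function buildCue(detections: DetectedObject[]): string {
  const labels: string[] = [];
  const byScore = [...detections].sort((a, b) => b.score - a.score);
  for (const d of byScore) {
    if (d.score < MIN_SCORE || labels.includes(d.class)) continue;
    labels.push(d.class);
    if (labels.length === MAX_CUES) break;
  }
  return labels.join(", ");
}
```

In a browser, the predictions would come from `await model.detect(video)` after `const model = await cocoSsd.load()`, and a non-empty cue could be spoken through the Web Speech API with `speechSynthesis.speak(new SpeechSynthesisUtterance(cue))`.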

The feedback style follows the synthetic speech commonly used by screen readers, so that the same system can be used by participants with different sensory abilities. Rather than generating long descriptions, SensAI delivers brief, audio-centred object cues. This moves AR information delivery beyond visual-first paradigms and supports research into alternative perceptual strategies.
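
When detection runs on every frame, one way to keep cues brief is to avoid re-announcing objects that were just spoken. The sketch below is an assumption about how this could be done, not a description of SensAI's implementation; the `CueThrottle` class and its cooldown value are hypothetical.

```typescript
// Hypothetical helper: suppresses repeated announcements of the same object
// class within a cooldown window, so spoken feedback stays brief.
class CueThrottle {
  private readonly cooldownMs: number;
  private readonly lastSpoken = new Map<string, number>();

  constructor(cooldownMs: number) {
    this.cooldownMs = cooldownMs;
  }

  // Returns the labels worth speaking at time nowMs and records them as spoken.
  filter(labels: string[], nowMs: number): string[] {
    const fresh: string[] = [];
    for (const label of labels) {
      const last = this.lastSpoken.get(label);
      if (last === undefined || nowMs - last >= this.cooldownMs) {
        fresh.push(label);
        this.lastSpoken.set(label, nowMs);
      }
    }
    return fresh;
  }
}
```

Calling `throttle.filter(labels, performance.now())` once per frame would announce a newly seen object immediately and repeat it only after the cooldown has elapsed, which trades completeness for the short, low-clutter feedback that screen-reader users are used to.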

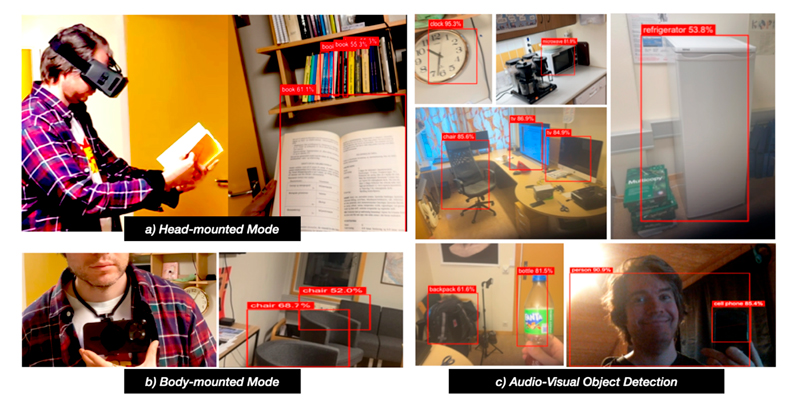

Figure 1: SensAI interaction modalities: (a) head-mounted AR mode, (b) body-mounted mode, and (c) multimodal audio–visual object detection.

Interaction Modalities

The prototype supports several interaction modalities:

- Handheld smartphone mode

- Body-mounted, hands-free mode with low physical effort (see Fig. 1b)

- Immersive head-mounted AR passthrough mode using cardboard accessories (see Fig. 1a)

- Stationary mode using a desktop or laptop webcam, or a tablet.

This flexibility reflects UD principles by accommodating different comfort levels and preferences for movement and device use. For researchers, it enables direct comparison of interaction styles across contexts using the same system, without building multiple prototypes.
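
Because all modalities share one web application, a study condition could be selected through the link given to participants. The sketch below illustrates that idea under stated assumptions: the `mode` and `overlay` query parameters, the `configFromUrl` helper and the per-modality camera choices are hypothetical, while `facingMode` is the standard media-capture constraint for choosing a user-facing or rear camera.

```typescript
type Modality = "handheld" | "body" | "head" | "stationary";

interface SessionConfig {
  modality: Modality;
  facingMode: "environment" | "user"; // rear camera vs. user-facing camera
  stereo: boolean; // side-by-side rendering for cardboard viewers
  overlay: boolean; // optional visual overlay on top of spoken cues
}

const MODALITIES: readonly Modality[] = ["handheld", "body", "head", "stationary"];

// Hypothetical: derive one study condition from the shared URL's query string.
function configFromUrl(url: string): SessionConfig {
  const params = new URL(url).searchParams;
  const requested = params.get("mode") ?? "handheld";
  const modality = (MODALITIES as readonly string[]).includes(requested)
    ? (requested as Modality)
    : "handheld"; // fall back to the default for unknown values
  return {
    modality,
    // Assumption: stationary setups use a user-facing webcam; a propped-up
    // tablet filming the scene would need "environment" instead.
    facingMode: modality === "stationary" ? "user" : "environment",
    stereo: modality === "head",
    overlay: params.get("overlay") !== "0",
  };
}
```

The resulting value could be passed to `navigator.mediaDevices.getUserMedia({ video: { facingMode: config.facingMode } })`, so that a link such as `https://example.org/sensai/?mode=body&overlay=0` (a placeholder address) would reproduce one condition on any participant's device.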

Why On-Device AI Matters for Scientific Research

One of SensAI’s key contributions is showing how small, on-device AI models can support scientific AR studies. Many AR research platforms rely on proprietary hardware and cloud-based processing. While powerful, these approaches increase development time, require specialised expertise, and complicate data management and ethical review.

By running COCO-SSD directly in the browser, the prototype offers properties that are directly relevant to scientific practice:

- Real-time audiovisual cues that support studies of perception and interaction

- Offline use once the model is loaded, enabling fieldwork in varied environments

- Strong privacy protection, since no images or personal information leave the device

- Instant deployment and replication through a simple URL share.

Together, these characteristics make the system suitable for exploratory studies, comparative experiments, and reproducible research beyond controlled laboratory settings.
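
The privacy and offline properties follow from where inference happens. The sketch below shows that data flow under an assumption: a hypothetical `runLocalLoop` function and a structural `LocalDetector` interface stand in for the loaded COCO-SSD model. Each frame goes only to an in-memory `detect()` call and only class labels are passed on, so the loop itself makes no network request.

```typescript
// Minimal prediction shape (a subset of COCO-SSD's { bbox, class, score }).
interface Prediction {
  class: string;
  score: number;
}

// Structural stand-in for the loaded COCO-SSD model: anything with an async
// detect() that runs inference on a frame held in memory.
interface LocalDetector<Frame> {
  detect(frame: Frame): Promise<Prediction[]>;
}

// Hypothetical loop: each frame is handed only to the local detector, and only
// the resulting class labels leave the loop. No frame is serialised or uploaded.
async function runLocalLoop<Frame>(
  detector: LocalDetector<Frame>,
  nextFrame: () => Frame,
  onLabels: (labels: string[]) => void,
  frameCount: number,
  minScore = 0.6,
): Promise<void> {
  for (let i = 0; i < frameCount; i++) {
    const predictions = await detector.detect(nextFrame());
    onLabels(predictions.filter((p) => p.score >= minScore).map((p) => p.class));
  }
}
```

In a browser, the frame source would typically be the camera's video element and the loop would be driven by `requestAnimationFrame` rather than a fixed frame count; the fixed count here only keeps the sketch finite.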

Implications for AI for Science

SensAI illustrates how AI can function as scientific infrastructure rather than as a standalone application. Lightweight, browser-native AI components allow researchers to focus on study design and scientific questions instead of system integration and deployment logistics. This approach is particularly relevant for accessibility and UD research, where early and repeated participant involvement is essential. More broadly, this work shows that progress in AI for science does not always depend on large-scale or cloud-based systems. Carefully chosen, openly available AI components can support trustworthy and reproducible research across disciplines.

Links:

[L1] SensAI: https://doi.org/10.5281/zenodo.17931940

[L2] TensorFlow.js: https://www.tensorflow.org/js

[L3] COCO-SSD: https://www.npmjs.com/package/@tensorflow-models/coco-ssd

References:

[1] M. Billinghurst, A. Clark, and G. Lee, “A survey of augmented reality,” Foundations and Trends® in Human–Computer Interaction, vol. 8, no. 2–3, pp. 73–272, 2015.

[2] A. B. Szentirmai, “Universally designed augmented reality as interface for artificial intelligence assisted decision-making in everyday life scenarios,” Studies in Health Technology and Informatics, vol. 320, pp. 469–476, 2024.

[3] A. B. Szentirmai, “Enhancing accessible reading for all with universally designed augmented reality: AReader,” in Proc. Int. Conf. on Human-Computer Interaction, pp. 282–300, Springer Nature, 2024.

Please contact:

Attila Bekkvik Szentirmai, Department of Business and IT, University of South-Eastern Norway, Norway