by George Hatzivasilis and Sotirios Ioannidis (Technical University of Crete) and François Hamon (Greencityzen)

Environmental sciences increasingly rely on AI foundation models to integrate heterogeneous data and support sustainable decision-making. Their real-world impact, however, depends on security, trustworthiness, and resilient deployment, as illustrated by secure smart watering systems.

Environmental Sciences in the Era of Foundation Models

Environmental systems are among the most complex domains addressed by contemporary computing. Phenomena such as water availability, soil conditions, air quality, and climate dynamics emerge from interactions across multiple spatial and temporal scales and are observed through highly heterogeneous data sources. These include in-situ sensor networks, satellite imagery, meteorological forecasts, and numerical simulations.

Traditional machine learning approaches, typically trained for a single task or dataset, struggle to cope with this complexity. In response, AI foundation models have emerged as a transformative paradigm. Trained on large, diverse datasets, foundation models learn general representations that can be adapted to multiple downstream tasks. In environmental sciences, this enables unified modelling of physical processes, contextual reasoning across data modalities, and efficient adaptation to new regions or conditions.

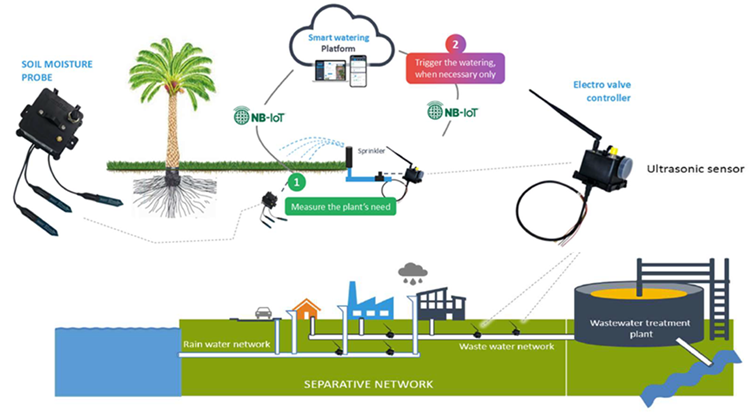

For applications such as water management (see Figure 1), foundation models enable irrigation strategies that go beyond fixed rules. Instead, decisions can be informed by learned relationships between soil moisture, weather evolution, seasonal patterns, and historical behaviour, improving both sustainability and resilience under increasing climatic variability.

Figure 1: Water management system.

Yet, while foundation models offer unprecedented analytical power, their deployment outside controlled research environments introduces new risks.

From Environmental Intelligence to Operational Systems

Environmental AI increasingly influences cyber-physical systems. Smart irrigation platforms connect sensors, AI-driven decision logic, and automated actuators in continuous feedback loops. In these settings, AI outputs directly trigger physical actions, such as opening or closing irrigation valves.

This transition exposes a critical gap. Many AI systems are evaluated primarily for predictive accuracy, with limited consideration of how they behave under adversarial conditions, component failures, or malicious interference. In operational environments, such omissions can lead to resource waste, service disruption, or safety risks.

To be viable in practice, environmental AI systems must therefore satisfy three fundamental properties:

- Security: to resist cyber threats across devices and software

- Trustworthiness: to ensure explainable and reliable decisions

- Resilience: to maintain functionality despite faults or attacks

These requirements are especially pressing for systems that rely on foundation models, which are often reused, updated, and integrated across multiple services.

Smart Watering as a Representative Use Case

Smart watering systems [L1] exemplify this convergence of environmental intelligence and operational risk. Distributed sensors monitor soil and environmental conditions, AI-based logic determines irrigation needs, and actuators execute watering actions automatically. When operating correctly, such systems reduce water consumption and improve plant health.

However, these infrastructures are also exposed. Compromised sensors may report misleading data, manipulated controllers can trigger excessive irrigation, and unauthorised access to AI components can distort decision-making in subtle but impactful ways. Addressing these risks requires systematic security assessment that explicitly accounts for AI-driven functionality.
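One simple defence against misleading sensor data is a plausibility check applied before any reading reaches the decision logic. The sketch below is illustrative only and is not part of SecOPERA or of the deployed system: the function name `flag_suspicious`, the physical bounds, and the deviation tolerance are all assumptions chosen for the example.

```python
from statistics import median

# Hypothetical physical bounds for volumetric soil moisture, in percent.
MIN_MOISTURE = 0.0
MAX_MOISTURE = 60.0
# Hypothetical tolerance: largest accepted distance from the peer median.
MAX_DEVIATION = 15.0


def flag_suspicious(readings: dict[str, float]) -> set[str]:
    """Return the IDs of sensors whose readings look implausible."""
    # Step 1: reject values outside the assumed physical range.
    suspicious = {
        sensor_id
        for sensor_id, value in readings.items()
        if not MIN_MOISTURE <= value <= MAX_MOISTURE
    }
    # Step 2: compare the remaining sensors with the median of their peers.
    plausible = [v for s, v in readings.items() if s not in suspicious]
    if len(plausible) >= 3:
        centre = median(plausible)
        suspicious |= {
            s
            for s, v in readings.items()
            if s not in suspicious and abs(v - centre) > MAX_DEVIATION
        }
    return suspicious


readings = {"a": 22.0, "b": 24.0, "c": 23.0, "d": 55.0, "e": -5.0}
flagged = flag_suspicious(readings)
```

Here sensor `e` is rejected for being out of range and sensor `d` for deviating strongly from its neighbours. A check of this kind only catches crude manipulation; coordinated tampering with several sensors would need additional evidence, such as weather data or device attestation.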

Layer-Aware Security Assessment with SecOPERA

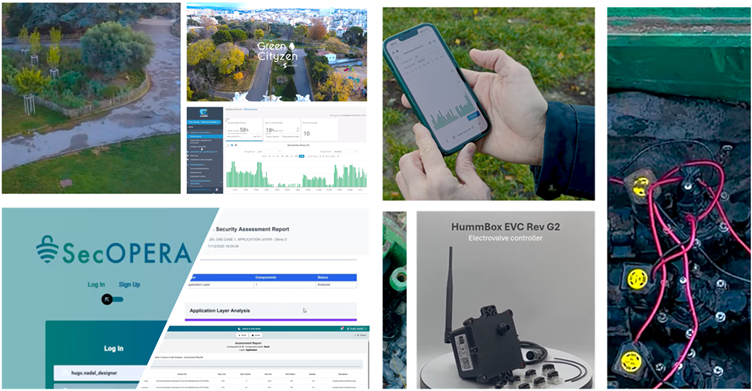

The SecOPERA framework [1] addresses this challenge by providing a layer-aware security assurance process that decomposes a system into device, network, application, and cognitive layers, enabling targeted assessment of each component (see Figure 2). This approach is particularly significant for AI-enabled environmental systems. Rather than treating machine learning models as opaque artefacts, SecOPERA explicitly recognises them as cognitive assets that must be assessed and protected.

Figure 2: SecOPERA use case of smart water management.
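The layer decomposition can be pictured as a small inventory in which every component is tagged with one of the four layers, so that each layer can be assessed with its own tools. The following is a minimal sketch under stated assumptions: the class names, the `group_by_layer` helper, and the component names are hypothetical and do not reflect SecOPERA's actual data model.

```python
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    """The four layers named in the text."""
    DEVICE = "device"
    NETWORK = "network"
    APPLICATION = "application"
    COGNITIVE = "cognitive"


@dataclass(frozen=True)
class Component:
    name: str
    layer: Layer


def group_by_layer(components: list[Component]) -> dict[Layer, list[str]]:
    """Group component names by layer so each layer can be assessed separately."""
    grouped: dict[Layer, list[str]] = {layer: [] for layer in Layer}
    for component in components:
        grouped[component.layer].append(component.name)
    return grouped


# Hypothetical inventory for a smart watering deployment.
inventory = [
    Component("soil-moisture-sensor", Layer.DEVICE),
    Component("valve-actuator", Layer.DEVICE),
    Component("gateway-link", Layer.NETWORK),
    Component("irrigation-dashboard", Layer.APPLICATION),
    Component("irrigation-model", Layer.COGNITIVE),
]
by_layer = group_by_layer(inventory)
```

Listing the AI model as an ordinary inventory entry in the cognitive layer reflects the point made above: it is an asset to be assessed like any other, not something left outside the scope of the assessment.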

The cognitive security assessment focuses on the integrity and protection of AI decision-making at inference time. Since many AI components are implemented as opaque deep neural networks, the assessment does not depend on exposing internal model parameters. Instead, it evaluates the inference-layer exposure of the AI system (e.g., serving APIs, edge deployments, or automated decision pipelines), analysing risks related to unauthorised access, model extraction/inversion attempts, adversarial manipulation, and abnormal query behaviour that could undermine reliable decision-making.
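Abnormal query behaviour, for example the high query volumes typical of model extraction attempts, can be observed at the serving interface without any access to model internals. The sketch below shows one possible approach, a per-client sliding-window counter. The class `QueryRateMonitor` and its thresholds are assumptions made for illustration, not a description of how SecOPERA performs this analysis.

```python
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flags clients that exceed a query budget within a sliding time window."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history: dict[str, deque] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query and return True if the client is over budget."""
        window = self._history[client_id]
        window.append(timestamp)
        # Discard timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_queries


# Hypothetical budget: at most 3 inference queries per 10 seconds per client.
monitor = QueryRateMonitor(max_queries=3, window_seconds=10.0)
burst = [monitor.record("client-1", t) for t in (0.0, 1.0, 2.0, 3.0)]
after_pause = monitor.record("client-1", 20.0)
```

In this run the fourth query of the burst is flagged, while a query arriving after a pause is not. Timestamps are passed in explicitly to keep the example deterministic; a deployed monitor would more likely read a monotonic clock and combine rate information with other signals.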

These analyses are integrated with broader security testing across the system, ensuring that AI-driven decisions are evaluated in the context of the physical processes they control.

From Assessment to Trustworthy Deployment

A defining strength of the SecOPERA approach is its closed-loop assurance workflow. Assessment results are collected in structured reports and evaluated against predefined security objectives [2]. When weaknesses are identified, developers can apply targeted hardening measures, such as reducing unnecessary dependencies, integrating security-assured modules, or reconfiguring exposed interfaces.

Crucially, AI components are re-assessed after adaptation, supporting iterative improvement rather than one-off validation. This process enables continuous monitoring and response across distributed environmental infrastructures and supports long-term operational trust.
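The assess, harden, and re-assess cycle described above can be summarised as a short loop. This is a simplified sketch under stated assumptions: the functions `unmet_objectives` and `assurance_loop`, the report format (a count of open findings per objective), and the simulated system are all hypothetical and stand in for the framework's real reports and hardening actions.

```python
from typing import Callable

# Assumed report format: objective name -> number of open findings.
Findings = dict[str, int]


def unmet_objectives(findings: Findings, objectives: dict[str, int]) -> list[str]:
    """Return objectives whose open findings exceed the allowed maximum."""
    return sorted(
        name
        for name, allowed in objectives.items()
        if findings.get(name, 0) > allowed
    )


def assurance_loop(
    assess: Callable[[], Findings],
    harden: Callable[[list[str]], None],
    objectives: dict[str, int],
    max_rounds: int = 5,
) -> tuple[int, list[str]]:
    """Assess, harden the gaps, and re-assess until objectives are met."""
    for round_number in range(1, max_rounds + 1):
        gaps = unmet_objectives(assess(), objectives)
        if not gaps:
            return round_number, []
        harden(gaps)
    # Rounds exhausted: report whatever is still open after the last hardening.
    return max_rounds, unmet_objectives(assess(), objectives)


# Simulated system in which each hardening step closes one finding per gap.
state = {"exposed_interfaces": 2, "unneeded_dependencies": 1}


def assess() -> Findings:
    return dict(state)


def harden(gaps: list[str]) -> None:
    for name in gaps:
        state[name] -= 1


objectives = {"exposed_interfaces": 0, "unneeded_dependencies": 0}
rounds, remaining = assurance_loop(assess, harden, objectives)
```

In the simulated run the objectives are met at the third assessment. The bound on the number of rounds is a design choice for the sketch: it keeps the loop from running indefinitely when a weakness cannot be removed automatically and has to be escalated to a developer.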

Conclusion

Foundation models are becoming indispensable to environmental sciences, enabling integrated reasoning across complex, multi-scale systems. Their societal value, however, depends on more than analytical performance. Without embedded security and trustworthiness, even the most advanced environmental AI cannot be responsibly deployed.

The smart watering use case demonstrates how foundation-model–enabled intelligence, combined with layer-aware security assessment and cognitive-layer analysis, can support sustainable and reliable environmental services. By treating AI models as security-relevant components rather than as unexamined black boxes, frameworks such as SecOPERA provide a practical path toward trustworthy environmental AI.

As environmental decision-making increasingly relies on automated intelligence, security-by-design will be essential: not an afterthought, but a foundation for responsible innovation.

Link:

[L1] https://youtu.be/jZrJWNAhx4M

References:

[1] A. Fournaris et al., “Providing Security Assurance & Hardening for Open Source Software/Hardware: The SecOPERA approach”, IEEE CAMAD 2023.

[2] M. Papoutsakis et al., “SESAME: Automated Security Assessment of Robots and Modern Multi-Robot Systems”, Electronics, MDPI, vol. 14, issue 5, article 923, pp. 1-27.

Please contact:

George Hatzivasilis

Technical University of Crete (TUC), Greece