by Susie Ruston McAleer (21c) and Spiros Borotis (Maggioli S.p.A)

THEMIS 5.0 is generating evidence, tools, and methods that help scientific communities evaluate when AI systems can be trusted. Through pilots in healthcare, maritime operations, and journalism, the project is exploring how trustworthiness assessments can help organizations adopt AI responsibly.

As AI becomes central to modelling, prediction, and analysis, work in domains of critical societal importance increasingly relies on systems that operate as collaborators rather than mere computational tools. But such reliance requires that AI models are used responsibly. Hence, organizations need to know: How accurate is the model? How stable is it under different conditions? Is it fair across groups? What risks might it amplify? Without rigorous answers, AI can introduce hidden errors into critical work processes, distort outputs, or produce misleading guidance.

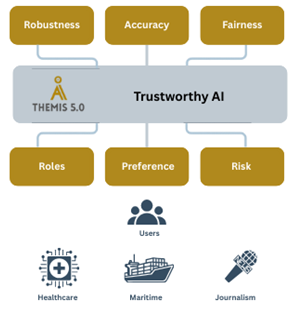

THEMIS 5.0 [L1] addresses this emerging challenge. Instead of treating trustworthiness as an abstract principle, the project investigates how scientists and practitioners understand trustworthiness and what it takes for decisions to be based on model outputs. To this end, the project has involved users and stakeholders in healthcare, port operations, and journalism in requirements identification, scenario development, and feedback on trustworthiness components. These activities produced empirical insights that illuminate what people need in order to use AI responsibly and confidently in their research and work. Figure 1 illustrates how technical performance and user priorities together shape trust in AI-supported decision making [1].

Figure 1: Elements of Trustworthy AI.

Protecting the Integrity of Decision Making with AI

Reliable performance is the primary concern for professionals using AI. During user and stakeholder engagement in THEMIS 5.0, clinicians stressed that high global accuracy is essential not only for patient safety but also to ensure that downstream research and decisions are based on stable predictions. Journalists highlighted how accuracy varies across languages, noting that cross-lingual inconsistencies can distort content analysis and misinformation research. Port operators similarly emphasised robustness, the ability of models to remain stable under changing conditions, as a prerequisite for reproducible simulation and planning.

In response, research in THEMIS 5.0 integrates tools that assess trustworthiness characteristics at the level of individual samples. This enables a tiered approach to accuracy assessment, in which assessments of global and group-specific accuracy are complemented by assessments of accuracy for individual samples.
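The tiered idea can be illustrated with a minimal Python sketch. It is not the THEMIS implementation: the function name `tiered_accuracy`, the use of languages as groups, and the 0.7 confidence threshold are hypothetical choices made for illustration. The sample-level tier simply flags predictions whose model confidence falls below the threshold; a dedicated misclassification detector, of the kind described in reference [1], could take the place of that rule.

```python
from collections import defaultdict


def tiered_accuracy(y_true, y_pred, groups, confidences, threshold=0.7):
    """Hypothetical sketch: global, group-level and sample-level accuracy views."""
    if not (len(y_true) == len(y_pred) == len(groups) == len(confidences)):
        raise ValueError("all inputs must have the same length")

    correct = [t == p for t, p in zip(y_true, y_pred)]

    # Tier 1: global accuracy over all samples.
    global_acc = sum(correct) / len(correct)

    # Tier 2: accuracy within each group (here, each language).
    hits, totals = defaultdict(int), defaultdict(int)
    for ok, g in zip(correct, groups):
        totals[g] += 1
        hits[g] += ok
    group_acc = {g: hits[g] / totals[g] for g in totals}

    # Tier 3: sample-level flags. Low confidence is an assumed, simple proxy
    # for "this prediction may be wrong"; a trained misclassification
    # detector could replace this rule.
    flagged = [i for i, c in enumerate(confidences) if c < threshold]

    return global_acc, group_acc, flagged


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 1]
    y_pred = [1, 0, 0, 1, 0, 1]
    groups = ["en", "en", "el", "el", "en", "el"]
    confidences = [0.95, 0.90, 0.55, 0.80, 0.60, 0.85]
    print(tiered_accuracy(y_true, y_pred, groups, confidences))
```

With this toy data the global accuracy is 5/6, the "en" group scores 1.0 while the "el" group scores 2/3, and samples 2 and 4 are flagged. Sample 4 is actually correct, which shows why a plain confidence threshold is only a crude stand-in for a proper sample-level assessment.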

Preventing Bias from Entering Decisions

Fairness is a critical requirement for trustworthy AI, as systematic differences in model behaviour across groups can distort data, misrepresent populations, and propagate bias into scientific research. In the feedback from users and stakeholders gathered through THEMIS 5.0, clinicians stressed the need to distinguish genuine medical variation from algorithmic bias, while port operators warned that preferential treatment of certain vessels or companies could undermine economic and logistics research. Journalists similarly noted that fairness failures in media AI can skew studies of online behaviour and misinformation.

To address these risks, THEMIS 5.0 is developing a Fairness Evaluator that explains the results of fairness assessments in clear, contextual terms rather than reporting isolated statistical metrics. This narrative approach helps users and stakeholders identify whether observed disparities arise from data, modelling choices, or contextual factors, supporting more accurate and representative analysis.
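As a rough illustration of the difference between reporting a metric and explaining it, the sketch below computes per-group positive-prediction rates (a demographic parity comparison) and wraps the largest gap in a plain-language sentence. The function names, the choice of demographic parity, and the 10% tolerance are assumptions for this example; the source does not describe which metrics or wording the Fairness Evaluator actually uses.

```python
def positive_rates(y_pred, groups):
    """Share of positive (1) predictions within each group."""
    counts = {}
    for p, g in zip(y_pred, groups):
        pos, tot = counts.get(g, (0, 0))
        counts[g] = (pos + (p == 1), tot + 1)
    return {g: pos / tot for g, (pos, tot) in counts.items()}


def explain_parity(y_pred, groups, tolerance=0.1):
    """Hypothetical sketch: turn a demographic parity gap into a short narrative."""
    rates = positive_rates(y_pred, groups)
    high = max(rates, key=rates.get)
    low = min(rates, key=rates.get)
    gap = rates[high] - rates[low]
    if gap <= tolerance:
        return f"Positive-prediction rates are similar across groups (largest gap {gap:.0%})."
    return (
        f"Group '{high}' receives positive predictions {rates[high]:.0%} of the time, "
        f"versus {rates[low]:.0%} for group '{low}' (gap {gap:.0%}, above the "
        f"{tolerance:.0%} tolerance). Before acting on this, check whether the gap "
        f"stems from the data, from modelling choices, or from contextual factors."
    )


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    grps = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(explain_parity(preds, grps))
```

Here group 'A' is predicted positive 75% of the time and group 'B' 25% of the time, so the returned text reports a 50% gap and points the user towards the possible sources of the disparity instead of leaving them with a bare number.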

Making the Use of AI Responsible and Transparent

The requirements for AI decision support are shaped by users’ and stakeholders’ roles, responsibilities, and values. THEMIS 5.0 has explored this through the concept of a Persona Analyser, which identifies what users and stakeholders in different domains prioritise when assessing AI trustworthiness. Engagement with users and stakeholders in the project shows that preference modelling can only support trustworthy AI if it is accessible and non-intrusive: users and stakeholders want clear, high-level summaries of their preferences, with the option to examine details on demand.

Drawing on these insights, the Persona Analyser communicates preferences in straightforward, qualitative terms (for example, that a researcher prioritises fairness over robustness), helping align trustworthiness evaluation with scientific responsibilities without burdening users. This helps keep AI evaluation transparent and appropriate to the context in which decisions are made.
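A toy version of such a qualitative summary might rank numeric priority weights and compare each criterion with the next one down. The weights, the 0.1 margin, and the function `summarise_preferences` are hypothetical; the source does not say how the Persona Analyser represents or elicits preferences.

```python
def summarise_preferences(persona, weights, margin=0.1):
    """Hypothetical sketch: describe ranked priority weights in plain language."""
    if len(weights) < 2:
        raise ValueError("need at least two criteria to compare")
    # Rank criteria from most to least important.
    ranked = sorted(weights.items(), key=lambda kv: kv[1], reverse=True)
    statements = []
    # Compare each criterion with the next one down the ranking; differences
    # smaller than the margin are reported as roughly equal priority.
    for (a, wa), (b, wb) in zip(ranked, ranked[1:]):
        if wa - wb >= margin:
            statements.append(f"prioritises {a} over {b}")
        else:
            statements.append(f"weighs {a} and {b} about equally")
    return f"The {persona} " + ", and ".join(statements) + "."


if __name__ == "__main__":
    weights = {"fairness": 0.5, "robustness": 0.3, "accuracy": 0.25}
    print(summarise_preferences("researcher", weights))
```

For the weights above this prints "The researcher prioritises fairness over robustness, and weighs robustness and accuracy about equally." The numeric weights stay available for anyone who wants the detail, while the default view is the short qualitative sentence.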

Making AI Failures Visible

Many AI-related risks are not visible through accuracy or fairness metrics alone. In THEMIS 5.0, the risk modelling tool Spyderisk [L2] helps users and stakeholders see how risks arise from the interaction of models, data, and real environments. The tool supports governance of the trustworthiness assessment process: it may be particularly valuable for pre-deployment checks and for validating deployed AI decision support, and it may also prove useful in operational settings. THEMIS is now refining Spyderisk with clearer narrative explanations so that teams can assess risks and mitigate threats before they influence evidence or decisions.

A Clearer Picture of Trustworthy AI

Across all domains, THEMIS shows that AI used for decision support must be subject to trustworthiness assessment not only for safe deployment, but also to safeguard the reliability of knowledge produced through AI-supported work. Inaccurate models can distort data, unfair models can misrepresent populations, fragile models undermine reproducibility, and unseen risks can compromise both decisions and the scientific insights derived from them. By providing interpretable trustworthiness assessments, THEMIS enables users and stakeholders to judge when AI outputs are reliable enough to inform decisions and analyses in practice. In this way, the project contributes to the development of human-centred trustworthy AI as a foundational capability for scientific and evidence-informed decision making, without compromising accuracy, fairness, or public trust [2].

THEMIS 5.0 has received funding from the EU Horizon Europe Research and Innovation programme under grant agreement No. 101121042, and from UKRI’s funding guarantee.

Links:

[L1] www.themis-trust.eu

[L2] www.it-innovation.soton.ac.uk/projects/spyderisk

References:

[1] P. V. Johnsen, et al., “SPARDACUS SafetyCage: A new misclassification detector,” in Proc. 6th Northern Lights Deep Learning Conf. (NLDL), Proc. Mach. Learn. Res., vol. 265, pp. 133–140, 2025. [Online]. Available: https://proceedings.mlr.press/v265/johnsen25a.html

[2] M. Fikardos, et al., “Trustworthiness optimisation process: A methodology for assessing and enhancing trust in AI systems,” Electronics, vol. 14, no. 7, Art. no. 1454, 2025, doi: 10.3390/electronics14071454.

Please contact:

Susie Ruston McAleer

21c, United Kingdom