by René Berndt, Hillary Farmer and Eva Eggeling (Fraunhofer Austria)

Improving the reviewer selection process for conferences and journals using AI and large language models (LLMs) can significantly enhance both efficiency and quality. AI-driven systems can analyse manuscripts and match them with potential reviewers based on their expertise, publication history, and prior reviewing experience. By leveraging semantic understanding rather than relying solely on manually assigned keywords, LLMs enable more accurate and nuanced reviewer–paper alignment.

The Importance of Publishing in Scientific Research

In scientific disciplines, and particularly within research contexts, the publication of results constitutes an essential aspect of scholarly activity. Sharing research findings with other researchers not only advances knowledge but also contributes to the scientific community’s collective understanding. The reputation of a researcher is largely determined by bibliometric data, such as the impact factor of the venues where their work is published and citation-based measures like the h-index (also called the Hirsch index or Hirsch number), which reflect how often the work is cited by other researchers.

One key process for ensuring the quality of scientific papers is peer review. In this process, a submitted manuscript is evaluated by other experts from the same field, commonly referred to as peers. Peer review is a fundamental component of scientific publishing, as it guarantees the rigor, validity, and credibility of scientific work. By relying on experts to assess research, the process upholds high standards and enhances the quality of published studies.

Challenges in the Peer Review Process

Effective peer review depends on the careful matching of manuscripts with qualified reviewers. This task is critical but time-consuming. With the continual increase in the number of scientific publications, finding suitable reviewers and managing the peer review process have become cumbersome and demand significant effort.

The task of finding the most suitable reviewer has been addressed in systems like the Toronto Paper Matching System (TPMS) [2], which relies on reviewers uploading their own publications. This works well for a closed pool of possible reviewers, usually the programme committee of a conference. But when additional reviewers from outside that pool are needed, such systems cannot suggest them. SARA (Services for Aiding Reviewer Assignment) suggests reviewers using only manuscript abstracts. Unlike TPMS, SARA uses LLM embeddings to compare the semantics of a submitted abstract with those of the abstracts in its database, without referencing full publication texts.
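The comparison step can be illustrated with a minimal sketch. It assumes that every abstract has already been converted into an embedding vector by some model, and it uses cosine similarity as a common way to compare embeddings; the article does not state which similarity measure SARA uses. The toy vectors, the publication identifiers, and the `cosine_similarity` and `rank_by_similarity` helpers are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a zero vector has no direction, so treat it as dissimilar
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, stored):
    """Return (publication_id, score) pairs, most similar first."""
    scored = [(pub_id, cosine_similarity(query, vec)) for pub_id, vec in stored.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Toy 3-dimensional embeddings (real embeddings have far more dimensions).
stored_embeddings = {
    "pub-a": [0.9, 0.1, 0.0],
    "pub-b": [0.0, 1.0, 0.0],
    "pub-c": [0.7, 0.7, 0.1],
}
submission_embedding = [1.0, 0.0, 0.0]

ranking = rank_by_similarity(submission_embedding, stored_embeddings)
for pub_id, score in ranking:
    print(f"{pub_id}: {score:.3f}")
```

Because only vectors are compared, no shared keywords are needed: two abstracts that describe the same topic in different words can still end up close to each other.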

Data backbone

The prototype system uses the monthly datasets from DBLP [L1], a large and openly accessible bibliographic database that focuses on computer science research. However, DBLP does not include abstracts. This is where the Initiative for Open Abstracts (I4OA) becomes relevant. I4OA is a collaboration among publishers, infrastructure organizations, librarians, researchers, and others who work together to promote free and open access to the abstracts of scholarly works, especially journal articles and book chapters. The aim is for abstracts to be available in trusted repositories that are open and machine-accessible, in particular by submitting them to Crossref [1].

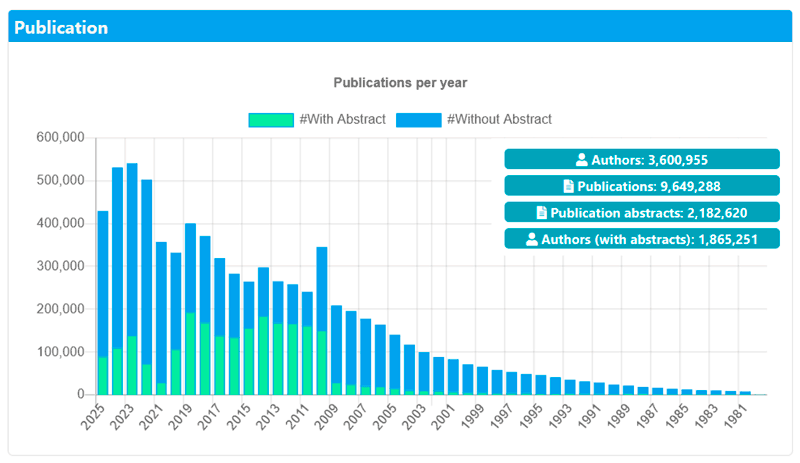

The data backbone of SARA contains over 2 million publications with abstracts, written by 1.8 million authors (see Figure 1).

Figure 1: Overview of the SARA data backbone based on DBLP and I4OA, showing the distribution of publications with available abstracts versus records without abstracts.
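One way to picture how the two sources combine is a join on a shared identifier. The sketch below assumes that bibliographic records and abstracts are matched by DOI, which the article does not state; the record layout, the sample data, and the `normalize_doi` and `attach_abstracts` helpers are hypothetical.

```python
def normalize_doi(doi):
    """DOIs are case-insensitive, so compare them in lower case."""
    return doi.strip().lower()


def attach_abstracts(bibliographic_records, abstracts_by_doi):
    """Split records into those with and without an available abstract."""
    lookup = {normalize_doi(doi): text for doi, text in abstracts_by_doi.items()}
    with_abstract, without_abstract = [], []
    for record in bibliographic_records:
        doi = record.get("doi")
        abstract = lookup.get(normalize_doi(doi)) if doi else None
        if abstract:
            # Copy the record so the input list is left unchanged.
            with_abstract.append({**record, "abstract": abstract})
        else:
            without_abstract.append(record)
    return with_abstract, without_abstract


# Illustrative records only; these are not real publications.
dblp_records = [
    {"title": "Paper One", "doi": "10.1000/EXAMPLE.1"},
    {"title": "Paper Two", "doi": "10.1000/example.2"},
    {"title": "Paper Three", "doi": None},
]
open_abstracts = {
    "10.1000/example.1": "An illustrative abstract about mesh simplification.",
}

with_abs, without_abs = attach_abstracts(dblp_records, open_abstracts)
print(len(with_abs), "with abstract,", len(without_abs), "without")
```

Only the records that end up with an abstract can take part in the semantic comparison, which is why the share of publications with open abstracts matters for coverage.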

Integration with SRMv2

SARA has been integrated into SRMv2 [L2], the submission and review system of Eurographics, a professional association founded in 1980 that promotes research, development, and education in computer graphics across Europe and internationally.

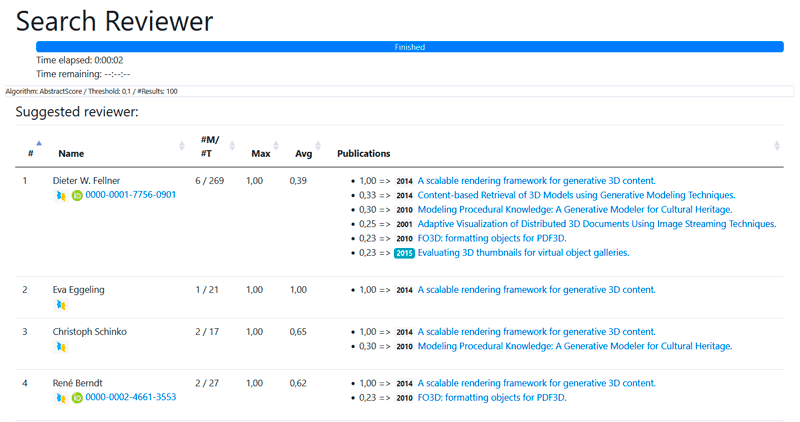

The functionality is accessible via a single link, which sends the abstract to SARA. The resulting output is a list of individuals qualified to serve as reviewers for the submission. Various metrics are provided to assist in identifying the most suitable candidates according to different criteria (see Figure 2).

Figure 2: Example output of the SARA reviewer identification system integrated into SRMv2, presenting ranked reviewer candidates with similarity metrics, publication statistics, and links to ORCID profiles and DOI-referenced publications.

For each candidate, the output provides several details, including the name, DBLP link, and ORCID page, together with the following metrics:

- #M: The number of the person's matching publications above the threshold

- T: The total number of publications

- Max: The maximum similarity score of the matching publications

- Avg: The average of the similarity scores of the various publications

The publication column shows matched titles and years by similarity score, with each title linked to its official repository, typically via DOI.

This information assists editors or conference chairs in selecting appropriate reviewers, as these metrics indicate the reviewer’s level of expertise within a particular field (number of relevant publications) and their overall seniority (total number of publications). For example, a candidate who closely matches the abstract but has published only one or two papers may be less suitable than another with over thirty publications, including ten directly related to the topic of the abstract.
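The metrics and the example above can be sketched as follows. This is an illustration under stated assumptions, not SARA's implementation: the threshold value, the inclusive comparison (`>=`), the choice to average only the matching publications, the sort order, and all names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CandidateMetrics:
    name: str
    matching: int     # "#M": publications at or above the threshold
    total: int        # "T": all publications of the person
    max_score: float  # "Max": best similarity among the matches
    avg_score: float  # "Avg": mean similarity of the matches (assumption)


def summarize_candidate(name, scores, total_publications, threshold):
    """Aggregate per-publication similarity scores into the four metrics."""
    matches = [s for s in scores if s >= threshold]
    if not matches:
        return None  # no publication is similar enough to the abstract
    return CandidateMetrics(
        name=name,
        matching=len(matches),
        total=total_publications,
        max_score=max(matches),
        avg_score=sum(matches) / len(matches),
    )


THRESHOLD = 0.75  # hypothetical cut-off

candidates = [
    summarize_candidate("Candidate A", [0.91], 2, THRESHOLD),
    summarize_candidate("Candidate B", [0.82, 0.78, 0.80, 0.40], 30, THRESHOLD),
]
candidates = [c for c in candidates if c is not None]

# One possible ordering: more matching publications first, then best score.
ranked = sorted(candidates, key=lambda c: (c.matching, c.max_score), reverse=True)
for c in ranked:
    print(f"{c.name}: #M={c.matching} T={c.total} Max={c.max_score:.2f} Avg={c.avg_score:.2f}")
```

With this ordering, Candidate B is listed ahead of Candidate A even though A has the single highest score, which mirrors the reasoning in the example above. Any such ordering is only a starting point; the final choice remains with the editor or conference chair.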

Links:

[L1] https://dblp.org/

[L2] https://srmv2.eg.org

References:

[1] I4OA: Initiative for Open Abstracts, [Online]. Available: https://i4oa.org/. Accessed: Oct. 12, 2025.

[2] L. Charlin and R. Zemel, “The Toronto Paper Matching System: An automated paper-reviewer assignment system,” May 2013. [Online]. Available: https://www.cs.toronto.edu/~lcharlin/papers/tpms.pdf. Accessed: Nov. 19, 2025.

Please contact:

René Berndt, Fraunhofer Austria