by Jean-Baptiste Burnet and Olivier Parisot (LIST)

Cyanobacteria blooms pose growing risks to drinking water supplies and recreational waters, a challenge intensified by climate change and inadequately captured by current regulatory monitoring strategies. Our study demonstrates how low-cost, ground-based RGB cameras combined with machine learning enable near real-time detection of blooms. By deploying automated photo traps and YOLO-based detection models at a major freshwater reservoir in Luxembourg, we open new pathways for early warning systems and improved understanding of harmful cyanobacteria bloom dynamics.

Blooms of cyanobacteria (also called blue-green algae) in freshwater bodies worldwide threaten the health of bathers, domestic animals and livestock because of the toxins these microorganisms release. Fuelled by climate change, this phenomenon further undermines the environmental health of aquatic ecosystems. The highly fluctuating dynamics of harmful cyanobacteria blooms (CyanoHABs) require representative monitoring frameworks, which the regulatory monitoring strategies currently in place can hardly provide. New tools are therefore needed to improve our understanding of bloom dynamics and to provide early warning systems that allow managers to react proactively to such events, both for safe drinking water supply and for beach management [1]. Ground-based remote sensing is a promising alternative to satellite imagery, which is limited by cloud cover and revisit times.

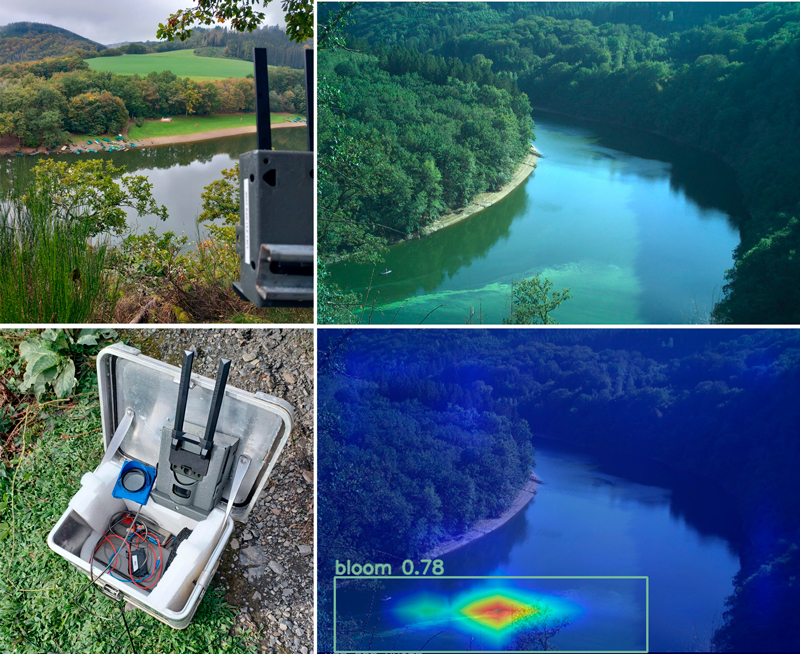

At LIST, we have implemented a fully automated in situ image acquisition workflow to detect blooms in near real time (Fig. 1). The study was performed on the Upper-Sûre Lake, Luxembourg's main drinking water supply and a major recreational area that experiences cyanobacteria blooms every year in late summer. Since 2021, two sites have been equipped with commercial photo traps (Hyperfire 2, Reconyx). RGB pictures were taken hourly from June to November and sent in real time to the researchers at LIST via the standard Simple Mail Transfer Protocol (SMTP).
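
The article does not describe how the e-mailed pictures are handled on the receiving side. As a minimal sketch, assuming each camera e-mail carries its pictures as MIME attachments with an `image/*` content type and that the raw messages have already been fetched from the mailbox, Python's standard `email` package can extract the images. The function name `extract_images` is hypothetical, not part of the authors' workflow.

```python
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser


def extract_images(raw_message: bytes) -> list[tuple[str, bytes]]:
    """Return (filename, data) for each image attachment of a raw e-mail.

    Hypothetical helper: assumes the camera attaches its pictures as MIME
    parts with an image/* content type (e.g. image/jpeg).
    """
    msg = BytesParser(policy=policy.default).parsebytes(raw_message)
    images = []
    for part in msg.iter_attachments():
        if part.get_content_maintype() == "image":
            # get_content() returns the decoded bytes for non-text parts
            images.append((part.get_filename() or "unnamed", part.get_content()))
    return images


if __name__ == "__main__":
    # Synthetic stand-in for a camera e-mail: short text body + one JPEG.
    demo = EmailMessage()
    demo["Subject"] = "Hourly picture"
    demo.set_content("Automatic upload")
    demo.add_attachment(b"\xff\xd8\xff\xe0 not a real jpeg",
                        maintype="image", subtype="jpeg", filename="site1.jpg")
    for name, data in extract_images(demo.as_bytes()):
        print(name, len(data), "bytes")
```

Filtering on the content type rather than the file extension keeps the sketch robust to cameras that add non-image attachments (for example status logs).
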

Figure 1: Harmful cyanobacteria blooms are detected in the Upper-Sûre Lake, Luxembourg, in near real-time using machine learning combined with ground-based remote sensing.

Source: LIST (© LIST).

For automatic bloom detection using machine learning, we adapted the classic object detection workflow. The first phase consisted of creating an annotated dataset containing images and the positions of blooms (in the form of bounding boxes). The original images, taken at different stations and at different times of the year, were cut into 640 × 640-pixel patches. Drawing on our field expertise, we then meticulously edited the bounding boxes using the MakeSense software [L1]. In the end, we preselected a set of 1,061 images, divided into 599 images for training, 181 for validation and 281 for testing. We made sure to retain enough ‘background’ images (without blooms) for validation and testing to reduce obvious false positives, particularly those caused by sunlight reflecting off the water or by mirror effects of trees. The second phase consisted of training a detection model on these data using the YOLO (You Only Look Once) architecture. Of the different YOLO versions, we chose version 7 [2] because it offers a highly effective implementation of the training and evaluation pipeline, as well as a software licence that is standard in an academic context (GPLv3). The YOLOv7 model was trained on the annotated images by transfer learning, i.e., starting from the default pre-trained YOLOv7 weights. Finally, we performed multiple training runs (500 epochs each, with different model sizes, and with or without training-time data augmentation). Considering the overall metrics, the best-performing model relies on the tiny architecture (7M parameters; precision = 0.531, recall = 0.352, mAP50 = 0.325, where mAP50 is the mean average precision at an intersection-over-union threshold of 0.5). However, two models based on the normal architecture (37M parameters) achieve either higher precision (0.623) or higher recall (0.461).
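
The article does not say how the image borders were handled when cutting pictures into 640 × 640 patches. A minimal sketch of the patch-cutting step, assuming images are NumPy arrays of shape (height, width, channels) and that the last tile in each direction is shifted inward so every patch is exactly 640 × 640 (one possible choice, not necessarily the authors'); `tile_offsets` and `tile_image` are hypothetical names.

```python
import numpy as np


def tile_offsets(length: int, tile: int) -> list[int]:
    """Start offsets covering [0, length) with windows of size `tile`.

    The last window is shifted back so that it ends exactly at `length`
    (it may overlap the previous window). Requires length >= tile.
    """
    if length < tile:
        raise ValueError("image smaller than tile size")
    offsets = list(range(0, length - tile + 1, tile))
    if offsets[-1] + tile < length:
        offsets.append(length - tile)
    return offsets


def tile_image(image: np.ndarray, tile: int = 640) -> list[tuple[int, int, np.ndarray]]:
    """Cut an (H, W, C) image into tile x tile patches.

    Returns (y0, x0, patch) triples so that each patch, and any detection
    made on it, can be mapped back onto the full picture.
    """
    h, w = image.shape[:2]
    return [
        (y0, x0, image[y0:y0 + tile, x0:x0 + tile])
        for y0 in tile_offsets(h, tile)
        for x0 in tile_offsets(w, tile)
    ]
```

For an illustrative 1920 × 1080 frame, this yields 3 × 2 = 6 patches, with the bottom row starting at y = 440 and overlapping the top row. Keeping the offsets makes it straightforward to stitch per-patch detections back into one annotated picture.
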
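
YOLOv7's training pipeline reads one text file per image, with one line per box: a class index followed by the box centre, width and height, each normalised by the image size. Annotation tools such as MakeSense can export this format directly, so the sketch below only illustrates the conversion from pixel corner coordinates. The single class `0` standing for "bloom" and the name `to_yolo_line` are hypothetical.

```python
def to_yolo_line(box: tuple[float, float, float, float],
                 img_w: int, img_h: int, class_id: int = 0) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    Output: "class x_center y_center width height", with all coordinates
    normalised to [0, 1] by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    xc = (x_min + x_max) / 2 / img_w   # normalised box centre (x)
    yc = (y_min + y_max) / 2 / img_h   # normalised box centre (y)
    bw = (x_max - x_min) / img_w       # normalised box width
    bh = (y_max - y_min) / img_h       # normalised box height
    return f"{class_id} {xc:.6f} {yc:.6f} {bw:.6f} {bh:.6f}"
```

For example, a bloom box from (160, 320) to (480, 640) on a 640 × 640 patch becomes `0 0.500000 0.750000 0.500000 0.500000`. A background patch without any bloom would simply get an empty label file.
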
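
The article reports a 599/181/281 split of the 1,061 images but not how images were assigned to each subset. The sketch below assumes a reproducible uniform random split with a fixed seed; `split_dataset` is a hypothetical name. A purely random split does not guarantee that each subset contains enough background images, so stratifying on "contains bloom" versus "background" would be a natural refinement.

```python
import random


def split_dataset(items: list[str], n_train: int, n_val: int, seed: int = 0):
    """Shuffle reproducibly and split into train / validation / test lists.

    Whatever remains after the first n_train + n_val items goes to the test set.
    """
    if n_train + n_val > len(items):
        raise ValueError("not enough items for the requested split")
    shuffled = sorted(items)              # fixed starting order, independent of input order
    random.Random(seed).shuffle(shuffled) # seeded shuffle -> same split on every run
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test
```

Called with 1,061 file names, `n_train=599` and `n_val=181`, it leaves 281 images for testing, matching the counts reported above.
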
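
Precision, recall and mAP50 all rest on matching predicted boxes to annotated ones at an intersection-over-union (IoU) threshold of 0.5. The sketch below shows a simplified greedy one-to-one matching for a single image and a single class, assuming predictions are already sorted by decreasing confidence. It is not YOLOv7's own evaluation code, and mAP50 additionally summarises precision over the whole range of confidence thresholds.

```python
Box = tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def precision_recall(preds: list[Box], truths: list[Box], thr: float = 0.5):
    """Greedy one-to-one matching of predictions to ground-truth boxes.

    A prediction is a true positive if it overlaps a not-yet-matched
    ground-truth box with IoU >= thr; otherwise it is a false positive.
    Unmatched ground-truth boxes are false negatives.
    """
    matched: set[int] = set()
    tp = 0
    for p in preds:
        best, best_iou = None, thr
        for i, t in enumerate(truths):
            if i in matched:
                continue
            v = iou(p, t)
            if v >= best_iou:
                best, best_iou = i, v
        if best is not None:
            matched.add(best)
            tp += 1
    fp = len(preds) - tp
    fn = len(truths) - tp
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if truths else 0.0
    return precision, recall
```

Because matching is one-to-one, a duplicate detection of an already-matched bloom counts as a false positive, and so do detections triggered by sun glint or tree reflections, which is why background images matter for precision.
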
We then used Grad-CAM [L2], an explainable AI technique, to visualise the image regions that most strongly influence the model’s predictions, which provided valuable insights into false positive and false negative cases. Other alternatives are currently being evaluated, including more recent versions of YOLO (v8, v11 and v12), RT-DETR (a transformer-based real-time object detector) and older architectures such as Faster R-CNN (a two-stage detector based on region proposal networks).
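
Grad-CAM weights each channel of a chosen convolutional feature map by the spatial average of the gradient of a target score with respect to that map, sums the weighted channels and keeps only the positive part. The pytorch-grad-cam library [L2] takes care of hooking the network and collecting these tensors; the NumPy sketch below assumes the activations and gradients for one image have already been extracted, each with shape (channels, height, width), and shows only the core arithmetic. How to define the target score for a detector such as YOLO is a separate design choice not covered here, and `grad_cam` is a hypothetical name.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Core Grad-CAM map from one layer's activations and gradients.

    activations, gradients: arrays of shape (C, H, W) for a single image,
    where gradients = d(target score) / d(activations).
    Returns an (H, W) map rescaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    weights = gradients.mean(axis=(1, 2))             # one weight per channel
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU: keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The resulting low-resolution map would typically be upsampled to the 640 × 640 patch and overlaid on the picture, so that a false positive can be traced back to, for instance, a reflection rather than a bloom.
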

In 2024 and 2025, additional photo traps were equipped with transmission systems, and thousands of pictures taken during both seasons were analysed manually by LIST researchers. These pictures will now be used to further train the selected YOLO models and improve their performance. Overall, these datasets enable a better understanding of the fine-scale spatial and temporal dynamics of cyanobacteria blooms and will be crucial inputs for the development of forecasting models.

Links:

[L1] https://www.makesense.ai/

[L2] https://github.com/jacobgil/pytorch-grad-cam

References:

[1] H. Almuhtaram, et al., “State of knowledge on early warning tools for cyanobacteria detection,” Ecol. Indic., vol. 133, Art. no. 108442, 2021.

[2] C.-Y. Wang, A. Bochkovskiy, and H.-Y. M. Liao, “YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors,” arXiv preprint, arXiv:2207.02696, 2022.

Please contact:

Jean-Baptiste Burnet, Luxembourg Institute of Science and Technology (LIST), Luxembourg