by Christoph Quix, Thomas Berlage and Matthias Jarke

Biomedical research applies data-intensive methods for drug discovery, such as high-content analysis, in which a huge number of substances are investigated in a fully automated way. The increasing amount of data generated by such methods poses a major challenge for the integration and detailed analysis of the data: to gain new insights, the data must be linked to other datasets from previous studies, similar experiments, or external data sources. Owing to the heterogeneity and complexity of research data, however, their integration is a long and tedious task. The HUMIT project aims to develop an innovative methodology for the integration of life science data that follows an interactive and incremental approach.

Life science research institutes conduct high-content experiments to investigate new active substances or to detect the causes of diseases such as Alzheimer’s or Parkinson’s. Data from past experiments may contain valuable information that could also help answer current research questions. Furthermore, internal data needs to be compared with datasets provided by research institutes around the world in order to validate experimental results. Ideally, all data would be integrated into a comprehensive database with a well-designed integrated schema that covers all aspects of research data. In practice, however, such a solution cannot be built, because schemas and analysis requirements change frequently in a research environment: an integrated schema for scientific data cannot be constructed a priori, since the detailed structure of the data to be collected in future experiments is not known in advance. Thus, a very flexible and adaptable data management system is required, in which data can be stored irrespective of its structure.

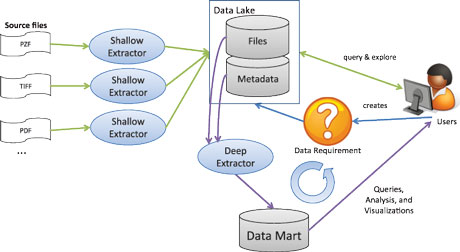

Figure 1: The HUMIT system.

The core idea of the HUMIT project is illustrated in Figure 1. Data management in life science is often file-based, as lab devices (e.g., an automated microscope or a reader device) generate files as their main output. Further data processing is often done in scripting languages such as R or MATLAB, which read data from and write data to files. Therefore, the HUMIT system currently targets files as data sources, but database systems and other types of data sources can be integrated later without changing the basic architecture. In a first step, a shallow extraction will be performed on the input files: the metadata of the source files is extracted and loaded into the data lake. “Data lake” is a recent buzzword for systems in which data from the sources is copied to a repository while retaining its original structure [3]. HUMIT applies this idea as well: the source files will be copied to the repository and stored with a bidirectional link to their metadata. The objects in the repository are immutable and only an append operation will be supported (no updates or deletes), since reproducibility and traceability are important requirements for data management in life science.
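The combination of shallow extraction, an append-only repository, and bidirectional links between stored files and their metadata can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the HUMIT implementation: the names `AppendOnlyLake` and `MetadataRecord`, the choice of addressing stored objects by their SHA-256 hash, and the in-memory metadata index are all hypothetical.

```python
from __future__ import annotations

import hashlib
import uuid
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class MetadataRecord:
    """Shallow metadata for one ingested source file (illustrative fields)."""

    record_id: str
    source_path: str
    file_type: str
    size_bytes: int
    object_key: str  # SHA-256 of the content; link metadata -> stored object


class AppendOnlyLake:
    """Hypothetical data lake: files are only appended, never updated or deleted."""

    def __init__(self, root: Path) -> None:
        self.objects = root / "objects"
        self.objects.mkdir(parents=True, exist_ok=True)
        self._records: dict[str, MetadataRecord] = {}
        # Reverse link: stored object -> all metadata records pointing to it.
        self._by_object: dict[str, list[str]] = {}

    def ingest(self, source: Path) -> MetadataRecord:
        """Shallow extraction: store the file content unchanged, record basic metadata."""
        data = source.read_bytes()
        digest = hashlib.sha256(data).hexdigest()
        target = self.objects / digest
        if not target.exists():  # identical content is stored only once
            target.write_bytes(data)
        record = MetadataRecord(
            record_id=str(uuid.uuid4()),
            source_path=str(source),
            file_type=source.suffix.lstrip(".").lower(),
            size_bytes=len(data),
            object_key=digest,
        )
        self._records[record.record_id] = record
        self._by_object.setdefault(digest, []).append(record.record_id)
        return record

    def metadata_for(self, object_key: str) -> list[MetadataRecord]:
        """Follow the link from a stored object back to its metadata."""
        return [self._records[rid] for rid in self._by_object.get(object_key, [])]

    def read_object(self, record: MetadataRecord) -> bytes:
        """Follow the link from metadata to the stored object."""
        return (self.objects / record.object_key).read_bytes()
```

Because objects are keyed by a hash of their content, ingesting the same file twice stores a single object but two metadata records, and the class deliberately offers no update or delete method, mirroring the append-only requirement.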

The metadata will be provided to the user for exploration. By browsing the metadata repository, the user can see what kind of data is available and incrementally construct a data mart that collects the data for her specific requirements. Data integration takes place at this stage. This type of integration is referred to as “pay-as-you-go integration” [2], since only the part of the data lake required for a particular application is integrated, and the integration is done while the user is exploring the data. The extraction of detailed data from the source files (known as “deep extraction”) will also take place at this stage if the data is required in the data mart. While constructing the data mart, the user will be supported in defining data integration mappings by (semi-)automatic tools (e.g., for schema matching or entity resolution). Existing mappings can also be exploited to define new mappings.
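To make the idea of (semi-)automatic mapping support more concrete, the following sketch proposes correspondences between the columns of a source file and the attributes of a data mart. It is a hypothetical illustration only: the function names, the 0.7 threshold, and the use of plain name similarity via Python's `difflib.SequenceMatcher` are simple stand-ins for the more elaborate schema-matching tools mentioned above. Previously confirmed mappings are reused before any new match is computed.

```python
from __future__ import annotations

from difflib import SequenceMatcher


def normalize(name: str) -> str:
    """Lower-case a name and drop separators, so 'Cell_Count' equals 'cell count'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def suggest_mappings(
    source_columns: list[str],
    mart_attributes: list[str],
    known: dict[str, str],
    threshold: float = 0.7,
) -> dict[str, tuple[str, float]]:
    """Propose source-column -> data-mart-attribute correspondences.

    Mappings confirmed earlier (``known``) are reused with score 1.0; every
    other column is matched to the most similar attribute name, if similar enough.
    """
    suggestions: dict[str, tuple[str, float]] = {}
    for column in source_columns:
        if column in known:  # exploit an existing mapping
            suggestions[column] = (known[column], 1.0)
            continue
        best_attr, best_score = None, 0.0
        for attr in mart_attributes:
            score = SequenceMatcher(None, normalize(column), normalize(attr)).ratio()
            if score > best_score:
                best_attr, best_score = attr, score
        if best_attr is not None and best_score >= threshold:
            suggestions[column] = (best_attr, round(best_score, 2))
    return suggestions
```

For example, `suggest_mappings(["Well_ID", "CellCount", "cmpd"], ["well id", "cell count", "compound"], known={"cmpd": "compound"})` maps all three columns with a score of 1.0, the last one by reusing the known mapping. In an interactive setting, the user would confirm or reject each suggestion, and confirmed ones would be added to `known` for later reuse.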

The requirements analysis for the project has been completed and a first version of the metadata management system is available. Metadata can be extracted from various file types and is stored in a generic metamodel, which is based on our experiences in developing the GeRoMe metamodel [1]. The framework for metadata extraction is extensible and can be easily adapted to new file types. The design of the interactive data exploration component is currently underway and will be one of the core components of the system.
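The extensibility of a metadata extraction framework of this kind can be illustrated with a registry of extractors keyed by file extension. The sketch below is hypothetical: the `register` decorator, the flat property dictionary used as a simplified stand-in for a generic metamodel, and the two example extractors are assumptions, not the project's actual design. Files of an unknown type still receive basic metadata.

```python
from __future__ import annotations

import csv
import json
from pathlib import Path
from typing import Callable

# Simplified stand-in for a generic metamodel: every extractor returns a flat
# dict of properties, whatever the file type (an assumption for this sketch).
Extractor = Callable[[Path], dict]
_EXTRACTORS: dict[str, Extractor] = {}


def register(*extensions: str) -> Callable[[Extractor], Extractor]:
    """Decorator that plugs an extractor for the given file extensions into the framework."""
    def wrap(func: Extractor) -> Extractor:
        for ext in extensions:
            _EXTRACTORS[ext.lower()] = func
        return func
    return wrap


@register("csv", "tsv")
def extract_table(path: Path) -> dict:
    """Column names and row count of a delimited text file."""
    delimiter = "\t" if path.suffix.lower() == ".tsv" else ","
    with path.open(newline="", encoding="utf-8") as handle:
        rows = list(csv.reader(handle, delimiter=delimiter))
    return {"columns": rows[0] if rows else [], "row_count": max(len(rows) - 1, 0)}


@register("json")
def extract_json(path: Path) -> dict:
    """Top-level keys of a JSON document."""
    doc = json.loads(path.read_text(encoding="utf-8"))
    return {"top_level_keys": sorted(doc) if isinstance(doc, dict) else []}


def extract(path: Path) -> dict:
    """Basic metadata for any file, enriched by a registered type-specific extractor."""
    file_type = path.suffix.lstrip(".").lower()
    metadata = {"file_name": path.name, "file_type": file_type}
    extractor = _EXTRACTORS.get(file_type)
    if extractor is not None:
        metadata.update(extractor(path))
    return metadata
```

With this design, supporting a new file type amounts to writing one function and decorating it with `register`, without changing `extract` itself.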

The HUMIT project [L1] is coordinated by the Fraunhofer Institute for Applied Information Technology FIT and funded by the German Federal Ministry of Education and Research. Further participants are the Fraunhofer Institute for Molecular Biology and Applied Ecology IME and the German Center for Neurodegenerative Diseases (DZNE) as life science research institutes, and soventec GmbH as industry partner. The project started in March 2015 and will be funded for three years.

Link:

[L1] http://www.humit.de

References:

[1] D. Kensche, C. Quix, X. Li, Y. Li, M. Jarke: Generic schema mappings for composition and query answering. Data Knowl. Eng., Vol. 68, no. 7, pp. 599-621, 2009.

[2] A.D. Sarma, X. Dong, A.Y. Halevy: Bootstrapping pay-as-you-go data integration systems, in Proc. of SIGMOD, pp. 861-874, 2008.

[3] I. Terrizzano, P.M. Schwarz, M. Roth, J.E. Colino: Data Wrangling: The Challenging Journey from the Wild to the Lake, in Proc. of CIDR, 2015.

Please contact:

Christoph Quix, Thomas Berlage, Matthias Jarke

Fraunhofer Institute for Applied Information Technology FIT, Germany

E-mail: