by Costas Bekas

“Mantle convection” is the term used to describe the transport of heat from the interior of the Earth to its surface. Mantle convection drives plate tectonics – the motion of plates that are formed from the Earth’s crust and upper mantle – and is a fundamental driver of earthquakes and volcanism. We modelled mantle convection using massively parallel computation.

In 2013, a group of IBM and university scientists won the ACM Gordon Bell Prize for simulations of cloud cavitation collapse [1]. Contrary to conventional wisdom, their work demonstrated that, with advanced algorithmic re-engineering, computational fluid dynamics simulations can run at close to theoretical peak performance on millions of cores. Meanwhile, Professors Omar Ghattas (University of Texas at Austin), Michael Gurnis (California Institute of Technology) and Georg Stadler (New York University) and their groups were collaborating on a very high-resolution, high-fidelity solver for convection in the Earth’s mantle. The breakthrough recognised by the Gordon Bell Prize was the spark that brought the Texas-Caltech-NYU team together with Costas Bekas’s group at IBM, in a collaboration aimed at scaling out the mantle convection solver further than had ever been achieved.

Extreme scaleout would be a decisive step towards the goals of the original research, which aims to address fundamental questions such as [2]: What are the main drivers of plate motion, negative buoyancy or convective shear traction? What is the key process governing the occurrence of earthquakes, the material properties between the plates or the tectonic stress?

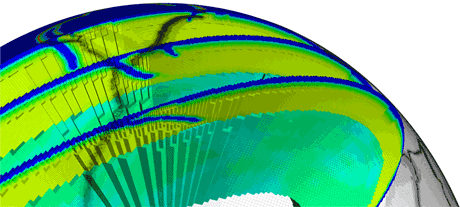

Figure 1: Establishing a variable grid for the Earth’s crust and mantle.

A series of key advances in geodynamic modelling, numerical algorithms and massively scalable solvers, developed over the last several years by the academic members of the team, was combined with deep algorithmic re-engineering and massively parallel optimization know-how from IBM Research to achieve unparalleled scalability. The result gives geophysicists a powerful tool for improving their understanding of the forces behind earthquakes and volcanism [2]. More broadly, the combination of advanced algorithms and massively parallel optimization demonstrated in this work is not specific to geophysics: it can help scientists in many fields make new discoveries, and help industry greatly reduce the time it takes to invent, test and bring new products, such as new materials and energy sources, to market.

Modelling mantle convection at a global scale with realistic material properties is notoriously difficult. The problem spans an extremely wide range of length scales that must be resolved, from less than a kilometre at tectonic plate boundaries to the 40,000 kilometre circumference of the Earth. Similarly, the viscosity of mantle rock (its resistance to shearing) varies by more than six orders of magnitude. The grid on which the computations were carried out therefore had to be adapted locally to the length scales and viscosity variations present (Figure 1). Finally, the partial differential equations describing mantle convection are highly nonlinear. The result was a model with 602 billion equations and variables, whose solution could be undertaken only on the most powerful supercomputers.
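To make the idea of local grid adaptation concrete, here is a minimal one-dimensional sketch. It assumes a hypothetical viscosity profile (a narrow weak zone, standing in for a plate boundary, inside a background six orders of magnitude stiffer) and a simple refinement rule chosen for illustration: bisect a cell while log10 of the viscosity varies by more than a threshold inside it. The function names, the profile and the criterion are all hypothetical; the real model is three-dimensional and uses its own refinement criteria.

```python
import math

def log_viscosity(x: float) -> float:
    """Hypothetical profile of log10(viscosity in Pa s) on [0, 1]:
    a narrow weak zone (10^18) at x = 0.5 in a stiff background (10^24)."""
    weight = math.exp(-((x - 0.5) / 0.01) ** 2)
    return 18.0 * weight + 24.0 * (1.0 - weight)

def refine(a: float, b: float, min_size: float, max_jump: float,
           samples: int = 9) -> list[tuple[float, float]]:
    """Recursively bisect [a, b] until log10(viscosity), sampled inside the
    cell, varies by at most max_jump, or the cell has shrunk to min_size."""
    values = [log_viscosity(a + (b - a) * i / (samples - 1)) for i in range(samples)]
    if (b - a) <= min_size or max(values) - min(values) <= max_jump:
        return [(a, b)]
    mid = 0.5 * (a + b)
    return (refine(a, mid, min_size, max_jump, samples)
            + refine(mid, b, min_size, max_jump, samples))

cells = refine(0.0, 1.0, min_size=1.0 / 1024, max_jump=0.5)
sizes = [b - a for a, b in cells]
# Large cells far from the weak zone, small cells where viscosity changes fast.
print(len(cells), min(sizes), max(sizes))
```

The same principle, spending resolution only where the material properties demand it, is what keeps a global model with sub-kilometre features at plate boundaries within reach of a supercomputer.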

Rather than using simpler explicit solvers, which are easier to scale on large supercomputers (since they involve only nearest-neighbour communication) but are extremely inefficient (since they require a very large number of iterations to converge), the team scaled an innovative implicit solver, previously developed by the Texas and NYU members of the team, out to millions of cores. This required extensive re-engineering of the algorithms and their implementation, as well as massively parallel adaptation, because unforeseen bottlenecks arise when millions of cores are used. This success allowed mantle convection to be simulated with unprecedented fidelity and resolution. Global high-resolution models such as this are critical for understanding the forces driving and resisting global tectonic plate motion.
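The trade-off described above can be illustrated on a toy problem. The sketch below is not the authors' solver: it compares two textbook iterative methods on a 1-D Poisson equation, Jacobi iteration (each update needs only a point's neighbours, so it parallelizes trivially) and the conjugate gradient method (which needs global inner products but converges far faster). The problem size, tolerance and function names are illustrative assumptions.

```python
import numpy as np

def poisson_matrix(n: int) -> np.ndarray:
    """Dense 1-D Laplacian with Dirichlet boundaries on n interior points."""
    return 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)

def jacobi(A, b, tol=1e-6, max_iter=100_000):
    """Jacobi iteration: each update uses only the diagonal and the
    neighbouring values, i.e. nearest-neighbour communication on a grid."""
    d = np.diag(A)
    x = np.zeros_like(b)
    nb = np.linalg.norm(b)
    for k in range(max_iter):
        r = b - A @ x
        if np.linalg.norm(r) <= tol * nb:
            return x, k          # k updates were needed
        x = x + r / d
    return x, max_iter

def conjugate_gradient(A, b, tol=1e-6, max_iter=1000):
    """Conjugate gradients for a symmetric positive definite A; each step
    needs global dot products (all-to-all communication in parallel)."""
    x = np.zeros_like(b)
    r = b - A @ x
    p = r.copy()
    rs = r @ r
    nb = np.linalg.norm(b)
    for k in range(1, max_iter + 1):
        Ap = A @ p
        alpha = rs / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = r @ r
        if np.sqrt(rs_new) <= tol * nb:
            return x, k
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x, max_iter

n = 100
A = poisson_matrix(n)
b = np.ones(n)
_, it_jacobi = jacobi(A, b)
_, it_cg = conjugate_gradient(A, b)
print(f"Jacobi: {it_jacobi} iterations, CG: {it_cg} iterations")
```

Jacobi needs orders of magnitude more iterations than CG here, and the gap widens as the grid is refined. Scalable implicit solvers accept harder communication patterns in exchange for this kind of algorithmic efficiency.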

Combining advances in mathematics, algorithms and massively parallel computing, the optimized mantle convection model ran with very high parallel efficiency on two of the most powerful high-performance computers in the world [2]. Development and science runs used the Blue Gene/Q system at CCI, Rensselaer Polytechnic Institute, and the Stampede system at the Texas Advanced Computing Center. Initial massive scaling runs were made on the JUQUEEN Blue Gene/Q supercomputer at the Juelich Supercomputing Centre in Germany, and the code was then scaled up further to run on Sequoia, a Blue Gene/Q machine owned by the U.S. Lawrence Livermore National Laboratory. Sequoia comprises 1.6 million processor cores with a theoretical peak performance of 20.1 petaflops. A major challenge was to scale efficiently to all 1,572,864 processor cores of the full Sequoia system. The overall scale-up efficiency using all 96 racks was 96% relative to the single-rack run. This is a record-setting achievement, and it demonstrates that, contrary to widely held beliefs, numerically optimal implicit solvers can indeed scale almost perfectly to millions of cores, given careful algorithmic design and massive scaleout know-how.
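The core count and the efficiency figure can be checked with a few lines of arithmetic. The sketch assumes the standard Blue Gene/Q configuration of 16 application cores per node and 1,024 nodes per rack, and it assumes that "scale-up efficiency" means weak-scaling efficiency: throughput per rack at N racks divided by throughput on one rack. That definition is our assumption, and the function names are hypothetical.

```python
CORES_PER_NODE = 16     # Blue Gene/Q: 16 application cores per compute node
NODES_PER_RACK = 1024   # Blue Gene/Q: 1,024 compute nodes per rack

def total_cores(racks: int) -> int:
    """Number of application cores in a Blue Gene/Q partition of `racks` racks."""
    return racks * NODES_PER_RACK * CORES_PER_NODE

def scaleup_efficiency(work_1: float, time_1: float,
                       work_n: float, time_n: float, n: int) -> float:
    """Assumed weak-scaling efficiency: throughput on n racks relative to
    n times the single-rack throughput (1.0 means perfect scaling)."""
    return (work_n / time_n) / (n * work_1 / time_1)

print(total_cores(1))    # 16384
print(total_cores(96))   # 1572864

# With 96x the work on 96 racks, an efficiency of 0.96 means the large run
# takes 1 / 0.96 times as long as the single-rack run.
print(round(1 / 0.96, 3))  # 1.042
```

Under this reading, the full 96-rack run took only about 4% longer than the single-rack run, while solving a problem 96 times as large.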

Understanding mantle convection has been named one of the “10 Grand Research Questions in Earth Sciences” by the U.S. National Academies. The authors of [2] hope their work provides earth scientists with a valuable new tool to help them pursue this grand quest. The work won the 2015 ACM Gordon Bell Prize.

Many colleagues contributed to this work, too many to be listed as authors here, but all are heartily acknowledged: Johann Rudi, A. Cristiano I. Malossi, Tobin Isaac, Georg Stadler, Michael Gurnis, Peter W. J. Staar, Yves Ineichen, Costas Bekas, Alessandro Curioni and Omar Ghattas.

Links:

http://www.zurich.ibm.com/mcs/compsci/parallel/

http://www.zurich.ibm.com/mcs/compsci/engineering/

https://www.youtube.com/watch?v=ADoo3jsHqw8

References:

[1] D. Rossinelli et al.: “11 PFLOP/s simulations of cloud cavitation collapse”, in Proc. of SC2013, Paper 3, Denver, Colorado, 2013.

[2] J. Rudi et al.: “An Extreme-Scale Implicit Solver for Complex PDEs: Highly Heterogeneous Flow in Earth’s Mantle”, in Proc. of SC2015, Paper 5, Austin, Texas, 2015.

Please contact:

Costas Bekas, IBM Research – Zurich, Switzerland

E-mail: