Hoppla is an archiving solution that combines backup and fully automated migration services for data collections in small office environments. The system offers user-friendly handling of its services and outsources the required digital preservation expertise.

Small companies are often barely aware of changes in their technological environment. This can seriously affect their long-term ability to access and use their highly valuable digital assets. In some countries, the law requires that business transactions remain available and auditable for up to seven years. Moreover, essential assets such as construction plans, manuals, production process protocols or customer correspondence need to be at hand for even longer, in case of maintenance issues or lawsuits, or simply for their business value. To avoid the physical loss of data, companies implement various backup solutions and strategies. Although the bitstream preservation problem is not entirely solved, the industry has many years of practical experience with it: data is constantly migrated to current storage media types, and duplicate copies are held to preserve bitstreams over the years.

A much more pressing problem is logical preservation. The interpretation of a bitstream depends on its environment of hardware platforms, operating systems, software applications and data formats. Even small changes in this environment can make important files impossible to open. There is no guarantee that a construction plan for an aircraft part, stored in an application-specific format, can still be rendered in five, ten or twenty years. Logical preservation therefore requires continuous activity to keep digital assets accessible and usable.

Digital preservation is mainly driven by memory institutions such as libraries, museums and archives, which focus on preserving scientific and cultural heritage and have dedicated resources to care for their digital assets. Enterprises whose core business is not data curation will increasingly need knowledge and expertise in logical preservation to keep their data accessible. Yet long-term preservation tools and services are developed for professional environments and are meant to be operated by highly qualified specialists. To reach users outside this domain, automated systems and convenient ways to outsource digital preservation expertise are required.

With Hoppla, we are currently developing a solution that combines backup and fully automated migration services for data collections in small institutions as well as small and home offices. The system builds on a service model similar to that of current firewall and antivirus software packages: it provides user-friendly handling of services and an automated update service, and it hides the technical complexity of the software.

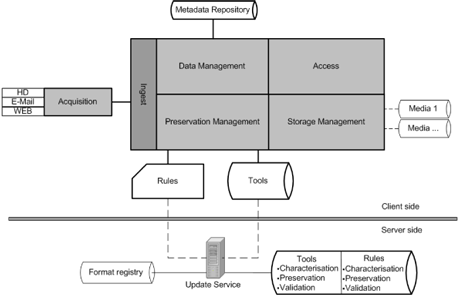

Figure 1: Architecture of the Hoppla system.

A central update service provides preservation rules and services to local Hoppla instances. The archiving system ingests data from a number of sources such as data carriers, email repositories and online storage locations. The user can define filter criteria for the collection, such as location, size and content type.
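As an illustration of such filter criteria, the following Python sketch decides whether a candidate file belongs to the collection. The names `FilterCriteria` and `matches`, the use of path prefixes for "location", and MIME types guessed from file extensions via the standard `mimetypes` module are our assumptions, not Hoppla's actual interface.

```python
import mimetypes
from dataclasses import dataclass, field
from pathlib import PurePosixPath
from typing import Optional


@dataclass
class FilterCriteria:
    """Hypothetical user-defined collection filter (not Hoppla's real API)."""
    locations: list[str] = field(default_factory=list)    # path prefixes; empty = any location
    max_size: Optional[int] = None                         # size limit in bytes; None = no limit
    content_types: set[str] = field(default_factory=set)  # MIME types; empty = any type


def matches(path: str, size: int, criteria: FilterCriteria) -> bool:
    """Return True if a file at `path` with `size` bytes passes all criteria."""
    p = PurePosixPath(path)
    # Location: the file must lie below one of the selected folders.
    if criteria.locations and not any(p.is_relative_to(loc) for loc in criteria.locations):
        return False
    # Size: skip files above the user's limit.
    if criteria.max_size is not None and size > criteria.max_size:
        return False
    # Content type: guessed from the file extension only (an assumption of this sketch).
    if criteria.content_types:
        mime, _encoding = mimetypes.guess_type(path)
        if mime not in criteria.content_types:
            return False
    return True
```

For example, `matches("/office/plans/wing.pdf", 2000, FilterCriteria(locations=["/office/plans"], content_types={"application/pdf"}))` returns `True`. A real system would more likely identify formats from file content rather than extensions.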

Metadata about the objects in the collection are important for later search, retrieval and preservation. Hoppla collects the metadata available from the source systems and additionally extracts metadata from the objects themselves. To obtain appropriate preservation rules and tools, the system sends a collection profile to the Web update service. For privacy reasons, the user can define how much detail the profile reveals to the service. The objects in the collection are then migrated according to the preservation rules and tools received.
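A collection profile whose level of detail depends on a user-chosen privacy setting might be built along the following lines. The three `PrivacyLevel` values, the profile fields and the input record keys (`format`, `size`) are hypothetical; the point of the sketch is that file names and paths never leave the local instance, and each higher level only adds aggregate statistics.

```python
from collections import Counter
from enum import IntEnum


class PrivacyLevel(IntEnum):
    """Hypothetical privacy levels, from least to most revealing."""
    FORMATS_ONLY = 0  # only which formats occur in the collection
    COUNTS = 1        # plus the number of objects per format
    DETAILED = 2      # plus the total bytes per format


def build_profile(objects, level: PrivacyLevel) -> dict:
    """Aggregate per-object metadata into a profile for the update service.

    `objects` is an iterable of dicts with (hypothetical) keys 'format' and 'size';
    any other keys, such as file paths, are deliberately ignored.
    """
    counts = Counter()
    sizes = Counter()
    for obj in objects:
        counts[obj["format"]] += 1
        sizes[obj["format"]] += obj["size"]
    profile = {"formats": sorted(counts)}
    if level >= PrivacyLevel.COUNTS:
        profile["counts"] = dict(counts)
    if level >= PrivacyLevel.DETAILED:
        profile["bytes"] = dict(sizes)
    return profile
```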

Hoppla verifies the results of its migration activities, and the archiving system supports versioning of objects. The storage module manages multiple backups of objects across different media. Through a plug-in infrastructure, Hoppla can use offline and online storage media in both write-once and rewritable forms, such as DVDs, hard disks or cloud services.
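One way the storage side could work is sketched below: each object version is written to every configured back-end through a common plug-in interface, and each copy is verified by comparing SHA-256 checksums. `StoragePlugin`, `InMemoryStorage` and `store_version` are hypothetical names, the in-memory back-end merely stands in for real DVD, disk or cloud plug-ins, and checksum comparison is our choice of check. Verifying a migration itself would additionally require comparing properties of the original and the migrated object.

```python
import hashlib
from typing import Protocol


class StoragePlugin(Protocol):
    """Hypothetical plug-in interface shared by all storage back-ends."""
    name: str

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStorage:
    """Stand-in back-end for this illustration only."""

    def __init__(self, name: str):
        self.name = name
        self._blobs: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._blobs[key] = data

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def store_version(object_id: str, version: int, data: bytes, backends) -> tuple[str, list[str]]:
    """Write one object version to every back-end and read each copy back.

    Versions are kept side by side under distinct keys. Returns the expected
    SHA-256 digest and the names of back-ends whose copy failed verification.
    """
    key = f"{object_id}@v{version}"
    expected = hashlib.sha256(data).hexdigest()
    failed = []
    for backend in backends:
        backend.put(key, data)
        if hashlib.sha256(backend.get(key)).hexdigest() != expected:
            failed.append(backend.name)
    return expected, failed
```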

Small institutions often lack in-house knowledge and expertise in digital preservation and data management; Hoppla replaces this with external expertise delivered via a Web update service. Expert groups will provide guidelines and rules for the migration of endangered objects. We use this knowledge in an automated process to keep digital collections in an accessible form. The Web update service provides preservation rules and the relevant migration tools to the client side and thus implements the preservation planning of the archiving system.
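On the client side, applying the rules received from the update service could amount to matching each object's format against the formats the rules mark as endangered. The `PreservationRule` structure, the format identifiers and the tool name below are hypothetical placeholders; the sketch only plans migrations and does not run any tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreservationRule:
    """Hypothetical rule as it might be delivered by the Web update service."""
    endangered_format: str  # format the experts consider at risk
    target_format: str      # format to migrate to
    tool: str               # identifier of the migration tool shipped with the rule


def plan_migrations(collection: dict[str, str], rules) -> list:
    """Return (object_id, rule) pairs for every object in an endangered format.

    `collection` maps object identifiers to format identifiers.
    """
    by_format = {rule.endangered_format: rule for rule in rules}
    plan = []
    for object_id, fmt in collection.items():
        rule = by_format.get(fmt)
        if rule is not None:
            plan.append((object_id, rule))
    return plan
```

Keeping planning separate from execution like this would let the client log and verify each planned migration before any tool touches the data.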

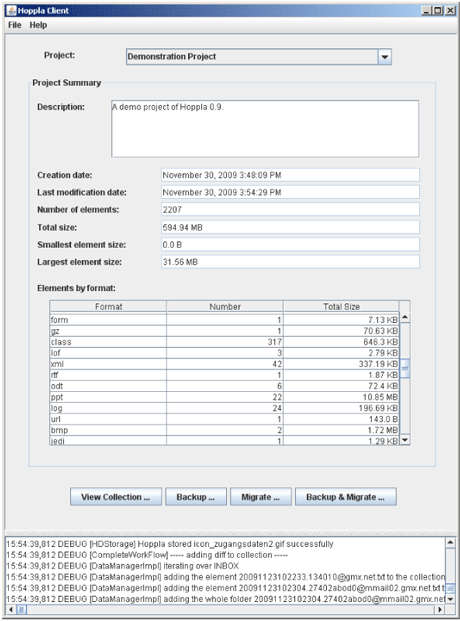

Figures 2 and 3: Hoppla screenshots.

Hoppla is being developed as a research prototype with a special focus on modular design and clearly defined interfaces between modules (Figure 2). This allows integration with existing solutions (e.g. as storage services), exchange of modules, and easy extension to further acquisition sources, storage media or online back-ends. The architecture of the Hoppla archiving system is strongly influenced by the reference model for an Open Archival Information System (OAIS), which has been widely accepted as a key reference model for long-term archival systems. As auditing and certification are becoming important issues for data storage, the system fully documents all actions in the archive.

It thereby assists in fulfilling the software-related criteria of the TRAC (Trustworthy Repositories Audit & Certification) checklist published by OCLC/RLG Programs and the National Archives and Records Administration (NARA).
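Documentation of all archive actions could, for example, be kept as an append-only log. In the sketch below each entry also stores the SHA-256 hash of its predecessor, so a later modification of any entry is detected when the chain is verified. Hash chaining is our illustrative choice and is not claimed to be how Hoppla stores its records; `AuditLog`, `record` and `verify` are hypothetical names.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Hypothetical append-only log of archive actions with hash chaining."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so the same entry always hashes the same.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, action: str, object_id: str, details=None, timestamp=None) -> dict:
        """Append one action (e.g. ingest, migrate) and return the stored entry."""
        body = {
            "time": time.time() if timestamp is None else timestamp,
            "action": action,
            "object": object_id,
            "details": details or {},
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False if any entry was altered or reordered."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```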

Links:

http://www.ifs.tuwien.ac.at/dp/hoppla

Please contact:

Michael Greifeneder, Stephan Strodl, Petar Petrov and Andreas Rauber

Vienna University of Technology/AARIT

E-mail: {greifeneder, strodl, petrov, rauber}@ifs.tuwien.ac.at