At the Geometric Modelling and Computer Vision laboratory of SZTAKI, we are developing a new 3D reconstruction method that fuses multimodal data acquired with a laser scanner, a camera and illumination sources. The system processes and fuses geometric, pictorial and photometric data using genetic algorithms and efficient computer vision methods.

Building photorealistic 3D models of real-world objects is a fundamental problem in computer vision and computer graphics. To construct such models, both precise geometry and detailed surface textures are required. Textures allow one to obtain visual effects that are essential for high-quality rendering. Photorealism is enhanced by adding surface roughness in the form of so-called 3D texture, represented by a bump map. Different techniques exist for reconstructing the object surface and building photorealistic 3D models. Although the geometry can be measured by various methods of computer vision, laser scanners are usually used for precise measurements. However, most laser scanners do not provide texture and colour information, or if they do, the data is not accurate enough.

Our primary goal is to create a system that uses only a PC, an affordable laser scanner and an uncalibrated commercial digital camera. The camera can be used freely, independently of the scanner. No other equipment (special illumination, calibrated set-up, etc.) is needed. Nor does the system require specially trained personnel: after some training, a computer user with minimal engineering skills will be able to operate it.

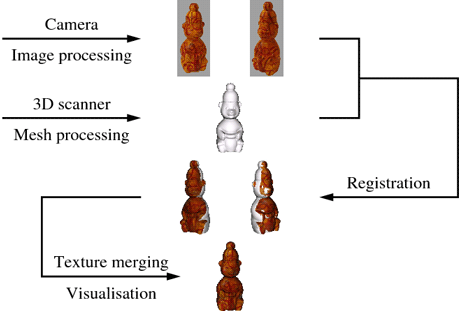

The 3D reconstruction system developed in our laboratory receives as input two datasets of diverse origin: a number of partial measurements (3D point sets) of the object’s surface made by a hand-held laser scanner, and a collection of good-quality images of the object acquired independently by a digital camera using a number of illumination sources. The partial surface measurements overlap and cover the entire surface of the object; however, their relative orientations are unknown since they are obtained in different, unregistered coordinate systems. A specially designed genetic algorithm automatically pre-aligns the surfaces and estimates their overlap. A precise and robust iterative algorithm developed in our laboratory is then applied to the roughly aligned surfaces to obtain a precise registration. Finally, a complete geometric model is created by triangulating the integrated point set.
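The article calls the registration step precise, robust and iterative but does not spell it out. As an illustration only, here is a minimal sketch of one well-known robust variant of the Iterative Closest Point idea, a trimmed ICP, reduced to 2D point sets so that the optimal rigid motion has a closed form. Whether this matches the laboratory's algorithm is not stated in the text; the function names and the `overlap` parameter (the assumed fraction of points that have a counterpart, which in the described pipeline could come from the genetic pre-alignment's overlap estimate) are hypothetical.

```python
import math


def nearest(p, model):
    """Brute-force nearest neighbour of point p in the model point set."""
    best, best_d2 = None, float("inf")
    for q in model:
        d2 = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
        if d2 < best_d2:
            best, best_d2 = q, d2
    return best, best_d2


def best_rigid_2d(pairs):
    """Least-squares rotation angle and translation mapping each p onto its q."""
    n = len(pairs)
    px = sum(p[0] for p, _ in pairs) / n
    py = sum(p[1] for p, _ in pairs) / n
    qx = sum(q[0] for _, q in pairs) / n
    qy = sum(q[1] for _, q in pairs) / n
    s_dot = s_cross = 0.0
    for p, q in pairs:
        ax, ay = p[0] - px, p[1] - py  # centred data point
        bx, by = q[0] - qx, q[1] - qy  # centred model point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated data centroid onto the model centroid.
    return theta, qx - (c * px - s * py), qy - (s * px + c * py)


def transform_points(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def trimmed_icp(data, model, overlap=0.8, max_iter=50, tol=1e-10):
    """Align data to model using only the best `overlap` fraction of closest-point pairs.

    Returns the aligned data points and the trimmed mean squared distance.
    """
    current = list(data)
    k = max(1, int(overlap * len(current)))
    prev_err = float("inf")
    err = prev_err
    for _ in range(max_iter):
        # Pair every data point with its closest model point, best pairs first.
        matches = []
        for p in current:
            q, d2 = nearest(p, model)
            matches.append((d2, p, q))
        matches.sort(key=lambda m: m[0])
        kept = matches[:k]  # trimming: the worst pairs are ignored
        err = sum(d2 for d2, _, _ in kept) / k
        if prev_err - err < tol:  # no further improvement
            break
        prev_err = err
        theta, tx, ty = best_rigid_2d([(p, q) for _, p, q in kept])
        current = transform_points(current, theta, tx, ty)
    return current, err
```

Discarding the worst-matching pairs in every iteration is what lets such a procedure cope with partial overlap: points of one scan that have no counterpart in the other are simply not used when estimating the motion. The brute-force neighbour search is for clarity only; real scans would need a spatial index.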

The geometric model is precise, but lacks texture and colour information. The latter is provided by the other dataset, the collection of digital images. Precisely fusing the geometric and visual data is not trivial, since the pictures are taken freely from different viewpoints and with varying zoom. The data fusion problem is formulated as photo-consistency optimization, which amounts to minimizing a cost function of many variables, namely the internal and external parameters of the camera. Another dedicated genetic algorithm is used to minimize this cost function.
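The dedicated genetic algorithm is not described in the article. The sketch below is a generic real-coded genetic algorithm (tournament selection, blend crossover, Gaussian mutation, elitism), shown only to illustrate how such a search can minimize a cost over camera parameters without needing derivatives. The cost is a hypothetical stand-in: the reprojection error of known 3D points under a simplified pinhole camera with focal length and translation only, not the photo-consistency measure used in the actual system. All names and parameter values are assumptions.

```python
import random


def project(params, point):
    """Simplified pinhole camera (hypothetical): focal length f, translation (tx, ty, tz), no rotation."""
    f, tx, ty, tz = params
    x, y, z = point
    depth = z + tz
    return f * (x + tx) / depth, f * (y + ty) / depth


def reprojection_cost(params, points, observed):
    """Sum of squared distances between projected points and observed image points."""
    total = 0.0
    for point, (u, v) in zip(points, observed):
        pu, pv = project(params, point)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return total


def tournament(scored, rng):
    """Binary tournament: return the fitter of two randomly chosen individuals."""
    a, b = rng.sample(scored, 2)
    return a[1] if a[0] < b[0] else b[1]


def genetic_minimize(cost, bounds, pop_size=60, generations=150,
                     mutation_rate=0.2, seed=0):
    """Minimize cost(params) over box-constrained params with a simple real-coded GA.

    Returns the best individual found and the best cost of each generation.
    """
    rng = random.Random(seed)
    n_elite = max(1, pop_size // 10)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scored = sorted(((cost(ind), ind) for ind in pop), key=lambda s: s[0])
        history.append(scored[0][0])
        # Elitism: the best individuals survive unchanged.
        children = [list(ind) for _, ind in scored[:n_elite]]
        while len(children) < pop_size:
            p1, p2 = tournament(scored, rng), tournament(scored, rng)
            child = []
            for (lo, hi), g1, g2 in zip(bounds, p1, p2):
                w = rng.random()
                gene = w * g1 + (1.0 - w) * g2  # blend crossover
                if rng.random() < mutation_rate:  # Gaussian mutation
                    gene += rng.gauss(0.0, 0.05 * (hi - lo))
                child.append(min(max(gene, lo), hi))  # keep within bounds
            children.append(child)
        pop = children
    best = min(pop, key=cost)
    return best, history
```

Because the elite is copied unchanged into the next generation, the best cost never increases from one generation to the next. The search only evaluates the cost, which is why this family of methods suits objectives such as photo-consistency that are awkward to differentiate.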

Figure 1: Image-to-surface registration and texture merging.

Once the image-to-surface registration problem is solved, we still face the problem of seamlessly blending multiple textures, that is, images of the same surface patch appearing in different views. This problem is solved by a surface-flattening algorithm that provides a 2D parameterization of the model. Using a measure of visibility as a weight, we blend the textures, obtaining a seamless result that preserves details. The process of image-to-surface registration and texture merging is illustrated in Figure 1. A measured surface and a textured model are shown in Figure 2.
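The article does not say which visibility measure is used or how the flattened texture map is sampled. Assuming that each texel of the 2D parameterization already knows, for every view that sees it, the sampled colour, the surface normal and the direction towards that camera, a visibility-weighted blend can be sketched as follows. The cosine weighting is an assumption chosen for illustration, and `visibility_weight`, `blend_texel` and `blend_texture` are hypothetical names.

```python
import math


def visibility_weight(normal, to_camera):
    """Hypothetical visibility measure: cosine of the angle between the surface
    normal and the direction towards the camera, clamped to 0 for back-facing views."""
    dot = sum(n * d for n, d in zip(normal, to_camera))
    norm = math.sqrt(sum(n * n for n in normal)) * math.sqrt(sum(d * d for d in to_camera))
    return max(0.0, dot / norm) if norm > 0 else 0.0


def blend_texel(samples):
    """Blend the colours one texel receives from several views.

    samples: list of (rgb, normal, to_camera) tuples, one per view in which the
    texel's surface point projects into the image.
    Returns the visibility-weighted mean colour, or None if no view sees it.
    """
    weighted = [(rgb, visibility_weight(n, d)) for rgb, n, d in samples]
    total = sum(w for _, w in weighted)
    if total == 0.0:
        return None
    return tuple(sum(rgb[i] * w for rgb, w in weighted) / total for i in range(3))


def blend_texture(texel_samples):
    """Apply blend_texel to every texel of a flattened (2D-parameterized) texture map.

    texel_samples: dict mapping (u, v) texel coordinates to a list of samples.
    """
    return {uv: blend_texel(s) for uv, s in texel_samples.items()}
```

With this weighting, views that see a patch head-on contribute most and grazing views, which tend to be blurred and foreshortened, contribute little, so colours change smoothly across view boundaries instead of switching abruptly.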

Figure 2: left: surface measured by laser scanner; right: textured model.

Figure 3: Refining the surface model by photometric stereo.

Finally, photometric data is added to provide a bump map that reflects the surface roughness. We use a photometric stereo technique developed in our laboratory to refine the surface geometry. For this, a number of images are taken from the same viewpoint under varying illumination. The surface is assumed to be diffuse, but the lighting properties are unknown. The initial sparse 3D mesh obtained by the laser scanner is exploited first to calibrate the light sources and then to recover the surface normals. Figure 3 provides an example of surface refinement by photometric stereo.
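The laboratory's photometric stereo technique is not detailed in the article. The sketch below shows the standard Lambertian formulation under stated assumptions: the light sources are distant, and the mesh points used for calibration have unit albedo and are not in shadow. For each image, a light vector (direction scaled by intensity) is fitted by least squares from the intensities observed at points whose normals are known from the scanned mesh; the albedo-scaled normal of each pixel is then recovered from its intensities across all images. All function names are hypothetical.

```python
import math


def solve3(a, b):
    """Solve a 3x3 linear system a x = b by Gaussian elimination with partial pivoting."""
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def least_squares3(rows, rhs):
    """Least-squares solution of rows . x = rhs (at least 3 rows) via the normal equations."""
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, atb)


def calibrate_light(mesh_normals, intensities):
    """Estimate one image's light vector from known mesh normals.

    Assumes unit albedo and no shadowing at the calibration points, so that
    intensity = normal . light for each of them.
    """
    return least_squares3(mesh_normals, intensities)


def recover_normal(lights, pixel_intensities):
    """Lambertian photometric stereo for one pixel: solve lights . g = I, g = albedo * normal."""
    g = least_squares3(lights, pixel_intensities)
    albedo = math.sqrt(sum(v * v for v in g))
    return [v / albedo for v in g], albedo
```

The per-pixel system is well posed when at least three images are lit from non-coplanar directions. Normals recovered this way carry the fine surface detail that the sparse scanned mesh misses, which is what a bump map needs.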

All major components of the reconstruction software, including data registration, surface flattening and photometric stereo, are novel and have been developed at SZTAKI. Sample results of 3D reconstruction by our system are available on the Web page of the Geometric Modelling and Computer Vision laboratory (see Links). We are currently involved in IRIS (‘Integrated Research for Innovative technological Solutions in static and dynamic 3D object and scene reconstruction’), a related national project funded by the National Office for Research and Technology of Hungary.

Links:

http://vision.sztaki.hu/

http://vision.sztaki.hu/iris-nkth/index.php

Please contact:

Dmitry Chetverikov

SZTAKI, Hungary

Tel: +36 1 2796161

E-mail: