by Gianluigi Folino and Francesco Sergio Pisani

We present a framework for generating decision-tree-based models that automatically decide whether to offload mobile applications to a cloud computing platform, using an algorithm based on genetic programming (GP).

A key problem of modern smartphones is limited battery life. Larger screens and the widespread use of CPU-intensive and network-based mobile applications have aggravated this problem. Offloading computation to cloud computing platforms can considerably extend battery life. However, it is important to verify not only whether offloading offers a real advantage once the computing power needed for data transfer is taken into account, but also whether user requirements are satisfied with respect to quality of service and the cost of using the cloud. All the issues involved in the offloading decision, such as network disconnections and variability, data privacy and security, and variations in server load, need to be evaluated carefully.

At ICAR-CNR, we have designed a framework for the automatic offloading of mobile applications using a genetic programming approach, which attempts to address the issues listed above. The framework comprises two parts: a module that simulates the entire offloading process, and an inference engine that builds an automatic decision model to handle the offloading process.

The simulator and the inference engine both apply a taxonomy that defines four main categories concerning the offloading process: user, network, device and application. The simulator evaluates the performance of the offloading process on the basis of the user requirements, the network conditions, the hardware/software features of the mobile device and the characteristics of the application. The inference engine generates decision-tree-based models that take offloading decisions on the basis of the parameters contained in the categories of the taxonomy. It is built on a genetic programming tool that evolves the models from these parameters, driven by a fitness function that gives different weights to cost, time, energy and quality of service depending on the priorities assigned.

Taxonomy

We have defined a taxonomy of parameters and properties, which is used to build the model that decides the offloading strategy. The taxonomy only considers aspects that influence the offloading process and is based on four categories: Application (parameters associated with the application itself), User (parameters assigned according to the user's needs), Network (parameters concerning the type and state of the network), and Device (parameters reflecting the hardware/software features of the device). The decision model built by the GP tool decides whether or not to offload on the basis of the parameters associated with these categories.
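To make the idea concrete, the following minimal Python sketch shows how parameters from the four categories could feed a decision tree that returns an offload/no-offload answer. All field names, thresholds and the example tree are hypothetical and chosen only for illustration; they are not the parameters or models used by the framework.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass(frozen=True)
class Context:
    """One offloading scenario; every field name here is an assumption."""
    cpu_demand: float        # Application: amount of computation required
    data_size_kb: float      # Application: data to transfer if offloaded
    prefers_battery: bool    # User: battery life matters more than speed
    bandwidth_kbps: float    # Network: currently available bandwidth
    battery_level: float     # Device: remaining charge, from 0.0 to 1.0


@dataclass(frozen=True)
class Leaf:
    offload: bool            # final decision stored in the leaf


@dataclass(frozen=True)
class Node:
    test: Callable[[Context], bool]   # predicate over the taxonomy parameters
    if_true: "Tree"
    if_false: "Tree"


Tree = Union[Leaf, Node]


def decide(tree: Tree, ctx: Context) -> bool:
    """Walk the tree from the root until a leaf is reached."""
    while isinstance(tree, Node):
        tree = tree.if_true if tree.test(ctx) else tree.if_false
    return tree.offload


# A hand-written tree standing in for one that a GP run might evolve.
example_tree = Node(
    test=lambda c: c.bandwidth_kbps > 1000,
    if_true=Node(
        test=lambda c: c.cpu_demand > 500,
        if_true=Leaf(True),
        if_false=Leaf(False),
    ),
    if_false=Leaf(False),
)
```

In this toy tree, a compute-heavy task on a fast network is offloaded, while anything on a slow network stays on the device.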

Architecture

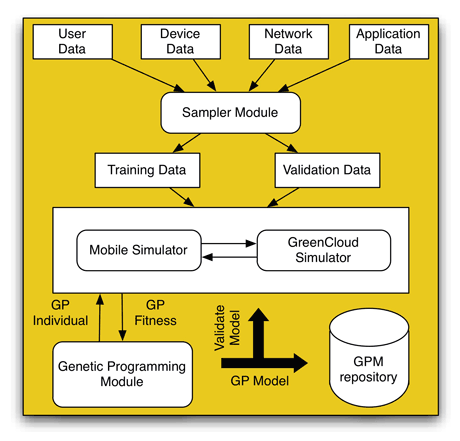

Figure 1 shows the software architecture of the framework. The first four modules contain sets of data for each of the four categories considered (user, device, network and application), which are used by the sampler module to generate the training and validation datasets, simply by randomly combining the data. These two datasets are used to generate and validate the decision models.
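The sampling step described above can be sketched as follows. This is an illustrative approximation, not the framework's sampler: the function name, the dictionary layout and the use of a seeded random generator are all assumptions.

```python
import random


def sample_datasets(users, devices, networks, applications,
                    n_train, n_valid, seed=0):
    """Build training and validation sets by randomly combining one entry
    from each of the four category pools (hypothetical layout)."""
    rng = random.Random(seed)  # seeded so that runs are reproducible

    def draw():
        return {
            "user": rng.choice(users),
            "device": rng.choice(devices),
            "network": rng.choice(networks),
            "application": rng.choice(applications),
        }

    training = [draw() for _ in range(n_train)]
    validation = [draw() for _ in range(n_valid)]
    return training, validation
```

Each sample is one complete scenario (one user profile, one device, one network state and one application), which is the unit on which a candidate model would later be evaluated.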

The two main modules are the inference engine, consisting of a genetic programming module that evolves a population of models capable of deciding whether to offload a mobile application, and the simulation module, comprising the well-known GreenCloud simulator (which simulates the cloud part of the offloading process) and an ad hoc mobile simulator that models the behaviour of the mobile device. In practice, each model generated by the GP module is passed to the simulation module, and a weighted fitness function is computed that evaluates the performance of the model on the basis of energy consumed, time taken, cost and QoS.
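One plausible form of such a weighted fitness function is a normalised weighted sum, sketched below. The article does not give the actual formula, so the normalisation to the range 0 to 1, the field names and the default weights are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SimulationResult:
    """Outcome of simulating one model; all values assumed scaled to [0, 1]."""
    energy: float  # lower is better
    time: float    # lower is better
    cost: float    # lower is better
    qos: float     # higher is better


@dataclass(frozen=True)
class Weights:
    """Priorities assigned to each objective (hypothetical defaults)."""
    energy: float = 0.25
    time: float = 0.25
    cost: float = 0.25
    qos: float = 0.25


def fitness(result: SimulationResult, weights: Weights) -> float:
    """Weighted score in [0, 1]; higher means a better model."""
    total = weights.energy + weights.time + weights.cost + weights.qos
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    score = (
        weights.energy * (1.0 - result.energy)   # reward low energy use
        + weights.time * (1.0 - result.time)     # reward short run time
        + weights.cost * (1.0 - result.cost)     # reward low cloud cost
        + weights.qos * result.qos               # reward high QoS
    )
    return score / total
```

Raising the energy weight, for instance, makes a model that saves battery at the expense of run time score higher than one that does the opposite.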

At the end of the process, the best model (or the best models) will constitute the rules adopted by the offloading engine, which will decide whether an application must be offloaded, according to the conditions assigned (user requirements, bandwidth, characteristics of the mobile device and so on). All these models must be validated using the simulation engine with the validation dataset. If the result of this evaluation is above a predefined threshold, the model will be added to a model repository for future use.
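The validation step can be pictured as a simple filter, as in the sketch below. The `evaluate` callback stands in for a run of the simulation engine on one validation sample; its signature, the use of the mean score and the list-based repository are assumptions, not details taken from the framework.

```python
def validate_models(candidates, evaluate, validation_set, threshold):
    """Return (model, mean_score) pairs whose mean score on the validation
    set exceeds the threshold, best first.

    `evaluate(model, sample)` is a hypothetical stand-in for simulating
    one model on one validation scenario and returning its fitness.
    """
    if not validation_set:
        raise ValueError("validation set must not be empty")

    repository = []
    for model in candidates:
        scores = [evaluate(model, sample) for sample in validation_set]
        mean_score = sum(scores) / len(scores)
        if mean_score > threshold:
            repository.append((model, mean_score))

    # Keep the strongest models at the front of the repository.
    repository.sort(key=lambda pair: pair[1], reverse=True)
    return repository
```

Only models that clear the threshold are kept, so the offloading engine would draw its rules from models that performed well on scenarios they were not trained on.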

Conclusions and Perspective

This work presents an automatic approach to generating decision-making models for the offloading of mobile applications on the basis of user requirements, network conditions, the hardware/software features of the mobile device and the characteristics of the application. The system constitutes a general framework for testing offloading algorithms and includes a mobile simulator, which computes the energy consumed in the offloading process. Ongoing and future activities involve testing the framework with real datasets and verifying whether the models obtained improve battery performance in real environments.

Reference:

K. Kumar and Y.-H. Lu: “Cloud computing for mobile users: Can offloading computation save energy?”, IEEE Computer, 43(4), 2010.

Links:

http://www.genetic-programming.org/

http://www.icar.cnr.it/

Please contact:

Gianluigi Folino

ICAR-CNR, Italy

E-mail: