One of the main challenges of software evolution is to provide software applications with a maintenance environment that maximizes time efficiency and accuracy while minimizing cost. This challenge includes managerial aspects of software evolution, such as effort estimation, prediction models and software processes. In this work, we focused on formative evaluation processes that give early feedback on the evolution of development metrics, global quantitative goals, non-functional evaluation, compliance with user requirements, and pilot application results. Our results were applied to a monitoring control platform for remote software maintenance.

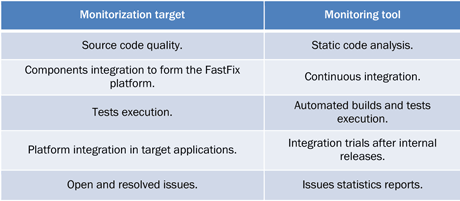

The European project FASTFIX (ICT-258109, June 2010-November 2012) is developing a monitoring control platform for remote software maintenance. Within this project, we have been working on a formative evaluation process that evaluates methods, concepts and prototypes while the project is under way. This process allows the platform to be continuously integrated and enhanced. As a result, the development process is steadily monitored and evaluated, and designers, developers and architects receive early feedback. The metrics on which the formative evaluation process is based are described in Table 1. Their targets are monitored by several open-source tools applied to the developed codebase and to the industrial trials.

Table 1: Formative Evaluation Metrics

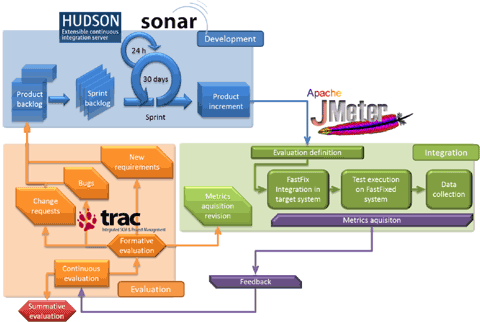

The formative evaluation process that we have defined can be split into three stages, as described in Figure 1. Each stage relies on several open-source tools for the incremental and continuous verification and validation of non-functional aspects of evolving software. The overall benefit of the process is that problems can be corrected and new requirements incorporated before the production phase of the software project, and throughout the whole life of the project.

Following an agile development process (Scrum), the process starts with incremental internal releases produced from the requirements specified for the platform. Tools and methods to monitor the development process have been chosen and integrated. Continuous building and testing triggered by Subversion commits are supported by the Hudson platform, while code metrics are systematically analysed by the quality management platform Sonar, which reports on release quality. Only working, qualified versions are issued and passed on to the trial integration stage. The project roadmap aligns internal releases with integration tests to be executed after each milestone.
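The rule that only working, qualified versions reach the trial stage can be pictured as a quality gate applied to each release candidate. The sketch below is a minimal illustration under stated assumptions: the `BuildReport` fields, the `MIN_COVERAGE` and `MAX_CRITICAL_VIOLATIONS` thresholds and the function names are all hypothetical, and in practice such values would be read from the Hudson build result and the Sonar report rather than typed in by hand. It does not use any Hudson or Sonar API.

```python
from dataclasses import dataclass


@dataclass
class BuildReport:
    """Hypothetical summary of one internal release candidate."""
    build_succeeded: bool
    tests_failed: int
    line_coverage: float       # percent, 0-100
    critical_violations: int


# Hypothetical gate thresholds; real targets would come from Table 1.
MIN_COVERAGE = 60.0
MAX_CRITICAL_VIOLATIONS = 0


def gate_failures(report: BuildReport) -> list:
    """Return the reasons a candidate fails the gate (empty list means qualified)."""
    reasons = []
    if not report.build_succeeded:
        reasons.append("build failed")
    if report.tests_failed > 0:
        reasons.append(f"failing tests: {report.tests_failed}")
    if report.line_coverage < MIN_COVERAGE:
        reasons.append(f"coverage {report.line_coverage:.1f}% below {MIN_COVERAGE:.1f}%")
    if report.critical_violations > MAX_CRITICAL_VIOLATIONS:
        reasons.append(f"critical violations: {report.critical_violations}")
    return reasons


def is_qualified(report: BuildReport) -> bool:
    """A candidate is promoted to the trial stage only if no gate check fails."""
    return not gate_failures(report)


if __name__ == "__main__":
    candidate = BuildReport(build_succeeded=True, tests_failed=0,
                            line_coverage=72.5, critical_violations=0)
    print(is_qualified(candidate))  # True
```

Returning the list of failed checks, rather than a bare boolean, means the same gate output can also be reported back to developers as feedback.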

Each internal release is followed by an integration trial with an industrial application in order to provide early feedback. A complete plan of trials has been set up, including two industrial trials on top of the final platform to measure the overall improvements introduced. Tools and methods to obtain metrics have been chosen and deployed. The JMeter platform collects information about program execution and user interaction. This makes it possible to identify, during execution, symptoms of safety errors and of critical errors such as performance degradation or changes in user behaviour.
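As an illustration of how performance degradation might be flagged from trial measurements, the following sketch compares response times per request label against a baseline. It is a minimal example under assumptions of our own: the input is plain lists of response times in milliseconds (which could, for instance, be extracted from load-test result files), the comparison uses a nearest-rank 95th percentile, and the 20% `tolerance` and all function names are hypothetical choices rather than part of the FASTFIX platform.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile (q in (0, 100]) of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def degraded(baseline_ms, trial_ms, q=95, tolerance=0.20):
    """True if the trial's q-th percentile exceeds the baseline's by more than tolerance."""
    return percentile(trial_ms, q) > percentile(baseline_ms, q) * (1 + tolerance)


def degraded_labels(baseline, trial, q=95, tolerance=0.20):
    """Return, sorted, the request labels whose response times degraded.

    baseline and trial map a request label (e.g. "login") to a list of
    response times in milliseconds; labels missing from either side are skipped.
    """
    flagged = []
    for label, samples in trial.items():
        reference = baseline.get(label)
        if reference and samples and degraded(reference, samples, q, tolerance):
            flagged.append(label)
    return sorted(flagged)


if __name__ == "__main__":
    baseline = {"login": [100] * 20, "search": [200] * 20}
    trial = {"login": [100] * 18 + [400, 400], "search": [210] * 20}
    print(degraded_labels(baseline, trial))  # ['login']
```

A high percentile is used instead of the mean so that a small fraction of very slow requests, which users notice, is not averaged away.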

Finally, the data gathered during the trial following each internal release feeds issues and new requirements back into the development process. Feedback strategies have been defined and management tools to support them have been deployed, in particular the Trac issue management system. This allows tight control of product families and software versioning.

Trials for the final evaluation of the platform have been defined and serve as input to the summative evaluation process. They cover both quantitative and qualitative metrics.

The results to date are very encouraging: the formative evaluation process can be integrated within any software product lifecycle from early analysis stages to final maintenance stages; it gives feedback on the evolution of development metrics, global quantitative goals, non-functional evaluation, compliance with user requirements, and pilot application results.

In our research, we collaborated with the Irish Software Engineering Research Center (LERO, Ireland), the Technische Universitaet Muenchen (TUM, Germany), the Instituto de Engenharia de Sistemas e Computadores, Investigação e Desenvolvimento in Lisbon (INESC-ID, Portugal), and the companies TXT E-Solutions SPA (Milan, Italy) and S2 Grupo (Valencia, Spain).

This research is also part of a horizontal task shared with FITTEST (Future Internet Testing), another project in the ICT Software and Service Architectures, Infrastructure and Engineering area, which deals with performance and efficiency issues related to the monitoring activity and with fault correlation and replication techniques. Our work is partially supported by the Spanish MEC INNCORPORA-PTQ 2011 program.

Link:

http://www.fastfixproject.eu

Please contact:

Javier Cano

Prodevelop, Spain

Tel: +34 963 510 612

E-mail: