Keeping the implementation of a software system in line with its original design is a major challenge in developing and maintaining that system. One way of dealing with this issue is to use metrics to quantify important aspects of the architecture of a system and track the evolution of these metrics over time. We are working towards extending the set of available architecture metrics by developing and validating new metrics aimed at quantifying specific quality characteristics of implemented software architectures.

Most software systems start out with a designed architecture, which documents important aspects of the design such as the responsibilities of each of the main components and the way these components interact. Ideally, the implemented architecture of the system corresponds exactly with the design. Unfortunately, in practice we often see that the original design is not reflected in the implementation. Common deviations include more (or fewer) components than designed, dependencies between components that the design does not define, and components that implement unexpected functionality.

Such discrepancies can be prevented by regularly evaluating both the designed and implemented architecture. By involving the developers as well as the architects in these evaluations, the implemented and the designed architecture can evolve together. Many evaluation methods are available, varying greatly in depth, scope and required resources. A valuable outcome of such an evaluation is an up-to-date overview of the implemented architecture and the corresponding design.

But when should such an evaluation take place? Depending on the resources required, the evaluation can take place once or twice during a project, or periodically (for example every month). Unfortunately, issues can still arise in between evaluations, which may lead to deviations between the design and the implementation. The later these deviations are discovered, the more costly it is to fix them.

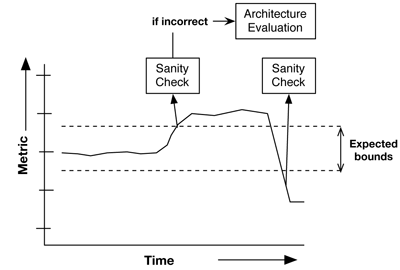

One way to deal with these problems is to continuously monitor important aspects of the implemented architecture by means of software metrics. Basic metrics, for example the number of components, are straightforward to calculate after each change and can be used as a trigger for performing a sanity check as soon as a metric moves outside its expected bounds. If the sanity check fails (i.e. when the change is incorrect), a more detailed evaluation should be performed, potentially leading to a full-scale architecture evaluation. See Figure 1 for an illustration of this process.

Figure 1: The process of continuous architecture evaluation by means of metrics. Simple metrics characterizing the architecture are measured over time. As soon as a large deviation occurs, a sanity check is performed, potentially leading to a full-scale architecture evaluation if the change turns out to be incorrect.
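The trigger step of this process can be sketched in a few lines of Python. This is only a minimal illustration: the `Bounds` class, the function name, the bounds of 6 to 10 components and the measured values are all hypothetical and would have to be chosen per project.

```python
from dataclasses import dataclass


@dataclass
class Bounds:
    """Expected range for a metric (hypothetical, project-specific limits)."""
    lower: float
    upper: float

    def contains(self, value: float) -> bool:
        return self.lower <= value <= self.upper


def snapshots_needing_sanity_check(history, bounds):
    """Return the indices of snapshots whose metric value lies outside the bounds."""
    return [i for i, value in enumerate(history) if not bounds.contains(value)]


# Number of components measured after each change (made-up values).
component_counts = [8, 8, 9, 9, 14, 9]
flagged = snapshots_needing_sanity_check(component_counts, Bounds(lower=6, upper=10))
print(flagged)  # prints [4]
```

Only the flagged snapshot would be handed to a human for a sanity check; all other changes pass without further effort.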

Basic metrics (number of modules, number of connections) are easy to measure and provide relevant information. However, an examination of these two metrics in isolation does not allow for detection of all types of change, for example when a single component is implementing too much of the overall functionality.

In our current research project we are extending the set of available architecture metrics with new metrics that relate to the quality aspects defined in ISO/IEC 9126. More specifically, we have designed and validated two new metrics which quantify the Analyzability and the Stability of an implemented software architecture.

The first is called “Component Balance”. This metric takes into account the number of components as well as the relative sizes of the components. Owing to the combination of these two properties, a system receives a low score when it has very many or very few components, and also when one or two components contain most of its functionality. We validated this metric against the assessment of experts in the field of software quality assessments by means of interviews and a case study. The overall result is a metric which is easy to explain, can be measured automatically and can therefore be used as a signaling mechanism for either light-weight or more involved architecture evaluations.
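To make the idea concrete, the sketch below combines the two properties into a single score between 0 and 1. It is not the definition used in our work: the choice of the Gini coefficient for size uniformity, the hypothetical `count_score` function and its range of 4 to 10 components are assumptions made purely for illustration.

```python
def gini(sizes):
    """Gini coefficient of the sizes: 0 for equal sizes, close to 1 if one dominates."""
    n = len(sizes)
    total = sum(sizes)
    if n == 0 or total == 0:
        return 0.0
    ordered = sorted(sizes)
    weighted = sum((i + 1) * s for i, s in enumerate(ordered))
    return (2 * weighted) / (n * total) - (n + 1) / n


def count_score(n, low=4, high=10):
    """Hypothetical score for the component count: 1.0 inside [low, high], lower outside."""
    if n <= 0:
        return 0.0
    if n < low:
        return n / low
    if n > high:
        return high / n
    return 1.0


def balance_score(sizes):
    """Illustrative balance score: component-count score times size uniformity."""
    return count_score(len(sizes)) * (1.0 - gini(sizes))


evenly_sized = [10, 10, 10, 10, 10, 10]   # six components of equal size
one_dominant = [5, 5, 5, 5, 5, 175]       # one component holds most of the code
too_few = [100, 100]                      # only two components
```

Under these assumptions `balance_score(evenly_sized)` is higher than both `balance_score(one_dominant)` and `balance_score(too_few)`, which mirrors the behaviour described above.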

The other concept we introduced is the “Dependency Profile”. For this profile each module (i.e. a source file or class) is assigned to one of four categories:

- modules hidden inside a component

- modules that are part of the required interface of a component

- modules that are part of the provided interface of a component

- modules that are part of both the required and the provided interface of a component.

Summing the sizes of the modules within each category provides a system-level quantification of the encapsulation of a software system. The metric was validated in an empirical experiment in which the changes that occurred within a system were correlated with the values of each of the four categories. The main conclusion of the experiment is that the more code is encapsulated within the components of a system, the more changes remain localized to a single component. The profile thus provides a measure of the level of encapsulation of a system which can be calculated on a single snapshot of the system.
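The categorization and summation can be sketched as follows. The sketch assumes that a module belongs to the required interface when it depends on a module in another component, and to the provided interface when a module in another component depends on it. The function name, the input format and the example system are hypothetical.

```python
CATEGORIES = ("hidden", "required", "provided", "both")


def dependency_profile(component_of, size_of, dependencies):
    """Sum module sizes per category.

    component_of: module name -> component name
    size_of:      module name -> size (for example lines of code)
    dependencies: iterable of (source_module, target_module) pairs
    """
    requires, provides = set(), set()
    for source, target in dependencies:
        # Only dependencies that cross a component boundary affect the interfaces.
        if component_of[source] != component_of[target]:
            requires.add(source)
            provides.add(target)

    totals = dict.fromkeys(CATEGORIES, 0)
    for module, size in size_of.items():
        if module in requires and module in provides:
            totals["both"] += size
        elif module in requires:
            totals["required"] += size
        elif module in provides:
            totals["provided"] += size
        else:
            totals["hidden"] += size
    return totals


# Made-up example system with three components.
component_of = {"ui.View": "ui", "ui.Widget": "ui", "core.Api": "core",
                "core.Engine": "core", "db.Store": "db"}
size_of = {"ui.View": 100, "ui.Widget": 300, "core.Api": 200,
           "core.Engine": 300, "db.Store": 100}
dependencies = [("ui.View", "ui.Widget"), ("ui.View", "core.Api"),
                ("core.Api", "core.Engine"), ("core.Api", "db.Store")]

profile = dependency_profile(component_of, size_of, dependencies)
print(profile)  # prints {'hidden': 600, 'required': 100, 'provided': 100, 'both': 200}
```

In this example 600 of the 1000 lines are hidden inside components. Dividing each total by the overall size would turn the profile into percentages that can be compared across systems.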

Both metrics have been shown to be useful in isolation. We are taking the next step by determining how these metrics can best be combined in order to reach a well-balanced evaluation of an implemented architecture. To answer the question of when a more elaborate evaluation should take place, we are planning to determine appropriate thresholds for these two metrics. The combined results of these studies should allow the metrics to be embedded within new and existing processes for architecture evaluations.

Links:

http://www.sig.eu/en/Research/Key%20publications/Archive/923.html

http://www.sig.eu/nl/Research/Wetenschappelijke%20publicaties/1023/__Dependency_Profiles_for_Software_Architecture_Evaluations__.html

Please contact:

Eric Bouwers,

Software Improvement Group,

The Netherlands

Tel: +31 20 314 09 50,

E-mail:

Arie van Deursen

Delft Technical University,

The Netherlands

E-mail: