Comparing tools is a difficult task because standard benchmarks are usually not available. We propose a Web application that supports a collaborative environment for the comparative analysis of design pattern detection tools.

Design pattern detection (DPD) is a topic that has received much attention in recent years. Finding design pattern (DP) instances in a software system can give useful hints for the comprehension and evolution of the system and can provide insight into the design rationale adopted during its development. Identifying design patterns is also important during the re-documentation process, in particular when the documentation is poor, incomplete, or out of date.

Several DPD approaches and tools have been developed, exploiting different detection techniques such as fuzzy logic, constraint solving, theorem provers, template matching, and classification. However, the results obtained by these tools are often unsatisfactory and differ from one tool to another. Many tools report pattern candidates that are false positives while missing correct instances. One common difficulty in DPD is the so-called variant problem: a DP can be implemented in several different ways. These variants cause most pattern recognition tools to fail when they use rigid detection approaches based only on canonical pattern definitions.

As a result, it is difficult to perform a comparative analysis of design pattern detection tools, and only a few benchmark proposals for their evaluation can be found in the literature. In order to establish which approaches and tools perform best, it is important to agree on standard definitions of design patterns and to be able to compare results. The adoption of a standard benchmark by the DPD community would improve cooperation among researchers and promote the reuse of existing tools instead of the development of new ones.

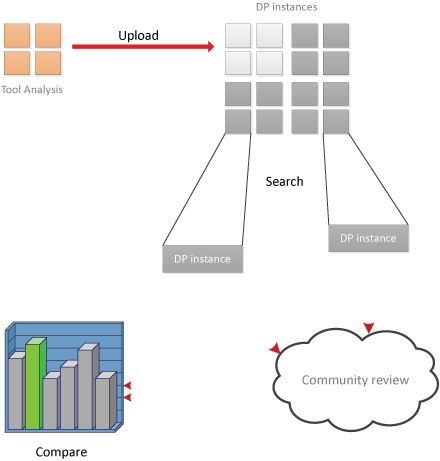

With this goal in mind, we have developed a benchmark web application to compare the results of DPD tools (see Figure 1). Subsequently we aim to extend and refine this application through the involvement of the community.

Figure 1: A schema of the benchmark definition process. Users upload tool analyses, contribuiting to the build up of a repository of DP instances. Each instance can be searched, analyzed and reviewed. The resulting data are used to create a benchmark of the associated tools by comparing their estimated precision rates.

Our approach is characterized by:

- a general meta-model for design pattern representation;

- a new way to compare the performance of different tools;

- the ability to analyze instances and validate their correctness.
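To make these three points concrete, the following is a minimal sketch of how a tool-independent instance record and a review-based precision estimate could fit together. It is not the application's actual meta-model or comparison algorithm: the class names, the majority-vote rule, and the tool names `ToolA` and `ToolB` are all assumptions introduced for illustration.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class RoleBinding:
    """Hypothetical: binds a pattern role to a code element."""
    role: str      # e.g. "Subject"
    element: str   # e.g. a fully qualified class name


@dataclass
class PatternInstance:
    """Hypothetical tool-independent record of one detected instance."""
    pattern: str
    tool: str
    roles: FrozenSet[RoleBinding]
    # One entry per user review: True means "judged correct".
    votes: List[bool] = field(default_factory=list)

    def verdict(self) -> Optional[bool]:
        """Majority vote (an assumption); None if unreviewed or tied."""
        up = sum(self.votes)
        down = len(self.votes) - up
        if up == down:
            return None
        return up > down


def estimated_precision(instances, tool: str) -> Optional[float]:
    """Share of a tool's reviewed instances that reviewers judged correct."""
    verdicts = [i.verdict() for i in instances if i.tool == tool]
    judged = [v for v in verdicts if v is not None]
    if not judged:
        return None  # nothing reviewed yet, so no estimate
    return sum(judged) / len(judged)


# Illustrative data only; none of it comes from a real analysis.
observer = frozenset({
    RoleBinding("Subject", "app.Model"),
    RoleBinding("Observer", "app.View"),
})
instances = [
    PatternInstance("Observer", "ToolA", observer, [True, True, False]),
    PatternInstance("Singleton", "ToolA",
                    frozenset({RoleBinding("Singleton", "app.Config")}),
                    [False]),
    PatternInstance("Adapter", "ToolA",
                    frozenset({RoleBinding("Adapter", "app.Wrapper")})),
    PatternInstance("Observer", "ToolB", observer, [True]),
]

print(estimated_precision(instances, "ToolA"))  # reviewed instances only
print(estimated_precision(instances, "ToolB"))
```

In this sketch, unreviewed instances are excluded from the estimate rather than counted as wrong, so a tool is not penalized for results that nobody has validated yet. A different comparison rule could be substituted by replacing `verdict` or `estimated_precision`.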

Our aim is not only to introduce a “competition” between tools but also to create a container for design pattern instances that, through user testing and evaluation, will lead to the building of a large and “community validated” dataset for tool testing and benchmarking.

We are interested in integrating other comparison algorithms, preferably after discussion with and input from the DPD community, so that users can choose the algorithm they consider most appropriate. We are also investigating the possibility of providing a web service that allows registered users to upload their analyses into the application automatically.

We have included in our benchmark application the contents of PMARt, a repository developed by Y.-G. Guéhéneuc that contains pattern identification analyses of specific versions of different open source programs, in order to provide a good reference for comparing results and identifying correct instances. In addition, we have already uploaded some results provided by the following DPD tools: WoP, developed by J. Dietrich and C. Elgar, and DPD-Tool, developed by N. Tsantalis, A. Chatzigeorgiou, G. Stephanides, and S. T. Halkidis.

We are interested in testing the correctness and completeness of our approach by exchanging data with other applications and models, such as DEEBEE (DEsign pattern Evaluation BEnchmark Environment), a web application for evaluating and comparing design pattern detection tools developed by L. Fulop, R. Ferenc, and T. Gyimothy.

The availability and simple interchange of DPD results could also support DPD techniques such as those proposed by G. Kniesel and A. Binun, who compare different design pattern detection tools and propose a novel approach based on a synergy of proven techniques.

Through our online web application, we intend to provide a flexible underlying model and to support the comparison of DPD results in a collaborative environment.

Link: http://essere.disco.unimib.it/DPB/

Please contact:

Andrea Caracciolo

University of Milan-Bicocca, Italy

E-mail: