by Alan Payne and Peter Fry

We are in the process of developing a system for taking museum and gallery visitors on a journey of discovery through a digital collection. It is entirely image-led and allows the visitor to wander through a collection following semantic relationships between objects in the collection.

In recent years, many collection owners from museums, art galleries and libraries have put a lot of effort into digitizing their collections. This involves either document scanning or careful photography of the artefacts then combining the resulting digital images in a database with textual descriptions of the artefacts. Following this exhaustive process, the standard method of accessing the newly-formed digital collection is by keyword searching, often along with some kind of structured categorization and browsing of image thumbnails. This suits the expert and other visitors who know exactly what they are looking for, but for many visitors who just want a general exploration of a collection, current systems don't work well.

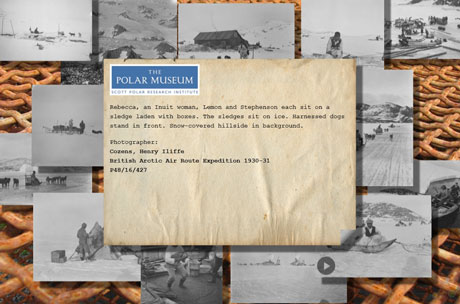

We have developed a system for visual semantic browsing - ViziQuest. This allows the visitor to explore a collection in a very different way. It is entirely image-led and takes the visitor on a journey of discovery through a collection based on the visitor's individual selection of images that they find interesting. The visitor is presented with a montage of images; Figure 1, for instance, shows a central image surrounded by other images from the collection which are related by varying degrees to the central image. By selecting one of the surrounding images that interests them, this image moves to the centre of the display and is surrounded by new images that are related to this selected one (Figure 2). The textual descriptions are readily accessed by "flipping" the image (Figure 3).

Figure 1: A central image surrounded by other images from the collection which are related by varying degrees to the central image.

Figure 2: A selected image moves to the centre of the display and is surrounded by new images that are related to this selected one.

Figure 3: The textual descriptions are readily accessed by "flipping" the image.

The collection owner simply supplies their database of images and descriptive texts. There is no further "pre-work" to be done on the data by the collection owner. We carefully examine the collection and build an ontology to describe the scope and depth of the collection. Ideally, this ontology is reviewed and refined with the collection owner or other domain expert. The ontology is then used to direct the building of relationships between the artefacts using Natural Language Processing (NLP) techniques.

A system based on this work has been installed at the Scott Polar Museum in Cambridge, UK. It is proving very popular with visitors, both "experts" and casual visitors, and with all age groups. The image-centric nature of the system (it requires no textual input whatever) acts as a clear draw. The museum curators are delighted with it, particularly in the fact that it is enabling visitors to unearth images from all parts of the collection, not just a narrow slice as would be found with a traditional search.

The basic system has now been considerably enhanced by the addition of audio and movie files that were part of an archive from a further polar collection. This new system has recently been installed in the museum, where again visitor feedback is very positive.

A current development project is to build some targeted educational elements into the browsing experience. We are testing a modified version of ViziQuest with a group of children (aged 9-11 years old) from a local primary school. A crucial element of this stage in their learning is the ability to create and format narratives, both fictional and factual. The system provides nuance to the narrative by calling up related images and exploits the textual descriptions in the museum digital collections. This modified version requires the children to select images for hard copy that act as props for them to build a story around. In this way they are producing their personalized stories and enriching them in ways not possible with ordinary search methods. Serendipitous browsing, favoured by the semantic browser, promotes lateral thinking which in turn has a strong role in creativity. By providing the tools described, the students will better understand the formal aspects of narrative and appreciate what constitutes good practice in storytelling and report writing. As well as assisting the pupils with their story-telling skills, exploring the collection in this way inevitably teaches them about the collection as a whole which in many cases has stimulated them to pay a live visit to the museum where the collection resides.

Visual semantic browsing provides a new way to access digital collections, opening up collections to a wider audience, unearthing hidden depths of collections and extending the use of the hard work involved in digitization projects.

Please contact:

Alan W Payne

Deep Visuals Limited, Cambridge, UK

Tel: +44 (0)1223 437163

E-mail:

www.deepvisuals.com