by Henrik I Christensen

Over the last two decades the Internet has been a game changer. It has changed how we interact with people, and the world is becoming flat in the sense that we can easily access people and resources across the globe. It is, however, characteristic that so far the Internet has primarily been used for the exchange of information. The premise here is that the next revolution will happen when the Internet is connected to the physical world, as is typically seen in robotics.

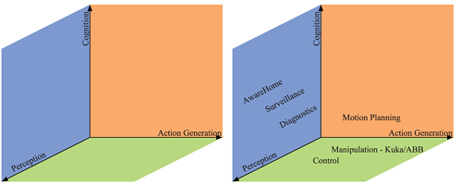

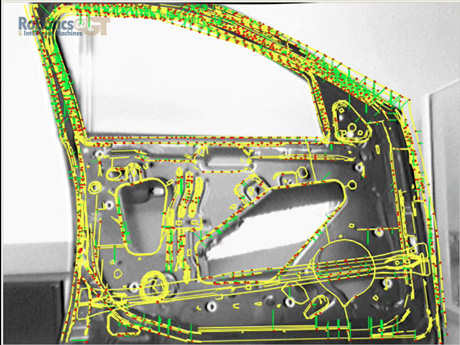

Robot systems are composed of three components: actuation, perception and cognition. Actuation is the physical generation of motion in the environment. Perception is the estimation of the state of the environment and of the agents within it. Finally, cognition is the acquisition of information about the environment and the use of such information to reason about past, current and future events. We can consider perception, actuation and cognition to span a three-dimensional space, as shown in Figure 1. More than 10,000,000 robots are used industrially, but less than 5% of them have sensors in the outer control loop. That is, the motion is primarily pre-programmed. There is thus a need to move away from the actuation axis and into the green plane, where perception/sensing is integrated into the control loop to provide increased flexibility and adaptability to changes in the environment. We are starting to see such integration of sensing, for example in visual servoing as part of assembly operations, as illustrated in Figure 3. In this example the assembly of a car door is studied. Robot assembly of a car door is one of the most challenging tasks in car manufacturing and thus represents a suitable benchmark.

In the perception-cognition plane there has been a rich body of research on activity recognition, intelligent home environments and smart diagnostics. In the actuation-cognition plane there have been a number of new efforts on intelligent search and planning, such as smart motion planning and exploration strategies. It is, however, characteristic that there have been relatively few efforts to deploy systems that integrate all three aspects beyond toy domains or outside the research laboratory.
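The difference between pre-programmed motion and motion with a sensor in the outer control loop can be illustrated with a minimal one-dimensional sketch. It is not taken from the car door study: the function names, the proportional gain and the 0.3 displacement of the part are all assumptions chosen for illustration, and the "sensor" is simply a function that returns the true target position.

```python
def open_loop(start, planned_target, steps=50):
    """Pre-programmed motion: follow the planned path, never sensing the real target."""
    pos = start
    step = (planned_target - start) / steps
    for _ in range(steps):
        pos += step
    return pos


def closed_loop(start, sense_target, gain=0.2, steps=50):
    """Sensor in the outer loop: at each step, measure the target and reduce the error."""
    pos = start
    for _ in range(steps):
        error = sense_target() - pos   # perception: measured offset to the target
        pos += gain * error            # actuation: proportional correction (assumed gain)
    return pos


planned = 1.0   # where the program expects the part to be (hypothetical value)
actual = 1.3    # where the part really is after being displaced (hypothetical value)

open_err = abs(actual - open_loop(0.0, planned))
closed_err = abs(actual - closed_loop(0.0, lambda: actual))

print(f"open-loop error:   {open_err:.4f}")
print(f"closed-loop error: {closed_err:.6f}")
```

In this sketch the pre-programmed motion ends where the plan said the part would be, so the full displacement remains as error, while the loop with sensing converges on the part's actual position.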

Figure 1 (left): The space spanned by perception, actuation and cognition.

Figure 2 (right): Example applications in the robotics space.

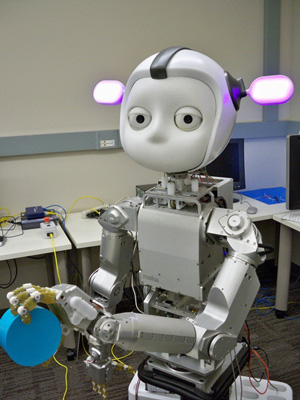

An important aspect of the design of next-generation robot systems is the integration of effective user interfaces. Traditionally, robots could be used only after a multi-day training course, unless the functionality was simple enough to allow the use of very basic interface modalities, such as playing sound patterns to indicate different states. As more and more functionality is integrated into systems, there is a need to provide richer modalities for interaction. We have already started to see voice dialog systems on smart phones, and when these are integrated with methods for gaze estimation and gesture interpretation it is possible to provide comprehensive interfaces. People have traditionally developed interaction patterns for pets, toys, etc. It is no surprise that more than 50% of all Roomba owners name their robot! Leveraging the fact that people bond with pets and with popular technologies such as GPS units, computer game characters and smart robots, it is possible to consider a new generation of robot systems. One approach is the use of humanoid-type robots for interaction with people without a need for extensive training. An example is the robot Simon, developed by Prof. Andrea Thomaz at the Georgia Institute of Technology and shown in Figure 4. Through the use of an articulated interface that has facilities for speech, gesture and gaze interaction, it is possible to design rich interfaces that can be used by non-expert users such as children. Typically there is no need to train people prior to deployment of the system for children's play, for training of assembly actions in manufacturing, or for elderly care. For cognitive systems that are to be deployed in general scenarios it is particularly important to consider the use of embodied interfaces that lower the bar for adoption.

Figure 3: Example of using vision to drive a robot as part of assembly operations.

Figure 4: The robot Simon used for social HRI studies (Courtesy of Prof. A. Thomaz, RIM@GT).

There is a tremendous need for robot systems that provide assistance to elderly and disabled people. As an example, there are more than 400,000 stroke victims in the US, and many of them could live independently if they had a little help. Examples would be delivery of food, picking up items from the floor, and recovery of lost items such as glasses or a remote control. Today people can request assistance from canines or trained monkeys. Clearly many of the required functions could also be achieved with a robot system. The challenge here is to provide a robust system that can be operated after a minimum of training. One such example is the robot EL-E, developed by Prof. Charlie Kemp at Georgia Tech and shown in Figure 5. It has been developed for basic delivery tasks and for the pickup and delivery of medicine to a person who is mobility impaired. The robot has been tested with great success in field trials for adoption by real clients. The current challenge is whether it can be commercialized at a cost of less than $10,000, so that it is competitive with alternative approaches.

Figure 5: The robot EL-E developed at Georgia Tech for studies of assistance to elderly/disabled people (Courtesy of Prof. C. Kemp, RIM@GT).

Robots endowed with cognitive capabilities that enable communication, interaction and recovery are without doubt the next wave. The confluence of interface technology, cheap actuation and affordable computing with new methods in learning and reasoning will pave the way for a new generation of systems that move away from the factory floor and into the daily lives of citizens across cultures, ages and needs. This is an exciting time.

Please contact:

Henrik I Christensen,

Robotics and Intelligent Machines,

Georgia Institute of Technology, USA

E-mail: