by Tanja E. J. Vos

The Future Internet will be a complex interconnection of services, applications, content and media, on which our society will become increasingly dependent for critical activities such as social services, learning, finance and business, as well as entertainment. This poses challenging problems for testing, and these challenges simply cannot be avoided, since testing is a vital part of the quality assurance process. The European-funded project FITTEST (Future Internet Testing) aims to tackle the problems of testing the Future Internet with Evolutionary Search Based Testing.

The Future Internet (FI) will be a complex interconnection of services, applications, content and media, possibly augmented with semantic information, and based on technologies that offer a rich user experience, extending and improving current hyperlink-based navigation. Our society will be increasingly dependent on services built on top of the FI for critical activities such as public utilities, social services, government, learning, finance and business, as well as entertainment. As a consequence, the applications running on top of the FI will have to satisfy strict and demanding quality and dependability standards.

FI applications will be characterized by an extreme level of dynamism. Most decisions that are normally made at design or deployment time will be deferred to execution time, when the application takes advantage of monitoring (self-observation, as well as data collection from the environment and logging of the interactions) to adapt itself to a changed usage context. Realizing this vision involves a number of technologies, including:

- Observational reflection and monitoring, to gather data necessary for run-time decisions.

- Dynamic discovery/composition of services, hot component loading and update.

- Structural reflection, for self-adaptation.

- High configurability and context awareness.

- Composability into large systems of systems.

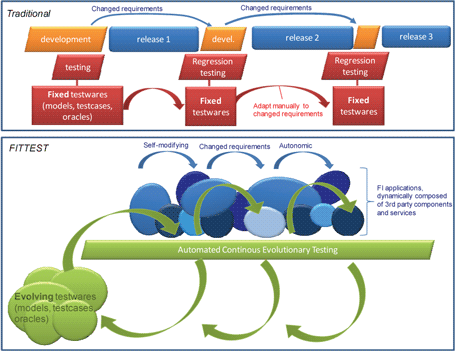

While offering a major improvement over the currently available Web experience, the complexity of the technologies involved in FI applications makes testing them extremely challenging. Some of the challenges are described in Table 1. The European project FITTEST (ICT-257574, September 2010-2013) aims to address the testing challenges in Table 1 by developing and evaluating an integrated environment for continuous evolutionary automated testing, which can monitor the FI application under test and adapt to the dynamic changes observed.

Today's traditional testing usually ends with the release of a planned product version, and testing is discontinued after the application is delivered. All testware (test cases as well as the underlying behavioural model) is constructed and executed before the software is delivered. This testware is fixed, because the System Under Test (SUT) has a fixed set of features and functionalities: its behaviour is determined before its release. If the released product needs to be updated because user requirements have changed or severe bugs must be repaired, a new development cycle starts and regression testing is needed to ensure that the previous functionalities still work with the new changes. The fixed testware, designed during pre-release testing, must then be adapted manually to cope with the changed requirements, functionalities, user interfaces and/or workflows of the system (see Figure 1 for a graphical representation of traditional testing).

| Challenge | Description |
|---|---|
| 1. Dynamism, self-modification and autonomic behaviour | FI applications are highly autonomous; their correct behaviour cannot be specified and modelled precisely at design time. |
| 2. Low observability | FI applications are composed of an increasing number of third-party components and services, accessed as black boxes, which are hard to test. |
| 3. Asynchronous interactions | FI applications are highly asynchronous and hence harder to test. Each client submits multiple requests asynchronously; multiple clients run in parallel; server-side computations are distributed over the network and concurrent. |
| 4. Time and load dependent behaviour | For FI applications, timing and load conditions make it hard to reproduce errors during debugging. |
| 5. Huge feature configuration space | FI applications are highly customizable and self-configuring, with a huge number of configurable features, such as context-, user- and environment-dependent parameters, that need to be tested. |
| 6. Ultra-large scale | FI applications are often systems of systems; traditional testing adequacy criteria, such as coverage, cannot be applied. |

Table 1: Future Internet Challenges.

FI testing will require continuous post-release testing, since the application under test does not remain fixed after its release: customers could dynamically add services and components, and the intended use could change significantly. Therefore, testing has to be performed continuously after deployment to the customer.

The FITTEST testing environment will integrate, adapt and automate various techniques for continuous FI testing (e.g., dynamic model inference, model-based test case derivation, log-based diagnosis, oracle learning, classification trees and combinatorial testing, concurrency testing and regression testing). To enable these techniques to deal with the huge search space associated with FI testing, evolutionary search based testing will be used. Search Based Software Testing (SBST) is the basis of test case generation in FITTEST, since it can be adopted even in the presence of the dynamism and partial observability that characterize FI applications. The key ingredients required by search algorithms are:

- an objective function that measures the degree to which the current solution (e.g., a test case) achieves the testing goal (e.g., coverage or the exposure of concurrency problems);

- operators that modify the current solution, producing new candidate solutions to be evaluated in next iterations of the algorithm.
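To make the two ingredients concrete, the following is a minimal Python sketch of a search based test generator. Everything in it is hypothetical: a toy `sut` function with four branches stands in for an FI application, the `objective` function counts the distinct branches a test suite covers, and the `mutate` operator perturbs one input of one test case. The search is a simple random-mutation hill climber, not the evolutionary algorithms FITTEST builds on.

```python
import random

NUM_BRANCHES = 4  # number of branch labels the hypothetical SUT can return


def sut(x: int, y: int) -> str:
    """Hypothetical system under test; returns the label of the branch it takes."""
    if x > y:
        if x - y > 100:
            return "far_above"
        return "above"
    if x == y:
        return "equal"
    return "below"


def objective(suite: list[tuple[int, int]]) -> int:
    """Objective function: number of distinct branches covered by the test suite."""
    return len({sut(x, y) for x, y in suite})


def mutate(suite: list[tuple[int, int]], rng: random.Random) -> list[tuple[int, int]]:
    """Mutation operator: perturb one input of one randomly chosen test case."""
    candidate = list(suite)
    i = rng.randrange(len(candidate))
    x, y = candidate[i]
    if rng.random() < 0.5:
        x += rng.randint(-20, 20)
    else:
        y += rng.randint(-20, 20)
    candidate[i] = (x, y)
    return candidate


def hill_climb(suite_size: int = 4, iterations: int = 5000, seed: int = 0):
    """Random-mutation hill climbing over whole test suites."""
    rng = random.Random(seed)
    current = [(rng.randint(-100, 100), rng.randint(-100, 100)) for _ in range(suite_size)]
    best = objective(current)
    for _ in range(iterations):
        if best == NUM_BRANCHES:
            break  # every branch is covered; stop early
        candidate = mutate(current, rng)
        score = objective(candidate)
        if score >= best:  # accept ties so the search can drift across plateaus
            current, best = candidate, score
    return current, best
```

Accepting candidates with an equal objective value lets the search wander across plateaus, but a plain coverage count still gives no guidance towards a narrow branch such as `x == y`; this is why finer-grained objective functions are usually preferred for input data generation.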

Figure 1: Traditional testing versus FITTEST testing.

Such ingredients can usually be obtained even in the presence of high dynamism and low observability. Hence, most techniques developed to address the project’s objectives are expected to take advantage of a search based approach. For example, model inference for model-based testing requires execution scenarios that limit under-approximation. We can generate them through SBST, with the objective of maximizing the portion of equivalent states explored. Oracle learning needs training data that are used to infer candidate specifications for the system under test. We can select the appropriate executions through SBST, using a different objective function (e.g., maximizing the level of confidence and support for each inferred property). We will generate classification trees from models and use SBST to generate test cases for them. Input data generation and feasibility checking are natural candidates for search based algorithms, with an objective function that quantifies how close a test case gets to satisfying a path of interest. In concurrency testing, test cases that produce critical execution conditions receive higher values from an objective function defined for this purpose. Coverage targets can be used to define the objective function for coverage and regression testing of ultra-large scale systems.
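The input data generation case can be illustrated with the *branch distance* objective that is widely used in search based test data generation, minimised here with the alternating variable method (AVM), a classic SBST local search. This is a minimal sketch under stated assumptions, not the FITTEST implementation: the path of interest (`x > 100` followed by `y == 42`), the penalty constant `K = 1` and all function names are hypothetical, and for simplicity the two condition distances are summed rather than combined with an approach level.

```python
K = 1  # hypothetical penalty added when a relational predicate is false (integer inputs)


def dist_eq(a: int, b: int) -> int:
    """Branch distance for the predicate a == b (0 when satisfied)."""
    return abs(a - b)


def dist_gt(a: int, b: int) -> int:
    """Branch distance for the predicate a > b (0 when satisfied)."""
    return 0 if a > b else (b - a) + K


def path_distance(x: int, y: int) -> int:
    """Objective for the hypothetical path `if x > 100: if y == 42: ...`.

    Both condition distances are summed; 0 means the path is taken.
    """
    return dist_gt(x, 100) + dist_eq(y, 42)


def avm(start, fitness, max_evals: int = 10_000):
    """Alternating variable method: minimise `fitness` one input at a time."""
    current = list(start)
    best = fitness(*current)
    evals = 1
    improved = True
    while best > 0 and improved and evals < max_evals:
        improved = False
        for i in range(len(current)):
            for direction in (-1, 1):
                step = direction  # exploratory move of size 1
                while evals < max_evals:
                    candidate = list(current)
                    candidate[i] += step
                    value = fitness(*candidate)
                    evals += 1
                    if value >= best:
                        break  # no improvement in this direction
                    current, best, improved = candidate, value, True
                    step *= 2  # pattern move: accelerate while it keeps improving
    return current, best
```

Calling `avm((0, 0), path_distance)` drives the distance to 0 and returns an input that takes the path. Because AVM changes one variable at a time, it can stall when a condition couples several inputs (e.g., `x == 2 * y`); search based tools typically counter this with restarts or with population-based evolutionary algorithms.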

SBST represents the unifying conceptual framework for the various testing techniques that will be adapted for FI testing in the project. Implementations of these techniques will be integrated into a unified environment for continuous, automated testing of FI applications. The extent to which the project objectives are actually achieved will be quantified through a number of case studies, designed in accordance with the best practices of empirical software engineering.

The FITTEST project is composed of partners from Spain (Universidad Politécnica de Valencia), the UK (University College London), Germany (Berner & Mattner), Israel (IBM), Italy (Fondazione Bruno Kessler), the Netherlands (Utrecht University), France (Softeam) and Finland (Sulake).

Link:

http://www.pros.upv.es/fittest/

Please contact:

Tanja E.J. Vos,

Universidad Politécnica de Valencia / SpaRCIM

Tel: +34 690 917 971

E-mail: