by Michal Haindl

The entire spectrum of recent scientific research is too complex to be fully and qualitatively understood and assessed by any one person. Hence, scientometry may have its place, provided it is applied with common sense and with constant awareness of its many limits. However, scientometry, when applied as the sole basis for evaluating the performance and funding of research institutions, amounts to an open admission that we are unable to recognize an excellent scientist even if he or she is working nearby.

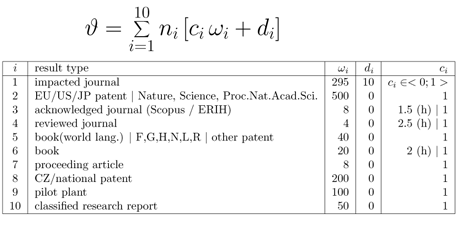

In recent years Czech scientists have been evaluated by the powerful Research and Development Council (RDC), an advisory body to the Government of the Czech Republic. The RDC produces regular annual analyses and assessments of research and development work and proposes budgets for individual Czech research entities. The RDC’s overambitious aim was to produce a single universal numerical evaluation criterion, a magic number used to identify scientific excellence and to determine the distribution of research funding. The resulting simplistic scientometric criterion is shown in Figure 1.

Figure 1: RDC scientometric criterion, where ni is the number of results, and h, F, G, H, N, L, and R denote humanities, design, prototype, legal norm, methodology, map, and software results, respectively. The value of the coefficient ci depends on the journal's impact rank within its discipline.

The RDC noticed the difficulty of comparing the humanities, natural sciences, and technical sciences with one another, so it introduced a corrective coefficient (ci) favouring less prestigious journals and books in the humanities. Apart from the fact that it is impossible to compare a range of diverse disciplines using a single evaluation criterion, this system ignores some common assessment criteria (e.g. citations, grant acquisition, prestigious keynotes, comparison with the state of the art, editorial board memberships, PhDs). Furthermore, the individual weights (wi) are unsubstantiated, and the resulting scores cannot be independently verified.

Negative consequences have become apparent over the last three years. Significant rank changes indicate that scientific excellence can easily be simulated with cunning tactics. For example, eight unverified pieces of software (category R) will outweigh a scientific breakthrough published in the most prestigious high-impact journal within the research area. Some research entities have already opted for this easy route to the government research purse.

Publication cultures vary between disciplines; while some prefer top conferences (computer graphics), elsewhere prestige goes to journals (pattern recognition) or books (history and the arts). Some disciplines have one or two authors per article (mathematics) while others typically have large author teams (medicine). These factors are not taken into consideration by the current system.

Finally, this system recklessly ignores significant differences between the financing systems of different types of research entities. While universities draw a major part of their financing from teaching, the 54 research institutes of the Academy of Sciences of the Czech Republic (ASCR) rely solely on research. Thus last year’s application of the scientometric criterion resulted in the anticipated negative consequences for the Czech research community in general, but especially for its most effective contributor, the Academy of Sciences. ASCR, with only 12% of the country’s research capacity, produces 38% of the country’s high-impact journal papers, 43% of all citations, and 30% of all patents. Nevertheless, the criterion led to a 45% drop in funding for ASCR in 2012. It is little wonder, then, that our research community has become destabilized, that researchers have, for the first time in history, participated in public rallies, and that young researchers are losing motivation to pursue scientific careers. Over 70 international and national research bodies have issued strong protests against the government’s policies (see details at http://ohrozeni.avcr.cz/podpora/), and several governmental round tables have been held in an attempt to solve this crisis.

What is the moral of this scientometric experiment? Any numerical criterion requires humble application, careful feedback on its parameters, and wide acceptance by the scientific community. We must never forget that any criterion is only a very approximate, and considerably limited, model of scientific reality and will never be an adequate substitute for peer review by true experts. In order to avoid throwing out the baby with the bath water, we should consult any criterion on a discipline-specific basis, and only as an auxiliary piece of information. A criterion may be useful for comparing extremes of scientific performance, but it is hardly suitable for assessing subtle differences or breakthrough scientific achievements. Whilst a single number has alluring simplicity, it also carries costly, long-lasting consequences. Excellent research teams are easy to damage by ill-conceived scientometry, but much harder to rebuild.

Links:

http://www.vyzkum.cz/

http://ohrozeni.avcr.cz/podpora/

Please contact:

Michal Haindl

CRCIM (UTIA), Czech Republic

Tel: +420-266052350

E-mail: