by Josiane Zerubia and Louis Hauseux (Inria)

Remote sensing has many civilian and military applications: automatic traffic monitoring, driving behavioral research, surveillance, etc. We work on images taken by satellite. This type of data has specific characteristics, quite different from those of images acquired by other aerial sensors (drones, planes, high-altitude balloons). The new Pléiades Neo satellites have a high native resolution (i.e. without algorithmic processing) of 0.30 meter per pixel and an impressive swath (image width on the ground) of 14 kilometers. These technical characteristics make classical shape-based detection methods unsatisfactory.

This paper has two parts: the first focuses on vehicle detection (see [1]), the second on vehicle tracking (see [2]). The ideas presented in the first part for still images can be adapted to videos.

Geometric Priors for Vehicle Detection

Satellite images or videos provided by constellations such as Pléiades (CNES, Airbus D&S, Thales Alenia Space) and Pléiades Neo (Airbus D&S) or, in the near future, CO3D (CNES, Airbus D&S) are acquired several hundred kilometers above the Earth’s surface. Nevertheless, the high resolution of these satellites makes it possible to obtain detailed images: one pixel represents about 1 meter, and down to 30 cm with the Pléiades Neo satellites. However, the resolution remains much coarser than that of usual images taken from airplanes, balloons or UAVs. Nearby objects may appear merged in the image. Moreover, objects (let’s say vehicles, our favorite objects of interest) look like white blobs. Classical shape-based detection methods using Convolutional Neural Networks (CNNs), which give excellent results for large objects, are therefore not reliable in this case.

Here is our first idea: vehicles are not randomly arranged. The geometric configuration of some objects tells us something about the configuration of the others (in terms of shape or size, alignment, overlap, etc.). We therefore took an existing CNN-based method and combined it with an additional term that accounts for geometry. These ‘geometric priors’ guide the algorithm by favoring certain configurations over others.

Let’s take a closer look: a vehicle is represented by a rectangle defined by its position (the x, y coordinates of its center), its length a, its width b and its angle α with respect to the horizontal axis. We then introduce priors on the possible configurations:

- size prior: a vehicle is a rectangle of a particular shape. From the training set, one can learn what kind of rectangles vehicles look like. This prior prevents the rectangles representing vehicles from being too small, too thin, too large, etc. Two parameters are considered jointly: the rectangle’s area a × b and its aspect ratio r = b ÷ a;

- overlap prior: penalizes pairs of vehicles that overlap;

- alignment prior: rewards a vehicle that is aligned with its neighbors.
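To make the three priors concrete, here is a minimal sketch that scores rectangles (x, y, a, b, α) as described above. Everything numeric is an assumption: the "learned" size statistics are made-up placeholders, the overlap cost is the intersection area divided by the smaller rectangle’s area (computed with Sutherland-Hodgman clipping, valid because rectangles are convex), and the alignment reward is simplified to orientation similarity between neighbors closer than a hypothetical `d_max`. The names (`size_energy`, `overlap_energy`, `alignment_energy`, `total_energy`) are ours, not those of [1].

```python
import math

# A vehicle hypothesis: (x, y, a, b, alpha) = centre, length a, width b,
# angle alpha (radians) with respect to the horizontal axis.

def corners(r):
    """Corners of the rotated rectangle, in counter-clockwise order."""
    x, y, a, b, alpha = r
    c, s = math.cos(alpha), math.sin(alpha)
    local = [(a / 2, b / 2), (-a / 2, b / 2), (-a / 2, -b / 2), (a / 2, -b / 2)]
    return [(x + dx * c - dy * s, y + dx * s + dy * c) for dx, dy in local]

def polygon_area(pts):
    """Shoelace formula (returns 0.0 for an empty polygon)."""
    n = len(pts)
    return 0.5 * abs(sum(pts[i][0] * pts[(i + 1) % n][1] - pts[(i + 1) % n][0] * pts[i][1]
                         for i in range(n)))

def clip(subject, clipper):
    """Sutherland-Hodgman: intersection of a polygon with a convex CCW polygon."""
    def inside(p, a, b):   # p lies on or to the left of edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):   # segment p-q with the infinite line a-b
        (x1, y1), (x2, y2), (x3, y3), (x4, y4) = p, q, a, b
        den = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
        t = ((x1 - x3) * (y3 - y4) - (y1 - y3) * (x3 - x4)) / den
        return (x1 + t * (x2 - x1), y1 + t * (y2 - y1))

    output = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        inp, output = output, []
        for j in range(len(inp)):
            p, q = inp[j - 1], inp[j]   # edge from previous to current vertex
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(intersect(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(intersect(p, q, a, b))
    return output

def size_energy(r, mean_area=60.0, std_area=20.0, mean_ratio=0.45, std_ratio=0.1):
    """Size prior: quadratic cost around (hypothetical) learned area/ratio statistics."""
    area, ratio = r[2] * r[3], r[3] / r[2]
    return (0.5 * ((area - mean_area) / std_area) ** 2
            + 0.5 * ((ratio - mean_ratio) / std_ratio) ** 2)

def overlap_energy(r1, r2):
    """Overlap prior: intersection area as a fraction of the smaller rectangle."""
    inter = polygon_area(clip(corners(r1), corners(r2)))
    return inter / min(r1[2] * r1[3], r2[2] * r2[3])

def alignment_energy(r1, r2, d_max=20.0):
    """Alignment prior (simplified): negative cost for nearby, similarly oriented boxes."""
    if math.hypot(r1[0] - r2[0], r1[1] - r2[1]) > d_max:
        return 0.0
    return -abs(math.cos(r1[4] - r2[4]))

def total_energy(rects, data_energies, w_size=1.0, w_overlap=1.0, w_align=1.0):
    """Per-object data (CNN) energies plus the weighted priors."""
    u = sum(data_energies) + w_size * sum(size_energy(r) for r in rects)
    for i in range(len(rects)):
        for j in range(i + 1, len(rects)):
            u += w_overlap * overlap_energy(rects[i], rects[j])
            u += w_align * alignment_energy(rects[i], rects[j])
    return u
```

For example, two parallel cars side by side, 10 pixels apart, get a lower total energy than the same two cars half overlapping, because only the second configuration pays the overlap cost.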

Mathematically, each prior is a cost function, interpreted as an energy. The total energy of a configuration is the weighted sum of these priors plus the position energy (obtained via CNNs), where the weights are learned in a supervised way. The lower the energy of a configuration, the more likely that configuration is.
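Written out, the total energy can be sketched as below. The notation is ours and the exact terms in [1] may differ: U_data is the CNN-based position energy of each object, x_i ∼ x_j ranges over pairs of neighboring objects, and the Gibbs form p ∝ exp(−U) is the usual way of turning an energy into a probability in marked point process models.

```latex
U(\mathbf{X}) = \sum_{x_i \in \mathbf{X}} U_{\mathrm{data}}(x_i)
  + w_{\mathrm{size}} \sum_{x_i \in \mathbf{X}} U_{\mathrm{size}}(x_i)
  + \sum_{x_i \sim x_j} \Big( w_{\mathrm{ov}}\, U_{\mathrm{ov}}(x_i, x_j)
                            + w_{\mathrm{al}}\, U_{\mathrm{al}}(x_i, x_j) \Big),
\qquad
p(\mathbf{X}) \propto \exp\big(-U(\mathbf{X})\big)
```

Since p decreases as U increases, the most likely configuration is the one that minimizes U.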

Seeking the best (i.e. most likely) configuration therefore amounts to an energy-minimization problem. To solve it, we use stochastic methods such as reversible-jump Markov chain Monte Carlo (RJMCMC).
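A minimal sketch of such a stochastic minimization, under heavy simplifying assumptions: objects are discs of fixed radius instead of rectangles, the CNN output is replaced by a made-up response map (`CENTRES`, `response`), and only birth and death moves are used. This birth-death sampler is the simplest special case of RJMCMC; a full implementation would also include moves such as translating or rotating an object. A temperature T is slowly lowered (simulated annealing) so that the chain concentrates on low-energy configurations. The reference Poisson intensity 1/(width × height) is an assumed choice that keeps the number of objects small.

```python
import math
import random

# Made-up stand-in for the CNN output: Gaussian bumps at three hypothetical vehicle centres.
CENTRES = [(20.0, 20.0), (50.0, 22.0), (80.0, 70.0)]
RADIUS, W_OVERLAP = 5.0, 2.0   # disc radius and overlap-prior weight (assumed values)

def response(p):
    """Hypothetical detector response in [0, 1] at point p."""
    return max(math.exp(-((p[0] - cx) ** 2 + (p[1] - cy) ** 2) / (2 * 3.0 ** 2))
               for cx, cy in CENTRES)

def local_energy(p, others):
    """Energy added by p: data term plus one overlap penalty per intersecting disc."""
    u = 0.5 - response(p)   # negative where the response exceeds 0.5
    return u + W_OVERLAP * sum(1 for q in others if math.dist(p, q) < 2 * RADIUS)

def energy(points):
    """Total energy U(X); each intersecting pair is counted once."""
    return sum(local_energy(p, points[i + 1:]) for i, p in enumerate(points))

def birth_death_anneal(width, height, n_iter=20000, p_birth=0.5,
                       t_start=1.0, t_end=0.01, seed=0):
    """Simulated annealing with birth/death moves (simplest special case of RJMCMC).

    The reference Poisson process has intensity 1 / (width * height) (an assumption),
    so the window area cancels out of the acceptance ratios below.
    """
    rng = random.Random(seed)
    X, U = [], 0.0
    for it in range(n_iter):
        T = t_start * (t_end / t_start) ** (it / (n_iter - 1))   # geometric cooling
        n = len(X)
        if rng.random() < p_birth:
            # Birth: propose a new object uniformly in the window.
            u = (rng.uniform(0, width), rng.uniform(0, height))
            dU = local_energy(u, X)
            log_ratio = -dU / T + math.log((1 - p_birth) / (p_birth * (n + 1)))
            if math.log(1.0 - rng.random()) < log_ratio:
                X, U = X + [u], U + dU
        elif n > 0:
            # Death: propose removing an existing object chosen uniformly.
            k = rng.randrange(n)
            rest = X[:k] + X[k + 1:]
            dU = -local_energy(X[k], rest)
            log_ratio = -dU / T + math.log(p_birth * n / (1 - p_birth))
            if math.log(1.0 - rng.random()) < log_ratio:
                X, U = rest, U + dU
    return X, U
```

Calling `birth_death_anneal(100.0, 100.0)` should end with a few non-overlapping points near the made-up centres; acceptance is tested in log space to avoid overflow at low temperature.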

Our algorithm was trained and tested on the DOTA image dataset. Figure 1 illustrates a difficult situation: the shadow of a large building hides vehicles. (Night-time remote sensing does not yet give good results.) Alignment constraints make it possible to locate the long line of cars parked in the shadow.

Figure 1: Cars parked in the shade are poorly detected by usual algorithms (left); our method detects them (right).

Adaptive Birth for Multiple Object Tracking

Some satellites (such as those of the future CO3D constellation) are equipped with matrix sensors that allow video capture (short videos can also be reconstructed from linear sensors using image processing). These videos have a frame rate of about 1 hertz (i.e. one image per second). As a point of comparison, many cameras shoot at 24 frames per second (fps), a rate at which the human eye perceives motion as smooth. Since time is discrete in videos (it is represented by the frame number), a low frame rate has the same effect as filming high-velocity objects: the same object can move a long distance between two consecutive frames.
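A quick worked example shows the size of the effect. The speed of 50 km/h is an arbitrary illustrative value, and the 0.30 m/pixel ground resolution is the Pléiades Neo figure quoted earlier; the resolution of a given video sensor may differ.

```latex
\begin{aligned}
v &= 50~\text{km/h} = \tfrac{50}{3.6}~\text{m/s} \approx 13.9~\text{m/s},\\
\Delta d_{1\,\text{Hz}} &= v\,\Delta t \approx 13.9~\text{m/s} \times 1~\text{s} \approx 13.9~\text{m}
  \approx \tfrac{13.9}{0.30} \approx 46~\text{pixels},\\
\Delta d_{24\,\text{fps}} &\approx 13.9~\text{m/s} \times \tfrac{1}{24}~\text{s} \approx 0.58~\text{m}
  \approx 1.9~\text{pixels}.
\end{aligned}
```

At 1 Hz, a car in town therefore jumps by dozens of pixels between frames, far more than its own length in pixels, whereas at 24 fps it moves by about two pixels.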

Videos pose an additional challenge compared with images: beyond detecting objects, we also want to track them until they disappear from the video.

Multiple Object Tracking (MOT) in videos is the process of jointly determining how many objects are present and what their states are (position, velocity, label) from noisy sets of measurements. In remote sensing, ground vehicles can appear from incoming roads or parking lots and disappear a few moments later. Additionally, noise sources such as illumination changes, clouds, shadows, wind or rain generate numerous false positive detections. Fortunately, target dynamics in satellite videos are well modeled by linear motion. Therefore, deep-learning approaches designed to track objects in highly cluttered, non-linear scenarios, with millions of parameters and a very high computational cost, seem unsuitable here.
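As an illustration of the linear motion assumption, here is the constant-velocity model commonly used in such trackers, with the prediction step of a Kalman filter written in plain Python. The state layout [x, y, vx, vy] and the white-noise-acceleration process noise with intensity q are assumptions for this sketch; the exact motion model used in [2] is not specified here.

```python
def matmul(A, B):
    """Plain-Python matrix product."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def cv_matrices(dt, q):
    """Constant-velocity transition F and process noise Q for state [x, y, vx, vy]."""
    F = [[1, 0, dt, 0],
         [0, 1, 0, dt],
         [0, 0, 1, 0],
         [0, 0, 0, 1]]
    a, b, c = dt ** 4 / 4, dt ** 3 / 2, dt ** 2   # white-noise-acceleration model
    Q = [[q * a, 0, q * b, 0],
         [0, q * a, 0, q * b],
         [q * b, 0, q * c, 0],
         [0, q * b, 0, q * c]]
    return F, Q

def predict(mean, cov, dt=1.0, q=1.0):
    """Kalman prediction: m' = F m, P' = F P F^T + Q (linear, hence cheap)."""
    F, Q = cv_matrices(dt, q)
    m = [row[0] for row in matmul(F, [[v] for v in mean])]
    P = matmul(matmul(F, cov), transpose(F))
    P = [[P[i][j] + Q[i][j] for j in range(4)] for i in range(4)]
    return m, P
```

For instance, a target at x = 100 pixels moving 46 pixels per frame is predicted at x = 146 one frame (dt = 1 s) later, and its position uncertainty grows with the velocity uncertainty.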

Conceptually, at each time step k (i.e. at frame k), a set of measurements is received. Each measurement in this set is either a false alarm (clutter) or generated by an object. Furthermore, some targets may produce no measurement at all (missed detections). The goal is to estimate the set of real objects. Note that the number of measurements generally differs from the number of real objects, and both may vary over time. The sets of measurements and objects are therefore modeled as Random Finite Sets (sets whose number of elements is itself random and varies at each iteration k).
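The measurement model just described can be simulated in a few lines. This sketch follows the standard assumptions of RFS filters: each target is detected with probability p_detect and then yields one noisy position, and clutter is a Poisson number of points spread uniformly over the image. The parameter names and the Gaussian noise are our choices for illustration.

```python
import math
import random

def poisson(lam, rng):
    """Poisson sample by Knuth's multiplication method (fine for small rates)."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1

def simulate_measurements(targets, p_detect, clutter_rate, width, height, noise_std, rng):
    """One frame's measurement set Z_k.

    Each target (x, y) is detected with probability p_detect (otherwise missed) and
    yields one noisy position; a Poisson number of clutter points is added uniformly.
    """
    Z = []
    for x, y in targets:
        if rng.random() < p_detect:
            Z.append((x + rng.gauss(0.0, noise_std), y + rng.gauss(0.0, noise_std)))
    for _ in range(poisson(clutter_rate, rng)):
        Z.append((rng.uniform(0, width), rng.uniform(0, height)))
    rng.shuffle(Z)   # a set: the order says nothing about which measurement came from where
    return Z
```

With, say, p_detect = 0.9 and clutter_rate = 5, the number of returned measurements changes from frame to frame and generally differs from the number of targets, which is exactly why both are treated as random finite sets.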

Over the past few years, frameworks based on these Random Finite Sets (RFS) have gained popularity thanks to their elegant Bayesian formulation. We have enhanced one RFS filter: the Generalized Labeled Multi-Bernoulli (GLMB) filter. The GLMB filter relies on strong assumptions, such as prior knowledge of the targets’ initial states. We keep track of previous target states and use this information to sample the initial velocities of newborn targets. This addition significantly improves the performance of the GLMB filter on videos with a low frame rate (or, equivalently, with high-velocity objects), which is precisely the case for satellite videos. (For another example, an approximation of an RFS filter by a Gaussian-mixture PHD (GM-PHD) filter, see [3].)
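The idea of reusing past target states for births can be illustrated by a deliberately simplified, hypothetical sampler; it is not the GLMB birth model of [2]. For a measurement that no existing track explains, each candidate newborn state keeps the measured position and draws its velocity from the velocities estimated for previously tracked targets, plus Gaussian jitter; with no history, it falls back to a broad zero-mean prior. The function name and the `jitter_std`/`fallback_std` parameters are our assumptions.

```python
import random

def sample_birth_states(z, past_velocities, n_samples, rng, jitter_std=1.0, fallback_std=10.0):
    """Hypothetical adaptive-birth sampler for one unassociated measurement z = (x, y).

    Returns n_samples candidate states (x, y, vx, vy) for a newborn target.
    """
    states = []
    for _ in range(n_samples):
        if past_velocities:
            # Reuse the empirical velocity distribution of previously tracked targets.
            vx, vy = rng.choice(past_velocities)
            vx += rng.gauss(0.0, jitter_std)
            vy += rng.gauss(0.0, jitter_std)
        else:
            # No history yet: broad, uninformative velocity prior.
            vx, vy = rng.gauss(0.0, fallback_std), rng.gauss(0.0, fallback_std)
        states.append((z[0], z[1], vx, vy))
    return states
```

In a filter, such samples (or a Gaussian mixture fitted to them) would define the birth density. The benefit at low frame rates is that a newborn target on a busy road starts with a plausible velocity instead of zero, so it can be associated with its next measurement even when that measurement lies dozens of pixels away.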

How can two algorithms be compared? Several metrics exist in MOT. Their diversity reflects the variety of cues that can be measured: false positives, false negatives and, among the true trajectories, those that are partially tracked and those that are mostly tracked.

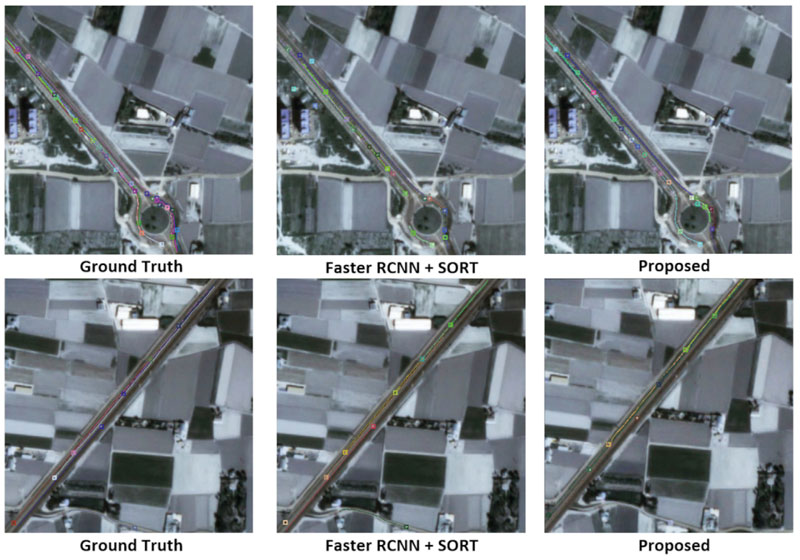

We tested our method on the WPAFB 2009 dataset. Adding our adaptive birth significantly improves the GLMB filter’s results on standard metrics such as MOT Precision (MOTP) and MOT Accuracy (MOTA), as well as on our own metrics. Figure 2 shows very good results on a region that presents fundamental challenges for object trackers: objects move at high speeds, they are often in crowded environments, and trajectories intersect regularly.

Figure 2: Difficult situations for tracking: vehicles are moving fast and there is a roundabout. Each colour represents a track.

Conclusion

Satellite remote sensing is both an important and a very specific domain of computer vision. Algorithms must be designed for this particular use, as illustrated here by two examples: vehicle detection and vehicle tracking. The curious reader can find other examples on our web page [L1].

This work has been conducted by Jules Mabon (PhD), Camilo Aguilar (Postdoc), Josiane Zerubia (Senior Researcher) and more recently Louis Hauseux (Research Engineer), in collaboration with Mathias Ortner (Senior Expert in data science and artificial intelligence at Airbus D&S).

Links:

[L1] https://team.inria.fr/ayana/

[L2] http://www-sop.inria.fr/members/Josiane.Zerubia/index-eng

References:

[1] J. Mabon, J. Zerubia, M. Ortner. Point Process and CNN for small object detection in satellite images. SPIE Image and Signal Processing for Remote Sensing XXVIII, 2022.

[2] C. Aguilar, M. Ortner, J. Zerubia. Adaptive birth for the GLMB filter for object tracking in satellite videos. IEEE International Workshop on Machine Learning for Signal Processing (MLSP), 2022.

[3] C. Aguilar, M. Ortner, J. Zerubia. Small moving target MOT tracking with GM-PHD filter and attention-based CNN. IEEE International Workshop on Machine Learning for Signal Processing (MLSP), 2021.

Please contact:

Josiane Zerubia

Inria, Université Côte d'Azur, France