by Antonis Kakas (University of Cyprus)

How can machines think in a human-like way? How can they argue about and debate issues? Could machines argue on our behalf? Cognitive Machine Argumentation studies these questions based on a synthesis of Computational Argumentation in AI with studies on human reasoning in cognitive psychology, philosophy and other disciplines.

Cognitive AI systems are required to operate in a way that is cognitively compatible with their human users, connecting naturally to human thinking and behaviour. In particular, these systems need to be “explainable, contestable and debatable”, and thus able to adapt their knowledge and operation through rich forms of interaction with humans.

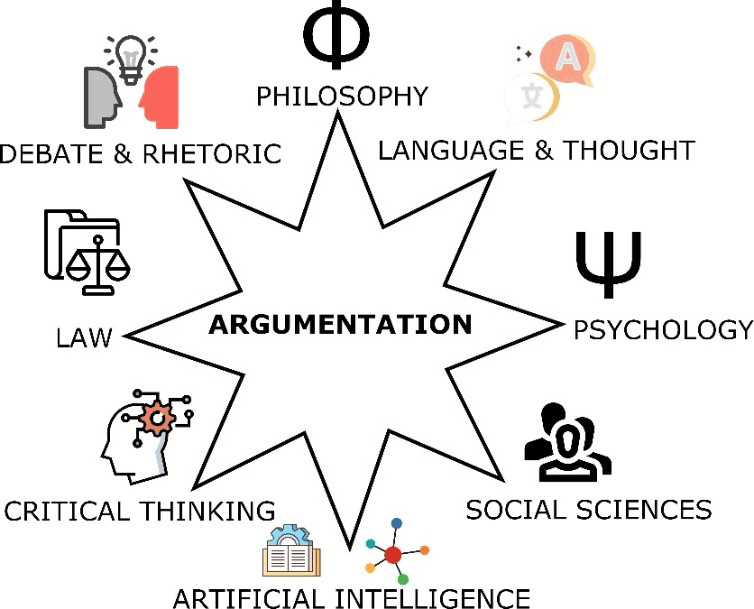

One way to build such systems is to base their design on argumentation, exploiting its strong and natural link to human cognition. The relatively recent work of Mercier and Sperber [1] emphasises that human thinking is regulated by argumentation: humans reason (and are motivated to reason) in order to justify their conclusions against counterarguments that come either from their external social environment or from their own introspection. We can thus consider Computational Argumentation as a possible foundational calculus for Cognitive or Human-centric AI [2]. Nevertheless, to serve the requirements of Cognitive AI well, the formal framework of computational argumentation needs to be enriched with cognitive principles drawn from the many other disciplines in which human reasoning, and in particular human argumentation, is studied (Figure 1).

Figure 1: The interdisciplinary nature of Argumentation.

Cognitive Machine Argumentation is built through such a synthesis of argumentation in AI with elements from these other disciplines, such as cognitive psychology, linguistics, and philosophy. The task is to let these cognitive principles regulate the computation of argumentation, so that it is effective and cognitively viable in the dynamic and uncertain environments in which AI systems are applied. By “humanising” the form of machine argumentation, Cognitive Argumentation aims to facilitate an effective and naturally enhancing human-machine integration.

The framework of Cognitive (Machine) Argumentation has been validated by showing that it models well the empirical data from cognitive science in three classic human reasoning domains: Syllogistic Reasoning, the Suppression task and the Wason Selection task. COGNICA is a system that implements the framework of Cognitive Argumentation. Using argumentation, it accommodates and extends the mental models theory of human conditional reasoning [3] from individual conditionals to sets of conditionals of different types that together form a piece of knowledge on some subject of interest and human discourse. The existence of systems such as COGNICA opens up the opportunity to carry out new systematic empirical studies comparing human and machine reasoning. In particular, such experiments would allow us to examine the nature of human-machine interaction and how Machine Argumentation could be used beneficially alongside the way that humans reason.
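To give a flavour of how argumentation can capture such data, the following minimal sketch illustrates the Suppression task with a standard abstract argumentation framework evaluated under grounded semantics. It is only an illustration and not COGNICA's actual representation or algorithm: the argument names, the choice of a mutual attack between the two arguments, and the function `grounded_extension` are all assumptions made for this example. The scenario is the well-known one where "she has an essay to write" supports "she will study late in the library", until a second conditional about the library being open is added.

```python
def grounded_extension(arguments, attacks):
    """Return the grounded extension of an abstract argumentation framework.

    arguments: set of argument names (hypothetical labels).
    attacks:   set of (attacker, target) pairs.
    The result is the least fixed point reached by repeatedly collecting
    the arguments whose attackers are all defeated by accepted arguments.
    """
    accepted = set()
    while True:
        # Arguments attacked by something already accepted are defeated.
        defeated = {target for (attacker, target) in attacks if attacker in accepted}
        # An argument is defended if every one of its attackers is defeated.
        defended = {
            x for x in arguments
            if all(attacker in defeated
                   for (attacker, target) in attacks if target == x)
        }
        if defended == accepted:
            return accepted
        accepted = defended


# Only the first conditional: "if she has an essay, she studies late in the library".
before = grounded_extension({"essay_so_library"}, set())

# Adding "if the library is open, she studies late in the library" raises a
# counterargument (the library may be closed). Assumption: the two arguments
# attack each other and neither is preferred.
after = grounded_extension(
    {"essay_so_library", "library_may_be_closed"},
    {("library_may_be_closed", "essay_so_library"),
     ("essay_so_library", "library_may_be_closed")},
)

print(before)  # the conclusion is accepted when there is no counterargument
print(after)   # neither argument is sceptically accepted once the counterargument appears
```

In this toy setting the conclusion is drawn when only the first conditional is known and is withheld once the counterargument is available, which mirrors the qualitative pattern of suppression. A cognitively faithful model would additionally need the relative strengths of arguments and the cognitive principles discussed above.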

A programme of experiments has been set up to examine the interaction of humans with COGNICA and its reasoning explanations. The aim of the experiments is to examine the effect that different types of machine explanations can have on human reasoning, ranging from no explanation to visual or verbal explanations, and from summary explanations to extensive analytical explanations. A first pilot experiment has been completed in which participants were asked to answer questions based on some information that typically included three to five conditionals from everyday life. They were then asked to reconsider the questions after being shown the answers of the system together with the explanations (verbal and/or visual) offered by the system. The results of this study show that in around 50% of the cases where the conclusion of the human participants differed from that of the machine, the participants changed their answer when they saw the explanations of the system. It was also observed that this kind of interaction with the system motivates participants to “drift” to more “careful reasoning” as they progress in the experiment, in accordance with the argumentation theory of Mercier and Sperber [1].

We are currently performing new experiments to investigate how this machine-human interaction varies across a population with differing cognitive and personality characteristics. The interested reader can try the type of exercise used in the COGNICA experiments by visiting the links [L1, L2] and following the simple instructions given there.

Links:

[L1] http://cognica.cs.ucy.ac.cy/cognica_evaluation_2022/index.html

[L2] http://cognica.cs.ucy.ac.cy/cognica_evaluation_2022_se/index.html

References:

[1] H. Mercier, D. Sperber, “Why do humans reason? Arguments for an argumentative theory”, in Behavioral and Brain Sciences, 34(2):57–74, 2011.

[2] E. Dietz, A. Kakas, L. Michael, “Argumentation: A calculus for Human-Centric AI”, in Frontiers in Artificial Intelligence, 5, 2022. https://doi.org/10.3389/frai.2022.955579

[3] P.N. Johnson-Laird, R.M.J. Byrne, “Conditionals: a theory of meaning, pragmatics, and inference”, in Psychological Review, 109(4):646–678, 2002.

Please contact:

Antonis Kakas, University of Cyprus, Cyprus