by Christian Thomay and Benedikt Gollan (Research Studios Austria FG), Anna-Sophie Ofner (Universität Mozarteum Salzburg) and Thomas Scherndl (Paris Lodron Universität Salzburg)

We propose applying Cognitive AI methods in multimodal art installations to create interactive systems that learn from their visitors. Researchers and artists from Research Studios Austria FG and the Universität Mozarteum Salzburg have created a multimodal installation in which visitors use hand gestures to interact with the individual instruments of a Mozart string quartet. In this article, we outline this installation and our plans to realise Cognitive AI methods that learn from their users, thereby improving gesture recognition performance as well as usability and user experience.

Cognitive AI – AI systems that apply human-like common sense and learn based on their interactions with humans [1] – is a branch of AI with many applications in areas such as the Internet of Things, cybersecurity, and content recommendation [2]. In installation art in particular, Cognitive AI offers the potential for a fundamental paradigm shift: allowing machines to learn to adapt interaction modalities to their visitors, instead of the visitors adapting to the installation. As a first step towards Cognitive AI in interactive systems, we explore natural, intuitive interaction in an artistic installation.

Computers and interactive art have a storied past, dating back to the 1950s, with early electronic art installations such as Nicolas Schöffer’s CYSP-1 sculpture [L1] moving in reaction to the motions of the people around it. Since the rise of artificial intelligence, artists have begun to use AI algorithms to create visual, acoustic, or multi-sensory art, or to allow visitors to interact with installation art in different ways [3].

A foundation for many installation art projects is the intent to proactively involve their audience, allowing visitors to control and shape immersive experiences in the installation. How explicit this interaction is can be placed on a scale between two extremes. At one end are "random" art systems, where art is created based on visitor behaviour using rules that are either opaque to the audience or entirely non-deterministic. At the other end are explicit interactive systems, where the audience is meant to assume conscious control over the content of the installation. Modern AI art projects fall at various points on this scale, frequently encouraging the visitor to infer the rules without fully spelling them out. This poses technical challenges for identifying user input, which may be vague and fuzzy.

One such art installation is Mozart Contained! [L2], a project in the Spot on MozART [L3] program at the Universität Mozarteum Salzburg. The project was conceived in 2019 as a way to create a new level of perception of the music of Mozart, with an interdisciplinary team of artists from the Mozarteum and researchers from Research Studios Austria FG creating this multimodal art installation (Figure 1). It was first presented in July 2021, and an enhanced version was opened to visitors in October 2022.

Figure 1: Mozart Contained! Installation.

Visitors can use hand gestures to influence the individual voices of Mozart’s "Dissonance" string quartet (KV 465). In each corner of the installation there is a light sculpture representing one instrument (violin 1, violin 2, cello, viola). Visitors interact with a light sculpture using hand gestures, controlling playback and volume of the corresponding track. The light sculpture gives feedback on whether the activation was successful and also visually indicates the volume through more or less pronounced lines and brightness. Visitors can activate each instrument separately: if they activate all four instruments, the quartet is played in full as intended; if instead only a subset of the instruments is activated, visitors can focus solely on those instruments and thus experience Mozart’s music in a new way. This allows both single visitors and several visitors at the same time to create their own interpretation of the piece.

The technical setup consists of a sound system, four ultra-short-throw projectors creating the light sculptures, and two Microsoft Kinect skeleton tracking systems that observe the visitors’ movements and thereby recognise input hand gestures. The interaction concept in Mozart Contained! is at this point entirely rule-based: the system detects gestures performed by the audience and maps them to control inputs to the installation. Here, however, Cognitive AI offers the groundwork for a fundamentally novel approach.
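A rule-based mapping of this kind can be sketched as follows. This is a minimal illustration in Python, not the installation's actual code: the `Skeleton` fields, the hip-to-head volume rule, and the activation threshold are all assumptions made for the example, and in practice the joint heights would come from the skeleton tracking system rather than hand-built values.

```python
from dataclasses import dataclass


@dataclass
class Skeleton:
    """Hypothetical subset of tracked joints; values are heights in metres."""
    head_y: float
    hip_y: float
    right_hand_y: float


def volume_from_hand(skel: Skeleton) -> float:
    """Map hand height linearly from hip (0.0) to head (1.0), clamped to [0, 1]."""
    span = skel.head_y - skel.hip_y
    if span <= 0:
        # Implausible skeleton (head at or below hip): treat as no input.
        return 0.0
    level = (skel.right_hand_y - skel.hip_y) / span
    return max(0.0, min(1.0, level))


def control_input(skel: Skeleton, threshold: float = 0.2) -> dict:
    """Fixed rule (assumed): the instrument is active once the hand is raised
    above `threshold` of the hip-to-head range; volume follows hand height."""
    volume = volume_from_hand(skel)
    return {"active": volume >= threshold, "volume": volume}


if __name__ == "__main__":
    visitor = Skeleton(head_y=1.7, hip_y=1.0, right_hand_y=1.35)
    print(control_input(visitor))
```

A fixed rule like this only responds when the gesture is performed as defined, which is the limitation of a purely rule-based interaction concept.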

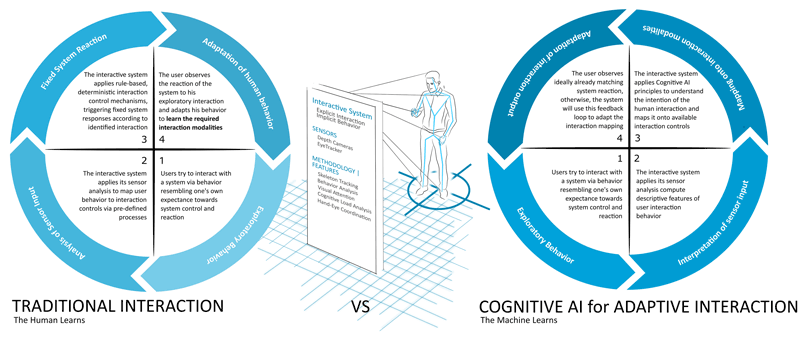

In Mozart Contained!, Cognitive AI offers an inversion of the traditional interaction concept: instead of asking users to learn how to operate the system and relying on exact execution of predefined input patterns, the learning system infers its users’ intent. This has the potential to offer a much more intuitive experience that is true to the visitor's intent; Figure 2 illustrates this shift from traditional to Cognitive AI interaction.

Figure 2: Schematic of traditional interaction vs interaction in a Cognitive AI system.

The two active periods of Mozart Contained! so far have served as a fundamental validation step of the interaction concept and as a data gathering phase. Based on the data collected so far and data to be collected in the future, AI methods can be trained to use kinetic observables of visitors, such as overall position in the installation, body/head/torso orientation, and arm or hand motion, to infer which sculpture they wish to engage with, and with which specific intent. Our aim is to use the collected digital data, together with questionnaire data serving as a baseline of intent and engagement, to build such a Cognitive AI model and thus an interactive system that learns together with its audience. Challenges such a model would face include the non-deterministic nature of human behaviour and the danger of feedback loops, where human participants adapt to the AI system before the AI can learn from their behaviour; we plan to address these through careful curation of algorithm input data, evaluation of participant questionnaire data, and dedicated training sessions.
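To make the idea of inferring the intended sculpture from kinetic observables more concrete, the following Python sketch scores each sculpture by how directly a visitor faces it and how close they stand, then turns the scores into probabilities with a softmax. It is an illustration under stated assumptions, not the planned model: the room coordinates, the assignment of instruments to corners, and the weights `w_align` and `w_dist` are hypothetical, and in a trained system such weights would be learned from the collected data rather than set by hand.

```python
import math

# Hypothetical layout: a 4 m x 4 m room with one sculpture per corner.
SCULPTURES = {
    "violin 1": (0.0, 0.0),
    "violin 2": (4.0, 0.0),
    "cello": (4.0, 4.0),
    "viola": (0.0, 4.0),
}


def intent_probabilities(pos, facing_rad, w_align=3.0, w_dist=0.5):
    """Return a probability per sculpture from position and facing direction.

    pos        -- (x, y) position of the visitor in metres (assumed frame)
    facing_rad -- torso orientation as an angle in radians
    w_align, w_dist -- hand-set illustrative weights, not learned values
    """
    fx, fy = math.cos(facing_rad), math.sin(facing_rad)
    scores = {}
    for name, (sx, sy) in SCULPTURES.items():
        dx, dy = sx - pos[0], sy - pos[1]
        dist = math.hypot(dx, dy)
        # Cosine of the angle between facing direction and direction to sculpture.
        align = 1.0 if dist == 0 else (dx * fx + dy * fy) / dist
        scores[name] = w_align * align - w_dist * dist

    # Numerically stable softmax over the scores.
    top = max(scores.values())
    exps = {name: math.exp(s - top) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


if __name__ == "__main__":
    # Visitor in the centre of the room, facing the (0, 0) corner.
    probs = intent_probabilities((2.0, 2.0), math.atan2(-2.0, -2.0))
    for name, p in probs.items():
        print(f"{name}: {p:.2f}")
```

Returning probabilities rather than a single hard decision leaves room for the vague and fuzzy input described earlier: a downstream component could, for example, wait for more evidence when no sculpture clearly dominates.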

Realising such a Cognitive AI-based adaptive interaction would therefore be a fundamental improvement in terms of human-centred technologies and interaction quality, and would serve as a compelling example of how Cognitive AI can enhance user experience.

Links:

[L1] http://dada.compart-bremen.de/item/artwork/670

[L2] https://www.spotonmozart.at/en/projekt/mozart-contained/

[L3] https://www.spotonmozart.at/en/

References:

[1] Y. Zhu, et al., “Dark, beyond deep: A paradigm shift to cognitive AI with humanlike common sense”, in Engineering 6.3 (2020): 310-345. https://doi.org/10.1016/j.eng.2020.01.011

[2] K. C. Desouza, G. S. Dawson, and D. Chenok, “Designing, developing, and deploying artificial intelligence systems: Lessons from and for the public sector”, in Business Horizons 63.2 (2020): 205-213. https://doi.org/10.1016/j.bushor.2019.11.004

[3] M. Jeon, et al., “From rituals to magic: Interactive art and HCI of the past, present, and future”, in Int. Journal of Human-Computer Studies 131 (2019): 108-119. https://doi.org/10.1016/j.ijhcs.2019.06.005

Please contact:

Christian Thomay, Research Studios Austria FG, Austria