by Ioulietta Lazarou, Lampros Mpaltadoros, Fotis Kalaganis, Kostas Georgiadis, Spiros Nikolopoulos, Ioannis (Yiannis) Kompatsiaris (Centre for Research & Technology Hellas – Information Technologies Institute - CERTH-ITI)

Recent advancements in the fields of bio-signal processing, computer vision and wearables empower the independence of people with physical and cognitive disabilities.

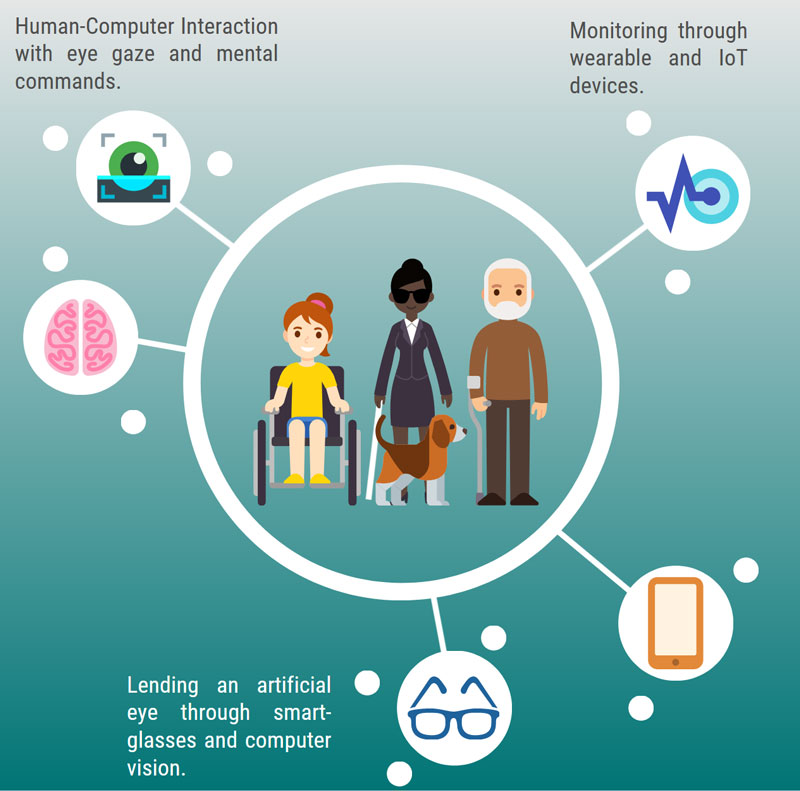

Activities of Daily Living (ADLs) rely on a very broad spectrum of physical and cognitive functions that must perform adequately to ensure an acceptable level of independence. People's independence can therefore be affected by different types of disabilities, which in turn may require different types of assistive technologies, such as those shown in Figure 1, to alleviate their negative effects. In this article, we describe how bio-signals have allowed people with neuromuscular disorders to operate a computer using their eyes and mind; how computer vision has enabled visually impaired people to do their grocery shopping, visit a public service office or enjoy a recreational activity; and, finally, how wearable devices have enabled reliable monitoring of elderly people and people with chronic illnesses, leading to more effective remote care and self-management of illness.

Figure 1: Brain computer interfaces, computer vision glasses, wearables and IoT devices for increasing the independence of people with physical and cognitive disabilities.

Loss of voluntary muscular control while cognitive function is preserved is a common symptom of neuromuscular diseases. It leads to a variety of functional deficits, including an impaired ability to operate software applications that require conventional interfaces such as a mouse, keyboard or touchscreen. As a result, the affected individuals are marginalised and unable to keep up with the rest of society in a digitised world. In our research project MAMEM [L1], we have helped people with neuromuscular disorders to reintegrate into society by enabling them to operate a computer using their eyes and mind. More specifically, we have produced a mature software system that supports the basic functionalities of human-computer interaction through eye-gaze and mental commands [1]. Although solutions for supporting people with disabilities have existed for some time, MAMEM's output is among the few that integrate eye-tracking with brain commands and are also designed for home use. The system was installed in the homes of 30 patients with neuromuscular disorders and was used autonomously for one month for social media interaction and other online activities. Our technical solution has also attracted the attention of relevant user communities and of individual users who asked to use the system to improve their communication and digital empowerment. Following their requests, five systems are now installed in the homes of patients with locked-in syndrome, enabling them to operate a computer through eye movements and mental commands. This helps them feel less marginalised and more integrated with the rest of society, which reflects very positively on their mental health.

The autonomy of visually impaired people, expressed as their ability to accomplish everyday tasks on their own when help from others is not available, is of paramount importance. Our goal in the research project e-Vision [L2] has been to leverage the latest advancements in computer vision to improve the autonomy of people with visual impairment at both a practical and an emotional level. The system developed for this purpose consists of a pair of eyeglasses with an integrated camera and a mobile application that encapsulates computer vision algorithms capable of supporting several daily living tasks of the visually impaired [2]. It is a context-aware solution built around three important day-to-day activities: visiting a supermarket, going for an outdoor walk, and visiting a public administration building to handle a case. e-Vision also caters for social inclusion by providing social context, and it enhances the overall experience through soundscapes that allow users to perceive selected points of interest in an immersive acoustic way. This emphasis on the social, rather than strictly practical, aspects of autonomy is what differentiates e-Vision from similar solutions designed for the visually impaired.

The aging of the global population is causing an upsurge in age-related ailments, primarily dementia and Alzheimer's disease, frailty, Parkinson's disease and cardiovascular disease, as well as a need for general eldercare and for active and healthy aging. This in turn creates a need for constant monitoring, assistance, intervention and support, placing a considerable financial and human burden on individuals and their caregivers. Interconnected sensing technology, such as IoT wearables and devices, offers a promising solution for objective, reliable and remote monitoring, assessment and support through ambient assisted living [3]. In our research project support2Live [L3], we have developed a platform based on the intelligent collection and interpretation of data from IoT devices to reliably monitor elderly people and people with chronic illnesses, leading to more effective, more economical and more accessible remote care with multiple social and economic benefits. The most significant contribution of our work is a longitudinal study in which wearables and IoT devices were installed in patients' homes before and after specialised interventions.

Links:

[L1] https://www.mamem.eu/

[L2] https://evision-project.gr/en/

[L3] https://www.ypostirizo-project.gr/

References:

[1] F. Kalaganis, et al.: “An error-aware gaze-based keyboard by means of a hybrid BCI system”, Scientific Reports 8, Article number: 13176, 2018.

[2] F. Kalaganis, et al.: “Lending an Artificial Eye: Beyond Evaluation of CV-Based Assistive Systems for Visually Impaired People”, HCII 2021, LNCS, vol. 12769, Springer, 2021. https://doi.org/10.1007/978-3-030-78095-1_28

[3] T.G. Stavropoulos, et al.: “IoT Wearable Sensors and Devices in Elderly Care: A Literature Review”, Sensors 20(10):2826, 2020. https://doi.org/10.3390/s20102826

Please contact:

Spiros Nikolopoulos

CERTH-ITI, Greece,

Ioannis (Yiannis) Kompatsiaris,

CERTH-ITI, Greece,