by Tiago Amorim, Daniel Schneider, Viet Yen Nguyen, Christoph Schmittner and Erwin Schoitsch

Cyber-Physical Systems (CPS) offer tremendous promise. Yet their breakthrough is stifled by deeply rooted challenges to assuring their combined safety and security. We present five major reasons why established engineering approaches need to be rethought.

All relevant safety standards assume that a system’s usage context is completely known and understood at development time. This assumption no longer holds for CPS. Their ability to integrate dynamically with third-party systems and to adapt to changing environments, forming evolving cyber-physical systems of systems (CPSoS), confronts safety engineers with far more unknowns and uncertainties. Moreover, because CPS are becoming increasingly open, a whole new dimension of security concerns arises: security vulnerabilities can become faults that lead to life-endangering safety hazards.

Despite this, there are no established methodologies (let alone standards) for co-engineering safety and security. In fact, the safety and security research communities have traditionally evolved separately, owing to their different roots in embedded systems and information systems, respectively. With CPSoS, this separation can no longer be upheld. There are five major hurdles to a healthy safety-security co-engineering practice, and the EMC² project investigates how they may be overcome.

Reconciliation points

Safety is commonly defined as the absence of unacceptable risks. These risks range from random hardware failures to systematic failures introduced during development. Security is the capacity of a system to withstand malicious attacks. These are intentional attempts to make the system behave in a way that it is not supposed to. Both safety and security contribute to the system’s dependability, each in its own way. The following issues, in particular, are intrinsically in conflict:

- Assumed User Intention: Safety deals with unintentional errors and mishaps, while security deals with human malice (i.e., attacks). Thus, safety can include the user in its protection concept, whereas security must distrust the user.

- Quantifying Risks: Safety practices use hazard probability to define the acceptable risk and the required safety integrity level of a system function, since errors and mishaps can, to a certain degree, be quantified statistically. In security, by contrast, the likelihood of an attack attempt on a system cannot be estimated in a meaningful way, not least because an attacker’s motivation may change over time.

- Protection Effort: Safety is always non-negotiable. Once an unacceptable risk has been identified, it must be reduced to an acceptable level, and the reduction must be demonstrated by a comprehensive and convincing argument. Security, in contrast, is traditionally a trade-off decision. Particularly in the information systems domain, where security issues are typically associated with some form of monetary loss, how much effort to invest in protection is largely a business decision.

- Temporal Protection Aspects: Safety is treated as a fixed property of a static system that, ideally, is never changed once deployed. Security requires constant vigilance through updates that fix newly discovered vulnerabilities or improve mechanisms (e.g., strengthening a cryptographic key). Security erodes over time as computational power increases, attack techniques advance, and new vulnerabilities are discovered; a system may even need a security update the day after it goes into production. Consequently, safety effort is concentrated mainly in the design and development phases, whereas security effort is spread across design, development, and operations and maintenance, the latter requiring the greatest effort.

- COTS Components: Safety-critical systems benefit from commercial off-the-shelf (COTS) components, because the design flaws and failure probabilities of such widely used and tested components are well known. From a security perspective, however, COTS components can be detrimental: their design is usually publicly available, and any vulnerability found in them can be exploited wherever the component is used.

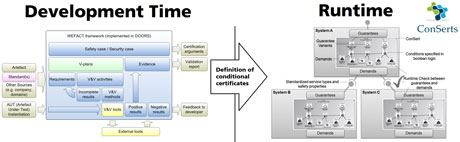

Figure 1: WEFACT addresses safety and security co-engineering at development time, and ConSerts addresses CPS certification at runtime. Both come together in the EMC² project.

EMC²: Safety and security co-engineered

Current research in work package 6 (WP6) [1] of the EMC² project (ARTEMIS Joint Undertaking, grant agreement n° 621429) aims to bridge the gap between safety and security assurance and certification for CPS. In EMC², we are examining overlaps, similarities, and contradictions between safety and security certification, for example between the ISO 26262 safety case and the security target of ISO/IEC 15408 (Common Criteria). Both aim to establish a certain level of trust in the safety or security of a system. Some parts are similar in intent: the hazard analysis and risk assessment of ISO 26262, which considers the effects of hazards in different driving situations, corresponds closely to the Common Criteria security problem definition with its description of threats.

There is also some overlap between a security target in which part of the security concept relies on security measures in the operational environment and a safety element out of context, whose final safety assessment depends on evaluating assumptions about the system context. Because one of the most prominent traits of CPS is their ability to integrate dynamically, EMC² also aims to develop corresponding runtime assurance methods. Formalized, modular conditional certificates can be composed and evaluated dynamically at the point of integration to determine which safety and security guarantees hold for the resulting system composition.
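To make the idea of composable conditional certificates more concrete, the sketch below models each component certificate as a set of guarantees, each of which holds only if all demands of at least one alternative are met, either by runtime evidence or by guarantees offered by other components. This is a deliberately simplified, hypothetical model loosely inspired by the ConSerts approach named in Figure 1, not the EMC² or ConSerts specification; the names `Guarantee`, `Certificate` and `evaluate`, and the brake-system example, are illustrative assumptions.

```python
"""Hypothetical, simplified evaluation of conditional certificates at integration time.

Loosely inspired by ConSerts; NOT the EMC2 or ConSerts specification.
"""
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Guarantee:
    """A safety or security property a component offers under conditions."""
    name: str
    # Each alternative is a set of demands. The guarantee holds if ALL demands
    # of at least ONE alternative are met (a simple OR-of-ANDs condition).
    # An empty alternative, frozenset(), makes the guarantee unconditional.
    alternatives: tuple[frozenset[str], ...]


@dataclass(frozen=True)
class Certificate:
    """A component's modular conditional certificate (hypothetical model)."""
    component: str
    guarantees: tuple[Guarantee, ...]


def evaluate(certs: list[Certificate], runtime_evidence: set[str]) -> set[str]:
    """Return the names of all guarantees that hold for the composed system.

    Starts from the runtime evidence and repeatedly adds every guarantee whose
    demands are already satisfied, until nothing changes (a least fixed point).
    Guarantees that depend only on each other in a cycle are never established,
    which keeps the evaluation conservative.
    """
    established = set(runtime_evidence)
    changed = True
    while changed:
        changed = False
        for cert in certs:
            for g in cert.guarantees:
                if g.name not in established and any(
                    demands <= established for demands in g.alternatives
                ):
                    established.add(g.name)
                    changed = True
    # Report only certificate guarantees, not the raw runtime evidence.
    return {g.name for cert in certs for g in cert.guarantees if g.name in established}


# Illustrative composition: a wheel-speed sensor, a gateway and a brake controller.
sensor = Certificate("wheel_sensor", (
    Guarantee("speed_signal_ok", (frozenset({"sensor_selftest_ok"}),)),
))
gateway = Certificate("gateway", (
    Guarantee("secure_channel", (frozenset({"tls_session_valid"}),)),
))
controller = Certificate("brake_controller", (
    # The full function needs a trustworthy signal AND a secure channel...
    Guarantee("full_brake_assist",
              (frozenset({"speed_signal_ok", "secure_channel"}),)),
    # ...while a degraded mode remains possible without the secure channel.
    Guarantee("degraded_brake_assist", (frozenset({"speed_signal_ok"}),)),
))
certs = [sensor, gateway, controller]

print(sorted(evaluate(certs, {"sensor_selftest_ok", "tls_session_valid"})))
# ['degraded_brake_assist', 'full_brake_assist', 'secure_channel', 'speed_signal_ok']
print(sorted(evaluate(certs, {"sensor_selftest_ok"})))
# ['degraded_brake_assist', 'speed_signal_ok']
```

The second call illustrates the intended effect: when the secure channel cannot be established at runtime, the composition still yields a valid but weaker guarantee instead of failing outright. Treating mutually dependent guarantees as unestablished is a conservative design choice made for this sketch only.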

This work has been partially funded by the European Union (ARTEMIS JU and ECSEL JU) under contract EMC² (GA n° 621429) and by the partners’ national programmes and funding authorities.

References:

[1] D. Schneider, E. Armengaud, E. Schoitsch: “Towards Trust Assurance and Certification in Cyber-Physical Systems”, in Proc. of the Workshop on Dependable Embedded and Cyber-physical Systems and Systems-of-Systems (DECSoS’14), Computer Safety, Reliability, and Security, Springer LNCS 8696, Springer International Publishing, pp. 180-191, 2014, ISBN 978-3-319-10556-7.

Please contact:

Tiago Amorim, Viet Yen Nguyen, Daniel Schneider

Fraunhofer IESE, Germany

Tel: +49 631 6800 3917

Email:

Christoph Schmittner, Erwin Schoitsch

Austrian Institute of Technology, Austria

Email: