by Thibaud Latour and Romain Martin

Computer-Assisted Testing (CAT) is attracting growing interest from professional and educational organizations, particularly since the dramatic emergence of the Internet as a privileged communication infrastructure. As with e-learning technology, the generalized use of computers has made possible a wide range of new assessment modalities and has widened the range of skills that can be assessed.

Beyond the dramatic simplification of logistics, CAT offers significant improvements over classical paper-and-pencil evaluation in traceability and automatic data processing, at both the test design and the result analysis stages. This is of particular importance where large-scale assessment is concerned. A computer platform also makes instant feedback to the subject and advanced adaptive testing techniques possible. In addition, CAT allows the efficient and economically viable large-scale measurement of skills that were out of reach of classical testing. Finally, beyond strict performance evaluation, analysing the strategies that subjects use to solve problems affords very valuable insights to both psychometricians and educational professionals. Effective self-assessment and formative testing are now widely available on Web-based platforms.

CAT has evolved significantly, from its first stages, when tests were direct transpositions of paper-and-pencil tests delivered on standalone machines, to the current use of interactive multimedia content delivered via the Web or through networked computers. During this evolution, new paradigms have appeared, enabling test standardization and adaptation to the subject's level of ability, among others. Significant research effort has gone into introducing chronometric and behavioural parameters into competency evaluation. More advanced testing situations and interaction modes have also been investigated, such as interactive simulations, collaborative tests, multimodal interfaces and complex procedures.
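Adaptation to the subject's level of ability can be illustrated with a minimal sketch. It assumes a Rasch (one-parameter logistic) model and a simple gradient-step ability update; neither is stated in this article as the method used by any particular platform, and all function names and difficulty values are hypothetical.

```python
import math

def p_correct(ability, difficulty):
    """Rasch model: probability that the subject answers the item correctly."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))

def next_item(ability, item_difficulties, administered):
    """Pick the unused item whose difficulty is closest to the ability estimate."""
    candidates = [i for i in range(len(item_difficulties)) if i not in administered]
    return min(candidates, key=lambda i: abs(item_difficulties[i] - ability))

def update_ability(ability, difficulty, correct, step=0.5):
    """One gradient step on the Rasch log-likelihood (observed minus expected)."""
    observed = 1.0 if correct else 0.0
    return ability + step * (observed - p_correct(ability, difficulty))

# Hypothetical item bank (difficulties on the same scale as ability).
difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]
ability = 0.0
administered = set()

# Simulated responses: two correct answers, then one incorrect answer.
for answer in (True, True, False):
    item = next_item(ability, difficulties, administered)
    administered.add(item)
    ability = update_ability(ability, difficulties[item], answer)
```

Each response moves the estimate towards the subject's apparent level, and the next item is chosen near that estimate, so the test concentrates on items that are neither trivial nor out of reach for the subject.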

However, even if the use of CAT significantly reduces the effort of test delivery and result exploitation, the production of items and tests, as well as the overall assessment process, may remain a potentially costly operation. This is particularly the case for large-scale standardized tests. We are therefore still facing a series of challenges regarding the management of the whole process, the creation of a critical mass of items, and the reusability and versatility of evaluation instruments, among others.

In addition, the consistent coverage of the numerous evaluation needs remains a crucial challenge for new generation platforms. The diversity of these needs can be structured following three main dimensions.

A chronological dimension: assessment instruments should be based on frameworks that show chronological continuity across different competency levels, thereby allowing users to adopt a lifelong learning perspective.

A steering level dimension: assessment usually takes place in a hierarchical structure, with different embedded steering levels going from individuals to entire organisational or international systems. The assessment frameworks should remain consistent across all levels.

A competency dimension: in a rapidly changing world, the range of skills that must be evaluated is also growing. Apart from certain core competencies (reading, mathematical, scientific and ICT literacy), attempts are now being made to create assessment instruments for specific vocational and important non-cognitive competency domains (eg social skills).

Given that most organizations are evolving and therefore have changing evaluation needs, the approach adopted so far for the development of computer-assisted tests (ie on a test-by-test basis focusing on a unique skill set) is no longer viable. Only the platform approach, where the focus is put on the management of the whole assessment process, allows the entire space of assessment needs to be consistently covered. In addition, this platform should rely on advanced technology that ensures adaptability, extensibility, distributivity and versatility.

With these considerations in mind, the University of Luxembourg and the CRP Henri Tudor have initiated an ambitious collaborative R&D programme funded by the Luxembourg Ministry of Culture, Higher Education, and Research. It aims to develop an open, versatile and generic Computer-Based Assessment (CBA) platform that covers the whole space of evaluation needs.

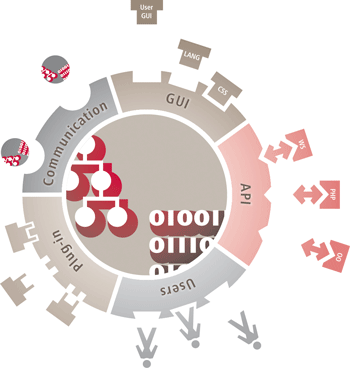

The TAO (French acronym for Testing Assisté par Ordinateur) platform consists of a series of interconnected modules dedicated to the management of subjects, groups, items, tests, planning and results in a peer-to-peer (P2P) network. Each module is a specialization of a more generic kernel application called Generis that was developed in the framework of the project. The specialization consists of adding specific models defining the domains of specialization, several plug-ins providing domain-dependent functionalities that rely on specific model properties, possibly external applications, and an optional specific graphical user interface.

Offering versatility and generality with respect to contexts of use requires a more abstract design of the platform and well-defined extension and specialization mechanisms. Hence, the platform enables users to create their own models of the various CBA domains while ensuring rich exploitation of the metadata produced in reference to these models. Semantic Web technology is particularly suited to this context. It has been used to manage both the CBA process and the user-made characterization of all the assessment process resources, in their respective domains. This layer is entirely controlled by the user and includes distributed ontology management services (creation, modification, instantiation, sharing of models, reference to distant models, query services on models and metadata, communication protocol etc) based on RDF (Resource Description Framework), RDFS (RDF Schema) and a subset of OWL (Web Ontology Language) standards.
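The idea of a user-defined model with metadata expressed against it can be sketched with plain subject-predicate-object triples. The `rdf:` and `rdfs:` terms below are standard RDF/RDFS vocabulary; everything under the `ex:` prefix, and the helper functions themselves, are hypothetical and do not describe the actual Generis API.

```python
# Triples are (subject, predicate, object) tuples. The "ex:" names are
# hypothetical examples of a user-made item model and one item described by it.
triples = {
    ("ex:Item", "rdf:type", "rdfs:Class"),
    ("ex:MultipleChoiceItem", "rdfs:subClassOf", "ex:Item"),
    ("ex:difficulty", "rdf:type", "rdf:Property"),
    ("ex:difficulty", "rdfs:domain", "ex:Item"),
    ("ex:item42", "rdf:type", "ex:MultipleChoiceItem"),
    ("ex:item42", "ex:difficulty", "0.7"),
}

def subclasses_of(cls, graph):
    """Return cls plus every class reachable from it through rdfs:subClassOf."""
    found, frontier = {cls}, [cls]
    while frontier:
        parent = frontier.pop()
        for s, p, o in graph:
            if p == "rdfs:subClassOf" and o == parent and s not in found:
                found.add(s)
                frontier.append(s)
    return found

def instances_of(cls, graph):
    """Query: all resources typed as cls or as one of its subclasses."""
    classes = subclasses_of(cls, graph)
    return sorted(s for s, p, o in graph if p == "rdf:type" and o in classes)

items = instances_of("ex:Item", triples)
```

Because the query follows `rdfs:subClassOf`, a resource typed with a user-created subclass is still found when asking for the more general class, which is what lets independently extended models remain queryable together.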

Such an architecture enables advanced result analysis functionalities. Indeed, rich correlations can be made between test execution results (scores and behaviours) and any user-defined metadata collected in the entire module network.
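As a rough illustration of such an analysis, the sketch below joins scores with one user-defined metadata property on the subject identifier and computes a Pearson correlation. The data are made up for illustration, and the dictionary layout and property meaning are assumptions, not the platform's actual data format.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

# Made-up test scores per subject, as a results module might hold them.
scores = {"s1": 12, "s2": 15, "s3": 9, "s4": 18}
# Made-up user-defined metadata per subject (e.g. weekly hours of computer use),
# as a subjects module might hold it.
hours = {"s1": 3, "s2": 5, "s3": 2, "s4": 4}

# Join the two sources on the subjects they have in common.
subjects = sorted(scores.keys() & hours.keys())
r = pearson([scores[s] for s in subjects], [hours[s] for s in subjects])
print(f"correlation over {len(subjects)} subjects: {r:.2f}")
```

The same join-then-correlate pattern applies to any metadata property, provided it is numeric and recorded for the same subjects as the results.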

Recently we began a very close collaboration on Computer-Based Assessment with the Deutsches Institut für Internationale Pädagogische Forschung, and new rich media have been developed to assess electronic-text reading literacy in the framework of the PISA 2009 survey. The platform has been successfully used for the last two years in the Luxembourg national school system monitoring programme. Contacts have been established with representatives of other European countries in order to identify opportunities to use TAO in similar programmes. The platform is also used as a research tool by the University of Bamberg in Germany. As a very general Web-based distributed ontology management tool, Generis has also been used in the FP6-PALETTE project.

The platform is currently in a working prototype stage and is still under development. It is made freely available in the framework of research collaborations.

Link:

http://www.tao.lu/

Please contact:

Thibaud Latour

CRP Henri Tudor (Centre for IT Innovation), Luxembourg

Tel: +352 425991 327

E-mail: thibaud.latour@tudor.lu

Romain Martin

University of Luxembourg (Educational Measurement and Applied Cognitive Science), Luxembourg

Tel: +352 466644 9369

E-mail: romain.martin@uni.lu