by Giuseppe Ghiani, Fabio Paternò, Carmen Santoro and Davide Spano

Recent technological advances have led to novel interactive software environments for edutainment, such as museum applications. These environments provide new interaction techniques for guiding visitors and improving their learning experience. We propose a multimodal, multi-device and location-aware museum guide, able to opportunistically exploit large screens when they are near the user. Various types of game are supported in addition to the museum and artwork descriptions, including games involving multiple visitors.

Museums represent an interesting domain of edutainment because of their increasing adoption of a rich variety of digital information and technological resources. This makes them a particularly suitable context in which to experiment with new interaction techniques for guiding visitors. However, such a wealth of information and devices could become a potential source of disorientation for users if not adequately supported.

In this paper, we discuss a solution that provides users with useful information by taking into account the current context of use (user preferences, position, device, etc.) and then deriving the information that might be judged relevant for them. In particular, we propose a multimodal, multi-device and location-aware museum guide. The guide is able to opportunistically exploit large screens when they are near the user. Various types of game are included in addition to descriptions of the museum and artworks, including games involving multiple visitors.

The mobile guide is equipped with an RFID reader, which allows the user's current position to be detected and information on nearby artworks to be received. One active RFID tag is associated with each artwork (or group of neighbouring artworks). Active tags are self-powered and are detectable from many metres away, meaning the user need not consciously direct the PDA towards an artwork's tag and can enjoy the visit quite naturally. By taking into account context-dependent information including the current user position, the history of user behaviour, and the device(s) at hand, more personalized and relevant information is provided to the user, resulting in a richer overall experience.

The environment supports a variety of individual games:

- the quiz is composed of multiple-choice questions

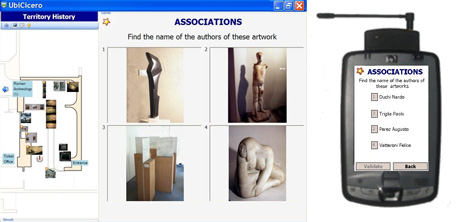

- in the associations game users must associate images with words, e.g. the author of an artwork, or the material of an artwork

- in the details game an enlargement of a small detail of an image is shown, and the player must guess which of the artwork images the detail belongs to

- the chronology game requires the user to order the images of the artworks shown chronologically according to the creation date

- in the hidden word game, the user must guess a word: the number of characters composing the word is shown to help the user

- in the memory game, the user memorizes as many details as possible from an image seen only for a short time, and then must answer a question on that image.
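As an illustration, the answer checks for two of these games could be sketched in Python as follows. The `Artwork` type, the function names and the simplification of the creation date to a single year are assumptions made for this example; they are not taken from the guide's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artwork:
    title: str
    year: int  # creation date, simplified to a year (assumption)


def chronology_correct(player_order: list[Artwork]) -> bool:
    """Return True if the player's ordering is non-decreasing by creation year."""
    years = [artwork.year for artwork in player_order]
    return all(earlier <= later for earlier, later in zip(years, years[1:]))


def hidden_word_hint(word: str) -> str:
    """Show one placeholder per character, revealing only the word's length."""
    return " ".join("_" for _ in word)
```

In this sketch, artworks with the same creation year may appear in either order and still count as correct.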

While individual games enable visitors to learn at their own pace, we also judged that group games would be useful for heightening social interaction and stimulating cooperation between visitors. A number of group games have been implemented to date. Users can be organized into teams, and the players in a team are aware of each other: coloured circle icons are placed beside representations of different artworks and are associated with the players in a team, so that a player can see which artworks have already been accessed by other players.
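One way to picture this team awareness is as a small record of which players have accessed which artworks. The sketch below is hypothetical: the class name `TeamAwareness`, its methods and the use of colour names as plain strings are assumptions for illustration only.

```python
from collections import defaultdict


class TeamAwareness:
    """Tracks which team members have accessed which artworks."""

    def __init__(self, player_colours: dict[str, str]) -> None:
        # Maps each player in the team to the colour of their circle icon.
        self.player_colours = dict(player_colours)
        self._visited = defaultdict(set)  # artwork -> set of players

    def record_access(self, player: str, artwork: str) -> None:
        if player not in self.player_colours:
            raise KeyError(f"unknown player: {player}")
        self._visited[artwork].add(player)

    def icons_for(self, artwork: str, viewer: str) -> list[str]:
        """Colours of the other players who have already accessed the artwork."""
        others = self._visited.get(artwork, set()) - {viewer}
        return sorted(self.player_colours[player] for player in others)
```

A client would call `icons_for` when drawing each artwork, so that the viewer sees one coloured circle per teammate who has been there.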

One of the main features of our solution is its support for visit and game applications through both mobile and stationary devices. The typical scenario involves users freely moving and interacting through the mobile device, which can also exploit a larger, shared stationary screen (which can be considered a situated display) when the users are nearby. Shared screens connected to stationary systems can improve the user experience, which is otherwise limited to individual interaction with a mobile device. They also stimulate social interaction and communication among visitors, even those who do not know each other. A larger shared screen extends the functionality of a mobile application, enabling individual games to be presented differently, social game representations to be shared, the positions of the other players in the group to be shown, and also a virtual pre-visit of the entire museum to be experienced.

Each shared display can be in different states:

- STANDALONE: the screen has its own input devices (keyboard and mouse) and can be used for a virtual visit of the museum. It can be used by visitors who do not have the PDA guide.

- SPLIT: one visitor has taken control of the display, which shows the name and group of the controller.

- SEARCH: the display shows the last artwork accessed by the players of a group and their scores.

- GAME: the display shows one individual game.

- SOCIAL GAME: the display shows the state of one social game.
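These states can be pictured as a small state machine. The sketch below is only an illustration: the article explicitly describes a single transition (the display enters the SPLIT state when a player connects through the PDA), so the rest of the transition table, and the names `SharedDisplay`, `connect`, `show` and `disconnect`, are assumptions.

```python
from enum import Enum


class DisplayState(Enum):
    STANDALONE = "standalone"
    SPLIT = "split"
    SEARCH = "search"
    GAME = "game"
    SOCIAL_GAME = "social game"


# Hypothetical transition table; only STANDALONE -> SPLIT is stated in the text.
_VIEWS = {DisplayState.SEARCH, DisplayState.GAME, DisplayState.SOCIAL_GAME}
ALLOWED = {
    DisplayState.STANDALONE: {DisplayState.SPLIT},
    DisplayState.SPLIT: _VIEWS | {DisplayState.STANDALONE},
    DisplayState.SEARCH: {DisplayState.SPLIT, DisplayState.STANDALONE},
    DisplayState.GAME: {DisplayState.SPLIT, DisplayState.STANDALONE},
    DisplayState.SOCIAL_GAME: {DisplayState.SPLIT, DisplayState.STANDALONE},
}


class SharedDisplay:
    def __init__(self) -> None:
        self.state = DisplayState.STANDALONE
        self.controller = None  # name of the visitor in control, if any

    def _move(self, target: DisplayState) -> None:
        if target not in ALLOWED[self.state]:
            raise ValueError(f"cannot go from {self.state.name} to {target.name}")
        self.state = target

    def connect(self, visitor: str) -> None:
        """A visitor takes control from the PDA: the display enters SPLIT."""
        self._move(DisplayState.SPLIT)
        self.controller = visitor

    def show(self, state: DisplayState) -> None:
        """Switch the display to another state, if the table allows it."""
        self._move(state)

    def disconnect(self) -> None:
        """The controller leaves: the display returns to STANDALONE."""
        self._move(DisplayState.STANDALONE)
        self.controller = None
```

Keeping the transitions in one table makes it easy to reject requests that do not fit the current state, such as starting a game on a display that nobody has connected to.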

Since a shared display has to go through several states, the structure of its layout and some parts of the interface remain unchanged in order to avoid disorientating users. This permanent part of the user interface provides information such as a map of the current section, the section's position in the museum, an explanation of the icons used to represent the artworks and the state of the shared enigma. In standalone mode, users can select from three kinds of views of the section map depending on whether icons and/or small photos are used to represent each artwork.

Exploiting the user model data from the connected PDA, the large-screen application generates an interest evaluation for each artwork in the selected room. The user may look up the ratings, which are expressed by LED bars on a scale of 0 to 5. When a user selects an artwork or a game, the layout of the screen interface changes, adapting its focus to magnify the corresponding panel and show the artwork details or the game interface.
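The article does not say how the interest evaluation is computed. Purely as an illustration, the sketch below assumes that the user model stores an interest score between 0 and 1 for each artwork attribute (such as author or material), averages the scores that apply to an artwork, and scales the result to the 0 to 5 range shown by the LED bars. The function names and the text rendering of the bar are hypothetical.

```python
def interest_rating(user_interest: dict[str, float],
                    artwork_attributes: list[str]) -> int:
    """Map a user's interest in an artwork's attributes to a 0-5 rating."""
    if not artwork_attributes:
        return 0
    # Attributes missing from the user model count as no interest (assumption).
    scores = [user_interest.get(attr, 0.0) for attr in artwork_attributes]
    mean = sum(scores) / len(scores)
    clamped = min(max(mean, 0.0), 1.0)
    return round(clamped * 5)


def led_bar(rating: int) -> str:
    """Render a rating as a five-segment text bar, e.g. 4 lit segments of 5."""
    return "#" * rating + "-" * (5 - rating)
```

Other weighting schemes would fit the same interface; the only property taken from the text is the 0 to 5 output scale.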

The screen changes its state to SPLIT when a player selects the connection through the PDA interface. In this case the large screen is used both to show additional information and to focus the attention of multiple users on a given game, thus exploiting the screen size. When a player is connected to the large screen, the screen's section map view is automatically changed to thumbnails, while the artwork types are shown on the PDA screen. The artwork presentation uses a higher-resolution image on the large display and adds descriptive information.

The game representation on the large screen is presented in such a way that it can be shared among visitors and used for discussions. In the distributed representation, the game answer choices are shown only on the PDA interface, while the question and higher-resolution images are shown on the larger screen (see Figure 1).
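The split between the two devices can be sketched as a function that decides what each one renders. The `QuizQuestion` type, the `distribute` function and the behaviour when no large screen is connected (the PDA shows everything, with a low-resolution image) are assumptions for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QuizQuestion:
    text: str
    choices: tuple[str, ...]
    image_low_res: str   # path or URL of the image shown on the PDA
    image_high_res: str  # path or URL of the image shown on the large screen


def distribute(question: QuizQuestion, connected: bool) -> dict[str, dict]:
    """Decide which parts of a game each device should display."""
    if not connected:
        # Assumed fallback: the whole game stays on the mobile device.
        return {
            "pda": {
                "question": question.text,
                "choices": list(question.choices),
                "image": question.image_low_res,
            },
            "large_screen": {},
        }
    # Distributed representation: answers stay private on the PDA, while
    # the question and the higher-resolution image are shared.
    return {
        "pda": {"choices": list(question.choices)},
        "large_screen": {
            "question": question.text,
            "image": question.image_high_res,
        },
    }
```

Keeping the answer choices on the PDA lets the connected player answer privately while bystanders can still follow and discuss the question.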

The player accessing the large screen can also locate other players. The RFID readers detect the visible tags and their signal strength, allowing the mobile application to locate the user and send the position to the large-screen device.
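A minimal sketch of this localization step is given below. It assumes the simplest possible rule, namely that the user is next to the artwork whose tag is received with the strongest signal; the actual algorithm is not described in the article. The function names, the tag identifiers and the message fields are hypothetical.

```python
from typing import Optional


def locate(readings: dict[str, float],
           tag_to_artwork: dict[str, str]) -> Optional[str]:
    """Return the artwork whose tag has the strongest signal, if any.

    `readings` maps tag identifiers to signal strength, where a larger
    value means a stronger signal (assumption). Unknown tags are ignored.
    """
    known = {tag: strength for tag, strength in readings.items()
             if tag in tag_to_artwork}
    if not known:
        return None
    strongest_tag = max(known, key=known.get)
    return tag_to_artwork[strongest_tag]


def position_message(player: str, artwork: Optional[str]) -> dict:
    """Build the update the mobile application would send to the large screen."""
    return {"player": player, "nearest_artwork": artwork}
```

For example, with readings of -70 and -55 for two known tags, the second tag is the stronger one and its artwork is reported as the player's position.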

Links:

HIIS Lab: http://giove.isti.cnr.it

or http://www.isti.cnr.it/ResearchUnits/Labs/hiis-lab/

http://giove.isti.cnr.it/cicero.html

Please contact:

Fabio Paternò

ISTI-CNR, Italy

E-mail: fabio.paterno@isti.cnr.it
