In 2004, the Software Improvement Group (SIG) introduced a new software monitoring service. At the time a recent spin-off of CWI with a handful of clients and a vision, SIG has, ten years later, transformed itself into a respected (and sometimes feared) specialist in software quality assessment that helps a wide variety of organizations manage their application portfolios, development projects and software suppliers.

Over the last ten years, a range of technological, organizational and infrastructure innovations have allowed SIG to grow to the point where its assessment service currently processes 27 million lines of code each week. In this article, we briefly discuss a few of those innovations.

Analysis tools

The software analysts at SIG are also software developers who create and continuously improve their own suite of software analysis tools. These tools are not only adept at picking apart source code; they can also easily be extended to support additional programming languages. To date, this strength has been exploited to support around 100 different languages, 50 of which are used on a continuous basis. The tools also operate autonomously and scale well: after an initial configuration, new batches of source code are analysed automatically and quickly, allowing the analysts to focus their attention on the quality anomalies that are found [1]. On average, across all system types, serious anomalies occur in approximately 15% of the analysed code.
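The event-detection technique SIG uses is described in [1]. The sketch below is not that method. It is a minimal, hypothetical illustration of the general idea of comparing consecutive code snapshots so that only significant changes reach an analyst. It assumes each snapshot is summarised as a dictionary of metric values. It flags any metric whose relative change exceeds a threshold. The `Snapshot` type, the metric names and the 10% default threshold are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    label: str                  # e.g. a hypothetical week identifier
    metrics: dict[str, float]   # hypothetical metric name -> measured value


def detect_events(snapshots: list[Snapshot], rel_threshold: float = 0.10):
    """Return (snapshot label, metric, old, new) for every metric whose
    relative change between consecutive snapshots exceeds rel_threshold.
    The 10% default is an illustrative assumption, not a SIG setting."""
    events = []
    for prev, curr in zip(snapshots, snapshots[1:]):
        for name, old in prev.metrics.items():
            new = curr.metrics.get(name)
            if new is None:
                continue  # metric not measured in the newer snapshot
            if old == 0:
                rel = float("inf") if new != 0 else 0.0
            else:
                rel = abs(new - old) / abs(old)
            if rel > rel_threshold:
                events.append((curr.label, name, old, new))
    return events


snaps = [
    Snapshot("week-1", {"loc": 10000, "duplication_pct": 4.0}),
    Snapshot("week-2", {"loc": 10300, "duplication_pct": 6.5}),
]
print(detect_events(snaps))  # only the duplication jump is reported
```

A fixed relative threshold keeps the sketch simple. A production system would more plausibly use per-metric or per-system thresholds.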

Evaluation models

Whilst all software systems differ (e.g., in age, technologies used, functionality and architecture), common patterns do exist between them, and these become apparent through ongoing, extensive analysis. SIG's analysts noticed such patterns in their software quality measurements and consolidated their experience into standardized evaluation models that operationalize various aspects of software quality as defined by ISO/IEC 25010, the international standard for software product quality. The first model focused on the maintainability of a system. First published in 2007, this model has since been refined, validated and calibrated against SIG's growing data warehouse [2]. Since 2009, the Technischer Überwachungs-Verein (TÜV) has used this model to certify software products. Recently, two applications used by the Dutch Ministry of Infrastructure (Rijkswaterstaat) to help maintain safe waterways were awarded 4-star quality certificates (out of a possible 5 stars) by TÜV. Similar models for software security, reliability, performance, testing and energy efficiency have recently become available and are continuously being refined.
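Evaluation models of this kind typically turn many unit-level measurements into a single rating. One way to do this, along the lines of the benchmarking approach in [2], is as follows. First, aggregate the measurements into a risk profile: the share of code falling into moderate, high and very-high risk categories. Then award the highest star level whose maximum allowed shares are not exceeded. The sketch below assumes McCabe complexity as the unit metric. The risk cutoffs (10, 20, 50) and the per-star thresholds are hypothetical placeholders, not SIG's calibrated values.

```python
# Hypothetical thresholds: maximum % of code allowed in the
# (moderate, high, very-high) risk categories for each star level.
# These are illustrative placeholders, NOT SIG's calibrated values.
THRESHOLDS = {
    5: (25.0, 0.0, 0.0),
    4: (30.0, 5.0, 0.0),
    3: (40.0, 10.0, 0.0),
    2: (50.0, 15.0, 5.0),
}


def risk_profile(units, cutoffs=(10, 20, 50)):
    """units: list of (lines_of_code, metric_value) per code unit.
    Returns the % of code in (moderate, high, very-high) risk, where a
    unit is moderate above cutoffs[0], high above cutoffs[1] and very
    high above cutoffs[2] (hypothetical cutoffs)."""
    total = sum(loc for loc, _ in units)
    if total == 0:
        return (0.0, 0.0, 0.0)
    buckets = [0, 0, 0]
    for loc, value in units:
        if value > cutoffs[2]:
            buckets[2] += loc
        elif value > cutoffs[1]:
            buckets[1] += loc
        elif value > cutoffs[0]:
            buckets[0] += loc
    return tuple(100.0 * b / total for b in buckets)


def star_rating(profile):
    """Return the highest star level whose limits the profile meets;
    a profile that meets none of them gets 1 star."""
    for stars in (5, 4, 3, 2):
        limits = THRESHOLDS[stars]
        if all(share <= limit for share, limit in zip(profile, limits)):
            return stars
    return 1


# (lines of code, cyclomatic complexity) for three hypothetical units
units = [(700, 4), (200, 15), (100, 30)]
profile = risk_profile(units)  # 20% moderate, 10% high, 0% very high
print(profile, star_rating(profile))
```

Because the thresholds are cumulative limits rather than averages, a small amount of very complex code can cap the rating even when most of the system is simple.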

Lab organization

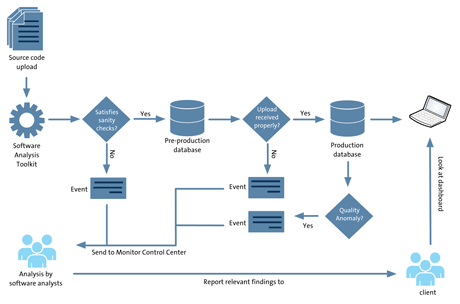

Scalable tools and models that can be applied effectively are extremely valuable, but realizing that value requires proper organization. SIG carries out its software analysis activities in an ISO/IEC 17025-accredited laboratory. This means that analysts receive proper training and follow standardized work procedures, so that measurement results are reliable and repeatable. When quality anomalies are detected, they are triaged in the Monitor Control Centre (Figure 1). Here, false positives are filtered out. Then, depending on the severity and/or type of finding, the analyst works with the client to determine an appropriate resolution. If the anomaly cannot be resolved, the issue is escalated to senior management. Currently, SIG monitors over 500 software systems and takes over 200 code snapshots each week. Together, these snapshots amount to over 27 million lines of code that SIG's analysts assess every week, in over 50 different languages ranging from COBOL and PL/SQL to Scala and Python.

Figure 1: The workflow that is executed whenever a snapshot of a system is received.
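The triage steps described above are: filter out false positives, resolve with the client, otherwise escalate. They can be sketched as a small decision procedure. This is a hypothetical illustration, not SIG's internal tooling. The `Anomaly` type, its fields, the 1 to 3 severity scale and the choice to handle the most severe anomalies first are all assumptions. The `resolve_with_client` callback stands in for the human step in which the analyst and the client agree on a resolution.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Outcome(Enum):
    FALSE_POSITIVE = "false positive"
    RESOLVED = "resolved with client"
    ESCALATED = "escalated to senior management"


@dataclass
class Anomaly:
    system: str
    kind: str
    severity: int                 # hypothetical scale: 1 (low) to 3 (high)
    false_positive: bool = False  # set during triage by the analyst


def triage(anomalies: list[Anomaly],
           resolve_with_client: Callable[[Anomaly], bool]):
    """Apply the triage steps to each anomaly, most severe first
    (the ordering is an assumption), and return (anomaly, outcome) pairs."""
    results = []
    for a in sorted(anomalies, key=lambda a: a.severity, reverse=True):
        if a.false_positive:
            outcome = Outcome.FALSE_POSITIVE
        elif resolve_with_client(a):
            outcome = Outcome.RESOLVED
        else:
            outcome = Outcome.ESCALATED
        results.append((a, outcome))
    return results


items = [
    Anomaly("billing", "duplication", 1),
    Anomaly("billing", "parse error", 2, false_positive=True),
    Anomaly("portal", "complexity", 3),
]
# Hypothetical policy: only the most severe findings resist resolution.
for anomaly, outcome in triage(items, lambda a: a.severity < 3):
    print(anomaly.system, anomaly.kind, "->", outcome.value)
```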

Adding value: beyond software quality

Building on these tools and models, SIG has developed an advisory practice. Working together with the analysts, advisors translate technical software quality findings into risks and recommendations. This allows SIG to provide cost comparisons (e.g., the cost of repairing quality defects versus not repairing them [3]) and project recommendations (e.g., suboptimal quality may be a reason to postpone deployment, cancel a project or give developers better working conditions). By placing the technical findings in this context, SIG delivers meaningful value to its clients' technical staff and decision-makers.

Ongoing R&D

SIG's growth so far, and its future path, depend on ongoing investment in R&D. SIG intends to keep working with universities and research institutes in the Netherlands (including Delft, Utrecht, Amsterdam, Tilburg, Nijmegen and Leiden) and beyond (e.g., the Fraunhofer Institute) to explore new techniques for controlling software quality. A number of questions remain open. For example: “How can the backlogs of agile projects be analysed to give executive sponsors confidence in what those agile teams are doing?”, “How can security vulnerabilities caused by dependencies on third-party libraries be detected?”, “How can development teams be given insight into the energy footprint of their products and ways to reduce it?” and “What are the quality aspects of the software-defined infrastructure that supports continuous integration and deployment?”. By exploring the answers to these and other questions, SIG aims to keep growing.

References:

[1] D. Bijlsma, J. P. Correia, J. Visser: “Automatic event detection for software product quality monitoring”, QUATIC 2012.

[2] R. Baggen et al.: “Standardized Code Quality Benchmarking for Improving Software Maintainability”, Software Quality Journal, 2011.

[3] A. Nugroho, T. Kuipers, J. Visser: “An empirical model of technical debt and interest”, MTD 2011.

Please contact:

Eric Bouwers, Per John or Joost Visser

Software Improvement Group,

The Netherlands

E-mail: