InterpreterGlove is an assistive tool for hearing- and speech-impaired people that enables them to communicate easily with the non-disabled community through sign language and hand gestures. Put on the glove, put the mobile phone in your pocket, use sign language and let it speak for you!

Many hearing- and speech-impaired people communicate in sign languages rather than spoken languages. Commonly, the only members of the community fluent in these languages are the affected individuals, their immediate friends and family, and selected professionals.

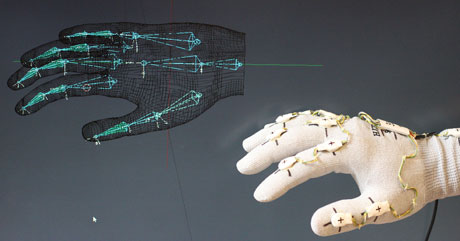

We have created an assistive tool, InterpreterGlove, that can reduce communication barriers for the hearing- and speech-impaired community. The tool consists of a hardware-software ecosystem that features a wearable motion-capturing glove and a software solution for hand gesture recognition and text- and language-processing. Using these elements it can operate as a simultaneous interpreter, reading the signed text of the glove wearer aloud (Figure 1).

Figure 1: The key components of InterpreterGlove are motion-capturing gloves and a mobile-supported software solution that recognises hand gesture and completes text- and language-processing tasks.

Before the glove can be used, it must be configured and adapted to the user’s hand. A series of hand poses is recorded, including bent, extended, crossed, closed and spread fingers, wrist positions and absolute hand orientation. This allows the glove to generate the correct gesture descriptor for any hand state. This personalization process not only enables the glove to translate every hand position into a digital handprint but also ensures that similar hand gestures will result in similar gesture descriptors across all users. InterpreterGlove is then ready for use. A built-in gesture alphabet, based on the international Dactyl sign language (also referred to as fingerspelling), is provided. This alphabet includes 26 one-handed signs, each representing a letter of the English alphabet. Users can further customize this feature by fine-tuning the pre-defined gestures. Thus, the glove is able to recognize and read aloud any fingerspelled word.
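One way to picture how calibration can make descriptors comparable across users is per-user range normalisation. The following is only an illustrative sketch, not the project's actual method: the `Calibration` class, the channel layout and the raw values are all assumptions made for the example. Each sensor channel is rescaled to the range observed for that user during the calibration poses, so "half bent" maps to roughly the same value for every hand.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Calibration:
    """Per-user range of each sensor channel, learned from calibration poses.

    Hypothetical helper: the real glove's calibration data is not described
    in this article.
    """
    lows: List[float]
    highs: List[float]

    @classmethod
    def from_poses(cls, poses: Sequence[Sequence[float]]) -> "Calibration":
        # Each pose holds one raw reading per channel (assumed layout).
        channels = list(zip(*poses))
        return cls([min(c) for c in channels], [max(c) for c in channels])

    def normalise(self, raw: Sequence[float]) -> List[float]:
        # Map each channel to [0, 1] relative to this user's own range,
        # clamping readings that fall outside the calibrated range.
        out = []
        for value, lo, hi in zip(raw, self.lows, self.highs):
            span = hi - lo
            scaled = 0.5 if span == 0 else (value - lo) / span
            out.append(min(1.0, max(0.0, scaled)))
        return out


# Two users with different raw ranges (invented numbers).
user_a = Calibration.from_poses([[10.0, 100.0], [90.0, 300.0]])
user_b = Calibration.from_poses([[0.0, 50.0], [40.0, 150.0]])
print(user_a.normalise([50.0, 200.0]))
print(user_b.normalise([20.0, 100.0]))
```

Both calls describe a hand that is halfway through each channel's range, so under these assumptions the two users end up with the same normalised values even though their raw readings differ.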

Feedback suggested that fingerspelling long sentences during a conversation could become cumbersome. However, the customization capabilities allow the user to eliminate this inconvenience. Users can define their own gesture alphabet by adding new, customised gestures and they also have the option of assigning words, expressions and even full sentences to a single hand gesture. For example, “Good morning”, “Thank you” or “I am deaf, please speak slowly” can be delivered with a single gesture.
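Assigning whole phrases to single gestures can be thought of as a nearest-template lookup. The sketch below is an assumption-laden illustration rather than the application's real data model: the five-value descriptor (one normalised flexion value per finger), the `VOCABULARY` table and the `lookup` function are all hypothetical.

```python
import math
from typing import Optional, Sequence

# Hypothetical user vocabulary: each entry pairs a stored gesture template
# (assumed to be five normalised finger-flexion values) with its text.
VOCABULARY = [
    ((0.0, 0.0, 0.0, 0.0, 0.0), "Good morning"),
    ((1.0, 1.0, 1.0, 1.0, 1.0), "Thank you"),
    ((0.0, 1.0, 1.0, 1.0, 0.0), "I am deaf, please speak slowly"),
]


def lookup(descriptor: Sequence[float], max_distance: float = 0.5) -> Optional[str]:
    """Return the text of the closest stored gesture, or None if none is close."""
    best_text, best_dist = None, max_distance
    for template, text in VOCABULARY:
        dist = math.dist(template, descriptor)  # Euclidean distance
        if dist <= best_dist:
            best_text, best_dist = text, dist
    return best_text


# A slightly imprecise version of the third gesture still matches it.
print(lookup((0.1, 0.9, 1.0, 0.95, 0.0)))
# A hand state far from every template yields no phrase.
print(lookup((0.5, 0.5, 0.5, 0.5, 0.5)))
```

The distance threshold is the design choice that matters here: too small and natural signing variation causes misses, too large and unrelated hand states trigger phrases.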

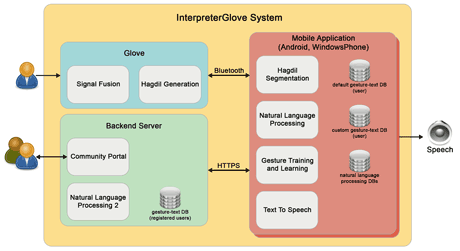

Figure 2: The building blocks of the InterpreterGlove ecosystem.

The main building blocks of the InterpreterGlove ecosystem are the glove, the mobile application and a backend server that supports the value-added services (Figure 2). The glove’s prototype is made of a breathable elastic material and the electronic parts and wiring are ergonomically designed to ensure seamless daily use. We used data from twelve ‘9 DoF (Degrees of Freedom)’ integrated motion-tracking sensors to calculate the absolute position of the hand and to determine the joints’ deflections in 3D. The glove creates a digital copy of the hand, which is encoded in our custom gesture descriptor. The glove connects to the user’s cell phone and transmits these gesture descriptors via a Bluetooth serial interface to the mobile application, where they are processed by the high-level signal- and natural-language processing algorithms.

Based on the biomechanical characteristics and kinematics of the human hand [1], we defined the semantics of Hagdil, the gesture descriptor. We use this descriptor to communicate the users’ gestures to the mobile device (Figure 3). Every second, 30 Hagdil descriptors are generated by the glove and transmitted to the mobile application.

Figure 3: Hand gestures are transmitted to the mobile application using Hagdil gesture descriptors.

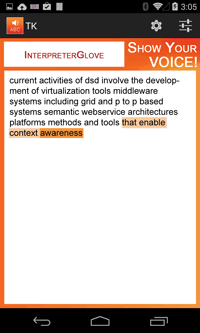

Figure 4: Two algorithms are applied to complete the segmentation and natural language processing components necessary for transforming hand gestures into understandable text. These are both integrated into the mobile application.

Two types of algorithm are applied to the generated Hagdil descriptor stream to transform it into understandable text (Figure 4). First, segmentation generates raw text by finding the best gesture descriptor candidates in the stream. This step requires a signal processing algorithm. In our evaluation, the sliding-window and dynamic-kinematics-based solutions produced the best results, and consequently these have been used in our prototype. The raw text may contain spelling errors caused by the user’s inaccuracy and natural signing variations. To address this issue, a second, auto-correction algorithm processes the raw text and transforms it into understandable words and sentences. This algorithm is based on a customised natural language processing solution that incorporates 1- and 2-gram database searches and confusion-matrix-based weighting. The corrected output can then be read aloud by the speech synthesizer. Both algorithms are integrated into the mobile-based software application.
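The two stages described above can be illustrated with a small sketch. It is a simplified stand-in, not the prototype's algorithms: it assumes every descriptor has already been matched to a letter label (or `None` when no letter is recognised), it uses only a sliding-window majority vote for segmentation, and the correction step uses 1-gram counts with substitution-only confusion weights (no 2-grams). The names `segment`, `correct`, `UNIGRAMS` and `CONFUSION`, and all numbers, are invented for the example.

```python
import math
from collections import Counter, deque

# Toy language data, invented for illustration only.
UNIGRAMS = {"hello": 50, "help": 30, "hills": 5}   # word counts
CONFUSION = {("e", "a"): 0.2, ("l", "i"): 0.1}     # P(observed | intended)


def segment(labels, window=9, min_share=0.8):
    """Emit a letter when it dominates the sliding window of recent frames."""
    recent = deque(maxlen=window)
    current = None  # letter currently being held by the signer
    letters = []
    for label in labels:
        recent.append(label)
        # Release the held letter once it has left the window entirely,
        # so that a repeated letter can be emitted again after a pause.
        if current is not None and recent.count(current) == 0:
            current = None
        if len(recent) < window:
            continue
        top, count = Counter(recent).most_common(1)[0]
        if top is not None and top != current and count / window >= min_share:
            letters.append(top)
            current = top
    return "".join(letters)


def channel(intended, observed):
    """Probability of seeing `observed` when the signer meant `intended`."""
    if intended == observed:
        return 0.9
    return CONFUSION.get((intended, observed), 0.001)


def correct(raw):
    """Pick the same-length dictionary word with the best combined score."""
    total = sum(UNIGRAMS.values())

    def score(word):
        s = math.log(UNIGRAMS[word] / total)
        for intended, observed in zip(word, raw):
            s += math.log(channel(intended, observed))
        return s

    candidates = [w for w in UNIGRAMS if len(w) == len(raw)]
    return max(candidates, key=score) if candidates else raw


# Frames for "h", a short transition with no recognised letter, then "i".
frames = ["h"] * 12 + [None] * 3 + ["i"] * 12
print(segment(frames))
print(correct("hallo"))
```

At 30 descriptors per second, the assumed window of 9 frames corresponds to 0.3 s of signing, so a letter must be held for roughly that long before it is emitted. In the correction step, "hallo" is mapped to "hello" because the invented confusion table makes an "a" read in place of "e" far more likely than the substitutions any other candidate would require.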

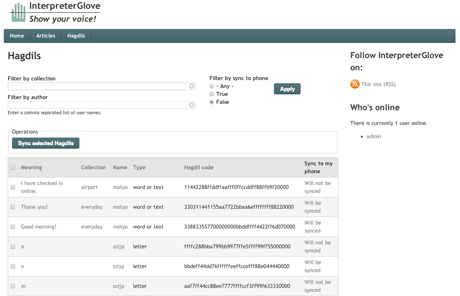

Our backend server operates as the central node for value-added services. It offers a community portal that facilitates gesture sharing between users (Figure 5). It also supports higher-level language processing capabilities than available from the offline text processing built into the mobile application.

Figure 5: The InterpreterGlove portal allows users to share their customised gestures.

Throughout the whole project, we worked closely with the deaf and blind community to ensure we accurately captured their needs, and we drew on their expertise to ensure the prototypes of InterpreterGlove were properly evaluated. Their feedback deeply influenced our work and achievements. We hope that this device will improve and expand communication opportunities for hearing- and speech-impaired people and thus enhance their social integration into the wider community. It may also boost their employment possibilities.

Although the tool was originally targeted at the needs of hearing-impaired people, we have realised that it has considerable potential for many others, for example, those with a speech impairment or physical disability, or those being rehabilitated following a stroke. We plan to address additional, targeted needs in future projects. Presently, two major areas have been identified for improvement that may have a large impact on the application-level possibilities of this complex system. Integrating the capability to detect dynamics, i.e., to perceive the direction and speed of finger and hand movements, opens up new interaction possibilities. Expanding the coverage of motion capture by including additional body parts opens the door to more complex application scenarios.

The InterpreterGlove (“Jelnyelvi tolmácskesztyű fejlesztése” KMR_12-1-2012-0024) project was a collaborative effort between MTA SZTAKI and Euronet Magyarország Informatikai Zrt. that ran between 2012 and 2014. The project was supported by the Hungarian Government, managed by the National Development Agency and financed by the Research and Technology Innovation Fund.

Links:

http://dsd.sztaki.hu/projects/TolmacsKesztyu

Dactyl fingerspelling: http://www.lifeprint.com/ASL101/fingerspelling/index.htm

Reference:

[1] C. L. Taylor, R. J. Schwarz: “The Anatomy and Mechanics of the Human Hand”, Artificial Limbs, 2(2):22-35, 1955.

Please contact:

Péter Mátételki or László Kovács, SZTAKI, Hungary

E-mail: {peter.matetelki, laszlo.kovacs}@sztaki.mta.hu