by Oliver Zendel, Wolfgang Herzner and Markus Murschitz

VITRO is a methodology and tool set for testing computer vision (CV) solutions with measurable coverage of critical visual effects such as shadows, reflections, low contrast, or mutual object occlusion. Such “criticalities” can cause a CV solution to misinterpret observed scenes and thus reduce its robustness with respect to a given application.

Usually, large numbers of recorded images are used for testing in order to cover as many of these “criticalities” as possible. This approach has several problems:

- Even with a very large set of recorded images, there is no guarantee that all criticalities relevant to the target application are covered. Hence, this approach is unsuitable for certification.

- Recording large numbers of images is expensive. In addition, some situations cannot be staged in reality for reasons of safety or effort.

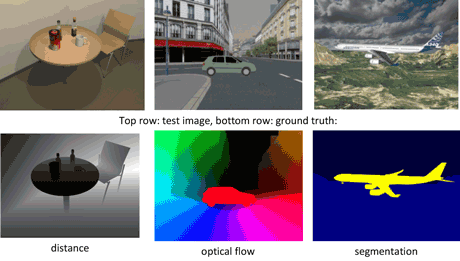

- For evaluating test outputs, the expected results (called “ground truth” or GT, see Figure 1) are needed. Usually these are generated manually, which is expensive and error prone.

Figure 1: Examples of test images and ground truths generated by VITRO

A number of test data sets are publicly available, but most are not dedicated to specific applications, which limits their utility for assessing a computer vision solution with respect to a target application. Overall, recorded test data do not provide a satisfactory solution.

Approach

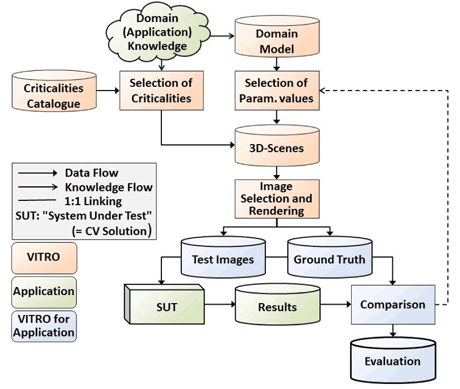

To address this situation, the AIT Austrian Institute of Technology is developing VITRO, a model-based test-case generation methodology and tool set that produces test data with known coverage of the typical and critical scenes of a specific application. Development started in the ARTEMIS project R3-COP (Resilient Reasoning Robotic Co-operating Systems). Figure 2 gives a schematic overview of the VITRO components.

Figure 2: VITRO – schematic application process.

Modelling: The “domain model” describes the objects that can occur in rendered scenes (geometry, surface appearance, etc.), together with the relationships between them and the constraints imposed on them by the application (e.g. on their size and relative positioning). It also contains information about background, illumination, climatic conditions, and cameras, i.e. the sensors used. For certain families of objects, such as clouds, generators are available or can be developed on demand.
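To make the idea of a domain model more concrete, here is a minimal sketch of how objects, parameter ranges and application constraints might be represented. The class names, fields, parameter names and the example constraint are all hypothetical illustrations, not VITRO's actual model format.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Range = Tuple[float, float]          # (minimum, maximum) of a scene parameter
Scene = Dict[str, float]             # one concrete assignment of all parameters


@dataclass
class ObjectModel:
    """An object type that may appear in rendered scenes (hypothetical schema)."""
    name: str
    mesh_file: str                   # geometry, e.g. a mesh file (illustrative)
    material: str                    # surface appearance (illustrative)
    size_m: Range                    # allowed size range in metres


@dataclass
class DomainModel:
    """Objects, scene parameters and application constraints (hypothetical schema)."""
    objects: List[ObjectModel]
    parameters: Dict[str, Range]     # these ranges span the parameter space
    constraints: List[Callable[[Scene], bool]] = field(default_factory=list)

    def is_valid(self, scene: Scene) -> bool:
        """True if every parameter lies in its range and all constraints hold."""
        in_range = all(lo <= scene[name] <= hi
                       for name, (lo, hi) in self.parameters.items())
        return in_range and all(check(scene) for check in self.constraints)


# Example: a box seen by a service robot's camera under daylight.
model = DomainModel(
    objects=[ObjectModel("box", "box.obj", "cardboard", (0.1, 0.4))],
    parameters={
        "box_distance_m": (0.5, 3.0),
        "box_yaw_deg": (0.0, 360.0),
        "sun_elevation_deg": (5.0, 90.0),
        "camera_height_m": (0.3, 1.5),
    },
    # Relational constraint: camera height <= box distance, i.e. the
    # downward viewing angle onto the box stays at or below 45 degrees.
    constraints=[lambda s: s["camera_height_m"] <= s["box_distance_m"]],
)
```

Keeping constraints as plain predicates over a scene lets the same model both describe the parameter space and reject invalid combinations during scene generation.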

Criticalities: A catalogue comprising over one thousand entries has been created by applying the risk analysis method HAZOP (Hazard and Operability Study) to computer vision. This analysis considered light sources, media (e.g. atmospheric phenomena), objects and their interactions, and observer effects. The latter include artifacts of the optics (e.g. aberration), the electronics (e.g. noise), and the software. Only the catalogue entries relevant to the application are selected for the actual testing.
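HAZOP systematically combines guide words (such as “no”, “more”, “less”) with the parameters of each part of a system to find possible deviations. The following sketch shows only that combinatorial step and the subsequent selection of application-relevant entries. The locations, parameters and guide-word subset are heavily abridged, hypothetical inputs, not the actual VITRO catalogue; in a real HAZOP, an expert interprets each combination and discards meaningless ones.

```python
from itertools import product
from typing import Dict, Iterable, List, Set, Tuple

Entry = Tuple[str, str, str]   # (location, parameter, guide word)

# Heavily abridged, illustrative inputs; not the actual VITRO catalogue.
LOCATIONS: Dict[str, List[str]] = {
    "light source": ["intensity", "position"],
    "medium": ["transparency"],
    "object": ["reflectance", "occlusion"],
    "observer": ["focus", "noise"],
}
# A subset of the classic HAZOP guide words.
GUIDE_WORDS = ["no", "more", "less", "reverse", "other than"]


def candidate_deviations(locations: Dict[str, List[str]],
                         guide_words: Iterable[str]) -> List[Entry]:
    """Combine every parameter of every location with every guide word."""
    words = list(guide_words)
    return [(loc, param, word)
            for loc, params in locations.items()
            for param, word in product(params, words)]


def select_relevant(catalogue: List[Entry], relevant_locations: Set[str],
                    excluded: Set[Entry]) -> List[Entry]:
    """Keep entries for application-relevant locations, minus excluded ones."""
    return [e for e in catalogue
            if e[0] in relevant_locations and e not in excluded]


catalogue = candidate_deviations(LOCATIONS, GUIDE_WORDS)
# Purely illustrative selection for some hypothetical application.
selected = select_relevant(catalogue, {"light source", "object"},
                           excluded={("light source", "position", "reverse")})
```

With 7 parameters and 5 guide words, this yields 35 candidate entries, of which 19 survive the illustrative selection.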

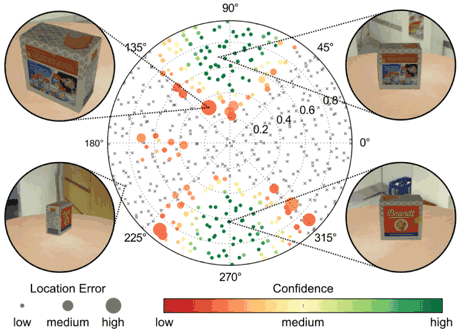

Scene generation: The parameters defined in the domain model (e.g. object positions) span a parameter space. This space is sampled using so-called “low-discrepancy” sequences, which cover it much more uniformly than random sampling. Criticalities are either enforced through additional constraints, or their occurrence in the generated scenes is checked. Specific scenarios can be defined for generating characteristic curves or diagrams (see Figure 3).
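A common low-discrepancy sequence is the Halton sequence, which uses the radical inverse of the sample index in a different prime base for each dimension. The sketch below maps Halton points onto the parameter ranges and keeps only scenes that satisfy a list of constraint predicates. Whether VITRO uses Halton or another sequence is not stated in the text above; the choice here, the function names and the `skip` parameter are assumptions for illustration.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Scene = Dict[str, float]
PRIMES = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]   # one prime base per dimension


def radical_inverse(index: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-`base` digits of index."""
    result, scale = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * scale
        scale /= base
    return result


def halton_scenes(parameters: Dict[str, Tuple[float, float]], n: int,
                  constraints: Sequence[Callable[[Scene], bool]] = (),
                  skip: int = 1) -> List[Scene]:
    """Map the Halton points with indices skip .. skip+n-1 onto the parameter
    ranges, keeping only scenes that satisfy all constraints."""
    names = sorted(parameters)               # fixed dimension order
    if len(names) > len(PRIMES):
        raise ValueError("too many parameters for the prime table")
    scenes = []
    for i in range(skip, skip + n):          # skip=1 drops the all-zero point
        scene = {}
        for name, base in zip(names, PRIMES):
            lo, hi = parameters[name]
            scene[name] = lo + (hi - lo) * radical_inverse(i, base)
        if all(check(scene) for check in constraints):
            scenes.append(scene)
    return scenes
```

For example, `halton_scenes({"a": (0.0, 1.0), "b": (0.0, 3.0)}, 3)` starts with the points (0.5, 1.0) and (0.25, 2.0). For many dimensions, plain Halton points become correlated; scrambled Halton or Sobol sequences (e.g. from `scipy.stats.qmc`) are usually preferred there.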

Figure 3: Example of test results visualization.

Test data selection and generation: The previous step can yield redundant test images that are very similar to others. Therefore, test image candidates are characterized by properties derived from their underlying scenes, e.g. the visible fraction of certain objects. These properties are used to group the candidates and select representatives, which are then rendered; finally, GT is generated for them.
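One simple way to group candidates and pick representatives is to bin their property vectors on a regular grid and keep, per bin, the candidate closest to the bin's mean. This is a hypothetical stand-in for whatever grouping VITRO actually uses; the property vectors (e.g. visible fraction of the target object, fraction of it in shadow) and `bin_width` are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def select_representatives(candidates: Dict[str, Sequence[float]],
                           bin_width: float) -> List[str]:
    """Group candidates whose property vectors fall into the same grid cell and
    keep, per cell, the candidate closest to the cell's mean property vector."""
    groups: Dict[Tuple[int, ...], List[str]] = defaultdict(list)
    for cid, props in candidates.items():
        groups[tuple(int(p // bin_width) for p in props)].append(cid)

    chosen = []
    for key in sorted(groups):
        members = groups[key]
        dims = len(candidates[members[0]])
        mean = [sum(candidates[m][d] for m in members) / len(members)
                for d in range(dims)]

        def sq_dist(m: str) -> float:
            return sum((candidates[m][d] - mean[d]) ** 2 for d in range(dims))

        # ties are broken by candidate ID for reproducibility
        chosen.append(min(members, key=lambda m: (sq_dist(m), m)))
    return chosen


# Hypothetical properties: (visible fraction, shadowed fraction) of the target.
cands = {"img0": (0.10, 0.0), "img1": (0.12, 0.0),
         "img2": (0.14, 0.0), "img3": (0.90, 1.0)}
reps = select_representatives(cands, 0.25)   # only these would be rendered
```

Grid binning is easy to reason about but can split near-identical candidates across a cell border; a clustering method such as k-means is a common alternative.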

Application: Generated test data can be applied to existing CV solutions to assess their robustness with respect to the modelled application. VITRO can also be used during development (e.g. in test-driven development). In this case, simple scenes are generated first; once these are processed robustly, increasingly challenging test data follow iteratively. Finally, adaptive and learning approaches can also be trained and tested.
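The iterative, test-driven use described above can be sketched as a loop over test sets of increasing difficulty that stops at the first level the CV solution does not yet handle robustly. The difficulty levels, the `run_test` callback and the 95 % pass-rate threshold are hypothetical choices for this sketch.

```python
from typing import Callable, Dict, List


def highest_robust_level(levels: Dict[int, List[str]],
                         run_test: Callable[[str], bool],
                         min_pass_rate: float = 0.95) -> int:
    """Run test sets in order of increasing difficulty and return the hardest
    level whose pass rate reaches min_pass_rate; stop at the first level that
    does not. Returns -1 if even the easiest level fails."""
    robust = -1
    for level in sorted(levels):
        cases = levels[level]
        if not cases:
            continue                       # nothing generated for this level yet
        pass_rate = sum(run_test(c) for c in cases) / len(cases)
        if pass_rate < min_pass_rate:
            break                          # improve the CV solution before moving on
        robust = level
    return robust
```

For example, with levels `{0: ["a", "b"], 1: ["c", "d"], 2: ["e"]}` and a solution that fails only case `"d"`, the result is `0`, so development would focus on level 1 next.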

An Application Example

Figure 3 shows one of the possible visualizations of test results. Here, the ability of a home service robot to locate a particular box was tested as a function of object distance and orientation. Each dot represents a test case. Coloured dots denote successful detections, with the colour corresponding to the algorithm’s own confidence measure and the size representing the location error against GT. Grey dots denote test cases without a detection. From this test run and its visualization, we can conclude that the confidence measure is good (there are no large green dots), but the object is detected in only a few orientations.
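The two conclusions drawn from Figure 3 can also be checked numerically. The sketch below flags “overconfident” detections (high confidence but large location error, i.e. large green dots, assuming green encodes high confidence) and computes the detection rate per orientation bin. The record fields, thresholds and bin width are hypothetical and not part of VITRO's evaluation output.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CaseResult:
    distance_m: float
    yaw_deg: float
    confidence: Optional[float]   # None: no detection (grey dot)
    error_m: Optional[float]      # location error against GT; None if not detected


def overconfident(results: List[CaseResult], min_conf: float = 0.8,
                  max_error_m: float = 0.05) -> List[CaseResult]:
    """Detections with high confidence but a large location error."""
    return [r for r in results
            if r.confidence is not None and r.error_m is not None
            and r.confidence >= min_conf and r.error_m > max_error_m]


def detection_rate_by_yaw(results: List[CaseResult],
                          bin_deg: float = 45.0) -> Dict[float, float]:
    """Fraction of test cases with a detection, keyed by bin start in degrees."""
    hits: Dict[int, int] = defaultdict(int)
    totals: Dict[int, int] = defaultdict(int)
    for r in results:
        b = int((r.yaw_deg % 360.0) // bin_deg)
        totals[b] += 1
        if r.confidence is not None:
            hits[b] += 1
    return {b * bin_deg: hits[b] / totals[b] for b in sorted(totals)}
```

An empty `overconfident(...)` list corresponds to “no large green dots”; low values in `detection_rate_by_yaw(...)` reveal the orientations in which the object is missed.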

Conclusion

VITRO provides test data sets tailored to specific applications, allowing highly informative statements about the robustness of the user’s computer vision solution. Because they are generated from models, the data are intrinsically consistent and can be evaluated precisely. Using VITRO provides the following benefits:

- verifiable coverage of typical and critical situations

- automatic test data generation and test evaluation

- strongly reduced recording effort for real test data

- no manual definition of expected results

- testing of dangerous situations without risks

- applicable even during development

- support for adaptive/learning computer vision solutions

- results usable for future certification.

Link: http://www.ait.ac.at/vitro/


Please contact:

Wolfgang Herzner; AIT Austrian Institute of Technology GmbH/ AARIT

E-mail: