by Harald Sack and Margret Plank

In addition to specialized literature, 3D objects and research data, the German National Library of Science and Technology also collects scientific video clips, which will now be opened up via semantic media analysis and published on the web as Linked Open Data.

The German National Library of Science and Technology (TIB) is one of the largest specialized libraries worldwide. The TIB's task is to comprehensively acquire and archive literature from around the world pertaining to all areas of engineering, as well as architecture, chemistry, information technology, mathematics and physics. The TIB's information portal GetInfo provides access to more than 150 million datasets from specialized databases, publishers and library catalogues. The Competence Centre for non-textual Materials at the TIB aims to make non-textual material, such as audiovisual media, 3D objects and research data, easier to access and use. To this end, it develops tools and infrastructure that actively support users in their scientific work processes, enabling non-textual objects to be easily published, found and kept available in the long term.

TIB collects digital audiovisual media (AV-media) in the form of computer visualizations, explanatory images, simulations, experiments, interviews and recordings of lectures and conferences. TIB also holds a historical film collection of almost 11,500 research films, university teaching films and documentaries, some of which date back to the 1910s. Given the rapidly increasing volume of AV-media and the need to index even individual film sequences and media fragments, intellectual (“manual”) indexing is too expensive: efficient, automated, content-based metadata extraction is required. In cooperation with the Hasso-Plattner-Institute for IT Systems Engineering (HPI), TIB has developed the AV-Portal, a web-based platform for its audiovisual media that combines state-of-the-art multimedia analysis with semantic analysis and retrieval based on Linked Open Data.

The processing workflow of the AV-Portal starts with media ingest, where the user can provide additional authoritative (textual) metadata. Structural analysis based on shot detection is followed by the extraction of representative keyframes for a visual index, optical character recognition, automated speech-to-text transcription, and visual concept detection. Visual concept detection classifies video image content according to predefined, context-related visual categories, for example “landscape”, “drawing” or “animation” [1]. All metadata are then processed by linguistic and subsequent semantic analysis, i.e., named entities are identified, disambiguated and mapped to an authoritative knowledge base. This knowledge base consists of those parts of the Integrated German Authority Files that are relevant to TIB's subject areas, which are available via the Linked Data Services of the German National Library.
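The article does not specify which shot detection method the AV-Portal uses. As a minimal illustration only, the sketch below implements a common baseline: it compares normalized color histograms of consecutive frames, starts a new shot wherever their L1 distance exceeds a threshold, and takes the middle frame of each shot as its keyframe. The threshold of 0.5, the middle-frame rule and all function names are assumptions, not details of the AV-Portal.

```python
from typing import List, Sequence, Tuple

# A frame is represented by its normalized color histogram (bins sum to 1).
Histogram = Sequence[float]


def l1_distance(a: Histogram, b: Histogram) -> float:
    """Sum of absolute bin differences; ranges from 0 to 2 for normalized histograms."""
    return sum(abs(x - y) for x, y in zip(a, b))


def detect_shots(frames: List[Histogram], threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Return shots as (first_frame, last_frame) pairs, cutting wherever the
    distance between consecutive frames exceeds `threshold` (assumed value)."""
    if not frames:
        return []
    shots: List[Tuple[int, int]] = []
    start = 0
    for i in range(1, len(frames)):
        if l1_distance(frames[i - 1], frames[i]) > threshold:
            shots.append((start, i - 1))
            start = i
    shots.append((start, len(frames) - 1))
    return shots


def keyframes(shots: List[Tuple[int, int]]) -> List[int]:
    """Pick the middle frame of each shot as its representative keyframe (assumed rule)."""
    return [(first + last) // 2 for first, last in shots]


# Toy input: three "red" frames followed by four "blue" frames (hard cut at frame 3).
frames = [[1.0, 0.0]] * 3 + [[0.0, 1.0]] * 4
shots = detect_shots(frames)
print(shots)             # [(0, 2), (3, 6)]
print(keyframes(shots))  # [1, 4]
```

A single-threshold rule like this detects hard cuts but misses gradual transitions such as fades and dissolves, which production shot detectors usually handle separately.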

Because the available metadata are heterogeneous in origin, ranging from authoritative metadata provided by reliable experts to unreliable and faulty metadata from automated analysis, a context-aware approach to named entity mapping has been developed that considers data provenance, source reliability, source ambiguity and dynamic context boundaries [2]. The Integrated German Authority Files do not provide sufficient information for disambiguating named entities: they only provide taxonomic structures with hypernyms, hyponyms and synonyms, while cross-references are completely missing. Named entities are therefore also aligned with DBpedia entities and Wikipedia articles. The DBpedia graph structure, in combination with Wikipedia's textual resources and link graph, enables reliable disambiguation based on property graph analysis, link graph analysis, and text-based co-reference and co-occurrence analysis.
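The approach of [2] combines several context signals; the sketch below illustrates only two of the ideas named above, under simplifying assumptions. Link-graph relatedness is approximated by the Jaccard overlap of incoming links (a measure swapped in here for brevity), and source reliability by a fixed weight per metadata source. The weights, entity names and link sets are hypothetical toy data, not values from the AV-Portal, DBpedia or Wikipedia.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple

# Hypothetical reliability weights per metadata source (illustrative values only).
SOURCE_RELIABILITY: Dict[str, float] = {
    "authoritative": 1.0,  # metadata supplied by the user at ingest
    "ocr": 0.6,            # optical character recognition
    "asr": 0.4,            # automated speech-to-text transcription
}


@dataclass
class Mention:
    surface: str           # the text as it occurs in the metadata
    candidates: List[str]  # candidate entity IDs from the knowledge base


def relatedness(a: str, b: str, inlinks: Dict[str, Set[str]]) -> float:
    """Jaccard overlap of the sets of pages linking to a and b."""
    la, lb = inlinks.get(a, set()), inlinks.get(b, set())
    union = la | lb
    return len(la & lb) / len(union) if union else 0.0


def disambiguate(mention: Mention, context: List[Tuple[str, str]],
                 inlinks: Dict[str, Set[str]]) -> str:
    """Choose the candidate most related to already-resolved context entities,
    weighting each (entity, source) pair by the reliability of its source."""
    def score(candidate: str) -> float:
        return sum(SOURCE_RELIABILITY.get(source, 0.0) * relatedness(candidate, entity, inlinks)
                   for entity, source in context)
    return max(mention.candidates, key=score)


# Toy link graph: which (hypothetical) pages link to each entity.
inlinks = {
    "Jaguar_(animal)": {"Big_cat", "Felidae", "Panthera"},
    "Leopard": {"Big_cat", "Felidae", "Africa"},
    "Jaguar_Cars": {"Automobile", "Car_manufacturer"},
    "Ford_Motor_Company": {"Automobile", "Car_manufacturer"},
}
mention = Mention("Jaguar", ["Jaguar_(animal)", "Jaguar_Cars"])
context = [("Leopard", "authoritative"), ("Ford_Motor_Company", "asr")]
print(disambiguate(mention, context, inlinks))  # Jaguar_(animal)
```

With equal weights for all sources, the same data would select Jaguar_Cars (a score of 1.0 versus 0.5); down-weighting the less reliable speech transcript lets the authoritative context entity decide instead (0.5 versus 0.4).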

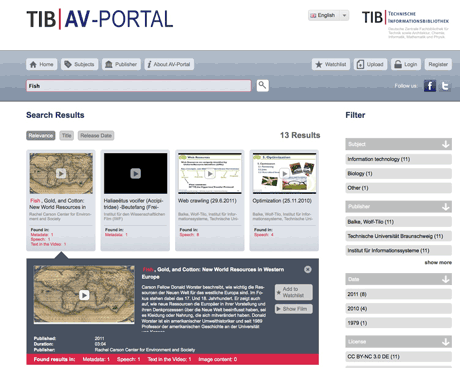

Semantic video search in the TIB AV-Portal is supported by content-based filter facets for search results (Figure 1). These facets let users explore the ever-increasing number of video assets, with the aim of making searching for AV-media as easy as it already is for textual information. A low-fidelity prototype of the AV-Portal was developed in 2011, the system was in beta operation in 2012-2013, and full operation of the portal is scheduled for 2014.
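The article does not describe how the facets are computed. A minimal sketch, assuming each search result carries sets of facet values (for example the visual concepts and named entities produced by the analysis steps described above), counts the values for display and narrows the results to those matching all selected facets. The Video class, the facet names and the sample records are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass
class Video:
    title: str
    facets: Dict[str, Set[str]]  # facet name -> values extracted for this video


def facet_counts(results: Iterable[Video], facet: str) -> Counter:
    """Count how many results carry each value of the given facet."""
    counts: Counter = Counter()
    for video in results:
        counts.update(video.facets.get(facet, set()))
    return counts


def apply_filters(results: Iterable[Video], selected: Dict[str, str]) -> List[Video]:
    """Keep only results that match every selected (facet, value) pair."""
    return [v for v in results
            if all(value in v.facets.get(facet, set()) for facet, value in selected.items())]


results = [
    Video("Lecture A", {"concept": {"drawing", "animation"}, "entity": {"Thermodynamics"}}),
    Video("Lecture B", {"concept": {"landscape"}, "entity": {"Geology"}}),
    Video("Lecture C", {"concept": {"animation"}, "entity": {"Thermodynamics"}}),
]
print(facet_counts(results, "concept")["animation"])  # 2
narrowed = apply_filters(results, {"concept": "animation", "entity": "Thermodynamics"})
print([v.title for v in narrowed])  # ['Lecture A', 'Lecture C']
```

Recomputing facet_counts on the narrowed list yields the counts to display for the next refinement step.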

Besides improving the quality of automated analysis, multilinguality is a main focus of future work. Scientific AV-media collected by TIB often comprise content and metadata in different languages. Taking into account the user's preferred language, text in different languages has to be aligned with a language-dependent knowledge base (the Integrated German Authority Files) and potentially displayed in a second language in the AV-Portal's GUI. The integration and mapping of international authority files, such as the Library of Congress Subject Headings or the Virtual International Authority File, is therefore the subject of ongoing and future work.
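One way to realize the language-dependent display described above, sketched under assumptions: if a subject heading from the Integrated German Authority Files is cross-linked to records in other authority files (such as the Library of Congress Subject Headings), a display label can be chosen in the user's preferred language, falling back to a second language. The identifiers below are placeholders rather than real authority-file IDs, and the two lookup tables stand in for whatever linked data store would actually hold them.

```python
from typing import Dict, List, Optional

# Placeholder authority records and their labels per language (hypothetical IDs).
LABELS: Dict[str, Dict[str, str]] = {
    "gnd:EXAMPLE-1": {"de": "Thermodynamik"},
    "lcsh:EXAMPLE-1": {"en": "Thermodynamics"},
}

# Hypothetical cross-links between equivalent records in different authority files.
SAME_AS: Dict[str, List[str]] = {
    "gnd:EXAMPLE-1": ["lcsh:EXAMPLE-1"],
}


def display_label(record_id: str, preferred: str, fallback: str = "de") -> Optional[str]:
    """Return a label in the preferred language, else in the fallback language,
    searching the record itself and all records linked to it."""
    records = [record_id] + SAME_AS.get(record_id, [])
    for lang in (preferred, fallback):
        for rec in records:
            label = LABELS.get(rec, {}).get(lang)
            if label is not None:
                return label
    return None


print(display_label("gnd:EXAMPLE-1", "en"))  # Thermodynamics
print(display_label("gnd:EXAMPLE-1", "fr"))  # Thermodynamik (German fallback)
```

The cross-links here are one-directional for brevity; a real mapping between authority files would normally be traversable in both directions.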

Links:

TIB: http://www.tib-hannover.de/en/

GetInfo: http://www.tib-hannover.de/en/getinfo/

Competence Centre for non-textual Materials: http://www.tib-hannover.de/en/services/competence-centre-for-non-textual-materials/

Linked Data Services of the German National Library: http://www.dnb.de/EN/lds

DBpedia: http://dbpedia.org/

References:

[1] Ch. Hentschel, I. Blümel, H. Sack: “Automatic Annotation of Scientific Video Material based on Visual Concept Detection”, in proc. of i-KNOW 2013, ACM, 2013, article 16, dx.doi.org/10.1145/2494188.2494213

[2] N. Steinmetz, H. Sack: “Semantic Multimedia Information Retrieval Based on Contextual Descriptions”, in proc. of ESWC 2013, Semantics and Big Data, Springer LNCS 7882, 2013, pp. 382-396, dx.doi.org/10.1007/978-3-642-38288-8_26

Please contact:

Harald Sack

Hasso-Plattner-Institute for IT Systems Engineering, University of Potsdam, Germany

E-mail:

Margret Plank

German National Library of Science and Technology (TIB)

E-mail: