by Thomas Kadiofsky, Robert Rößler and Christian Zinner

In the foreseeable future it will be commonplace for various land vehicles to be equipped with 3D sensors and systems that reconstruct the surrounding area in 3D. This technology can be used as part of an advanced driver assistance system (ADAS) for semi-autonomous operation (auto-pilot), or for fully autonomous operation, depending on the level of technological maturity and legal regulations. Existing robotic systems are mostly equipped with active 3D sensors such as laser scanning devices or time-of-flight (TOF) sensors. 3D sensors based on stereo cameras cost less and work well even in bright ambient light, but the 3D reconstruction process is more complex. We present recent results from our visual 3D reconstruction and mapping system based on stereo vision, which has been developed within the scope of several research projects.

The task of 3D reconstruction and mapping, also known as “Simultaneous Localization and Mapping” (SLAM), has largely been addressed with robotic platforms in indoor scenarios [1]. In such man-made environments, various simplifying assumptions can be made (horizontal floor, flat walls and perpendicular geometry). Thus, simple laser devices scanning a single horizontal plane are sufficient to create a 2D map (floor plan). When moving to outdoor environments, which is the working area for the vast majority of vehicles, this kind of 2D representation becomes insufficient and has to be extended to a 2.5D representation called an elevation map. 3D sensors are now required to capture the scene as a whole and not just along a single scan line. Stereo vision systems have this ability.

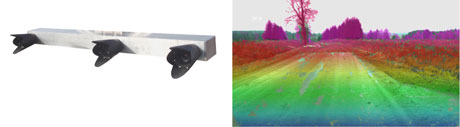

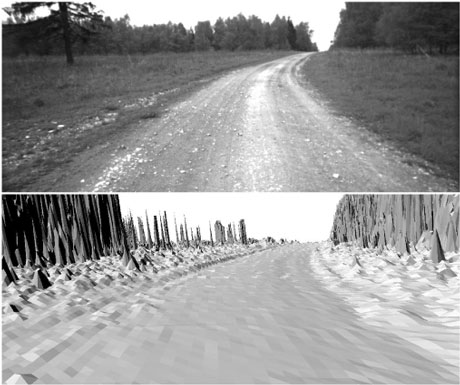

Stereo vision systems project distance values within a solid angle onto an image plane – the result is a depth image. A range of design factors determine the quality and accuracy of the depth images and, with them, the feasibility of stereo for the intended application. The stereo matching algorithm has to run at interactive frame rates and at high image resolution, and the stereo geometry and camera resolution must provide sufficient depth resolution even at larger ranges. We use a trinocular camera setup with 2-megapixel-class cameras and a large stereo baseline of 1.1 m, delivering useful distance data even at ranges beyond 100 m (Figure 1). The stereo matching process is performed by the real-time stereo engine S3E [2].
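
The link between baseline, camera resolution and depth resolution can be illustrated with the standard pinhole model for a rectified stereo pair, where depth is Z = f·B/d and a small disparity error Δd causes a depth error of roughly Z²/(f·B)·Δd. The following is a minimal sketch, not the S3E implementation. The baseline of 1.1 m is taken from the text, while the focal length `FOCAL_PX` and the disparity error `DISPARITY_ERROR_PX` are hypothetical values chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m, focal_px, baseline_m, disparity_error_px=1.0):
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


BASELINE_M = 1.1            # stereo baseline stated in the article
FOCAL_PX = 2000.0           # assumed focal length in pixels (hypothetical)
DISPARITY_ERROR_PX = 0.25   # assumed sub-pixel matching error (hypothetical)

if __name__ == "__main__":
    for z in (10.0, 50.0, 100.0):
        err = depth_uncertainty(z, FOCAL_PX, BASELINE_M, DISPARITY_ERROR_PX)
        print(f"range {z:6.1f} m -> approx. depth error {err:.3f} m")
```

Because the error grows with the square of the range and shrinks linearly with the baseline, a wide baseline is what keeps the depth data usable at long ranges.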

Single views generated by 3D sensors suffer from limited fields of view and resolutions as well as occlusions. Therefore, obtaining a more complete reconstruction of a vehicle’s surroundings requires the combination of multiple views from different positions and perspectives. The 3D data has to be processed and fused in a common coordinate frame, but it is typically captured relative to a sensor coordinate system. Hence, an important task is to recover the ego-motion of the vehicle between consecutive measurements and to localize it within the common frame. Currently we are using the following sensors for computing the trajectory:

- The intensity data of the stereo camera is used as input for visual odometry. Feature points are tracked across consecutive images and the established correspondences are used to compute the motion of the camera over time.

- An attitude and heading reference system (AHRS) uses inertial measurements to provide an orientation.

- Although we are aiming to be independent of GPS, it is currently used as an absolute measurement of the vehicle’s position.
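
To illustrate how motion can be computed from point correspondences, as in the visual odometry item above, the sketch below estimates a rigid motion from matched points by least squares. It is deliberately simplified to the planar case (a yaw angle and a 2D translation), which has a closed-form solution. The article does not state which estimation method the system uses, so the function `estimate_planar_motion` and its interface are assumptions made for illustration only.

```python
import math


def estimate_planar_motion(points_prev, points_curr):
    """Least-squares rigid 2D motion (theta, tx, ty) such that
    points_curr[i] ~= R(theta) @ points_prev[i] + (tx, ty)."""
    n = len(points_prev)
    if n < 2 or n != len(points_curr):
        raise ValueError("need at least two matched point pairs")

    # Centroids of both point sets.
    cpx = sum(p[0] for p in points_prev) / n
    cpy = sum(p[1] for p in points_prev) / n
    cqx = sum(q[0] for q in points_curr) / n
    cqy = sum(q[1] for q in points_curr) / n

    # Accumulate dot and cross products of the centred correspondences.
    s_cos = 0.0
    s_sin = 0.0
    for (px, py), (qx, qy) in zip(points_prev, points_curr):
        ax, ay = px - cpx, py - cpy
        bx, by = qx - cqx, qy - cqy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx

    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated previous centroid onto the current one.
    tx = cqx - (c * cpx - s * cpy)
    ty = cqy - (s * cpx + c * cpy)
    return theta, tx, ty
```

The result describes how static scene points move between two sets of sensor-frame coordinates; the motion of the camera itself is the inverse of this transform. A real system would additionally have to reject wrongly matched features before the estimation.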

All of the mentioned sources of the vehicle’s pose are noisy and therefore their results are inconsistent. We resolve these inconsistencies by using an Extended Kalman Filter (EKF) that computes the most probable position and orientation of the vehicle at a given sampling rate, based on the input measurements and their noise described by their covariance matrices. The positions of the sensors relative to one another, which are rigidly mounted on the vehicle, are determined in an offline calibration process.
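
The principle of weighting inconsistent measurements by their uncertainty can be shown with a one-dimensional Kalman filter. This is a strongly reduced sketch and not the filter used in the system: the state is a single position coordinate, the models are linear (the case in which an EKF reduces to the ordinary Kalman filter), and variances replace covariance matrices. The function names and all numeric values are hypothetical.

```python
def predict(x, p, delta, q):
    """Prediction step: apply a relative motion 'delta' (e.g. from odometry).
    The uncertainty grows by the motion noise variance q."""
    return x + delta, p + q


def update(x, p, z, r):
    """Update step: fuse an absolute measurement z (e.g. from GPS)
    with measurement noise variance r."""
    k = p / (p + r)                 # Kalman gain: trust in the measurement
    x_new = x + k * (z - x)         # pull the estimate towards z
    p_new = (1.0 - k) * p           # fused estimate is more certain
    return x_new, p_new


if __name__ == "__main__":
    x, p = 0.0, 1.0                 # initial position estimate and variance
    odometry = [1.0, 1.1, 0.9]      # relative displacements (hypothetical)
    gps = [1.2, 2.0, 3.1]           # absolute positions (hypothetical)
    for delta, z in zip(odometry, gps):
        x, p = predict(x, p, delta, q=0.05)
        x, p = update(x, p, z, r=0.5)
        print(f"position {x:.3f}, variance {p:.3f}")
```

The gain `k` approaches 1 when the prediction is uncertain compared with the measurement and approaches 0 in the opposite case, so noisy sources influence the result less than precise ones.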

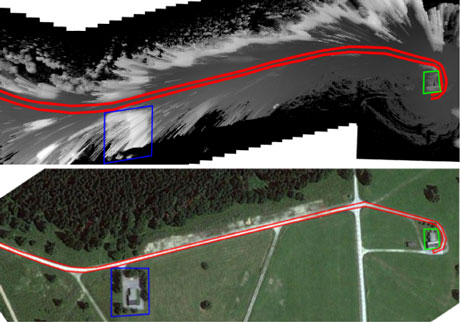

To support terrains of varying morphology, we have chosen the 2.5D digital elevation model for map data representation. In addition to height information, a cost map encoding the traversability of the terrain is also generated [3]. For instance, grassland and a path are both quite flat, but the path should be preferred over grassland in a motion planning module. Another important feature of the representation is its ability to handle changes in the scene in order to correctly represent features such as moving obstacles.
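
The idea of a 2.5D elevation map with an attached cost value can be sketched as a grid that stores one height per cell, plus a cost derived from the height steps between neighbouring cells. This is a minimal illustration under simplifying assumptions and not the method described in [3]: the helper names, the averaging of heights and the step-based cost are hypothetical choices. A purely geometric cost such as this one cannot distinguish a path from equally flat grassland, and handling of scene changes is omitted.

```python
import math


def build_elevation_map(points, cell_size):
    """Map each grid cell (ix, iy) to the mean height of the 3D points
    (x, y, z) that fall into it; points are given in the common map frame."""
    sums = {}
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size))
        total, count = sums.get(key, (0.0, 0))
        sums[key] = (total + z, count + 1)
    return {key: total / count for key, (total, count) in sums.items()}


def step_cost(elevation, cell, max_step):
    """Cost in [0, 1] from the largest height step to the known 4-neighbours.
    1.0 means the step reaches or exceeds max_step (treated as an obstacle).
    Returns None if no neighbour has been observed yet."""
    ix, iy = cell
    height = elevation[cell]
    neighbours = ((ix + 1, iy), (ix - 1, iy), (ix, iy + 1), (ix, iy - 1))
    steps = [abs(elevation[n] - height) for n in neighbours if n in elevation]
    if not steps:
        return None
    return min(max(steps) / max_step, 1.0)
```

Storing a single height per cell is what makes the representation 2.5D rather than full 3D: overhanging structures cannot be represented, but the map stays compact enough for real-time updates.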

Example visualizations of map data that have been generated by our system in real-time are shown in Figures 2 and 3.

This research has been funded by the Austrian Security Research Program KIRAS – an initiative of the Austrian Federal Ministry for Transport, Innovation and Technology (bmvit).

Link:

http://www.ait.ac.at/research-services/research-services-safety-security/3d-vision-and-modelling

References:

[1] Andreas Nüchter: “3D Robotic Mapping”. Springer, Berlin 2009, ISBN 978-3540898832

[2] M. Humenberger et al.: “A fast stereo matching algorithm suitable for embedded real-time systems”, J.CVIU, 114 (2010), 11

[3] T. Kadiofsky, J. Weichselbaum, C. Zinner: “Off-Road Terrain Mapping Based on Dense Hierarchical Real-Time Stereo Vision”, Advances in Visual Computing, Springer LNCS, Vol. 7431 (2012).

Please contact:

Christian Zinner, Thomas Kadiofsky, Robert Rößler

AIT Austrian Institute of Technology GmbH, Austria (AARIT)

E-mail: