by Iason Oikonomidis, Nikolaos Kyriazis and Antonis A. Argyros

The FORTH 3D hand tracker recovers the articulated motion of human hands robustly, accurately and in real time (20 Hz). It does so with a carefully designed model-based approach that combines a powerful optimization framework, GPU processing and the visual information provided by the Kinect sensor.

Humans use their hands in most of their everyday life activities. Thus, the development of technical systems that track the 3D position, orientation and full articulation of human hands from markerless visual observations can be of fundamental importance in supporting a diverse range of applications.

Developing such a system is difficult for several reasons: dexterous hand motion is high-dimensional, hand parts are hard to tell apart because of their uniform colour, and observations are missing whenever fingers or the palm occlude one another. Moreover, hands often move fast, and tracking must rely on relatively low-resolution cameras placed at a considerable distance from the scene.

To alleviate some of these problems, some successful methods employ specialized motion capture hardware and/or visual markers. Unfortunately, such methods require a complex and costly hardware setup that interferes with the observed scene, and they have limited potential for use outside the lab. Several attempts have been made to address the problem using markerless visual data only. These approaches can be categorized as appearance-based or model-based.

Appearance-based methods have much lower computational cost and hardware complexity, but they recognize only a discrete set of hand poses, typically those in the method’s training set. Model-based methods instead simulate the appearance of an arbitrarily positioned and articulated hand and compare it with actual observations. 3D hand tracking is then performed by systematically searching for hand motions whose simulated appearance best matches the observed images. Such methods provide a continuum of solutions but are computationally costly.

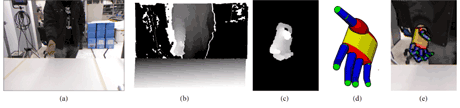

The FORTH 3D hand tracker is an accurate, robust and real-time model-based solution to the problem of 3D hand tracking. The input is provided by a Kinect sensor and comprises an RGB image and a depth map, which assigns a depth measurement to each RGB pixel (Figures 1a and 1b). Skin colour detection is used to isolate the hand in the RGB and depth images (Figure 1c). The adopted 3D hand model comprises a set of appropriately assembled geometric 3D primitives (Figure 1d). Each hand pose is represented as a vector of 27 parameters: three for global position; four for global orientation (quaternion representation); and 20 for the relative articulation of the fingers.
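To make the 27-parameter representation concrete, the following is a minimal Python sketch. The class name `HandPose`, its field names, the ordering of the parameters in the flat vector and the quaternion component order are illustrative assumptions; they are not taken from the tracker's library.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List, Sequence

N_POSITION, N_ORIENTATION, N_ARTICULATION = 3, 4, 20
N_PARAMS = N_POSITION + N_ORIENTATION + N_ARTICULATION  # 27 in total


@dataclass
class HandPose:
    """Hypothetical container for one hand hypothesis (layout is an assumption)."""
    position: List[float]      # global 3D position
    orientation: List[float]   # global orientation as a quaternion (4 values)
    articulation: List[float]  # 20 parameters for the finger articulation

    @classmethod
    def from_vector(cls, v: Sequence[float]) -> "HandPose":
        """Split a flat 27-vector into its three groups."""
        if len(v) != N_PARAMS:
            raise ValueError(f"expected {N_PARAMS} parameters, got {len(v)}")
        v = [float(x) for x in v]
        return cls(v[0:3], v[3:7], v[7:27])

    def to_vector(self) -> List[float]:
        """Concatenate the three groups back into a flat 27-vector."""
        return self.position + self.orientation + self.articulation

    def normalized(self) -> "HandPose":
        """Return a copy whose quaternion has unit length."""
        norm = sqrt(sum(q * q for q in self.orientation))
        if norm == 0.0:
            raise ValueError("zero quaternion cannot be normalized")
        unit = [q / norm for q in self.orientation]
        return HandPose(list(self.position), unit, list(self.articulation))
```

A flat vector is convenient for an optimizer that treats the pose as a point in a 27-dimensional space, while the grouped view is easier to use when posing a hand model. Only unit quaternions represent rotations, which is why the sketch offers a normalization step.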

Given a parametric 3D model of a hand, the goal is to estimate the 27 parameters that make the model most compatible with the visual observations. Compatibility between observations and hypotheses is judged by comparing depth maps, pixel by pixel. Actual observations already contain depth maps. Hand hypotheses are converted to depth maps using 3D rendering. Rendering comparable depth maps is made possible by the established 3D hand model and the knowledge of the camera parameters. Simply stated, we can approximate how a hand in 3D might look through the Kinect sensor. The sum of pixel-wise differences constitutes the objective function and is parameterized over hand configurations. Optimization of this function is performed with a variant of Particle Swarm Optimization (PSO). The result of this optimization is the output of the method for a given frame (Figure 1e). Temporal continuity is exploited to track hand articulation in a sequence of frames.
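The interplay between rendering, depth comparison and PSO can be illustrated with a small Python sketch. It is not the tracker's implementation: `toy_render` is a hypothetical three-parameter stand-in for the hand renderer, the use of absolute differences in `depth_discrepancy` is an assumption, and `pso_minimize` is the canonical inertia-weight form of PSO with commonly used coefficients rather than the variant employed by the tracker.

```python
import random
from typing import Callable, List, Sequence, Tuple

DepthMap = List[List[float]]


def depth_discrepancy(observed: DepthMap, rendered: DepthMap) -> float:
    """Sum of absolute pixel-wise depth differences (absolute value is an assumption)."""
    return sum(abs(o - r)
               for row_o, row_r in zip(observed, rendered)
               for o, r in zip(row_o, row_r))


def toy_render(params: Sequence[float], size: int = 8) -> DepthMap:
    """Hypothetical stand-in for the hand renderer: depth map of a tilted plane."""
    z0, sx, sy = params
    return [[z0 + sx * x + sy * y for x in range(size)] for y in range(size)]


def pso_minimize(objective: Callable[[Sequence[float]], float],
                 lower: Sequence[float], upper: Sequence[float],
                 n_particles: int = 32, n_generations: int = 40,
                 w: float = 0.72, c1: float = 1.49, c2: float = 1.49,
                 seed: int = 0) -> Tuple[List[float], float]:
    """Canonical PSO: returns the best position found and its objective value."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]              # each particle's personal best
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]  # best of the whole swarm
    for _ in range(n_generations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                # Move the particle and keep it inside the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


# Toy usage: recover the plane parameters that produced the "observed" depth map.
true_params = [1.0, 0.02, -0.03]
observed = toy_render(true_params)
lower, upper = [0.5, -0.1, -0.1], [1.5, 0.1, 0.1]


def objective(h: Sequence[float]) -> float:
    return depth_discrepancy(observed, toy_render(h))


estimate, score = pso_minimize(objective, lower, upper)
print(estimate, score)
```

In the tracker, the hypothesis would be a 27-parameter hand pose and the renderer would produce a depth map of the hand model as seen by the Kinect. One plausible way to exploit temporal continuity, stated here as an assumption, is to restrict `lower` and `upper` to a neighbourhood of the solution found for the previous frame.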

The computationally demanding parts of the process have been implemented so as to run efficiently on a GPU. The resulting system tracks hand articulations with an accuracy of 5 mm at a rate of 20 Hz on a quad-core Intel i7 920 CPU with 6 GB RAM and an NVIDIA GTX580 GPU. Higher accuracy can be obtained at the cost of slower tracking rates.

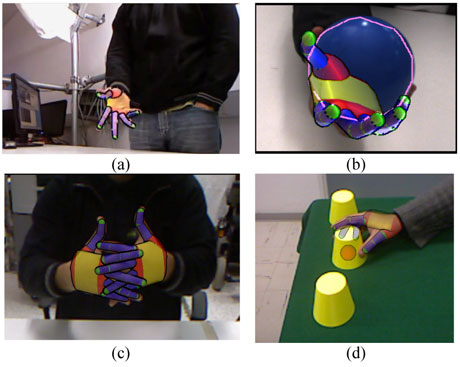

Our work [1] (Figure 2a) is the first to demonstrate that a model-based approach can produce a practical hand tracking system. The core of the tracking framework has also been employed to provide state-of-the-art solutions for problems of even higher dimensionality and complexity, e.g., for tracking a hand interacting with an object [2] (Figure 2b), for tracking two strongly interacting hands [3] (Figure 2c) and for tracking the state of a complex scene where a hand interacts with several objects [4] (Figure 2d).

The FORTH 3D hand tracker has been implemented as a library and can be downloaded as middleware for the OpenNI 2.0 framework, so that it can be used freely for research purposes. Several researchers around the world have already used this library to support their research. Additionally, several European, American and Asian companies have expressed interest in using it as an enabling technology for developing applications in fields including health, education, gesture recognition, gaming and automated robot programming.

An extension of our work was awarded the 1st prize at the CHALEARN Gesture Recognition demonstration competition, organized in conjunction with ICPR 2012 (Tsukuba, Japan, Nov. 2012).

This research is partially supported by the EU-funded projects GRASP, FP7-ROBOHOW.COG and WEARHAP.

Links:

FORTH 3D Hand Tracker web page:

http://cvrlcode.ics.forth.gr/handtracking/

FORTH 3D Hand Tracker OpenNI middleware library download:

http://www.openni.org/files/3d-hand-tracking-library/

References:

[1] I. Oikonomidis, N. Kyriazis, A. Argyros: “Efficient model-based 3D tracking of hand articulations using Kinect”, in BMVC 2011, http://youtu.be/Fxa43qcm1C4

[2] I. Oikonomidis, N. Kyriazis, A. Argyros: “Full DOF tracking of a hand interacting with an object by modeling occlusions and physical constraints”, in ICCV 2011, http://youtu.be/e3G9soCdIbc

[3] I. Oikonomidis, N. Kyriazis, A. Argyros: “Tracking the articulation of two strongly interacting hands”, in CVPR 2012, http://youtu.be/N3ffgj1bBGw

[4] N. Kyriazis, A. Argyros: “Physically Plausible 3D Scene Tracking: The Single Actor Hypothesis”, in CVPR 2013, http://youtu.be/0RCsQPXeHRQ

Please contact:

Antonis Argyros

ICS-FORTH, Greece

Tel: +30 2810 391704

E-mail: