by Sergio Escalera Guerrero

The Human Pose Recovery and Behaviour Analysis group (HuPBA) at the University of Barcelona is pursuing a line of research on the multi-modal analysis of humans in visual data. The resulting technology is being applied in several scenarios with high social impact, including sign language recognition, assistive technology and supported diagnosis for the elderly and people with mental/physical disabilities, fitness conditioning, and Human Computer Interaction.

The analysis of human actions in visual and sensor data is one of the most challenging topics in Computer Vision. Driven by the need for user-friendly interfaces for a new generation of devices, including smartphones, tablets and game consoles, the field of gesture recognition is thriving. Given the inherent difficulties of automatically analysing humans in standard images, alternative visual modalities from different input sensors, including 3D range sensors and infrared or thermal cameras, have attracted a lot of attention. The next challenge is to integrate and analyse these different modalities within smart devices.

The Multi-modal Human Pose Recovery and Behaviour Analysis project, developed by the HuPBA group and the Computer Vision Centre at the University of Barcelona, has been running since 2009. It is a frontier research project addressing the development of novel computer vision and pattern recognition techniques capable of recovering human poses from digital images, depth maps, thermal data, and inertial sensors under a variety of conditions, including changes in illumination, low resolution, variations in appearance, partial occlusions, the presence of artefacts, and changes in point of view. Moreover, the project aims to develop new machine learning techniques for the analysis of time series and behaviours. The main members of the group are: Albert Clapés, Xavier Pérez-Sala, Victor Ponce, Antonio Hernández-Vela, Miguel Angel Bautista, Miguel Reyes, Dr. Oriol Pujol, and Dr. Sergio Escalera. To date, in collaboration with different universities (Aalborg, Berkeley, Boston, Carnegie Mellon), we have developed a range of new generation applications, including:

- Automatic sign language recognition: Using RGB data to detect hands and arms, track their trajectories and automatically translate gestures into words within a 20-word vocabulary of Spanish Sign Language.

- Assistive technology for the elderly and people with mental and physical disabilities: Using RGB-Depth and inertial sensors (such as accelerometers) to detect and recognize users within an indoor environment in a non-invasive way, identifying daily activities and objects present in the scene, and providing feedback by means of audio-visual reminders, including alerting the family or a specialist if a risk event occurs. This system was designed in collaboration with the Spanish Government.

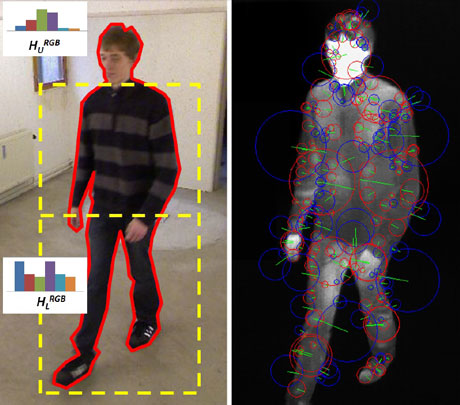

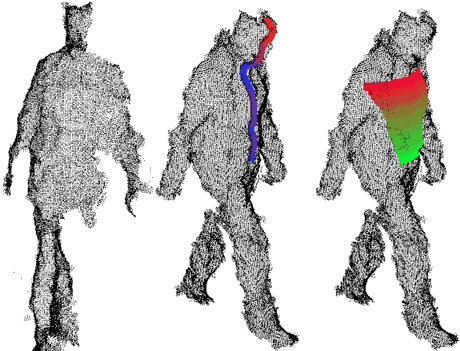

- Intelligent security 2.0: Using bi-modal RGB-Depth and tri-modal RGB-Depth-Thermal analysis to recognize users and object membership in different environments, so that thefts can be detected automatically even with no light in the scene. Figures 1 and 2 show examples of the automatically computed soft biometrics from RGB, thermal, and depth input data sources [1,2].

- Supported diagnosis in psychiatry: Using RGB-Depth computer vision techniques to automatically recognize a set of behavioural indicators related to Attention Deficit Hyperactivity Disorder (ADHD). As a result, our system is able to summarize the behaviour of children diagnosed with ADHD, supporting psychiatrists both in diagnosis and in monitoring progress during treatment. This work was carried out in collaboration with Tauli Hospital in Barcelona.

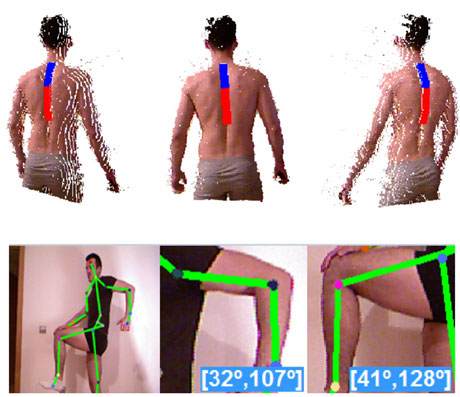

- Intelligent assistance in physiotherapy, rehabilitation, and fitness conditioning: Using RGB-Depth data from patients to detect particular body relations and ranges of movement, supporting the diagnosis and follow-up of physiotherapy and rehabilitation treatment for patients with musculoskeletal disorders. The system was designed in collaboration with the Instituto de Fisioterapia Global Mezieres (IFGM) in Barcelona. Validations of the method in sport centres demonstrated its reliability in analysing athletes’ training programs and making recommendations for performance improvements. Figure 3 shows an example of the system analysis [3].

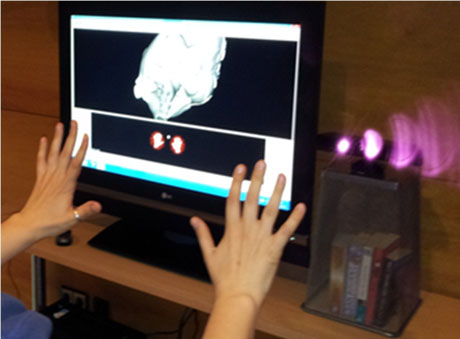

- Human Computer Interaction systems: We have applied techniques for recognizing human hand pose from depth data in order to define a new generation of human computer interaction interfaces. This design has been tested in retail, in medical image navigation, and in living labs. Figure 4 shows an example of the system.
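As a rough illustration of the "range of movements" measurement mentioned for the physiotherapy application, the sketch below computes the angle at a joint from three 3D points and its extent over a sequence of frames. It is a minimal example under stated assumptions, not the group's method: the function names `joint_angle_deg` and `range_of_motion` are hypothetical, and the joint coordinates are assumed to have already been estimated from RGB-Depth data by some pose recovery step.

```python
import math


def joint_angle_deg(a, b, c):
    """Return the angle in degrees at joint b formed by the 3D points a-b-c.

    Hypothetical helper: a, b and c are (x, y, z) tuples, for example
    shoulder, elbow and wrist positions estimated from a depth map.
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0.0:
        raise ValueError("joint coincides with a neighbouring point")
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def range_of_motion(frames):
    """Return (min, max) joint angle in degrees over a sequence of (a, b, c) triples."""
    angles = [joint_angle_deg(a, b, c) for a, b, c in frames]
    return min(angles), max(angles)


# Illustrative data only: an arm bent at a right angle, then fully extended.
frames = [
    ((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
    ((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (-1.0, 0.0, 0.0)),
]
low, high = range_of_motion(frames)
print(f"range of motion: {low:.1f} to {high:.1f} degrees")
```

In practice, a measurement of this kind would only be as reliable as the underlying joint estimates, so noisy depth data would normally call for temporal smoothing before the angles are compared.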

In international competitions, our group won the Human Layout Analysis challenge at PASCAL VOC 2010 (http://pascallin.ecs.soton.ac.uk/challenges/VOC/voc2010/), and took third prize in the Kinect© 2012 challenge at the ICPR conference in Japan (http://gesture.chalearn.org/dissemination). We are continuing our research on novel multi-modal descriptors and on human behaviour analysis in time series. As a result of this research, we expect to increase the number of real applications in leisure, security and health, and to transfer them to society.

References:

[1] Albert Clapés, Miguel Reyes, Sergio Escalera: “Multi-modal User Identification and Object Recognition Surveillance System”, PRL, 2013.

[2] A. Møgelmose, C. Bahnsen et al.: “Tri-modal Person Re-identification with RGB, Depth and Thermal Features”, Perception Beyond the Visible Spectrum, CVPR, 2013.

[3] M. Reyes, A. Clapés et al.: “Automatic Digital Biometry Analysis based on Depth Maps”, Computers in Industry, 2013.

Please contact:

Sergio Escalera Guerrero

Universitat de Barcelona, Computer Vision Centre, Spain

Tel +34 93 4020853

E-mail:

http://www.maia.ub.es/~sergio/