by Csaba Benedek, Zsolt Jankó, Dmitry Chetverikov and Tamás Szirányi

Two labs of SZTAKI have jointly developed a system for creating and visualizing mixed reality scenes by combining a spatio-temporal model of a real outdoor environment with models of people acting in a studio. We use a LIDAR sensor to measure an outdoor scene with walking pedestrians, detect and track the pedestrians, and reconstruct the static part of the scene. The scene is then modified and populated with human avatars created in a 4D reconstruction studio.

Real-time reconstruction of outdoor dynamic scenes is essential in intelligent surveillance, video communication, mixed reality and related applications. Compared to conventional 2D video streams, 3D data flows of real-world events (4D scenes) provide a more authentic visual experience and additional functionality. A reconstructed 4D scene can be viewed and analysed from any viewpoint, and it can also be modified virtually by the user. However, building an interactive 4D video system is a challenging task that requires processing, understanding and visualizing large amounts of spatio-temporal data in real time.

This issue is being addressed by “Integrated 4D”, a joint internal R&D project of two labs of SZTAKI. We have built an original system for spatio-temporal (4D) reconstruction, analysis, editing, and visualization of complex dynamic scenes. The i4D system [1] efficiently integrates two very different kinds of information: the outdoor 4D data acquired by a rotating multi-beam LIDAR sensor, and the dynamic 3D models of people obtained in a 4D studio. This integration allows the system to understand and represent the visual world at different levels of detail: the LIDAR provides a global description of a large-scale dynamic scene, while the 4D studio builds a detailed model, an avatar, of an actor (typically, a human) moving in the studio.

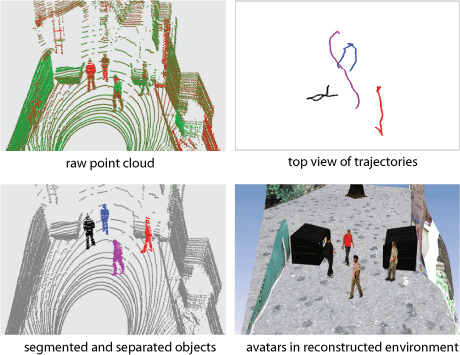

In our project, a typical scenario is an outdoor environment with multiple walking people. The LIDAR measures the scene from a fixed position and yields a time-varying point cloud. This data is processed to build a 3D model of the static part of the scene and to detect and track the moving people. Each pedestrian is represented by a sparse, moving point cluster and a trajectory. Each sparse cluster is then replaced by an avatar created in the 4D studio of MTA SZTAKI. The result is a reconstructed, textured scene with avatars that follow the trajectories of the pedestrians in real time.
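Turning per-frame point clusters into trajectories can be illustrated with a minimal sketch. It assumes each frame has already been segmented into a list of pedestrian clusters (lists of `(x, y, z)` points in metres), and it uses simple greedy nearest-neighbour association of ground-plane centroids with a hypothetical gating distance `gate`. This is not the tracker described in [2]; the names `centroid_xy` and `track` are illustrative only.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]


def centroid_xy(cluster: List[Point]) -> Tuple[float, float]:
    """Project a pedestrian's point cluster onto the ground plane and average it."""
    n = len(cluster)
    return (sum(p[0] for p in cluster) / n, sum(p[1] for p in cluster) / n)


def track(frames: List[List[List[Point]]],
          gate: float = 0.5) -> Dict[int, List[Tuple[int, float, float]]]:
    """Greedy nearest-neighbour tracking of cluster centroids across frames.

    Hypothetical sketch: returns track id -> list of (frame index, x, y).
    A cluster farther than `gate` metres from every active track starts a new track.
    """
    tracks: Dict[int, List[Tuple[int, float, float]]] = {}
    next_id = 0
    for t, clusters in enumerate(frames):
        # Only tracks that were updated in the previous frame can be extended.
        active = {tid: pts[-1] for tid, pts in tracks.items() if pts[-1][0] == t - 1}
        for cluster in clusters:
            x, y = centroid_xy(cluster)
            best: Optional[int] = None
            best_d = gate
            for tid, (_, px, py) in active.items():
                d = math.hypot(x - px, y - py)
                if d < best_d:
                    best, best_d = tid, d
            if best is None:
                tracks[next_id] = [(t, x, y)]
                next_id += 1
            else:
                tracks[best].append((t, x, y))
                del active[best]  # at most one cluster per track per frame
    return tracks
```

Greedy association is order-dependent and breaks down when people walk close together; a probabilistic tracker handles such cases far better, which is why this should be read only as an outline of the data flow.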

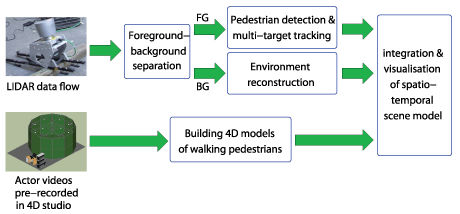

Figure 1 shows a flowchart of the i4D system. The LIDAR data flow involves scanning the environment to acquire a point cloud sequence, segmenting foreground and background with a robust probabilistic approach, detecting and tracking the moving pedestrians to generate motion trajectories, geometrically reconstructing the ground, walls and other field objects, and, finally, texturing the resulting 3D models with images of the scene. Technical details are given in [2]. Our 4D reconstruction studio creates textured dynamic avatars of people walking in the studio; its hardware and software components are presented in [3]. The integration and visualization block combines the graphical elements into a joint dynamic scene model, provides editing options and visualizes the final 4D model, in which the avatars move through the scene along the assigned trajectories.
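To give a feel for the foreground/background step, here is a deliberately simple sketch. It assumes the rotating multi-beam LIDAR frame is stored as a range image (one row per laser beam, one column per azimuth bin, values in metres) and models each cell with a running Gaussian. This is a swapped-in, much simpler technique than the robust probabilistic approach of [2]; the class name `RangeBackground` and the parameters `alpha`, `k` and `min_std` are hypothetical.

```python
import math
from typing import List


class RangeBackground:
    """Per-cell running Gaussian model of a LIDAR range image (hypothetical stand-in)."""

    def __init__(self, first_frame: List[List[float]], alpha: float = 0.05,
                 k: float = 3.0, min_std: float = 0.1):
        self.alpha = alpha      # learning rate of the running mean/variance
        self.k = k              # foreground threshold in standard deviations
        self.min_std = min_std  # floor on sigma (metres) to absorb sensor noise
        self.mean = [row[:] for row in first_frame]
        self.var = [[min_std ** 2] * len(row) for row in first_frame]

    def segment(self, frame: List[List[float]]) -> List[List[bool]]:
        """Return a foreground mask (True = moving object) and update background cells."""
        mask: List[List[bool]] = []
        for i, row in enumerate(frame):
            mrow: List[bool] = []
            for j, r in enumerate(row):
                mu, var = self.mean[i][j], self.var[i][j]
                sigma = max(math.sqrt(var), self.min_std)
                fg = abs(r - mu) > self.k * sigma
                if not fg:
                    # Exponentially weighted update of mean and variance,
                    # applied only where the cell still looks like background.
                    d = r - mu
                    self.mean[i][j] = mu + self.alpha * d
                    self.var[i][j] = (1 - self.alpha) * (var + self.alpha * d * d)
                mrow.append(fg)
            mask.append(mrow)
        return mask
```

Foreground cells would then be grouped into connected blobs and converted back to 3D points to form the pedestrian clusters. A per-cell model like this is sensitive to the sensor's angular jitter and to partially occluded beams, which is the kind of noise a robust probabilistic model is meant to suppress.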

Sample results of the 4D reconstruction and visualization process are shown in Figure 2. The pedestrian trajectories are obtained by motion-based segmentation and tracking in the dynamic point cloud. Each avatar follows its prescribed 3D trajectory, and its orientation is automatically turned to the proper walking direction, which is determined from the trajectory.
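One plausible way to derive an avatar's orientation from its trajectory is to take the direction of motion between consecutive ground-plane positions. The sketch below is an assumption, not the i4D implementation: the function name `headings`, the yaw convention (radians, counter-clockwise from the +x axis) and the `min_step` threshold are all hypothetical.

```python
import math
from typing import List, Tuple


def headings(traj: List[Tuple[float, float]], min_step: float = 0.05,
             initial: float = 0.0) -> List[float]:
    """Yaw angle for each trajectory point (hypothetical sketch).

    The heading at point i is the direction of the step to point i + 1; the last
    point reuses the previous heading. Steps shorter than `min_step` metres
    (a pedestrian standing still, or tracking jitter) keep the previous heading
    so the avatar does not spin in place.
    """
    out: List[float] = []
    h = initial
    for i in range(len(traj)):
        if i + 1 < len(traj):
            dx = traj[i + 1][0] - traj[i][0]
            dy = traj[i + 1][1] - traj[i][1]
            if math.hypot(dx, dy) >= min_step:
                h = math.atan2(dy, dx)
        out.append(h)
    return out
```

In practice the trajectory would usually be smoothed before this step, since raw centroids of sparse LIDAR clusters fluctuate from frame to frame.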

The main novelty of the i4D system is that it integrates two different modalities of spatio-temporal perception operating at different scales. This may pave the way towards real-time, free-viewpoint, scalable visualization of large time-varying scenes, which is crucial for future mixed reality and multimodal communication applications. We plan to extend the project to LIDAR data collected from a moving platform and to add modules for automatic field object recognition and surface texturing.

Link: http://web.eee.sztaki.hu/i4d/

References:

[1] “Procedure and system for creating integrated three-dimensional model” (in Hungarian), Hun PO: P1300328/4, patent pending, 2013.

[2] C. Benedek et al.: “An Integrated 4D Vision and Visualisation System”, Computer Vision Systems, Lecture Notes in Computer Science, vol. 7963, pp. 21-30, 2013.

[3] J. Hapák, Z. Jankó, D. Chetverikov: “Real-Time 4D Reconstruction of Human Motion”, Articulated Motion and Deformable Objects, Lecture Notes in Computer Science, vol. 7378, pp. 250-259, 2012.

Please contact:

Csaba Benedek

SZTAKI, Hungary

E-mail: