by Patrick Ruch, Thomas Brunschwiler, Werner Escher, Stephan Paredes and Bruno Michel

The spectacular progress in the development of computers has followed Moore’s Law for at least six decades. Now we are hitting a barrier. While neuromorphic computing architectures have been touted as a promising basis for future low-power, bio-inspired microprocessors, imitating the packaging of mammalian brains is a new concept that may open new horizons independent of novel transistor technologies or non-von Neumann architectures.

Looking at the transistor count in microprocessors over the last four decades, we have gone from 2,500 to 2,500,000,000, a gain of six orders of magnitude.
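The growth quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch that takes the two transistor counts and the forty-year span from the text and derives the number of doublings and the implied doubling time; the function name `growth_summary` is purely illustrative.

```python
import math

def growth_summary(start_count, end_count, years):
    """Return (orders of magnitude, number of doublings, years per doubling)."""
    ratio = end_count / start_count
    orders = math.log10(ratio)      # factors of ten gained
    doublings = math.log2(ratio)    # factors of two gained
    return orders, doublings, years / doublings

# Figures from the text: 2,500 -> 2,500,000,000 transistors in about 40 years.
orders, doublings, doubling_time = growth_summary(2_500, 2_500_000_000, 40)
print(f"{orders:.1f} orders of magnitude, {doublings:.1f} doublings, "
      f"one doubling every {doubling_time:.1f} years")
```

Under these figures the count doubles roughly every two years, which is the pace usually associated with Moore’s Law.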

But today, two major roadblocks to further progress have arisen: power density and communication delays. The fastest computer at present was built by Fujitsu and is installed at the Riken Institute in Japan; it has a capacity of 8 petaflops and a power consumption of more than twelve megawatts, enough for some ten thousand households. As to communication, while pure on-chip processing tasks can be completed in shorter and shorter times as technology improves, the transmission delays between processors and memory or other processors grow as a percentage of total time and severely limit overall task completion.
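To put the power figures for the Fujitsu machine in perspective, the short sketch below converts them into operations per watt and into average power per household. It uses only the numbers quoted in the text; since the text says "more than twelve megawatts", the computed efficiency is an upper bound, and the variable names are illustrative.

```python
def flops_per_watt(flops, watts):
    """Computing operations per second delivered per watt of electrical power."""
    return flops / watts

MACHINE_FLOPS = 8e15   # 8 petaflops, as quoted in the text
MACHINE_WATTS = 12e6   # "more than twelve megawatts": a lower bound on power
HOUSEHOLDS = 10_000    # "some ten thousand households"

efficiency = flops_per_watt(MACHINE_FLOPS, MACHINE_WATTS)
per_household_kw = MACHINE_WATTS / HOUSEHOLDS / 1e3

print(f"at most {efficiency / 1e9:.2f} gigaflops per watt")
print(f"about {per_household_kw:.1f} kW per household")
```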

Energy considerations

A processor architecture that imitates the mammalian brain promises a revolution. Compared with the mammalian brain, today’s computers are terribly inefficient and wasteful of energy: depending on the workload, the number of computing operations per unit energy achieved by the best man-made machines is on the order of 0.01% of that of the human brain. This inefficiency occurs not only in the processing chips themselves but also in the energy-hungry air conditioners that are needed to remove the heat generated by the processors.

The Aquasar project was described in ERCIM News No. 79 (October 2009). In Aquasar, the individual semiconductor chips are water cooled, with water being 4,000 times more effective than air in removing heat. Aquasar uses microfluidic channels in chip-mounted water coolers to transport the heat. The cooling works with relatively hot coolant, so the recovered thermal energy is used for heating a building. Aquasar is installed and running at ETH Zurich; a successor machine with a capacity of three petaflops is currently being installed in Munich. The integration of microchannels into the semiconductor chips themselves promises to sustain very high power dissipation as the industry strives to increase integration density further and to move to 3D chip stacks.

Going further in the new paradigm, another cause of energy inefficiency is the loss (in power and in space) incurred in delivering the required electrical energy to the chips. In high-performance microprocessors, the pins dedicated to power supply in a chip package easily outnumber the pins dedicated to signal I/O, and the number of power pins has been growing faster than the total number of pins for all processor types. This power problem is essentially a wiring problem, which is aggravated by the fact that wiring for signal I/O has a problem of its own. The energy needed to switch a 1 mm interconnect is more than an order of magnitude larger than the energy needed to switch one MOSFET; 25 years ago, the two switching energies were roughly equal. This disparate evolution of communication and computation is even more pronounced for another vital performance metric, latency. The reason behind this trend is simply that transistors have become smaller while the chip size has not followed suit, leading to substantially longer total wire lengths.

A proposed new solution to this two-fold wiring problem, again patterned after the mammalian brain, is to use the coolant fluid as the means of delivering energy to the chips. It is probably easiest to think of this as a kind of distributed electrochemical battery in which the cooling electrolyte is constantly “recharged” while the heat is removed. This is how energy is delivered to the mammalian brain, and with great effectiveness.

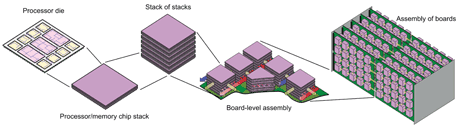

Vision of an ultra-dense assembly of chip stacks in a 3D computer with fluidic heat removal and power delivery.

Taking the human brain as the best example of a low-power-density computer, we use technological analogs for sugar as the fuel and for the trans-membrane proton pumps, which evolution has brought about, as the chemical-to-electrical energy converters. These analogs are inorganic redox couples, which have mainly been studied for grid-scale energy storage in the form of redox flow batteries. These artificial electrochemical systems offer higher power densities than their biological counterparts, but would still be hard pressed to meet the challenge of satisfying the energy needs of a fully loaded microprocessor. However, future high-performance computers built around this fluidic power delivery scheme would be much less power-intensive owing to their reduced communication cost.

Integration density and communication

The number of communication links between logic blocks in a microprocessor depends on the complexity of the interconnect architecture and on the number of logic blocks in a way that can be described by a power law known as Rent’s Rule. Today, all microprocessors suffer from a breakdown of Rent’s Rule for high logic block counts because the number of interconnects does not scale smoothly beyond the chip edge. The limited number of package pins is one of the main reasons behind the performance limitation of modern computing systems known as the memory wall, in which it takes several hundred to a thousand CPU clock cycles to fetch data from main memory. A dense, three-dimensional physical arrangement of semiconductor chips would allow much shorter communication paths and a corresponding reduction in internal-delay roadblocks. Such dense packaging of chips would be physically possible were it not for the problems of heat removal and energy delivery in today’s architectures. With the techniques just described for handling electrical energy delivery and heat removal, this dense packaging can be achieved and communication bottlenecks with their associated delays avoided.
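Rent’s Rule is commonly written as T = t · g^p, where T is the number of external terminals, g the number of logic blocks, t the average number of terminals per block and p the Rent exponent. The sketch below illustrates how the terminal count predicted by this power law outgrows a fixed package pin budget as the block count rises. The values of `t`, `p` and `PIN_BUDGET` are illustrative assumptions chosen for this example, not figures from the article.

```python
def rent_terminals(blocks, t=4.0, p=0.6):
    """Rent's Rule, T = t * g**p: terminals needed by a group of g logic blocks.

    t and p are illustrative defaults (assumptions), not measured values.
    """
    return t * blocks ** p

PIN_BUDGET = 5_000  # hypothetical number of package pins available

for blocks in (10**3, 10**6, 10**9):
    needed = rent_terminals(blocks)
    status = "fits" if needed <= PIN_BUDGET else "exceeds pin budget"
    print(f"{blocks:>13,} blocks -> {needed:>12,.0f} terminals ({status})")
```

With these assumed parameters the predicted terminal count passes the pin budget well before a billion blocks, which is the kind of discontinuity at the chip edge that the paragraph above describes.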

The fluidic means of removing heat allows huge increases in packaging density, while the fluidic delivery of power with the same medium saves the space used by conventional hard-wired energy delivery. The savings in space allow much denser architectures, not to mention the sharply reduced energy needs and improved latency as communication paths are chopped up into much shorter fragments.

Conclusion

Using this new paradigm, the hope is that a petaflop supercomputer could eventually be built in the space taken by today’s desktop PC. That is only a factor of eight less in performance than the above-mentioned fastest computer in the world today! The second reference below describes the paradigm in detail.

Links:

Gerhard Ingmar Meijer, Thomas Brunschwiler, Stephan Paredes, and Bruno Michel, “Using Waste Heat from Data Centres to Minimize Carbon Dioxide Emission”, ERCIM News, No. 79, October 2009:

http://ercim-news.ercim.eu/en79/special/using-waste-heat-from-data-centres-to-minimize-carbon-dioxide-emission

P. Ruch, T. Brunschwiler, W. Escher, S. Paredes, and B. Michel, “Toward 5-Dimensional Scaling: How Density Improves Efficiency in Future Computers”, IBM J. Res. Develop., Vol. 55 (5), 15:1-15:13, October 2011:

http://ieeexplore.ieee.org/xpl/freeabs_all.jsp?arnumber=6044603

Please contact:

Bruno Michel, IBM Zurich Research Laboratory

E-mail: