The Distributed Interactive Engineering Toolbox, or DIET, project started with the goal of implementing distributed scheduling strategies on compute Grids. In recent times, the Cloud phenomenon has been gaining traction thanks to its on-demand resource provisioning model and its pay-as-you-go billing approach. This led to a natural step forward in the evolution of DIET, with the inclusion of Cloud platforms in resource provisioning. DIET will be used to test resource provisioning heuristics and to port new applications that mix grids and Clouds.

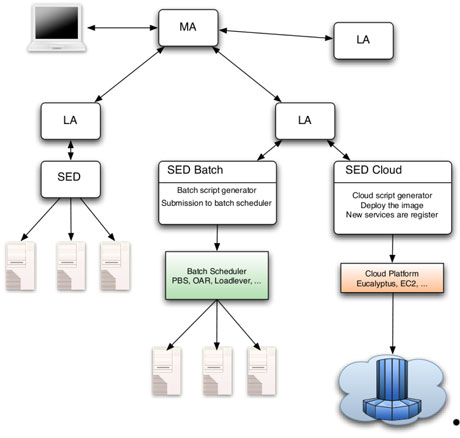

In 2000, the Grids and Algorithms, or GRAAL, research team of INRIA, located at the Ecole Normale Supérieure de Lyon, France, initiated the Distributed Interactive Engineering Toolbox project under the supervision of Frédéric Desprez and Eddy Caron. The project focuses on the development of scalable middleware, with initial efforts on distributing the scheduling problem across a hierarchy of agents, at the top of which sits the Master Agent, or MA. At the bottom level of a DIET hierarchy one can find the Service Daemon, or SeD, agents. SeDs are connected to the MA by means of Local Agents, or LAs. Over the last few years, the Cloud phenomenon has been gaining more and more traction in industry and in research communities because of its qualities, the most interesting of which are its on-demand resource provisioning model and its pay-as-you-go billing approach. We deem these features to be highly interesting for DIET.

From Grid to Cloud

The first step towards the Cloud was to enable DIET to take advantage of on-demand resources. This should be done at the platform level and be transparent to the DIET user. The authors and David Loureiro have targeted the Eucalyptus Cloud platform as it implements the same management interface as Amazon EC2, but unlike the latter it allows for customized deployments on the user’s hardware. The team has implemented the scenario in which the DIET platform sits completely outside of the Eucalyptus platform and treats the Cloud only as a provider of compute resources when needed. This opened the path towards new research on grid and Cloud resource provisioning, data management over these platforms and hybrid scheduling in general.

Cloud application resource scaling

The on-demand provisioning model for resource allocation and the pay-as-you-go billing approach that Cloud systems offer make possible more cost-effective approaches to application resource provisioning. A Cloud application can scale its resources up or down to better match its usage and to reduce the number of unused, yet paid for, resources. This leads to smart auto-scaling strategies.

Drawing on research into self-similarity in web traffic, the authors have developed a resource usage prediction model that identifies similar past resource usage patterns in a historical archive. Once identified, these patterns provide insight into what the short-term usage of the platform will be. This approach can be used to predict the usage of the most important types of resources of a Cloud client and thus indicate what type of virtual machine to instantiate or terminate when the Cloud application is rescaled. We have tested this approach against resource usage traces from one Cloud client and three production grids and obtained encouraging results.
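The idea of predicting short-term usage from similar past patterns can be illustrated with a minimal sketch. It is not the authors' model: the Euclidean distance, the fixed window length and the names `predict_usage`, `window` and `horizon` are all assumptions made for illustration. The sketch looks for the past window that most resembles the latest samples and returns what followed it.

```python
from math import sqrt


def distance(a, b):
    """Euclidean distance between two equal-length usage windows."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def predict_usage(history, window, horizon):
    """Return the `horizon` samples that followed the past window
    most similar to the latest `window` samples of `history`.

    Hypothetical helper: the similarity measure is an assumption.
    """
    # Last start index whose window still has `horizon` samples after it.
    last_start = len(history) - window - horizon
    if window < 1 or horizon < 1 or last_start < 0:
        raise ValueError("history too short for this window and horizon")
    recent = history[-window:]
    # Pick the earliest past window with the smallest distance to `recent`.
    best_start = min(
        range(last_start + 1),
        key=lambda i: distance(history[i:i + window], recent),
    )
    follow = best_start + window
    return history[follow:follow + horizon]


# Illustrative trace (made-up values): a repeating pattern with a spike.
cpu_trace = [1, 2, 3, 10, 1, 2, 3, 10, 1, 2, 3]
forecast = predict_usage(cpu_trace, window=3, horizon=1)
```

With this made-up trace, the latest samples match an earlier ramp that was followed by a spike, so the sketch forecasts a spike. A rescaling policy could use such a forecast to decide whether to start or stop virtual machines.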

Economy-based resource allocation

The dynamics that Cloud systems bring, in combination with the agent-based DIET platform, led us towards an economic model for resource provisioning. The ultimate goal is to guarantee fairness in resource sharing and to avoid starvation. The authors have done this by simulating the dynamics of a tender/contract-net market. In this market, contracts are established between platform users (the DIET clients) and resource providers (the DIET SeDs). Users send requests to the DIET platform for the execution of their tasks and resource providers reply with offers, each containing the cost and duration of the task execution. A user-defined utility function is applied to identify the best offer and the corresponding SeD will run the task.
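The offer selection step can be sketched as follows. This is an illustration only, not DIET's API: the `Offer` type, the `weighted_utility` function and its `cost_weight` parameter are hypothetical, and the weighted sum is just one possible user-defined utility.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    """An offer sent by a resource provider (hypothetical structure)."""
    sed: str         # identifier of the SeD making the offer
    cost: float      # price asked for running the task
    duration: float  # estimated execution time


def weighted_utility(offer, cost_weight=0.5):
    """Example utility: higher is better, so cost and duration count
    negatively. The weighting scheme is an assumption."""
    return -(cost_weight * offer.cost + (1 - cost_weight) * offer.duration)


def select_offer(offers, utility=weighted_utility):
    """Return the offer that maximizes the user-defined utility."""
    if not offers:
        raise ValueError("no offers received")
    return max(offers, key=utility)


# Made-up offers from three providers.
offers = [
    Offer("sed-a", cost=10.0, duration=5.0),
    Offer("sed-b", cost=4.0, duration=8.0),
    Offer("sed-c", cost=6.0, duration=20.0),
]
balanced_choice = select_offer(offers)
fastest_choice = select_offer(offers, utility=lambda o: -o.duration)
```

Passing the utility as a function keeps the selection logic independent of each user's preferences: one user may weigh cost and duration equally, while another may only care about finishing quickly.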

In this scenario, platform users compete against each other for resource usage while the resource providers compete against each other for profit. Resource prices, which determine the offer costs, fluctuate depending on each provider’s resource usage level. Hence, users will tend to choose SeDs with more free resources and therefore lower prices.
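The article does not give the pricing rule, so the following sketch simply assumes a linear one to show how usage-dependent prices steer users towards less loaded providers. The function `unit_price` and its parameters `base_price` and `sensitivity` are hypothetical.

```python
def unit_price(base_price, used, total, sensitivity=1.0):
    """Price per resource unit, growing linearly with utilization.

    Assumed rule: price = base_price * (1 + sensitivity * utilization),
    where utilization = used / total lies in [0, 1].
    """
    if total <= 0 or not 0 <= used <= total:
        raise ValueError("expected 0 <= used <= total and total > 0")
    utilization = used / total
    return base_price * (1 + sensitivity * utilization)


# Two made-up providers with the same base price but different load.
idle_price = unit_price(2.0, used=0, total=8)
busy_price = unit_price(2.0, used=4, total=8)
```

Under this assumed rule, the half-loaded provider asks a higher price than the idle one, so a cost-sensitive utility function would favour the idle provider.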

Figure 1: The cloud-enabled DIET hierarchy.

What’s next?

Future directions include implementing a complete automatic resource scaling strategy for Cloud clients and testing it against real-life situations.

We are also looking to integrate Cloud-specific elements into the DIET scheduler for existing applications. The final goal is to see whether deployment on a Cloud platform would yield better performance and, if so, with what scheduling modifications. Finally, we plan to study hybrid scheduling strategies mixing static grids and dynamic Clouds for more efficient resource management of large-scale platforms.

Link:

The DIET project: http://graal.ens-lyon.fr/DIET

Please contact:

Adrian Muresan

Ecole Normale Supérieure de Lyon, France

Tel: +33 4 37 28 76 43

E-mail: