For a more sustainable Cloud Computing scenario, the paradigm must shift from “time to solution” to “kWh to the solution”. This requires a holistic approach to the cloud computing stack in which each level cooperates with the other levels through a vertical dialogue.

Due to the escalating price of power, energy-related costs have become a major economic factor for Cloud Computing infrastructures. Our research community is therefore being challenged to rethink resource management strategies, adding energy efficiency to a list of critical operating parameters that already includes service performance and reliability.

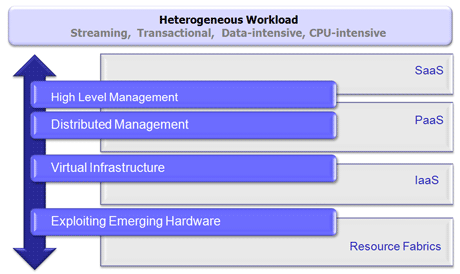

Current workloads are heterogeneous, including not only CPU-intensive jobs but also streaming, transactional data-intensive, and other types of jobs. These jobs currently run on heterogeneous clusters of hybrid hardware (with different types of chips, accelerators, GPUs, etc.). In addition to improved performance, the goals that will direct research proposals at the Barcelona Supercomputing Center (BSC) include fulfilling Service Level Agreements (SLAs), considering energy consumption, and taking into account the new wave of popular programming models such as MapReduce. These goals, however, have made resource management a burning issue in today’s systems. For BSC, self-management is the solution to this complexity and a way to increase the adaptability of the execution environment to the dynamic behaviour of Cloud Computing. We are considering a whole control cycle with a holistic approach in which each level cooperates with the other levels through a vertical dialogue. Figure 1 shows a diagram that summarizes the role of each approach and integrates our current proposals.

Figure 1: Cloud computing stack organization and BSC contributions.

Virtual Infrastructure

At the Infrastructure-as-a-Service (IaaS) level, BSC is contributing the EMOTIVE framework to the research community. This framework simplifies the development of new middleware services for the Cloud. EMOTIVE is an open-source software infrastructure for implementing Cloud computing solutions that provides elastic and fully customized virtual environments in which to execute Cloud services. One of its main distinguishing features is that it eases the development of new resource management proposals, thus contributing to innovation in this research area. Recent work extends the framework with plugins for third-party providers and with federation support for simultaneous access to several clouds, which can take energy-aware parameters into consideration.

Distributed Management

At the Platform-as-a-Service (PaaS) level, we are working on application placement, which decides where applications run and which resources they are allocated. To this end, application placement must be designed to make proper decisions, yielding a solution that considers energy constraints as well as performance parameters. We are paying particular attention to MapReduce workloads (currently the most prominent emerging model for cloud scenarios), working on runtimes that allow the execution of applications of this type to be controlled and dynamically adjusted with energy awareness. Finally, energy awareness is addressed at two levels: the compute infrastructure (data placement and resource allocation) and the network infrastructure (improving data locality and placement to reduce network utilization).
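To make the idea of energy-aware placement concrete, the following is a minimal sketch rather than BSC's actual method. It assumes a linear power model per host, treats an unloaded host as switched off, and uses free CPU capacity as a stand-in for the performance constraint. The names `Host` and `place`, and all the numbers, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    capacity: float    # CPU cores offered by the host (hypothetical unit)
    idle_watts: float  # power drawn when switched on but idle (assumed)
    peak_watts: float  # power drawn at full utilization (assumed)
    used: float = 0.0  # cores currently allocated

    def power(self, used: float) -> float:
        """Assumed linear power model; a host with no load counts as switched off."""
        if used == 0:
            return 0.0
        span = self.peak_watts - self.idle_watts
        return self.idle_watts + span * used / self.capacity


def place(apps, hosts):
    """Greedy heuristic: place each application (largest demand first) on the
    host that can fit it with the smallest increase in power draw."""
    placement = {}
    for app, demand in sorted(apps.items(), key=lambda kv: -kv[1]):
        candidates = [h for h in hosts if h.capacity - h.used >= demand]
        if not candidates:
            raise ValueError(f"no host can fit {app}")
        best = min(
            candidates,
            key=lambda h: h.power(h.used + demand) - h.power(h.used),
        )
        best.used += demand
        placement[app] = best.name
    return placement


hosts = [Host("h1", 8, 100, 200), Host("h2", 8, 60, 180)]
apps = {"web": 4, "batch": 3, "db": 2}
placement = place(apps, hosts)
total_watts = sum(h.power(h.used) for h in hosts)
print(placement, total_watts)
```

Because switching on a host costs its idle power, the heuristic tends to consolidate applications on hosts that are already running and only wakes another host when capacity runs out. A real placement engine would also have to account for SLAs, data locality, and network utilization.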

High Level Management

We are considering extending the functions of the Platform-as-a-Service layer to provide better support to the Software-as-a-Service (SaaS) layer, driving the resource allocation process with high-level parameters. The main goal is to propose a new resource management approach that aims to fulfil the Business Level Objectives (BLO) of both the provider and its customers in a large-scale distributed system. We have preliminary results that describe how decision-making processes combine several factors in a synergistic way, depending on the provider’s interests, including business-level parameters such as risk, trust, and energy.

Exploiting Emerging Hardware

BSC is interested in the study and development of both new hardware architectures that deliver the best performance/energy ratios and new approaches to exploit such architectures. Both lines of research are complementary and aim to improve the efficiency of hardware platforms at a low level. Preliminary results demonstrate that real-time energy modelling (based on processor characterization) can be leveraged to make decisions. We are focusing on leveraging hybrid systems to improve energy savings, thereby addressing the low-level programmability of such systems, which can result in poor resource utilization and, in turn, poor energy efficiency.
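As an illustration of energy modelling based on processor characterization, here is a minimal sketch under stated assumptions, not the model used at BSC. It assumes that power depends linearly on CPU utilization, fits that line to a few calibration measurements, and then integrates the predicted power over a utilization trace to obtain a "kWh to the solution" figure. The function names and the calibration values are hypothetical.

```python
def fit_linear(samples):
    """Ordinary least-squares fit of watts = a + b * utilization."""
    n = len(samples)
    mean_u = sum(u for u, _ in samples) / n
    mean_w = sum(w for _, w in samples) / n
    sxx = sum((u - mean_u) ** 2 for u, _ in samples)
    sxy = sum((u - mean_u) * (w - mean_w) for u, w in samples)
    b = sxy / sxx
    a = mean_w - b * mean_u
    return a, b


def energy_kwh(trace, a, b, interval_s):
    """Sum predicted power over a utilization trace sampled every
    interval_s seconds and convert joules to kWh."""
    joules = sum((a + b * u) * interval_s for u in trace)
    return joules / 3.6e6  # 1 kWh = 3.6e6 J


# Hypothetical characterization: (utilization in [0, 1], measured watts).
calibration = [(0.0, 100.0), (0.5, 150.0), (1.0, 200.0)]
a, b = fit_linear(calibration)

# One hour of a job running at 50% utilization, sampled once per second.
kwh = energy_kwh([0.5] * 3600, a, b, 1.0)
print(a, b, kwh)
```

Once fitted, the model is cheap enough to evaluate at every sampling interval, which is what makes it usable as an input to run-time decisions. Real processors are rarely this linear, so a production model would likely use more counters than utilization alone.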

The Autonomic Systems and e-Business Platforms department at the Barcelona Supercomputing Center (BSC) is proposing a holistic approach to the cloud computing stack in which each level (SaaS, PaaS, IaaS) cooperates with the other levels through a vertical dialogue, with the aim of building a “Smart Cloud” that can address the present challenges of the Cloud. Current research at BSC focuses on autonomic resource allocation and heterogeneous workload management with performance and energy-efficiency goals for Internet-scale virtualized data centres comprising heterogeneous clusters of hybrid hardware.

Link:

http://www.bsc.es/autonomic

Please contact:

Jordi Torres

Barcelona Supercomputing Center UPC Barcelona Tech / SpaRCIM, Spain

Tel: +34 93 401 7223

E-mail:

http://people.ac.upc.edu/torres