Green Building Blocks - Software Stacks for Energy-Efficient Clusters and Data Centres

by Dimitrios S. Nikolopoulos

The Green Building Blocks (GBB) project is a joint effort between the Computer Architecture and VLSI Laboratory (CARV) of the Institute of Computer Science at the Foundation for Research and Technology – Hellas (FORTH-ICS), and the Department of Computer Science at the Virginia Polytechnic Institute and State University (CS@VT). GBB is a stacked assembly of device drivers, performance monitors, operating system and runtime modules, which interoperate to provide software capabilities for reducing the energy footprint of applications running on clusters and data centres, while sustaining performance near the maximum levels achievable with the hardware at hand.

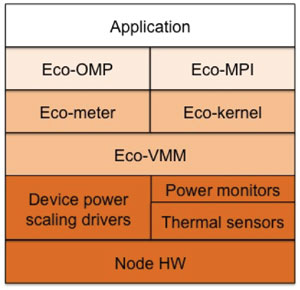

GBB Architecture Layers

The architecture of GBB is shown in Figure 1. GBB provides a software backbone for fully instrumented, energy-friendly clusters. GBB targets clusters and data centres built entirely from power-scalable devices, that is, devices with multiple power states and programmable power state transitions, including cores, CPUs, DRAM and disks. The instrumentation layer of GBB uses hardware event monitors on processor, memory, networking and I/O devices, continuous AC and DC power monitors, and on-board and ambient thermal sensors. The runtime layers implement dynamic power management policies, based on performance and energy signatures of applications collected at runtime. The policies target holistic optimization of energy efficiency at the system level.
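
To make "power-scalable device" concrete, the following Python sketch models a device as a set of power states with a software-programmable transition between them. The class names, the capacity and power fields, and the example P-state values are illustrative assumptions, not part of GBB.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class PowerState:
    """One operating point of a device, e.g. a core P-state or a DRAM power mode."""
    name: str
    capacity: float     # relative capacity (normalized frequency, bandwidth, ...)
    power_watts: float  # nominal power draw in this state


@dataclass
class PowerScalableDevice:
    """A device with several power states and software-programmable transitions."""
    name: str
    states: list[PowerState]
    current: int = 0  # index of the active state in `states`
    transitions: list[tuple[str, str]] = field(default_factory=list)

    @property
    def state(self) -> PowerState:
        return self.states[self.current]

    def set_state(self, state_name: str) -> PowerState:
        """Switch to the named power state and record the (from, to) transition."""
        for i, s in enumerate(self.states):
            if s.name == state_name:
                self.transitions.append((self.state.name, s.name))
                self.current = i
                return s
        raise ValueError(f"{self.name}: unknown power state {state_name!r}")


# Hypothetical two-state core: full speed at 20 W, 80% capacity at 14 W.
core = PowerScalableDevice("core0", [PowerState("P0", 1.0, 20.0),
                                     PowerState("P1", 0.8, 14.0)])
core.set_state("P1")
print(core.state, core.transitions)
```

The same abstraction can describe cores, whole CPUs, DRAM or disks; only the meaning of "capacity" changes from device to device.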

GBB Implementation

The current GBB prototype is implemented on non-virtualized deployments of Linux clusters, and includes the Eco-meter, Eco-kernel, Eco-OpenMP and Eco-MPI modules (Figure 1).

Figure 1: Green Building Blocks architecture.

Eco-meter is a hardware monitor that collects periodic samples of device-specific event counters, from which it builds a performance, power and thermal signature (the PPT profile) of a running application. Application signatures are partitioned into phases of computation separated by communication or synchronization events. The signatures derived by Eco-meter are formulated as polynomial models, which correlate samples of event rates and configurations of hardware resources (specifically, the allocated capacity and power mode of each resource) with performance and power consumption. User-level runtime systems use Eco-meter in their policy modules to predict the performance and power consumption of each application phase in response to probes that temporarily change the underlying configuration and capacity of hardware resources.
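
Eco-meter's signatures are polynomial models fitted to counter samples. The sketch below shows the general idea with ordinary least squares in plain Python. It fits a second-degree polynomial in one event rate (for instance, cache misses per cycle) and the core frequency to predict a per-phase metric such as execution time or power. The feature set, the function names and the solver are assumptions for illustration, not Eco-meter's actual model.

```python
from __future__ import annotations


def features(event_rate: float, freq_ghz: float) -> list[float]:
    # Second-degree polynomial terms in (event rate, frequency); this choice
    # of terms is an illustrative assumption.
    r, f = event_rate, freq_ghz
    return [1.0, r, f, r * f, r * r, f * f]


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(m[i][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit(samples: list[tuple[float, float, float]]) -> list[float]:
    """Least-squares fit via the normal equations X^T X w = X^T y.

    Each sample is (event_rate, freq_ghz, observed_metric)."""
    rows = [features(r, f) for r, f, _ in samples]
    ys = [y for _, _, y in samples]
    k = len(rows[0])
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights: list[float], event_rate: float, freq_ghz: float) -> float:
    return sum(w * x for w, x in zip(weights, features(event_rate, freq_ghz)))


# Hypothetical samples: (event_rate, freq_ghz, measured_metric).
samples = [(r, f, 2 + 0.5*r + 1.2*f - 0.3*r*f + 0.1*r*r + 0.05*f*f)
           for r in (0.1, 0.5, 1.0, 2.0) for f in (1.0, 1.5, 2.0, 2.5)]
w = fit(samples)
print(round(predict(w, 1.5, 1.8), 4))  # probe a configuration not sampled
```

A runtime policy would fit one such model per phase and evaluate `predict` over candidate configurations instead of actually running each of them.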

Eco-kernel provides an API for scaling device capacities and power modes on demand. Its purpose is to replace the application-oblivious power management policies of the operating system with application-specific, phase-aware policies controlled explicitly by the runtime.
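
As a rough analogue of such an API on stock Linux, the sketch below wraps the kernel's cpufreq sysfs files. It is a hypothetical stand-in, not Eco-kernel itself. It assumes the `userspace` governor, under which `scaling_setspeed` accepts a target frequency in kHz; writing the real files requires root. The class and method names are illustrative.

```python
from __future__ import annotations

from pathlib import Path


class CpuFreqKnob:
    """Runtime-facing frequency knob over the Linux cpufreq sysfs files.

    Hypothetical stand-in for an Eco-kernel-style API. Assumes the
    'userspace' governor, under which scaling_setspeed takes a value in kHz.
    """

    def __init__(self, sysfs_root: str = "/sys/devices/system/cpu") -> None:
        self.root = Path(sysfs_root)

    def _attr(self, cpu: int, name: str) -> Path:
        return self.root / f"cpu{cpu}" / "cpufreq" / name

    def take_control(self, cpus: list[int]) -> None:
        """Hand frequency decisions from the OS governor to the runtime."""
        for cpu in cpus:
            self._attr(cpu, "scaling_governor").write_text("userspace\n")

    def set_phase_khz(self, cpus: list[int], khz: int) -> None:
        """Apply the frequency chosen for the upcoming phase to each CPU."""
        for cpu in cpus:
            self._attr(cpu, "scaling_setspeed").write_text(f"{khz}\n")
```

For a dry run, point `sysfs_root` at a scratch directory that mirrors the `cpuN/cpufreq` layout; the runtime would call `set_phase_khz` at each phase boundary.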

Eco-OpenMP is an energy-efficient implementation of OpenMP. The Eco-OpenMP runtime uses the interfaces of Eco-meter and Eco-kernel to implement optimization policies that improve energy efficiency while guaranteeing a hard lower bound on performance. Eco-OpenMP simultaneously operates two software ‘knobs’ for controlling power efficiency: dynamic concurrency throttling (DCT) and dynamic voltage and frequency scaling (DVFS). By alleviating contention between threads for shared resources such as memory bandwidth and cache space, DCT creates opportunities to reduce dynamic power consumption while sustaining, and occasionally improving, performance. DVFS likewise reduces dynamic power consumption while sustaining performance. Both knobs are most effective during memory-intensive phases of computation, when cores spend much of their time stalled on memory. The DCT and DVFS policies in Eco-OpenMP are based on multi-linear regression models that correlate performance with samples of event counters, thread count and core layout on systems with multiple multi-core processors. The combined phase-aware DCT-DVFS optimization scheme used in Eco-OpenMP reduces execution time by 13.7%, total system power consumption by 5.9%, overall energy consumption by 18.8% and energy-delay product by 39.5% on the NAS OpenMP benchmark suite.
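
A combined DCT-DVFS decision can be viewed as a small search: for each phase, predict time and power for every (thread count, frequency) pair and keep the cheapest configuration that still meets the performance bound. The sketch below assumes a `predict(threads, freq)` callback standing in for the per-phase regression models. The toy memory-bound model and its numbers are hypothetical.

```python
from itertools import product


def choose_config(predict, thread_options, freq_options, time_bound_s):
    """Return (energy_J, threads, freq_GHz) with the lowest predicted energy
    among configurations whose predicted time meets the performance bound.

    predict(threads, freq) -> (time_s, power_W), e.g. per-phase models.
    Returns None if no configuration satisfies the bound.
    """
    best = None
    for threads, freq in product(thread_options, freq_options):
        time_s, power_w = predict(threads, freq)
        if time_s > time_bound_s:
            continue  # would violate the hard performance bound
        energy_j = time_s * power_w
        if best is None or energy_j < best[0]:
            best = (energy_j, threads, freq)
    return best


def toy_memory_bound_phase(threads, freq_ghz):
    """Hypothetical model: speedup saturates at 4 threads (memory bandwidth),
    only 30% of the time scales with frequency, power grows with threads * f^2."""
    time_s = 8.0 / min(threads, 4) * (0.3 * 2.0 / freq_ghz + 0.7)
    power_w = 20.0 + 10.0 * threads * (freq_ghz / 2.0) ** 2
    return time_s, power_w


# Baseline (8 threads, 2.0 GHz) takes 2.0 s at 100 W; allow up to 2.3 s.
print(choose_config(toy_memory_bound_phase, (2, 4, 8), (1.5, 2.0), 2.3))
```

Under this toy model the search settles on 4 threads at 1.5 GHz, using about 93.5 J instead of the baseline's 200 J at a 10% slowdown. DCT removes threads that only add contention, and DVFS lowers the clock where memory stalls hide it.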

Eco-MPI is an energy-efficient implementation of the communication and tasking runtime substrates of the Message Passing Interface (MPI). Its power-efficiency optimization policy is based on a model of the slack time that arises as MPI tasks interact during communication phases. Eco-MPI is built on top of Eco-meter and Eco-kernel and follows the same phase-sensitive optimization strategy as Eco-OpenMP: applications are decomposed into phases separated by communication events. Eco-MPI estimates the slack caused by communication and computation load imbalance using a novel model that calculates the rippling effects of slack on interacting tasks, both within a node and across nodes. Eco-MPI is the first software prototype to achieve real-time power reduction on clusters of up to 256 nodes, yielding up to 14% total energy savings on the Lawrence Livermore National Laboratory (LLNL) Sequoia Benchmark suite. Eco-MPI is integrated with Eco-OpenMP to implement power-aware execution of hybrid MPI-OpenMP programs.
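
The basic intuition behind slack-driven DVFS can be sketched for a single phase. A task that finishes early would otherwise wait at the closing communication event, so it can run at a lower frequency and still arrive in time. This simplified sketch is not Eco-MPI's model. It ignores the rippling of slack across interacting tasks and nodes and the cost of frequency transitions. The function names and frequency table are hypothetical.

```python
def phase_slack(compute_times_s):
    """Per-task slack in one phase: time each task would wait for the slowest
    task before the communication event that closes the phase."""
    critical = max(compute_times_s)
    return [critical - t for t in compute_times_s]


def pick_frequency(compute_s, slack_s, freqs_ghz, f_max_ghz):
    """Lowest available frequency whose stretched compute time still fits in
    compute_s + slack_s. Assumes compute time scales as f_max / f (a fully
    CPU-bound phase), which is a conservative simplification."""
    budget = compute_s + slack_s
    for f in sorted(freqs_ghz):
        if compute_s * f_max_ghz / f <= budget:
            return f
    return f_max_ghz  # no slack to exploit: run at full speed


# Hypothetical phase with three imbalanced tasks (seconds of computation).
times = [0.9, 2.0, 1.5]
slack = phase_slack(times)
print([pick_frequency(t, s, [1.2, 1.6, 2.0, 2.4], 2.4) for t, s in zip(times, slack)])
```

Here the critical task stays at 2.4 GHz while the two lighter tasks drop to 1.2 GHz and 2.0 GHz, so the phase length is unchanged.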

Current and Future Work

GBB is being extended in four directions. The first is virtualization of the power, thermal and performance instrumentation infrastructure, followed by the derivation of appropriate metrics for apportioning resources to applications so as to improve energy efficiency under performance constraints. The second is the implementation of cluster-level static and dynamic task aggregation. Static task aggregation packs application tasks onto fewer nodes than were apportioned to the application at submission time. Dynamic task aggregation re-clusters tasks after submission and is enabled by virtual machine migration. The third direction is the integration of multi-device scaling capabilities into GBB, in particular DRAM and disk scaling, within a unified modeling and policy framework. This research will enable GBB to target data-intensive workloads dominated by I/O and memory traffic. The fourth direction is the development of a GBB runtime for the MapReduce programming model, targeting large-scale data processing on compute clouds.
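
Static task aggregation is essentially a packing problem. As a hedged illustration, the sketch below uses the classic first-fit-decreasing bin-packing heuristic. This is a swapped-in technique, not GBB's planned policy. Expressing task demand as integer core counts and node capacity as cores per node are assumptions.

```python
def aggregate_tasks(task_loads, node_capacity):
    """First-fit decreasing: place each task (largest first) on the first node
    with enough spare capacity, opening a new node only when none fits.
    Returns a list of nodes, each a list of task ids."""
    nodes = []  # task ids placed on each node
    spare = []  # remaining capacity of each node
    order = sorted(range(len(task_loads)), key=lambda i: task_loads[i], reverse=True)
    for tid in order:
        load = task_loads[tid]
        if load > node_capacity:
            raise ValueError(f"task {tid} exceeds node capacity")
        for n, free in enumerate(spare):
            if load <= free:
                nodes[n].append(tid)
                spare[n] -= load
                break
        else:  # no existing node has room
            nodes.append([tid])
            spare.append(node_capacity - load)
    return nodes


# Hypothetical job: six tasks needing 4, 6, 2, 3, 2 and 1 cores on 8-core nodes.
print(aggregate_tasks([4, 6, 2, 3, 2, 1], node_capacity=8))
```

In this example the six tasks fit on three nodes, which is the minimum for 18 cores of demand. If the job had originally been given one node per task, the three remaining nodes could then be put into a low-power state.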

Links:

http://www.ics.forth.gr/carv

http://www.cs.vt.edu

http://people.cs.vt.edu/~dsn/papers/PACT08.pdf

http://people.cs.vt.edu/~dsn/papers/TPDS08.pdf

http://people.cs.vt.edu/~dsn/papers/ICS06.pdf

Please contact:

Dimitrios S. Nikolopoulos

University of Crete and FORTH-ICS

Tel: +30 2810 391657

E-mail:

http://www.ics.forth.gr/~dsn