by Christoph Sulzbachner, Jürgen Kogler, Wilfried Kubinger and Erwin Schoitsch

Conventional stereo vision systems and algorithms require very high processing power and are costly. For the many applications where the main goal is to detect moving objects (the source, the target, or both may be moving), a new kind of bio-inspired analogue sensor that provides only event-triggered information, the so-called silicon retina sensor, offers a very fast and cost-efficient alternative.

The challenge of stereo vision is the reconstruction of depth information from images of a scene captured from two different points of view. State-of-the-art stereo vision sensors use frame-based monochromatic or colour cameras. The corresponding stereo-matching algorithms search for pixel correspondences by correlating pixel patches. Generally, these algorithms contain the following steps: computation of matching cost, aggregation of cost, computation of disparity, and refinement of disparity. As a pre-processing step, the sensor needs to be calibrated and the images delivered by the cameras must be rectified; various methods and algorithms are available for this. The whole procedure is applied to complete images that are captured at a fixed frame rate. Because every frame passes through these processing-intensive algorithms at full sensor resolution, the throughput of such systems is quite low.
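To make the four steps concrete, the following is a minimal sketch of patch-based matching for a single pixel of a rectified image pair. It uses the sum of absolute differences (SAD) as the cost, which is one common choice and not necessarily the one used in any particular system; the function names and the nested-list image format are assumptions made for illustration, and the refinement step is omitted.

```python
def sad_cost(left, right, x, y, d, radius):
    """Matching cost and aggregation: sum of absolute differences
    between a square patch in the left image and the patch shifted
    by disparity d in the right image."""
    cost = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            cost += abs(left[y + dy][x + dx] - right[y + dy][x + dx - d])
    return cost


def disparity_at(left, right, x, y, max_disparity, radius=1):
    """Disparity computation: winner-takes-all over candidate disparities.

    The caller must keep the patch inside the image (radius <= x, y and
    x + radius, y + radius below the image size). Candidates are limited
    so that the shifted patch never leaves the right image on the left side.
    """
    candidates = range(0, min(max_disparity, x - radius) + 1)
    return min(candidates, key=lambda d: sad_cost(left, right, x, y, d, radius))
```

Repeating this search for every pixel of every frame is what makes the frame-based approach so processing-intensive: the cost grows with image size, patch size and disparity range, regardless of how much of the scene has actually changed.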

The silicon retina (SR) stereo sensor uses a new sensor technology and a new algorithmic approach to processing 3D stereo information. The SR sensor technology is based on bio-inspired analogue circuits. These sensors exploit a very efficient, asynchronous, event-driven data protocol that delivers information only on variations of intensity (‘event-triggered’), meaning that data redundancy and processing requirements are reduced considerably. Unaltered parts of a scene that have no intensity variation need neither be transmitted nor processed, so the amount of data depends on the motion in the scene. The second generation of the sensor has a resolution of 302x240 pixels, and measurements indicate about 2 million data events per second for a typical scene. Depending on the protocol used, one data event requires a minimum of 32 bits.
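The two figures quoted above give a rough lower bound on the raw data rate of one imager. The short calculation below is only a back-of-the-envelope sketch; the function and constant names are chosen for illustration, and the real rate varies with scene motion and with the protocol used.

```python
# Back-of-the-envelope data rate for one event stream (figures from the text).
EVENTS_PER_SECOND = 2_000_000  # about 2 million events per second, typical scene
BITS_PER_EVENT = 32            # minimum event size, depending on the protocol


def event_stream_rate_mbit(events_per_second: int, bits_per_event: int) -> float:
    """Return the raw event-stream data rate in Mbit/s."""
    return events_per_second * bits_per_event / 1e6


rate_mbit = event_stream_rate_mbit(EVENTS_PER_SECOND, BITS_PER_EVENT)
rate_mbyte = rate_mbit / 8
print(f"{rate_mbit:.0f} Mbit/s ({rate_mbyte:.0f} MB/s)")  # 64 Mbit/s (8 MB/s)
```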

The requirements of the Silicon Retina Stereo Sensor (SRSS) are:

- Detection range: objects must be detected with high confidence well before activation of countermeasures. Due to the system reaction time of 350 ms, a detection range of 6 metres at an angular resolution of 4.2 pixel/° is required.

- Field of view: for the given sensor resolution of 128x128 pixels and a baseline of 0.45 m, lenses with a field of view of 30° are chosen. The large baseline is necessary to reach the required depth resolution of three consecutive detections during one metre of movement at a distance of six metres.

- System reaction time: because at least three consecutive detections are required for reliability, the sensor needs a time resolution of 5 ms.

- Location: depends on application conditions; for example, if applied to moving vehicles in automotive applications, the sensor must be mounted on the side of the car and must be protected against typical outdoor conditions.
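The field-of-view and baseline figures in the list above can be related to each other with the standard pinhole stereo relation, disparity = focal length × baseline / depth. The sketch below assumes an ideal, distortion-free pinhole camera and rectified imagers, which is a simplification; the function names are illustrative and the calculation is a plausibility check, not the authors' design procedure.

```python
import math


def focal_length_px(width_px: int, fov_deg: float) -> float:
    """Focal length in pixels of an ideal pinhole camera."""
    return (width_px / 2) / math.tan(math.radians(fov_deg) / 2)


def disparity_px(depth_m: float, baseline_m: float, focal_px: float) -> float:
    """Disparity of a point at the given depth for a rectified stereo pair."""
    return focal_px * baseline_m / depth_m


WIDTH_PX = 128     # horizontal sensor resolution from the requirements
FOV_DEG = 30.0     # lens field of view from the requirements
BASELINE_M = 0.45  # stereo baseline from the requirements

f_px = focal_length_px(WIDTH_PX, FOV_DEG)
d_far = disparity_px(6.0, BASELINE_M, f_px)   # object at the detection range
d_near = disparity_px(5.0, BASELINE_M, f_px)  # after one metre of approach

print(f"angular resolution: {WIDTH_PX / FOV_DEG:.2f} pixel/deg")
print(f"disparity at 6 m: {d_far:.1f} px, at 5 m: {d_near:.1f} px")
print(f"disparity change over one metre: {d_near - d_far:.1f} px")
```

Under these assumptions the disparity changes by roughly 3.6 pixels while an object approaches from six to five metres, which is in line with the stated goal of three distinguishable detections over that metre. A shorter baseline would shrink this change proportionally.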

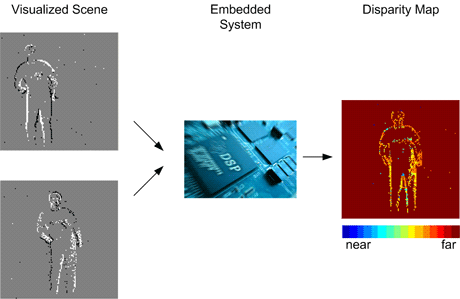

Figure 1 shows the components of the SRSS, including the captured scene and the resulting disparity map. The SRSS consists of two silicon retina imagers that are synchronized so that they share a common time base for the intensity variations they report. The pair of imagers is called a silicon retina stereo imager (SRSI). One imager acts as a slave device, permitting a synchronization mechanism with the master imager. Because the imagers deliver events rather than frames, the scene in Figure 1 is visualized by accumulating the events over a specified time. Both imagers are connected to the embedded system, which consists of two multi-core fixed-point digital signal processors (DSPs) with three cores each. One DSP is responsible for data acquisition, pre-processing of the asynchronous data, and miscellaneous tasks of the embedded framework. The other performs the main part of the event-based stereo vision algorithm in parallel on three cores.

Figure 1: Overview of the SR stereo sensor, including a visualization of the scene and the resulting disparity map.
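The scene visualization mentioned above, obtained by accumulating events for a specified time, can be sketched as follows. The event layout (timestamp, pixel coordinates and a signed polarity) and all names are assumptions made for illustration; the article does not specify the actual event format.

```python
from typing import Iterable, List, NamedTuple


class PixelEvent(NamedTuple):
    """Hypothetical event layout, not the sensor's actual protocol."""
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 for an intensity increase, -1 for a decrease


def accumulate(events: Iterable[PixelEvent], t_start_us: int, window_us: int,
               width: int, height: int) -> List[List[int]]:
    """Sum event polarities per pixel over the window [t_start, t_start + window)."""
    frame = [[0] * width for _ in range(height)]
    t_end_us = t_start_us + window_us
    for ev in events:
        if t_start_us <= ev.t_us < t_end_us:
            frame[ev.y][ev.x] += ev.polarity
    return frame
```

Pixels that saw no intensity change stay at zero, so a static background does not appear in the accumulated image at all. The window length is a visualization parameter only; the stereo algorithm itself works on the event streams.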

A scene captured by the SRSI delivers two streams of variations of intensity as digital output. These asynchronous data streams are acquired and processed by the embedded system, resulting in a disparity map of the captured scene based on the left imager. Conventional block-based and feature-based stereo algorithms have been shown to reduce the advantage of the asynchronous data interface and throttle the performance. Our novel algorithmic approach is based on the spatial locality and temporal correlation of the asynchronous data event streams of both imagers. First, the sensor is calibrated to determine the distortion coefficients and the camera parameters. Then, the events received by the embedded system are undistorted and rectified to obtain matchable events lying on parallel and horizontal epipolar lines. The events from the left imager are correlated to those from the right imager and vice versa. This allows the disparity information of the scene to be calculated. Early prototypes showed that by using this algorithm the advantage of the SR technology could be fully exploited at very high processing frame rates.
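The idea of correlating events by locality and time can be illustrated with a deliberately simple sketch. It is not the algorithm running on the DSPs: it assumes events that are already undistorted and rectified, matches only from left to right, and picks the right-imager event on the same row that is closest in time. The event layout, the function name and both thresholds are hypothetical.

```python
from typing import Dict, Iterable, List, NamedTuple, Optional, Tuple


class RectifiedEvent(NamedTuple):
    """Hypothetical rectified event: timestamp plus pixel position."""
    t_us: int
    x: int
    y: int


def match_events(left: Iterable[RectifiedEvent], right: Iterable[RectifiedEvent],
                 max_dt_us: int, max_disparity: int) -> List[Tuple[int, int, int]]:
    """Return (x, y, disparity) for each left event that finds a partner."""
    # Index right events by row: after rectification, partners share a row.
    rows: Dict[int, List[RectifiedEvent]] = {}
    for rev in right:
        rows.setdefault(rev.y, []).append(rev)

    matches = []
    for lev in left:
        best_d: Optional[int] = None
        best_dt: Optional[int] = None
        for rev in rows.get(lev.y, []):
            d = lev.x - rev.x              # disparity must be non-negative
            dt = abs(lev.t_us - rev.t_us)  # temporal distance between events
            if 0 <= d <= max_disparity and dt <= max_dt_us:
                if best_dt is None or dt < best_dt:
                    best_d, best_dt = d, dt
        if best_d is not None:
            matches.append((lev.x, lev.y, best_d))
    return matches
```

The work done here scales with the number of events rather than with the number of pixels, which is the property that lets an event-based approach benefit from the sparse sensor output. Matching in the opposite direction, from right to left, would follow the same pattern with the sign of the disparity reversed.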

This technology is applied in the EC-funded (grant 216049) FP7 project ‘Reliable Application-Specific Detection of Road Users with Vehicle On-Board Sensors (ADOSE)’. The project focuses on the development and evaluation of new cost-efficient sensor technology for automotive safety solutions. For an overview of the ADOSE project, please see ERCIM News No. 78.

Links:

http://www.ait.ac.at

http://www.adose-eu.org

Please contact:

Christoph Sulzbachner, Jürgen Kogler, Wilfried Kubinger, Erwin Schoitsch

AIT Austrian Institute of Technology GmbH /AARIT, Austria

E-mail: {christoph.sulzbachner, juergen.kogler, wilfried.kubinger, erwin.schoitsch}@ait.ac.at