by Athanasios Mouchtaris and Panagiotis Tsakalides

Art, entertainment and education have always served as unique and demanding laboratories for information science and ubiquitous computing research. The ASPIRE project explores the fundamental challenges of deploying sensor networks for immersive multimedia, concentrating on multichannel audio capture, representation and transmission. The techniques developed in this project will help augment human auditory experience, interaction and perception, and will ultimately enhance the creative flexibility of audio artists and engineers by providing additional information for post-production and processing.

Realizing the potential of large, distributed wireless sensor networks (WSN) requires major advances in the theory, fundamental understanding and practice of distributed signal processing, self-organized communications, and information fusion in highly uncertain environments using sensing nodes that are severely constrained in power, computation and communication capabilities. The European project ASPIRE (Collaborative Signal Processing for Efficient Wireless Sensor Networks) aims to further basic WSN theory and understanding by addressing problems including adaptive collaborative processing in non-stationary scenarios; distributed parameter estimation and object classification; and representation and transmission of multichannel information. This highly diverse field combines disciplines such as signal processing, wireless communications, networking, information theory and data acquisition.

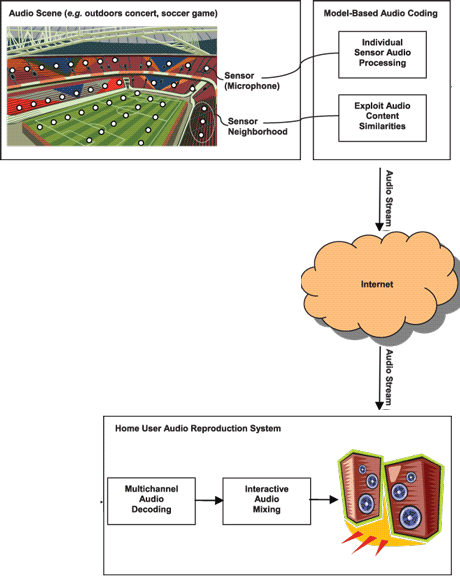

In addition to basic theoretical research, ASPIRE tests the developed theories and heuristics in the application domain of immersive multimedia environments. The project aims to demonstrate that sensor networks can be an effective catalyst for creative expression. We focus on multichannel sound capture via wireless sensors (microphones) for immersive audio rendering. Immersive audio, as opposed to multichannel audio, is based on providing the listener with the option of interacting with the sound environment. This translates to listeners having access to a large number of recordings that they can process and mix themselves, possibly with the help of the reproduction system using some pre-defined mixing parameters.

These objectives cannot be fulfilled by current multichannel audio coding approaches. Furthermore, in large venues, which may be outdoors or even underwater, and for recording times of days or even months, the traditional practice of deploying an expensive high-quality recording system that cannot operate autonomously becomes impractical. We would like to enable 'immersive presence' for the user at any event where sound is of interest. This includes concert-hall performances; outdoor concerts performed in large stadiums; wildlife preserves and refuges, for studying the everyday activities of wild animals; and underwater regions, for recording the sounds of marine mammals. The ultimate application goals of this project are the capture, processing, coding and transmission of audio content through multiple sensors, and the reconstruction of the captured audio signals so that immersive presence can be provided in real time to any listener.

So far, we have introduced mathematical models specifically directed towards the distributed signal acquisition and representation problem, and we have developed efficient multichannel data compression and distributed classification techniques. In the multisensor, immersive audio application, we tested and validated novel algorithms that compress audio content and that can run on resource-constrained platforms such as sensor networks. The methodology is novel in that it combines the theory of sensor networks with audio coding in a practical manner. In this research direction, a multichannel version of the sinusoids plus noise model was proposed and applied to the multichannel signals obtained by a network of multiple microphones placed in a venue, before the mixing process produces the final multichannel mix. Coding these signals makes them available to the decoder, allowing for interactive audio reproduction, which is a necessary component in immersive applications.
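As a rough illustration of the sinusoids plus noise idea for a single channel, the following Python sketch splits one audio frame into its strongest spectral components and a residual. It is a deliberately crude stand-in, not the multichannel model developed in ASPIRE: the function name `sines_plus_noise`, the frame length and the synthetic test tones are all assumptions made for illustration, and a real coder would track peaks across frames and code a spectral envelope of the residual.

```python
import numpy as np

def sines_plus_noise(frame, num_sines):
    """Split one frame into a sinusoidal part and a noise residual (crude sketch)."""
    spectrum = np.fft.rfft(frame)
    # Treat the num_sines strongest frequency bins as the sinusoidal part.
    keep = np.argsort(np.abs(spectrum))[-num_sines:]
    sin_spectrum = np.zeros_like(spectrum)
    sin_spectrum[keep] = spectrum[keep]
    sinusoidal = np.fft.irfft(sin_spectrum, n=len(frame))
    # Whatever the sinusoids do not explain is modelled as noise.
    residual = frame - sinusoidal
    return sinusoidal, residual

# Hypothetical test frame: two tones on exact FFT bins plus weak noise.
rng = np.random.default_rng(0)
n = 1024
t = np.arange(n)
clean = np.sin(2 * np.pi * 50 * t / n) + 0.5 * np.sin(2 * np.pi * 120 * t / n)
frame = clean + 0.01 * rng.standard_normal(n)

sinusoidal, residual = sines_plus_noise(frame, num_sines=2)
residual_ratio = np.sum(residual ** 2) / np.sum(frame ** 2)
```

In this toy setting the two retained bins carry almost all of the frame energy, so only a handful of parameters plus a coarse description of the residual would need to be stored per frame.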

The proposed model uses a single reference audio signal in order to derive an error signal per spot microphone. The reference can be one of the spot signals or a downmix, depending on the application. Thus, for a collection of multiple spot signals, only the reference is fully encoded; for the remaining spot signals, only the sinusoidal parameters and corresponding sinusoidal noise spectral envelopes are retained and coded, resulting in bitrates for the side information on the order of 10 kbps for high-quality audio reconstruction. In addition, the proposed approach moves the complexity from the transmitter to the receiver, and takes advantage of the plurality of sensors in a sensor network to encode high-quality audio at a low bitrate. This method is based on sparse signal representations and compressive sensing theory, which allows signals to be sampled significantly below the Nyquist rate.
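The compressive sensing principle mentioned above, and the shift of complexity to the receiver, can be sketched with a generic textbook example. The Python code below takes a small number of random linear measurements of a sparse vector (a cheap operation at the sensor) and recovers it with orthogonal matching pursuit (the costlier step at the receiver). This is not the ASPIRE codec: the choice of orthogonal matching pursuit, the Gaussian measurement matrix, the function name `omp` and the sizes `n`, `m` and `k` are assumptions for illustration only.

```python
import numpy as np

def omp(A, y, sparsity):
    """Orthogonal matching pursuit: find a sparse x such that A @ x approximates y."""
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(sparsity):
        # Pick the column most correlated with the current residual.
        idx = int(np.argmax(np.abs(A.T @ residual)))
        support.append(idx)
        # Least-squares fit of y on all columns selected so far.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x = np.zeros(A.shape[1])
    x[support] = coef
    return x

# Hypothetical sizes: a length-256 vector with 4 non-zeros, 80 measurements.
rng = np.random.default_rng(1)
n, m, k = 256, 80, 4
x_true = np.zeros(n)
nonzero = rng.choice(n, size=k, replace=False)
x_true[nonzero] = rng.uniform(1.0, 2.0, size=k) * rng.choice([-1.0, 1.0], size=k)

A = rng.standard_normal((m, n)) / np.sqrt(m)  # random measurement matrix
y = A @ x_true                                # sensor side: m < n measurements
x_hat = omp(A, y, sparsity=k)                 # receiver side: sparse recovery
```

The sensor only computes `A @ x_true`, while the iterative search runs at the receiver, which mirrors the transmitter-to-receiver shift in complexity described in the text.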

In the future, we hope to implement exciting new ideas: for example, immersive presence of a user in a concert hall performance in real time, implying interaction with the environment (e.g. being able to move around in the hall and appreciate the hall acoustics); virtual music performances, where the musicians are located all around the world; collaborative environments for the production of music; and so forth. A central direction in our future plans is to integrate ASPIRE's current and future technology with the Ambient Intelligence (AmI) Programme recently initiated at FORTH-ICS. We expect that the home and work environments of the future will be significantly enhanced by immersive presence in entertainment, education and collaboration activities.

ASPIRE is a Euro 1.2M Marie Curie Transfer of Knowledge (ToK) grant funded by the EU for the period September 2006 to August 2010. The University of Valencia, Spain and the University of Southern California, USA are valuable research partners in this effort.

Link:

http://www.ics.forth.gr/~tsakalid

Please contact:

Panagiotis Tsakalides

Athanasios Mouchtaris

FORTH-ICS, Greece

Tel: +30 2810 391 730

E-mail: {tsakalid, mouchtar}@ics.forth.gr
