by Nikolaos Laoutaris and Pablo Rodriguez

From its conception, the Internet has been a communication network, meaning that its development has been driven by the assumption that connections and data transfers are sensitive to delay. Spatial optimization, in the form of routing, has therefore been the main tool for improving the services offered by the network. Temporal optimization, in the form of scheduling, has been limited to timescales of milliseconds to seconds, matching the requirements of interactive, delay-sensitive traffic. In recent years, however, the network has been progressively shifting from communication to content dissemination. Unlike communication, content dissemination can often tolerate much larger delays, e.g., on the order of hours. This higher tolerance to delay allows scheduling to go beyond congestion avoidance. Here, we briefly illustrate how store-and-forward scheduling can be used to perform bulk data transfers that would otherwise be impossible or, under current bandwidth pricing schemes, prohibitively expensive.

Bulk Data

Residential and corporate bulk data have fuelled an unprecedented increase in overall Internet traffic over the last few years. On the end-user side, bulk data include high-definition movies from commercial Web sites or peer-to-peer (P2P) networks, large-scale software updates and remote backups. In addition, data centres hosting cloud computing applications exchange large amounts of synchronization, accounting and data-mining traffic, while large corporate and government organizations contribute growing numbers of economic, engineering and scientific datasets. Internet service providers (ISPs) and manufacturers of networking equipment are finding it increasingly difficult to keep up with this traffic without substantial new investment, which is hard to come by in the competitive ISP market. The once euphoric belief in infinite network capacity, triggered largely by the fibre glut of the 1990s, has thus quickly given way to headline news about ISPs discriminating against P2P traffic.

Bulk Bottlenecks under Flat-Rate Pricing

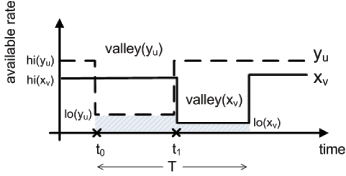

Several ISPs throttle P2P traffic from flat-rate residential customers during peak hours in order to free capacity for interactive traffic, which most users value more highly. Popular P2P applications like BitTorrent tend to be targeted, but in the future any other bulk application that becomes popular among flat-rate residential customers may be targeted as well. Bottlenecks introduced by cascades of such traffic-shaping devices can severely impact flows that traverse multiple ISPs with different throttling times. Figure 1 gives an example of a difficult case of combined throttling, using hypothetical uplink and downlink rates of a sender and a receiver at different access ISPs. By chaining such throttling bottlenecks, the combined throttling of the receiver (valley(u)) and the sender (valley(v)) results in a small transferred volume, indicated by the shaded area lying beneath both rate curves. Notice that, had it not been for the combined effect, each throttling behaviour alone would have allowed much higher volumes (the individual areas under the solid and dashed lines). In the extreme case of sender-receiver pairs with long, non-overlapping valleys, the transfer could be throttled throughout the day. Such situations can occur with end points in distant time zones, or within the same time zone but on ISPs of different types, e.g., a residential access ISP peaking in the evening and a corporate access ISP peaking at noon.
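To make the effect of combined throttling concrete, the following Python sketch computes daily volumes under a deliberately simple model: each end point has a high rate during an unthrottled window and a low rate otherwise, and a direct connection can move at most the smaller of the sender's uplink rate and the receiver's downlink rate in each hour. The function names, the hourly granularity and all rate values are hypothetical and chosen only for illustration; they are not the data behind Figure 1.

```python
def rate_profile(open_start: int, open_hours: int, high: float, low: float) -> list[float]:
    """Hypothetical hourly rates: `high` during an unthrottled window of `open_hours`
    hours starting at `open_start` (wrapping past midnight), `low` otherwise."""
    open_set = {(open_start + i) % 24 for i in range(open_hours)}
    return [high if h in open_set else low for h in range(24)]


def daily_volume(rates: list[float]) -> float:
    """Volume one end point could move on its own: the area under its rate curve."""
    return sum(rates)


def end_to_end_volume(sender_up: list[float], receiver_down: list[float]) -> float:
    """Direct transfer: in each hour the flow is capped by the smaller of the two rates."""
    return sum(min(u, d) for u, d in zip(sender_up, receiver_down))


# Illustrative values in arbitrary volume units per hour.
sender = rate_profile(open_start=0, open_hours=8, high=10.0, low=1.0)     # unthrottled 00:00-08:00
receiver = rate_profile(open_start=12, open_hours=8, high=10.0, low=1.0)  # unthrottled 12:00-20:00
print(daily_volume(sender), daily_volume(receiver), end_to_end_volume(sender, receiver))
```

With two eight-hour unthrottled windows that do not overlap, each end point alone could move 96 units per day, but the direct connection moves only 24, because in every hour at least one side is throttled.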

Bulk Bottlenecks under Percentile Pricing

Similar problems can arise under the 95th-percentile pricing often applied to corporate customers or hosting services, which pay based on (nearly) peak usage. Such pricing is justified by the fact that the cost of networking equipment depends on the maximum load it has to carry with a certain quality of service (QoS). Since customers pay according to peak traffic, and loads typically exhibit strong diurnal patterns, much already-paid-for off-peak capacity remains that can be used to send additional bulk data at no extra cost. As before, however, time-zone differences can present a barrier. For example, it might be impossible to use the capacity available during the early-morning load valley to send bulk data to a receiver in a distant time zone that is currently going through its evening peak hours. The end result could be additional transit costs, as the bulk flow cannot avoid increasing the peak load.
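The sketch below illustrates why off-peak capacity is effectively already paid for under percentile pricing. It assumes a nearest-rank 95th percentile over 5-minute samples and a made-up diurnal load profile; real providers differ in sampling interval, billing period and exact percentile convention, so the function names and numbers here are illustrative only.

```python
def percentile95(samples: list[int]) -> int:
    """Nearest-rank 95th percentile: sort ascending and take the value at rank ceil(0.95 * n)."""
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer form of ceil(0.95 * n), avoiding float rounding
    return ordered[rank - 1]


def diurnal_day() -> list[int]:
    """One hypothetical day of 5-minute load samples (288 per day), in arbitrary rate units."""
    samples = []
    for hour in range(24):
        if hour < 6:
            level = 20   # early-morning valley
        elif 18 <= hour < 22:
            level = 100  # evening peak
        else:
            level = 60   # daytime
        samples.extend([level] * 12)  # 12 five-minute samples per hour
    return samples


base = diurnal_day()
# The same bulk volume (72 * 60 == 48 * 90 == 4320 units) placed in the valley or at the peak.
valley_bulk = [s + 60 if s == 20 else s for s in base]
peak_bulk = [s + 90 if s == 100 else s for s in base]
print(percentile95(base), percentile95(valley_bulk), percentile95(peak_bulk))
```

In this toy profile, adding 4,320 units of bulk data in the early-morning valley leaves the billed value at 100, whereas pushing the same volume during the evening peak raises it to 190. The catch described above is that the valley of the sender may coincide with the peak of a distant receiver.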

Store 'n' Forward to the Rescue

As such bottlenecks (due either to pricing or to throttling) become more prevalent, we argue that restoring the performance of bulk transfers and minimizing transmission costs will require a new 'Store-and-Forward' (SnF) service based on 'temporal redirection' techniques. Existing 'spatial redirection' techniques, such as native and overlay routing, select paths over short timescales to avoid bottlenecks in the Internet's core, but they have no way of escaping the complex constraints that accumulate later in a transfer's lifetime when bottlenecks in different time zones combine.

To solve the problem, we propose breaking end-to-end flows into smaller segments and performing SnF scheduling through intermediate storage nodes, in order to make the best use of the capacity available between two end points for long-lived bulk transfers. Storage nodes decouple the end-point constraints: when the sender is not throttled (or not charged), data is uploaded quickly (or cheaply) to a storage node, where it accumulates until the receiver can also download it quickly (or cheaply). In previous work, we used data from a large transit provider to show that SnF can deliver terabyte-sized daily bulk transfers at low cost, or even for free, whereas direct end-to-end transfers, and even parcel delivery services, would incur a much higher cost. We have also argued for the benefits of SnF scheduling for residential broadband users. This work provides some initial direction toward what we believe to be a promising new field of research on delay-tolerant networks.
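A minimal way to see how a storage node decouples the end points is to simulate it hour by hour. The Python sketch below assumes a single storage node with unlimited storage whose own links are never the bottleneck, uses hypothetical rate profiles in which each end point has an eight-hour unthrottled window and the two windows do not overlap, and lets the receiver fetch only data that was already stored before the current hour. This greedy simulation is an illustrative stand-in, not the scheduling method of the previous work mentioned above.

```python
def rate_profile(open_start: int, open_hours: int, high: float, low: float) -> list[float]:
    """Hypothetical hourly rates: `high` during an unthrottled window of `open_hours`
    hours starting at `open_start` (wrapping past midnight), `low` otherwise."""
    open_set = {(open_start + i) % 24 for i in range(open_hours)}
    return [high if h in open_set else low for h in range(24)]


def end_to_end_volume(sender_up: list[float], receiver_down: list[float]) -> float:
    """Direct transfer: each hour moves at most the smaller of the two access rates."""
    return sum(min(u, d) for u, d in zip(sender_up, receiver_down))


def snf_volume(sender_up: list[float], receiver_down: list[float]) -> float:
    """Store-and-forward through one storage node (assumed unlimited storage and
    unconstrained node links). Each hour the receiver first drains data already
    stored, then the sender uploads at its full rate for that hour."""
    stored = 0.0
    delivered = 0.0
    for up, down in zip(sender_up, receiver_down):
        out = min(stored, down)  # only previously stored data can be fetched
        stored -= out
        delivered += out
        stored += up             # sender uploads regardless of the receiver's state
    return delivered


# Illustrative values in arbitrary volume units per hour.
sender = rate_profile(open_start=0, open_hours=8, high=10.0, low=1.0)     # unthrottled 00:00-08:00
receiver = rate_profile(open_start=12, open_hours=8, high=10.0, low=1.0)  # unthrottled 12:00-20:00
print(end_to_end_volume(sender, receiver), snf_volume(sender, receiver))
```

Over the first day, starting from an empty store, the storage node delivers 95 units (one unit is still in storage at midnight), against 24 units for the direct connection. Data uploaded during the sender's unthrottled morning simply waits on the node until the receiver's unthrottled afternoon.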

Link:

A full PDF version of this article including references:

http://research.tid.es/nikos/images/bulk_overview.pdf

Please contact:

Nikolaos Laoutaris, Pablo Rodriguez

Telefonica Research, Barcelona, Spain

E-mail: {nikos,pablorr}@tid.es
